Temporal Antialiasing (TAA) (#7291)

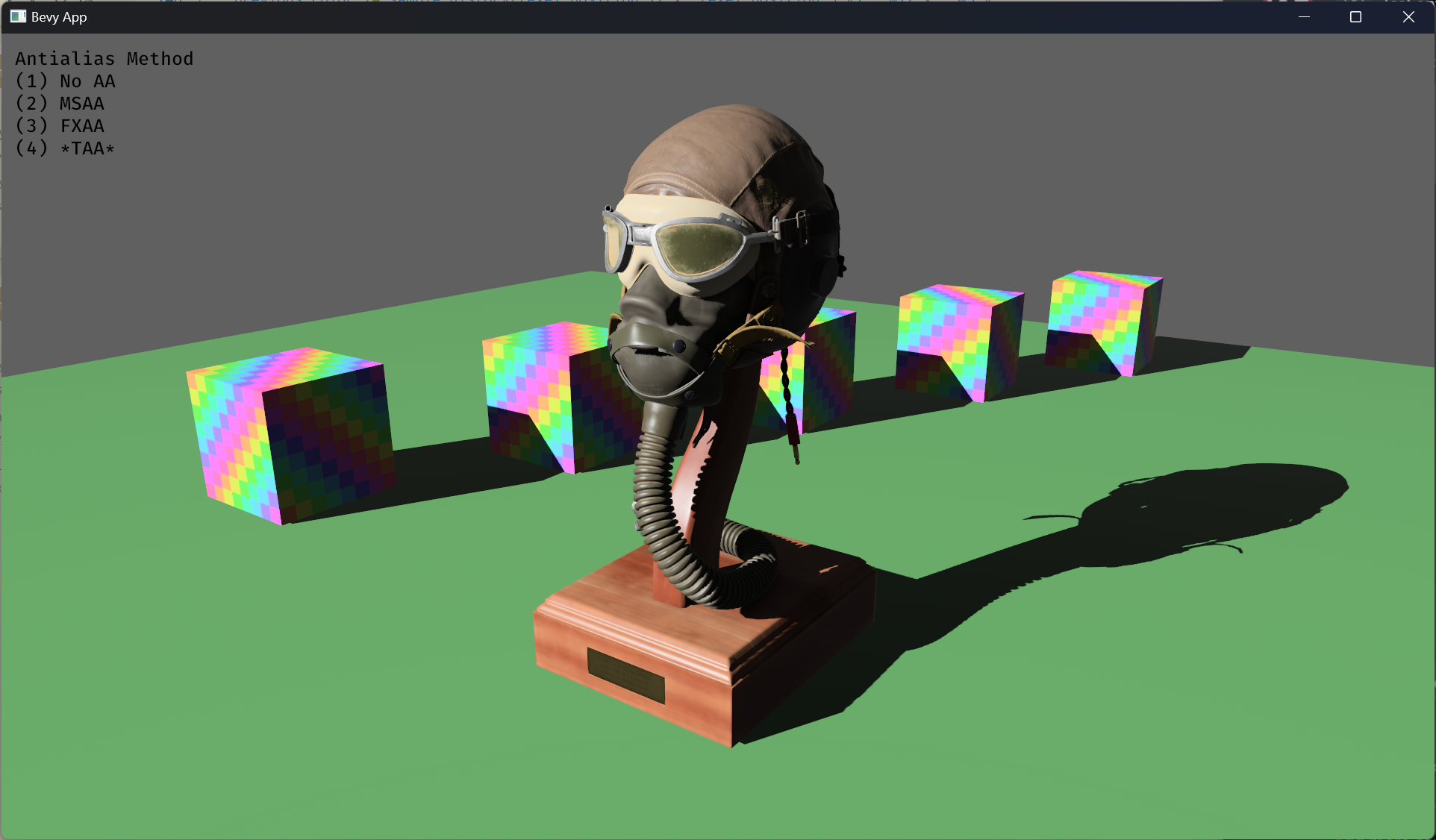

# Objective

- Implement an alternative antialias technique
- TAA scales based off of view resolution, not geometry complexity
- TAA filters textures, firefly pixels, and other aliasing not covered by MSAA
- TAA additionally will reduce noise / increase quality in future stochastic rendering techniques
- Closes https://github.com/bevyengine/bevy/issues/3663

## Solution

- Add a temporal jitter component
- Add a motion vector prepass
- Add a TemporalAntiAliasSettings component and a TemporalAntiAliasPlugin
- Combine existing MSAA and FXAA examples and add TAA

## Followup Work

- Prepass motion vector support for skinned meshes
- Move uniforms needed for motion vectors into a separate bind group, instead of using different bind group layouts
- Reuse previous frame's GPU view buffer for motion vectors, instead of recomputing
- Mip biasing for sharper textures, and/or unjitter texture UVs https://github.com/bevyengine/bevy/issues/7323
- Compute shader for better performance
- Investigate FSR techniques
- Historical depth based disocclusion tests, for geometry disocclusion
- Historical luminance/hue based tests, for shading disocclusion
- Pixel "locks" to reduce blending rate / revamp history confidence mechanism
- Orthographic camera support for TemporalJitter
- Figure out COD's 1-tap bicubic filter

---

## Changelog

- Added MotionVectorPrepass and TemporalJitter
- Added TemporalAntiAliasPlugin, TemporalAntiAliasBundle, and TemporalAntiAliasSettings

---------

Co-authored-by: IceSentry <c.giguere42@gmail.com>
Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>
Co-authored-by: Robert Swain <robert.swain@gmail.com>
Co-authored-by: Daniel Chia <danstryder@gmail.com>
Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>
Co-authored-by: Brandon Dyer <brandondyer64@gmail.com>
Co-authored-by: Edgar Geier <geieredgar@gmail.com>

Parent: 3d8c7681a7

Commit: 53667dea56

30

Cargo.toml


@ -414,6 +414,16 @@ description = "A scene showcasing the built-in 3D shapes"

|

||||

category = "3D Rendering"

|

||||

wasm = true

|

||||

|

||||

[[example]]

|

||||

name = "anti_aliasing"

|

||||

path = "examples/3d/anti_aliasing.rs"

|

||||

|

||||

[package.metadata.example.anti_aliasing]

|

||||

name = "Anti-aliasing"

|

||||

description = "Compares different anti-aliasing methods"

|

||||

category = "3D Rendering"

|

||||

wasm = false

|

||||

|

||||

[[example]]

|

||||

name = "3d_gizmos"

|

||||

path = "examples/3d/3d_gizmos.rs"

|

||||

@ -515,26 +525,6 @@ description = "Compares tonemapping options"

|

||||

category = "3D Rendering"

|

||||

wasm = true

|

||||

|

||||

[[example]]

|

||||

name = "fxaa"

|

||||

path = "examples/3d/fxaa.rs"

|

||||

|

||||

[package.metadata.example.fxaa]

|

||||

name = "FXAA"

|

||||

description = "Compares MSAA (Multi-Sample Anti-Aliasing) and FXAA (Fast Approximate Anti-Aliasing)"

|

||||

category = "3D Rendering"

|

||||

wasm = true

|

||||

|

||||

[[example]]

|

||||

name = "msaa"

|

||||

path = "examples/3d/msaa.rs"

|

||||

|

||||

[package.metadata.example.msaa]

|

||||

name = "MSAA"

|

||||

description = "Configures MSAA (Multi-Sample Anti-Aliasing) for smoother edges"

|

||||

category = "3D Rendering"

|

||||

wasm = true

|

||||

|

||||

[[example]]

|

||||

name = "orthographic"

|

||||

path = "examples/3d/orthographic.rs"

|

||||

|

||||

@ -5,6 +5,7 @@

|

||||

struct ShowPrepassSettings {

|

||||

show_depth: u32,

|

||||

show_normals: u32,

|

||||

show_motion_vectors: u32,

|

||||

padding_1: u32,

|

||||

padding_2: u32,

|

||||

}

|

||||

@ -23,6 +24,9 @@ fn fragment(

|

||||

} else if settings.show_normals == 1u {

|

||||

let normal = prepass_normal(frag_coord, sample_index);

|

||||

return vec4(normal, 1.0);

|

||||

} else if settings.show_motion_vectors == 1u {

|

||||

let motion_vector = prepass_motion_vector(frag_coord, sample_index);

|

||||

return vec4(motion_vector / globals.delta_time, 0.0, 1.0);

|

||||

}

|

||||

|

||||

return vec4(0.0);

|

||||

|

||||

@ -21,6 +21,7 @@ tonemapping_luts = []

|

||||

# bevy

|

||||

bevy_app = { path = "../bevy_app", version = "0.11.0-dev" }

|

||||

bevy_asset = { path = "../bevy_asset", version = "0.11.0-dev" }

|

||||

bevy_core = { path = "../bevy_core", version = "0.11.0-dev" }

|

||||

bevy_derive = { path = "../bevy_derive", version = "0.11.0-dev" }

|

||||

bevy_ecs = { path = "../bevy_ecs", version = "0.11.0-dev" }

|

||||

bevy_reflect = { path = "../bevy_reflect", version = "0.11.0-dev" }

|

||||

|

||||

@ -23,8 +23,6 @@ use bevy_render::{

|

||||

view::ViewTarget,

|

||||

Render, RenderApp, RenderSet,

|

||||

};

|

||||

#[cfg(feature = "trace")]

|

||||

use bevy_utils::tracing::info_span;

|

||||

use downsampling_pipeline::{

|

||||

prepare_downsampling_pipeline, BloomDownsamplingPipeline, BloomDownsamplingPipelineIds,

|

||||

BloomUniforms,

|

||||

@ -150,9 +148,6 @@ impl Node for BloomNode {

|

||||

render_context: &mut RenderContext,

|

||||

world: &World,

|

||||

) -> Result<(), NodeRunError> {

|

||||

#[cfg(feature = "trace")]

|

||||

let _bloom_span = info_span!("bloom").entered();

|

||||

|

||||

let downsampling_pipeline_res = world.resource::<BloomDownsamplingPipeline>();

|

||||

let pipeline_cache = world.resource::<PipelineCache>();

|

||||

let uniforms = world.resource::<ComponentUniforms<BloomUniforms>>();

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

use crate::{

|

||||

clear_color::{ClearColor, ClearColorConfig},

|

||||

core_3d::{AlphaMask3d, Camera3d, Opaque3d, Transparent3d},

|

||||

prepass::{DepthPrepass, NormalPrepass},

|

||||

prepass::{DepthPrepass, MotionVectorPrepass, NormalPrepass},

|

||||

};

|

||||

use bevy_ecs::prelude::*;

|

||||

use bevy_render::{

|

||||

@ -29,6 +29,7 @@ pub struct MainPass3dNode {

|

||||

&'static ViewDepthTexture,

|

||||

Option<&'static DepthPrepass>,

|

||||

Option<&'static NormalPrepass>,

|

||||

Option<&'static MotionVectorPrepass>,

|

||||

),

|

||||

With<ExtractedView>,

|

||||

>,

|

||||

@ -64,6 +65,7 @@ impl Node for MainPass3dNode {

|

||||

depth,

|

||||

depth_prepass,

|

||||

normal_prepass,

|

||||

motion_vector_prepass,

|

||||

)) = self.query.get_manual(world, view_entity) else {

|

||||

// No window

|

||||

return Ok(());

|

||||

@ -94,7 +96,10 @@ impl Node for MainPass3dNode {

|

||||

view: &depth.view,

|

||||

// NOTE: The opaque main pass loads the depth buffer and possibly overwrites it

|

||||

depth_ops: Some(Operations {

|

||||

load: if depth_prepass.is_some() || normal_prepass.is_some() {

|

||||

load: if depth_prepass.is_some()

|

||||

|| normal_prepass.is_some()

|

||||

|| motion_vector_prepass.is_some()

|

||||

{

|

||||

// if any prepass runs, it will generate a depth buffer so we should use it,

|

||||

// even if only the normal_prepass is used.

|

||||

Camera3dDepthLoadOp::Load

|

||||

|

||||

@ -7,9 +7,17 @@ pub mod fullscreen_vertex_shader;

|

||||

pub mod fxaa;

|

||||

pub mod msaa_writeback;

|

||||

pub mod prepass;

|

||||

mod taa;

|

||||

pub mod tonemapping;

|

||||

pub mod upscaling;

|

||||

|

||||

/// Experimental features that are not yet finished. Please report any issues you encounter!

|

||||

pub mod experimental {

|

||||

pub mod taa {

|

||||

pub use crate::taa::*;

|

||||

}

|

||||

}

|

||||

|

||||

pub mod prelude {

|

||||

#[doc(hidden)]

|

||||

pub use crate::{

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

//! Run a prepass before the main pass to generate depth and/or normals texture, sometimes called a thin g-buffer.

|

||||

//! Run a prepass before the main pass to generate depth, normals, and/or motion vectors textures, sometimes called a thin g-buffer.

|

||||

//! These textures are useful for various screen-space effects and reducing overdraw in the main pass.

|

||||

//!

|

||||

//! The prepass only runs for opaque meshes or meshes with an alpha mask. Transparent meshes are ignored.

|

||||

@ -7,6 +7,7 @@

|

||||

//!

|

||||

//! [`DepthPrepass`]

|

||||

//! [`NormalPrepass`]

|

||||

//! [`MotionVectorPrepass`]

|

||||

//!

|

||||

//! The textures are automatically added to the default mesh view bindings. You can also get the raw textures

|

||||

//! by querying the [`ViewPrepassTextures`] component on any camera with a prepass component.

|

||||

@ -15,9 +16,9 @@

|

||||

//! to a separate texture unless the [`DepthPrepass`] is activated. This means that if any prepass component is present

|

||||

//! it will always create a depth buffer that will be used by the main pass.

|

||||

//!

|

||||

//! When using the default mesh view bindings you should be able to use `prepass_depth()`

|

||||

//! and `prepass_normal()` to load the related textures. These functions are defined in `bevy_pbr::prepass_utils`.

|

||||

//! See the `shader_prepass` example that shows how to use it.

|

||||

//! When using the default mesh view bindings you should be able to use `prepass_depth()`,

|

||||

//! `prepass_normal()`, and `prepass_motion_vector()` to load the related textures.

|

||||

//! These functions are defined in `bevy_pbr::prepass_utils`. See the `shader_prepass` example that shows how to use them.

|

||||

//!

|

||||

//! The prepass runs for each `Material`. You can control if the prepass should run per-material by setting the `prepass_enabled`

|

||||

//! flag on the `MaterialPlugin`.

|

||||

@ -39,6 +40,7 @@ use bevy_utils::FloatOrd;

|

||||

|

||||

pub const DEPTH_PREPASS_FORMAT: TextureFormat = TextureFormat::Depth32Float;

|

||||

pub const NORMAL_PREPASS_FORMAT: TextureFormat = TextureFormat::Rgb10a2Unorm;

|

||||

pub const MOTION_VECTOR_PREPASS_FORMAT: TextureFormat = TextureFormat::Rg16Float;

|

||||

|

||||

/// If added to a [`crate::prelude::Camera3d`] then depth values will be copied to a separate texture available to the main pass.

|

||||

#[derive(Component, Default, Reflect)]

|

||||

@ -49,6 +51,10 @@ pub struct DepthPrepass;

|

||||

#[derive(Component, Default, Reflect)]

|

||||

pub struct NormalPrepass;

|

||||

|

||||

/// If added to a [`crate::prelude::Camera3d`] then screen space motion vectors will be copied to a separate texture available to the main pass.

|

||||

#[derive(Component, Default, Reflect)]

|

||||

pub struct MotionVectorPrepass;

|

||||

|

||||

/// Textures that are written to by the prepass.

|

||||

///

|

||||

/// This component will only be present if any of the relevant prepass components are also present.

|

||||

@ -60,6 +66,9 @@ pub struct ViewPrepassTextures {

|

||||

/// The normals texture generated by the prepass.

|

||||

/// Exists only if [`NormalPrepass`] is added to the `ViewTarget`

|

||||

pub normal: Option<CachedTexture>,

|

||||

/// The motion vectors texture generated by the prepass.

|

||||

/// Exists only if [`MotionVectorPrepass`] is added to the `ViewTarget`

|

||||

pub motion_vectors: Option<CachedTexture>,

|

||||

/// The size of the textures.

|

||||

pub size: Extent3d,

|

||||

}

|

||||

|

||||

@ -64,15 +64,34 @@ impl Node for PrepassNode {

|

||||

};

|

||||

|

||||

let mut color_attachments = vec![];

|

||||

if let Some(view_normals_texture) = &view_prepass_textures.normal {

|

||||

color_attachments.push(Some(RenderPassColorAttachment {

|

||||

view: &view_normals_texture.default_view,

|

||||

color_attachments.push(

|

||||

view_prepass_textures

|

||||

.normal

|

||||

.as_ref()

|

||||

.map(|view_normals_texture| RenderPassColorAttachment {

|

||||

view: &view_normals_texture.default_view,

|

||||

resolve_target: None,

|

||||

ops: Operations {

|

||||

load: LoadOp::Clear(Color::BLACK.into()),

|

||||

store: true,

|

||||

},

|

||||

}),

|

||||

);

|

||||

color_attachments.push(view_prepass_textures.motion_vectors.as_ref().map(

|

||||

|view_motion_vectors_texture| RenderPassColorAttachment {

|

||||

view: &view_motion_vectors_texture.default_view,

|

||||

resolve_target: None,

|

||||

ops: Operations {

|

||||

load: LoadOp::Clear(Color::BLACK.into()),

|

||||

// Blue channel doesn't matter, but set to 1.0 for possible faster clear

|

||||

// https://gpuopen.com/performance/#clears

|

||||

load: LoadOp::Clear(Color::rgb_linear(1.0, 1.0, 1.0).into()),

|

||||

store: true,

|

||||

},

|

||||

}));

|

||||

},

|

||||

));

|

||||

if color_attachments.iter().all(Option::is_none) {

|

||||

// all attachments are none: clear the attachment list so that no fragment shader is required

|

||||

color_attachments.clear();

|

||||

}

|

||||

|

||||

{

|

||||

|

||||
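The attachment handling in the hunk above can be sketched in plain Rust, without any GPU types. This is only an illustration: `Attachment` and `build_color_attachments` are hypothetical names, not part of Bevy. The sketch shows the pattern the new code uses: each prepass texture keeps a fixed slot (normals first, motion vectors second), an absent texture leaves `None` in its slot, and the list is emptied when every slot is `None` so that, as the source comment puts it, no fragment shader is required.

```rust
/// Hypothetical stand-in for a render pass color attachment.
#[derive(Debug, Clone, PartialEq)]
struct Attachment {
    label: &'static str,
}

/// Builds the attachment list with one fixed slot per prepass texture.
/// A missing texture leaves `None` in its slot instead of shifting the others.
fn build_color_attachments(
    has_normals: bool,
    has_motion_vectors: bool,
) -> Vec<Option<Attachment>> {
    let mut attachments = vec![
        has_normals.then(|| Attachment { label: "normals" }),
        has_motion_vectors.then(|| Attachment { label: "motion_vectors" }),
    ];

    // If every slot is empty, drop the slots entirely (depth-only pass).
    if attachments.iter().all(Option::is_none) {
        attachments.clear();
    }

    attachments
}
```

With only motion vectors enabled the result still has two entries, the first being `None`, which keeps the motion vector texture in the second slot regardless of whether normals are requested.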

557

crates/bevy_core_pipeline/src/taa/mod.rs

Normal file


@ -0,0 +1,557 @@

|

||||

use crate::{

|

||||

fullscreen_vertex_shader::fullscreen_shader_vertex_state,

|

||||

prelude::Camera3d,

|

||||

prepass::{DepthPrepass, MotionVectorPrepass, ViewPrepassTextures},

|

||||

};

|

||||

use bevy_app::{App, Plugin};

|

||||

use bevy_asset::{load_internal_asset, HandleUntyped};

|

||||

use bevy_core::FrameCount;

|

||||

use bevy_ecs::{

|

||||

prelude::{Bundle, Component, Entity},

|

||||

query::{QueryState, With},

|

||||

schedule::IntoSystemConfigs,

|

||||

system::{Commands, Query, Res, ResMut, Resource},

|

||||

world::{FromWorld, World},

|

||||

};

|

||||

use bevy_math::vec2;

|

||||

use bevy_reflect::{Reflect, TypeUuid};

|

||||

use bevy_render::{

|

||||

camera::{ExtractedCamera, TemporalJitter},

|

||||

prelude::{Camera, Projection},

|

||||

render_graph::{Node, NodeRunError, RenderGraph, RenderGraphContext},

|

||||

render_resource::{

|

||||

BindGroupDescriptor, BindGroupEntry, BindGroupLayout, BindGroupLayoutDescriptor,

|

||||

BindGroupLayoutEntry, BindingResource, BindingType, CachedRenderPipelineId,

|

||||

ColorTargetState, ColorWrites, Extent3d, FilterMode, FragmentState, MultisampleState,

|

||||

Operations, PipelineCache, PrimitiveState, RenderPassColorAttachment, RenderPassDescriptor,

|

||||

RenderPipelineDescriptor, Sampler, SamplerBindingType, SamplerDescriptor, Shader,

|

||||

ShaderStages, SpecializedRenderPipeline, SpecializedRenderPipelines, TextureDescriptor,

|

||||

TextureDimension, TextureFormat, TextureSampleType, TextureUsages, TextureViewDimension,

|

||||

},

|

||||

renderer::{RenderContext, RenderDevice},

|

||||

texture::{BevyDefault, CachedTexture, TextureCache},

|

||||

view::{prepare_view_uniforms, ExtractedView, Msaa, ViewTarget},

|

||||

ExtractSchedule, MainWorld, Render, RenderApp, RenderSet,

|

||||

};

|

||||

|

||||

mod draw_3d_graph {

|

||||

pub mod node {

|

||||

/// Label for the TAA render node.

|

||||

pub const TAA: &str = "taa";

|

||||

}

|

||||

}

|

||||

|

||||

const TAA_SHADER_HANDLE: HandleUntyped =

|

||||

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 656865235226276);

|

||||

|

||||

/// Plugin for temporal anti-aliasing. Disables multisample anti-aliasing (MSAA).

|

||||

///

|

||||

/// See [`TemporalAntiAliasSettings`] for more details.

|

||||

pub struct TemporalAntiAliasPlugin;

|

||||

|

||||

impl Plugin for TemporalAntiAliasPlugin {

|

||||

fn build(&self, app: &mut App) {

|

||||

load_internal_asset!(app, TAA_SHADER_HANDLE, "taa.wgsl", Shader::from_wgsl);

|

||||

|

||||

app.insert_resource(Msaa::Off)

|

||||

.register_type::<TemporalAntiAliasSettings>();

|

||||

|

||||

let Ok(render_app) = app.get_sub_app_mut(RenderApp) else { return };

|

||||

|

||||

render_app

|

||||

.init_resource::<TAAPipeline>()

|

||||

.init_resource::<SpecializedRenderPipelines<TAAPipeline>>()

|

||||

.add_systems(ExtractSchedule, extract_taa_settings)

|

||||

.add_systems(

|

||||

Render,

|

||||

(

|

||||

prepare_taa_jitter

|

||||

.before(prepare_view_uniforms)

|

||||

.in_set(RenderSet::Prepare),

|

||||

prepare_taa_history_textures.in_set(RenderSet::Prepare),

|

||||

prepare_taa_pipelines.in_set(RenderSet::Prepare),

|

||||

),

|

||||

);

|

||||

|

||||

let taa_node = TAANode::new(&mut render_app.world);

|

||||

let mut graph = render_app.world.resource_mut::<RenderGraph>();

|

||||

let draw_3d_graph = graph

|

||||

.get_sub_graph_mut(crate::core_3d::graph::NAME)

|

||||

.unwrap();

|

||||

draw_3d_graph.add_node(draw_3d_graph::node::TAA, taa_node);

|

||||

// MAIN_PASS -> TAA -> BLOOM -> TONEMAPPING

|

||||

draw_3d_graph.add_node_edge(

|

||||

crate::core_3d::graph::node::MAIN_PASS,

|

||||

draw_3d_graph::node::TAA,

|

||||

);

|

||||

draw_3d_graph.add_node_edge(draw_3d_graph::node::TAA, crate::core_3d::graph::node::BLOOM);

|

||||

draw_3d_graph.add_node_edge(

|

||||

draw_3d_graph::node::TAA,

|

||||

crate::core_3d::graph::node::TONEMAPPING,

|

||||

);

|

||||

}

|

||||

}

|

||||

|

||||

/// Bundle to apply temporal anti-aliasing.

|

||||

#[derive(Bundle, Default)]

|

||||

pub struct TemporalAntiAliasBundle {

|

||||

pub settings: TemporalAntiAliasSettings,

|

||||

pub jitter: TemporalJitter,

|

||||

pub depth_prepass: DepthPrepass,

|

||||

pub motion_vector_prepass: MotionVectorPrepass,

|

||||

}

|

||||

|

||||

/// Component to apply temporal anti-aliasing to a 3D perspective camera.

|

||||

///

|

||||

/// Temporal anti-aliasing (TAA) is a form of image smoothing/filtering, like

|

||||

/// multisample anti-aliasing (MSAA), or fast approximate anti-aliasing (FXAA).

|

||||

/// TAA works by blending (averaging) each frame with the past few frames.

|

||||

///

|

||||

/// # Tradeoffs

|

||||

///

|

||||

/// Pros:

|

||||

/// * Cost scales with screen/view resolution, unlike MSAA which scales with number of triangles

|

||||

/// * Filters more types of aliasing than MSAA, such as textures and singular bright pixels

|

||||

/// * Greatly increases the quality of stochastic rendering techniques such as SSAO, shadow mapping, etc

|

||||

///

|

||||

/// Cons:

|

||||

/// * Chance of "ghosting" - ghostly trails left behind moving objects

|

||||

/// * Thin geometry, lighting detail, or texture lines may flicker or disappear

|

||||

/// * Slightly blurs the image, leading to a softer look (using an additional sharpening pass can reduce this)

|

||||

///

|

||||

/// Because TAA blends past frames with the current frame, when the frames differ too much

|

||||

/// (such as with fast moving objects or camera cuts), ghosting artifacts may occur.

|

||||

///

|

||||

/// Artifacts tend to be reduced at higher framerates and rendering resolution.

|

||||

///

|

||||

/// # Usage Notes

|

||||

///

|

||||

/// Requires that you add [`TemporalAntiAliasPlugin`] to your app,

|

||||

/// and add the [`DepthPrepass`], [`MotionVectorPrepass`], and [`TemporalJitter`]

|

||||

/// components to your camera.

|

||||

///

|

||||

/// Cannot be used with [`bevy_render::camera::OrthographicProjection`].

|

||||

///

|

||||

/// Currently does not support skinned meshes. There will probably be ghosting artifacts if used with them.

|

||||

/// Does not work well with alpha-blended meshes as it requires depth writing to determine motion.

|

||||

///

|

||||

/// It is very important that correct motion vectors are written for everything on screen.

|

||||

/// Failure to do so will lead to ghosting artifacts. For instance, if particle effects

|

||||

/// are added using a third party library, the library must either:

|

||||

/// 1. Write particle motion vectors to the motion vectors prepass texture

|

||||

/// 2. Render particles after TAA

|

||||

#[derive(Component, Reflect, Clone)]

|

||||

pub struct TemporalAntiAliasSettings {

|

||||

/// Set to true to delete the saved temporal history (past frames).

|

||||

///

|

||||

/// Useful for preventing ghosting when the history is no longer

|

||||

/// representative of the current frame, such as in sudden camera cuts.

|

||||

///

|

||||

/// After setting this to true, it will automatically be toggled

|

||||

/// back to false after one frame.

|

||||

pub reset: bool,

|

||||

}

|

||||

|

||||

impl Default for TemporalAntiAliasSettings {

|

||||

fn default() -> Self {

|

||||

Self { reset: true }

|

||||

}

|

||||

}

|

||||

|

||||

struct TAANode {

|

||||

view_query: QueryState<(

|

||||

&'static ExtractedCamera,

|

||||

&'static ViewTarget,

|

||||

&'static TAAHistoryTextures,

|

||||

&'static ViewPrepassTextures,

|

||||

&'static TAAPipelineId,

|

||||

)>,

|

||||

}

|

||||

|

||||

impl TAANode {

|

||||

fn new(world: &mut World) -> Self {

|

||||

Self {

|

||||

view_query: QueryState::new(world),

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl Node for TAANode {

|

||||

fn update(&mut self, world: &mut World) {

|

||||

self.view_query.update_archetypes(world);

|

||||

}

|

||||

|

||||

fn run(

|

||||

&self,

|

||||

graph: &mut RenderGraphContext,

|

||||

render_context: &mut RenderContext,

|

||||

world: &World,

|

||||

) -> Result<(), NodeRunError> {

|

||||

let (

|

||||

Ok((camera, view_target, taa_history_textures, prepass_textures, taa_pipeline_id)),

|

||||

Some(pipelines),

|

||||

Some(pipeline_cache),

|

||||

) = (

|

||||

self.view_query.get_manual(world, graph.view_entity()),

|

||||

world.get_resource::<TAAPipeline>(),

|

||||

world.get_resource::<PipelineCache>(),

|

||||

) else {

|

||||

return Ok(());

|

||||

};

|

||||

let (

|

||||

Some(taa_pipeline),

|

||||

Some(prepass_motion_vectors_texture),

|

||||

Some(prepass_depth_texture),

|

||||

) = (

|

||||

pipeline_cache.get_render_pipeline(taa_pipeline_id.0),

|

||||

&prepass_textures.motion_vectors,

|

||||

&prepass_textures.depth,

|

||||

) else {

|

||||

return Ok(());

|

||||

};

|

||||

let view_target = view_target.post_process_write();

|

||||

|

||||

let taa_bind_group =

|

||||

render_context

|

||||

.render_device()

|

||||

.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("taa_bind_group"),

|

||||

layout: &pipelines.taa_bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

resource: BindingResource::TextureView(view_target.source),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::TextureView(

|

||||

&taa_history_textures.read.default_view,

|

||||

),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: BindingResource::TextureView(

|

||||

&prepass_motion_vectors_texture.default_view,

|

||||

),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 3,

|

||||

resource: BindingResource::TextureView(

|

||||

&prepass_depth_texture.default_view,

|

||||

),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 4,

|

||||

resource: BindingResource::Sampler(&pipelines.nearest_sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 5,

|

||||

resource: BindingResource::Sampler(&pipelines.linear_sampler),

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

{

|

||||

let mut taa_pass = render_context.begin_tracked_render_pass(RenderPassDescriptor {

|

||||

label: Some("taa_pass"),

|

||||

color_attachments: &[

|

||||

Some(RenderPassColorAttachment {

|

||||

view: view_target.destination,

|

||||

resolve_target: None,

|

||||

ops: Operations::default(),

|

||||

}),

|

||||

Some(RenderPassColorAttachment {

|

||||

view: &taa_history_textures.write.default_view,

|

||||

resolve_target: None,

|

||||

ops: Operations::default(),

|

||||

}),

|

||||

],

|

||||

depth_stencil_attachment: None,

|

||||

});

|

||||

taa_pass.set_render_pipeline(taa_pipeline);

|

||||

taa_pass.set_bind_group(0, &taa_bind_group, &[]);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

taa_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

taa_pass.draw(0..3, 0..1);

|

||||

}

|

||||

|

||||

Ok(())

|

||||

}

|

||||

}

|

||||

|

||||

#[derive(Resource)]

|

||||

struct TAAPipeline {

|

||||

taa_bind_group_layout: BindGroupLayout,

|

||||

nearest_sampler: Sampler,

|

||||

linear_sampler: Sampler,

|

||||

}

|

||||

|

||||

impl FromWorld for TAAPipeline {

|

||||

fn from_world(world: &mut World) -> Self {

|

||||

let render_device = world.resource::<RenderDevice>();

|

||||

|

||||

let nearest_sampler = render_device.create_sampler(&SamplerDescriptor {

|

||||

label: Some("taa_nearest_sampler"),

|

||||

mag_filter: FilterMode::Nearest,

|

||||

min_filter: FilterMode::Nearest,

|

||||

..SamplerDescriptor::default()

|

||||

});

|

||||

let linear_sampler = render_device.create_sampler(&SamplerDescriptor {

|

||||

label: Some("taa_linear_sampler"),

|

||||

mag_filter: FilterMode::Linear,

|

||||

min_filter: FilterMode::Linear,

|

||||

..SamplerDescriptor::default()

|

||||

});

|

||||

|

||||

let taa_bind_group_layout =

|

||||

render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {

|

||||

label: Some("taa_bind_group_layout"),

|

||||

entries: &[

|

||||

// View target (read)

|

||||

BindGroupLayoutEntry {

|

||||

binding: 0,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

count: None,

|

||||

},

|

||||

// TAA History (read)

|

||||

BindGroupLayoutEntry {

|

||||

binding: 1,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

count: None,

|

||||

},

|

||||

// Motion Vectors

|

||||

BindGroupLayoutEntry {

|

||||

binding: 2,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

count: None,

|

||||

},

|

||||

// Depth

|

||||

BindGroupLayoutEntry {

|

||||

binding: 3,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Depth,

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

count: None,

|

||||

},

|

||||

// Nearest sampler

|

||||

BindGroupLayoutEntry {

|

||||

binding: 4,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Sampler(SamplerBindingType::NonFiltering),

|

||||

count: None,

|

||||

},

|

||||

// Linear sampler

|

||||

BindGroupLayoutEntry {

|

||||

binding: 5,

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

ty: BindingType::Sampler(SamplerBindingType::Filtering),

|

||||

count: None,

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

TAAPipeline {

|

||||

taa_bind_group_layout,

|

||||

nearest_sampler,

|

||||

linear_sampler,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

#[derive(PartialEq, Eq, Hash, Clone)]

|

||||

struct TAAPipelineKey {

|

||||

hdr: bool,

|

||||

reset: bool,

|

||||

}

|

||||

|

||||

impl SpecializedRenderPipeline for TAAPipeline {

|

||||

type Key = TAAPipelineKey;

|

||||

|

||||

fn specialize(&self, key: Self::Key) -> RenderPipelineDescriptor {

|

||||

let mut shader_defs = vec![];

|

||||

|

||||

let format = if key.hdr {

|

||||

shader_defs.push("TONEMAP".into());

|

||||

ViewTarget::TEXTURE_FORMAT_HDR

|

||||

} else {

|

||||

TextureFormat::bevy_default()

|

||||

};

|

||||

|

||||

if key.reset {

|

||||

shader_defs.push("RESET".into());

|

||||

}

|

||||

|

||||

RenderPipelineDescriptor {

|

||||

label: Some("taa_pipeline".into()),

|

||||

layout: vec![self.taa_bind_group_layout.clone()],

|

||||

vertex: fullscreen_shader_vertex_state(),

|

||||

fragment: Some(FragmentState {

|

||||

shader: TAA_SHADER_HANDLE.typed::<Shader>(),

|

||||

shader_defs,

|

||||

entry_point: "taa".into(),

|

||||

targets: vec![

|

||||

Some(ColorTargetState {

|

||||

format,

|

||||

blend: None,

|

||||

write_mask: ColorWrites::ALL,

|

||||

}),

|

||||

Some(ColorTargetState {

|

||||

format,

|

||||

blend: None,

|

||||

write_mask: ColorWrites::ALL,

|

||||

}),

|

||||

],

|

||||

}),

|

||||

primitive: PrimitiveState::default(),

|

||||

depth_stencil: None,

|

||||

multisample: MultisampleState::default(),

|

||||

push_constant_ranges: Vec::new(),

|

||||

}

|

||||

}

|

||||

}
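The way `specialize` turns the pipeline key into shader defines can be isolated as a small pure function. The sketch below is illustrative only: `PipelineKey` and `shader_defs_for` are hypothetical names that mirror `TAAPipelineKey` and the `shader_defs` vector, with plain string slices standing in for Bevy's shader-def type.

```rust
/// Hypothetical mirror of `TAAPipelineKey`.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
struct PipelineKey {
    hdr: bool,
    reset: bool,
}

/// Maps a key to the shader defines that the specialized pipeline would use.
/// HDR views add `TONEMAP`; a history reset adds `RESET`.
fn shader_defs_for(key: &PipelineKey) -> Vec<&'static str> {
    let mut defs = Vec::new();
    if key.hdr {
        defs.push("TONEMAP");
    }
    if key.reset {
        defs.push("RESET");
    }
    defs
}
```

Two boolean fields give at most four pipeline variants per view configuration, which is why `prepare_taa_pipelines` can afford to specialize the non-reset variant ahead of time.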

|

||||

|

||||

fn extract_taa_settings(mut commands: Commands, mut main_world: ResMut<MainWorld>) {

|

||||

let mut cameras_3d = main_world

|

||||

.query_filtered::<(Entity, &Camera, &Projection, &mut TemporalAntiAliasSettings), (

|

||||

With<Camera3d>,

|

||||

With<TemporalJitter>,

|

||||

With<DepthPrepass>,

|

||||

With<MotionVectorPrepass>,

|

||||

)>();

|

||||

|

||||

for (entity, camera, camera_projection, mut taa_settings) in

|

||||

cameras_3d.iter_mut(&mut main_world)

|

||||

{

|

||||

let has_perspective_projection = matches!(camera_projection, Projection::Perspective(_));

|

||||

if camera.is_active && has_perspective_projection {

|

||||

commands.get_or_spawn(entity).insert(taa_settings.clone());

|

||||

taa_settings.reset = false;

|

||||

}

|

||||

}

|

||||

}
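The one-frame behaviour of `reset` follows from the order of operations in `extract_taa_settings`: the settings are cloned for the render world first, and only then is the main-world flag cleared. A minimal sketch of that ordering, using a hypothetical `Settings` struct and `extract` function rather than the real ECS types:

```rust
/// Hypothetical mirror of `TemporalAntiAliasSettings`.
#[derive(Debug, Clone, PartialEq)]
struct Settings {
    reset: bool,
}

impl Default for Settings {
    /// A fresh camera has no valid history yet, so it starts with a reset.
    fn default() -> Self {
        Self { reset: true }
    }
}

/// Returns the snapshot handed to the render world, then clears the flag on
/// the main-world copy so the reset applies to exactly one frame.
fn extract(main_world_settings: &mut Settings) -> Settings {
    let snapshot = main_world_settings.clone();
    main_world_settings.reset = false;
    snapshot
}
```

Calling `extract` twice on a default value yields a snapshot with `reset == true` followed by one with `reset == false`; setting the flag again (for example on a camera cut) repeats the cycle.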

|

||||

|

||||

fn prepare_taa_jitter(

|

||||

frame_count: Res<FrameCount>,

|

||||

mut query: Query<&mut TemporalJitter, With<TemporalAntiAliasSettings>>,

|

||||

) {

|

||||

// Halton sequence (2, 3) - 0.5, skipping i = 0

|

||||

let halton_sequence = [

|

||||

vec2(0.0, -0.16666666),

|

||||

vec2(-0.25, 0.16666669),

|

||||

vec2(0.25, -0.3888889),

|

||||

vec2(-0.375, -0.055555552),

|

||||

vec2(0.125, 0.2777778),

|

||||

vec2(-0.125, -0.2777778),

|

||||

vec2(0.375, 0.055555582),

|

||||

vec2(-0.4375, 0.3888889),

|

||||

];

|

||||

|

||||

let offset = halton_sequence[frame_count.0 as usize % halton_sequence.len()];

|

||||

|

||||

for mut jitter in &mut query {

|

||||

jitter.offset = offset;

|

||||

}

|

||||

}
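The hard-coded table in `prepare_taa_jitter` can be reproduced from the definition given in its comment: the Halton sequence in bases 2 and 3, shifted by 0.5 and starting at index 1. The sketch below is a standalone illustration; `halton` and `jitter_offset` are hypothetical helper names, and tuples stand in for `Vec2`.

```rust
/// Returns the `index`-th element of the Halton sequence in the given base
/// (radical inverse of `index`), a value in the range [0, 1).
fn halton(mut index: u32, base: u32) -> f32 {
    let mut result = 0.0_f32;
    let mut fraction = 1.0_f32 / base as f32;
    while index > 0 {
        result += (index % base) as f32 * fraction;
        index /= base;
        fraction /= base as f32;
    }
    result
}

/// Sub-pixel jitter for a frame: Halton(2, 3) centered on zero, cycling
/// through 8 samples and skipping index 0.
fn jitter_offset(frame_count: u32) -> (f32, f32) {
    let index = frame_count % 8 + 1;
    (halton(index, 2) - 0.5, halton(index, 3) - 0.5)
}
```

For frame 0 this gives approximately `(0.0, -0.1667)` and for frame 7 approximately `(-0.4375, 0.3889)`, matching the first and last table entries up to floating-point rounding. Precomputing the table, as the commit does, avoids recomputing these values every frame.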

#[derive(Component)]
struct TAAHistoryTextures {
    write: CachedTexture,
    read: CachedTexture,
}

fn prepare_taa_history_textures(
    mut commands: Commands,
    mut texture_cache: ResMut<TextureCache>,
    render_device: Res<RenderDevice>,
    frame_count: Res<FrameCount>,
    views: Query<(Entity, &ExtractedCamera, &ExtractedView), With<TemporalAntiAliasSettings>>,
) {
    for (entity, camera, view) in &views {
        if let Some(physical_viewport_size) = camera.physical_viewport_size {
            let mut texture_descriptor = TextureDescriptor {
                label: None,
                size: Extent3d {
                    depth_or_array_layers: 1,
                    width: physical_viewport_size.x,
                    height: physical_viewport_size.y,
                },
                mip_level_count: 1,
                sample_count: 1,
                dimension: TextureDimension::D2,
                format: if view.hdr {
                    ViewTarget::TEXTURE_FORMAT_HDR
                } else {
                    TextureFormat::bevy_default()
                },
                usage: TextureUsages::TEXTURE_BINDING | TextureUsages::RENDER_ATTACHMENT,
                view_formats: &[],
            };

            texture_descriptor.label = Some("taa_history_1_texture");
            let history_1_texture = texture_cache.get(&render_device, texture_descriptor.clone());

            texture_descriptor.label = Some("taa_history_2_texture");
            let history_2_texture = texture_cache.get(&render_device, texture_descriptor);

            let textures = if frame_count.0 % 2 == 0 {
                TAAHistoryTextures {
                    write: history_1_texture,
                    read: history_2_texture,
                }
            } else {
                TAAHistoryTextures {
                    write: history_2_texture,
                    read: history_1_texture,
                }
            };

            commands.entity(entity).insert(textures);
        }
    }
}
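The parity check on `frame_count` above is a ping-pong scheme: whichever texture is written this frame becomes the one read next frame. The sketch below isolates that selection logic with plain enums in place of `CachedTexture`; `HistorySlot`, `HistoryPair`, and `select_history` are hypothetical names used only for illustration.

```rust
/// Stand-in for the two cached history textures (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum HistorySlot {
    First,
    Second,
}

/// Stand-in for `TAAHistoryTextures` (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct HistoryPair {
    write: HistorySlot,
    read: HistorySlot,
}

/// Swap the read/write roles every frame based on frame parity.
fn select_history(frame_count: u32) -> HistoryPair {
    if frame_count % 2 == 0 {
        HistoryPair {
            write: HistorySlot::First,
            read: HistorySlot::Second,
        }
    } else {
        HistoryPair {
            write: HistorySlot::Second,
            read: HistorySlot::First,
        }
    }
}
```

For any frame `n`, `select_history(n).write` equals `select_history(n + 1).read`, which is the property the TAA pass relies on to see the previous frame's output.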

#[derive(Component)]
struct TAAPipelineId(CachedRenderPipelineId);

fn prepare_taa_pipelines(
    mut commands: Commands,
    pipeline_cache: Res<PipelineCache>,
    mut pipelines: ResMut<SpecializedRenderPipelines<TAAPipeline>>,
    pipeline: Res<TAAPipeline>,
    views: Query<(Entity, &ExtractedView, &TemporalAntiAliasSettings)>,
) {
    for (entity, view, taa_settings) in &views {
        let mut pipeline_key = TAAPipelineKey {
            hdr: view.hdr,
            reset: taa_settings.reset,
        };
        let pipeline_id = pipelines.specialize(&pipeline_cache, &pipeline, pipeline_key.clone());

        // Prepare non-reset pipeline anyways - it will be necessary next frame
        if pipeline_key.reset {
            pipeline_key.reset = false;
            pipelines.specialize(&pipeline_cache, &pipeline, pipeline_key);
        }

        commands.entity(entity).insert(TAAPipelineId(pipeline_id));
    }
}

196

crates/bevy_core_pipeline/src/taa/taa.wgsl

Normal file

@ -0,0 +1,196 @@

// References:
// https://www.elopezr.com/temporal-aa-and-the-quest-for-the-holy-trail
// http://behindthepixels.io/assets/files/TemporalAA.pdf
// http://leiy.cc/publications/TAA/TAA_EG2020_Talk.pdf
// https://advances.realtimerendering.com/s2014/index.html#_HIGH-QUALITY_TEMPORAL_SUPERSAMPLING

// Controls how much to blend between the current and past samples
// Lower numbers = less of the current sample and more of the past sample = more smoothing
// Values chosen empirically
const DEFAULT_HISTORY_BLEND_RATE: f32 = 0.1; // Default blend rate to use when no confidence in history
const MIN_HISTORY_BLEND_RATE: f32 = 0.015; // Minimum blend rate allowed, to ensure at least some of the current sample is used

#import bevy_core_pipeline::fullscreen_vertex_shader

@group(0) @binding(0) var view_target: texture_2d<f32>;
@group(0) @binding(1) var history: texture_2d<f32>;
@group(0) @binding(2) var motion_vectors: texture_2d<f32>;
@group(0) @binding(3) var depth: texture_depth_2d;
@group(0) @binding(4) var nearest_sampler: sampler;
@group(0) @binding(5) var linear_sampler: sampler;

struct Output {
    @location(0) view_target: vec4<f32>,
    @location(1) history: vec4<f32>,
};

// TAA is ideally applied after tonemapping, but before post processing
// Post processing wants to go before tonemapping, which conflicts
// Solution: Put TAA before tonemapping, tonemap TAA input, apply TAA, invert-tonemap TAA output
// https://advances.realtimerendering.com/s2014/index.html#_HIGH-QUALITY_TEMPORAL_SUPERSAMPLING, slide 20
// https://gpuopen.com/learn/optimized-reversible-tonemapper-for-resolve
fn rcp(x: f32) -> f32 { return 1.0 / x; }
fn max3(x: vec3<f32>) -> f32 { return max(x.r, max(x.g, x.b)); }
fn tonemap(color: vec3<f32>) -> vec3<f32> { return color * rcp(max3(color) + 1.0); }
fn reverse_tonemap(color: vec3<f32>) -> vec3<f32> { return color * rcp(1.0 - max3(color)); }
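The `tonemap` / `reverse_tonemap` pair above is invertible: if m is the largest channel, tonemapping maps it to m / (m + 1), and 1 - m / (m + 1) = 1 / (m + 1), so the reverse step multiplies by exactly the factor that was divided out. A small CPU-side sketch of that round trip follows, using `[f32; 3]` in place of `vec3<f32>`; it mirrors the shader functions for illustration and is not code from the commit.

```rust
/// Largest of the three channels.
fn max3(c: [f32; 3]) -> f32 {
    c[0].max(c[1]).max(c[2])
}

/// Compress an HDR color into [0, 1) by dividing by (max channel + 1).
fn tonemap(c: [f32; 3]) -> [f32; 3] {
    let scale = 1.0 / (max3(c) + 1.0);
    [c[0] * scale, c[1] * scale, c[2] * scale]
}

/// Undo `tonemap`. The input must have every channel below 1.0,
/// otherwise the divisor is zero or negative.
fn reverse_tonemap(c: [f32; 3]) -> [f32; 3] {
    let scale = 1.0 / (1.0 - max3(c));
    [c[0] * scale, c[1] * scale, c[2] * scale]
}
```

For example, `reverse_tonemap(tonemap([4.0, 0.5, 2.0]))` should return the input up to floating-point rounding, while the intermediate value stays below 1.0 in every channel.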

// The following 3 functions are from Playdead (MIT-licensed)
// https://github.com/playdeadgames/temporal/blob/master/Assets/Shaders/TemporalReprojection.shader
fn RGB_to_YCoCg(rgb: vec3<f32>) -> vec3<f32> {
    let y = (rgb.r / 4.0) + (rgb.g / 2.0) + (rgb.b / 4.0);
    let co = (rgb.r / 2.0) - (rgb.b / 2.0);
    let cg = (-rgb.r / 4.0) + (rgb.g / 2.0) - (rgb.b / 4.0);
    return vec3(y, co, cg);
}

fn YCoCg_to_RGB(ycocg: vec3<f32>) -> vec3<f32> {
    let r = ycocg.x + ycocg.y - ycocg.z;
    let g = ycocg.x + ycocg.z;
    let b = ycocg.x - ycocg.y - ycocg.z;
    return saturate(vec3(r, g, b));
}

fn clip_towards_aabb_center(history_color: vec3<f32>, current_color: vec3<f32>, aabb_min: vec3<f32>, aabb_max: vec3<f32>) -> vec3<f32> {
    let p_clip = 0.5 * (aabb_max + aabb_min);
    let e_clip = 0.5 * (aabb_max - aabb_min) + 0.00000001;
    let v_clip = history_color - p_clip;
    let v_unit = v_clip / e_clip;
    let a_unit = abs(v_unit);
    let ma_unit = max3(a_unit);
    if ma_unit > 1.0 {
        return p_clip + (v_clip / ma_unit);
    } else {
        return history_color;
    }
}
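`clip_towards_aabb_center` pulls a history color that lies outside the neighborhood box back along the line to the box center until it touches the box surface, rather than clamping each channel independently. Below is a CPU-side sketch of the same arithmetic on `[f32; 3]`; it is an illustration only, and it drops the shader's `current_color` parameter because the function body does not use it.

```rust
/// Clip `history` towards the center of the box [aabb_min, aabb_max].
/// Points already inside the box are returned unchanged.
fn clip_towards_aabb_center(
    history: [f32; 3],
    aabb_min: [f32; 3],
    aabb_max: [f32; 3],
) -> [f32; 3] {
    let mut center = [0.0_f32; 3];
    let mut offset = [0.0_f32; 3];
    let mut max_ratio = 0.0_f32;
    for i in 0..3 {
        center[i] = 0.5 * (aabb_max[i] + aabb_min[i]);
        // Small epsilon avoids dividing by zero for a degenerate box.
        let half_extent = 0.5 * (aabb_max[i] - aabb_min[i]) + 1e-8;
        offset[i] = history[i] - center[i];
        // How far outside the box this axis is, in units of half extents.
        max_ratio = max_ratio.max((offset[i] / half_extent).abs());
    }
    if max_ratio > 1.0 {
        // Shrink the offset uniformly so the worst axis lands on the surface.
        [
            center[0] + offset[0] / max_ratio,
            center[1] + offset[1] / max_ratio,
            center[2] + offset[2] / max_ratio,
        ]
    } else {
        history
    }
}
```

With the unit box from `[0, 0, 0]` to `[1, 1, 1]`, the point `[2.0, 0.5, 0.5]` is moved to the box face at `[1.0, 0.5, 0.5]`, and a point inside the box is left as is.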

fn sample_history(u: f32, v: f32) -> vec3<f32> {
    return textureSample(history, linear_sampler, vec2(u, v)).rgb;
}

fn sample_view_target(uv: vec2<f32>) -> vec3<f32> {
    var sample = textureSample(view_target, nearest_sampler, uv).rgb;
#ifdef TONEMAP
    sample = tonemap(sample);
#endif
    return RGB_to_YCoCg(sample);
}

@fragment
fn taa(@location(0) uv: vec2<f32>) -> Output {
    let texture_size = vec2<f32>(textureDimensions(view_target));
    let texel_size = 1.0 / texture_size;

    // Fetch the current sample
    let original_color = textureSample(view_target, nearest_sampler, uv);
    var current_color = original_color.rgb;
#ifdef TONEMAP
    current_color = tonemap(current_color);
#endif

#ifndef RESET
    // Pick the closest motion_vector from 5 samples (reduces aliasing on the edges of moving entities)
    // https://advances.realtimerendering.com/s2014/index.html#_HIGH-QUALITY_TEMPORAL_SUPERSAMPLING, slide 27
    let offset = texel_size * 2.0;
    let d_uv_tl = uv + vec2(-offset.x, offset.y);
    let d_uv_tr = uv + vec2(offset.x, offset.y);
    let d_uv_bl = uv + vec2(-offset.x, -offset.y);
    let d_uv_br = uv + vec2(offset.x, -offset.y);
    var closest_uv = uv;
    let d_tl = textureSample(depth, nearest_sampler, d_uv_tl);
    let d_tr = textureSample(depth, nearest_sampler, d_uv_tr);
    var closest_depth = textureSample(depth, nearest_sampler, uv);
    let d_bl = textureSample(depth, nearest_sampler, d_uv_bl);
    let d_br = textureSample(depth, nearest_sampler, d_uv_br);
    if d_tl > closest_depth {
        closest_uv = d_uv_tl;
        closest_depth = d_tl;
    }
    if d_tr > closest_depth {
        closest_uv = d_uv_tr;
        closest_depth = d_tr;
    }
    if d_bl > closest_depth {
        closest_uv = d_uv_bl;
        closest_depth = d_bl;
    }
    if d_br > closest_depth {
        closest_uv = d_uv_br;
    }
    let closest_motion_vector = textureSample(motion_vectors, nearest_sampler, closest_uv).rg;

    // Reproject to find the equivalent sample from the past
    // Uses 5-sample Catmull-Rom filtering (reduces blurriness)
    // Catmull-Rom filtering: https://gist.github.com/TheRealMJP/c83b8c0f46b63f3a88a5986f4fa982b1
    // Ignoring corners: https://www.activision.com/cdn/research/Dynamic_Temporal_Antialiasing_and_Upsampling_in_Call_of_Duty_v4.pdf#page=68
    // Technically we should renormalize the weights since we're skipping the corners, but it's basically the same result
    let history_uv = uv - closest_motion_vector;
    let sample_position = history_uv * texture_size;
    let texel_center = floor(sample_position - 0.5) + 0.5;
    let f = sample_position - texel_center;
    let w0 = f * (-0.5 + f * (1.0 - 0.5 * f));
    let w1 = 1.0 + f * f * (-2.5 + 1.5 * f);
    let w2 = f * (0.5 + f * (2.0 - 1.5 * f));
    let w3 = f * f * (-0.5 + 0.5 * f);
    let w12 = w1 + w2;
    let texel_position_0 = (texel_center - 1.0) * texel_size;
    let texel_position_3 = (texel_center + 2.0) * texel_size;
    let texel_position_12 = (texel_center + (w2 / w12)) * texel_size;
    var history_color = sample_history(texel_position_12.x, texel_position_0.y) * w12.x * w0.y;
    history_color += sample_history(texel_position_0.x, texel_position_12.y) * w0.x * w12.y;
    history_color += sample_history(texel_position_12.x, texel_position_12.y) * w12.x * w12.y;
    history_color += sample_history(texel_position_3.x, texel_position_12.y) * w3.x * w12.y;
    history_color += sample_history(texel_position_12.x, texel_position_3.y) * w12.x * w3.y;

    // Constrain past sample with 3x3 YCoCg variance clipping (reduces ghosting)
    // YCoCg: https://advances.realtimerendering.com/s2014/index.html#_HIGH-QUALITY_TEMPORAL_SUPERSAMPLING, slide 33
    // Variance clipping: https://developer.download.nvidia.com/gameworks/events/GDC2016/msalvi_temporal_supersampling.pdf
    let s_tl = sample_view_target(uv + vec2(-texel_size.x, texel_size.y));
    let s_tm = sample_view_target(uv + vec2( 0.0, texel_size.y));
    let s_tr = sample_view_target(uv + vec2( texel_size.x, texel_size.y));
    let s_ml = sample_view_target(uv + vec2(-texel_size.x, 0.0));
    let s_mm = RGB_to_YCoCg(current_color);
    let s_mr = sample_view_target(uv + vec2( texel_size.x, 0.0));
    let s_bl = sample_view_target(uv + vec2(-texel_size.x, -texel_size.y));
    let s_bm = sample_view_target(uv + vec2( 0.0, -texel_size.y));
    let s_br = sample_view_target(uv + vec2( texel_size.x, -texel_size.y));
    let moment_1 = s_tl + s_tm + s_tr + s_ml + s_mm + s_mr + s_bl + s_bm + s_br;
    let moment_2 = (s_tl * s_tl) + (s_tm * s_tm) + (s_tr * s_tr) + (s_ml * s_ml) + (s_mm * s_mm) + (s_mr * s_mr) + (s_bl * s_bl) + (s_bm * s_bm) + (s_br * s_br);
    let mean = moment_1 / 9.0;
    let variance = (moment_2 / 9.0) - (mean * mean);
    let std_deviation = sqrt(max(variance, vec3(0.0)));
    history_color = RGB_to_YCoCg(history_color);
    history_color = clip_towards_aabb_center(history_color, s_mm, mean - std_deviation, mean + std_deviation);
    history_color = YCoCg_to_RGB(history_color);

    // How confident we are that the history is representative of the current frame
    var history_confidence = textureSample(history, nearest_sampler, uv).a;
    let pixel_motion_vector = abs(closest_motion_vector) * texture_size;
    if pixel_motion_vector.x < 0.01 && pixel_motion_vector.y < 0.01 {
        // Increment when pixels are not moving
        history_confidence += 10.0;
    } else {
        // Else reset
        history_confidence = 1.0;
    }

    // Blend current and past sample
    // Use more of the history if we're confident in it (reduces noise when there is no motion)
    // https://hhoppe.com/supersample.pdf, section 4.1
    let current_color_factor = clamp(1.0 / history_confidence, MIN_HISTORY_BLEND_RATE, DEFAULT_HISTORY_BLEND_RATE);
    current_color = mix(history_color, current_color, current_color_factor);
#endif // #ifndef RESET


    // Write output to history and view target
    var out: Output;
#ifdef RESET
    let history_confidence = 1.0 / MIN_HISTORY_BLEND_RATE;
#endif
    out.history = vec4(current_color, history_confidence);
#ifdef TONEMAP
    current_color = reverse_tonemap(current_color);
#endif
    out.view_target = vec4(current_color, original_color.a);
    return out;
}
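The history reprojection in `taa` uses the standard Catmull-Rom weights `w0..w3`, which sum to 1 for any fractional offset `f`, and then skips the four corner taps. The sketch below reproduces the weight polynomials on the CPU and measures how much total weight the five remaining taps carry, to illustrate the comment about not renormalizing. `catmull_rom_weights` and `five_tap_weight_sum` are hypothetical helper names, not part of the commit.

```rust
/// Catmull-Rom weights for fractional offset `f` in [0, 1),
/// using the same polynomials as the shader.
fn catmull_rom_weights(f: f32) -> [f32; 4] {
    let w0 = f * (-0.5 + f * (1.0 - 0.5 * f));
    let w1 = 1.0 + f * f * (-2.5 + 1.5 * f);
    let w2 = f * (0.5 + f * (2.0 - 1.5 * f));
    let w3 = f * f * (-0.5 + 0.5 * f);
    [w0, w1, w2, w3]
}

/// Total weight of the five taps the shader keeps
/// (center plus the four edge taps, corners skipped).
fn five_tap_weight_sum(fx: f32, fy: f32) -> f32 {
    let x = catmull_rom_weights(fx);
    let y = catmull_rom_weights(fy);
    // The two middle weights are merged into one bilinear tap per axis.
    let (x12, y12) = (x[1] + x[2], y[1] + y[2]);
    x12 * y[0] + x[0] * y12 + x12 * y12 + x[3] * y12 + x12 * y[3]
}
```

The skipped corner weight is `(w0.x + w3.x) * (w0.y + w3.y)`, and `w0 + w3 = -0.5 * f * (1 - f)` is largest in magnitude at `f = 0.5`. There `five_tap_weight_sum(0.5, 0.5)` evaluates to 0.984375, so the five taps carry at least about 98.4% of the full filter weight, which is consistent with the shader comment that the result is basically the same.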

@ -170,6 +170,7 @@ fn extract_gizmo_data(
                MeshUniform {
                    flags: 0,
                    transform,
                    previous_transform: transform,
                    inverse_transpose_model,
                },
            ),

@ -168,7 +168,7 @@ pub struct StandardMaterial {
    /// This is usually generated and stored automatically ("baked") by 3D-modelling software.
    ///
    /// Typically, steep concave parts of a model (such as the armpit of a shirt) are darker,
    /// because they have little exposed to light.
    /// because they have little exposure to light.
    /// An occlusion map specifies those parts of the model that light doesn't reach well.
    ///
    /// The material will be less lit in places where this texture is dark.

@ -1,10 +1,11 @@
use bevy_app::Plugin;
use bevy_app::{Plugin, PreUpdate, Update};
use bevy_asset::{load_internal_asset, AssetServer, Handle, HandleUntyped};
use bevy_core_pipeline::{
    prelude::Camera3d,
    prepass::{
        AlphaMask3dPrepass, DepthPrepass, NormalPrepass, Opaque3dPrepass, ViewPrepassTextures,
        DEPTH_PREPASS_FORMAT, NORMAL_PREPASS_FORMAT,
        AlphaMask3dPrepass, DepthPrepass, MotionVectorPrepass, NormalPrepass, Opaque3dPrepass,
        ViewPrepassTextures, DEPTH_PREPASS_FORMAT, MOTION_VECTOR_PREPASS_FORMAT,
        NORMAL_PREPASS_FORMAT,
    },
};
use bevy_ecs::{
@ -14,6 +15,7 @@ use bevy_ecs::{
        SystemParamItem,
    },
};
use bevy_math::Mat4;
use bevy_reflect::TypeUuid;
use bevy_render::{
    camera::ExtractedCamera,
@ -29,24 +31,24 @@ use bevy_render::{
        BindGroup, BindGroupDescriptor, BindGroupEntry, BindGroupLayout, BindGroupLayoutDescriptor,
        BindGroupLayoutEntry, BindingResource, BindingType, BlendState, BufferBindingType,
        ColorTargetState, ColorWrites, CompareFunction, DepthBiasState, DepthStencilState,
        Extent3d, FragmentState, FrontFace, MultisampleState, PipelineCache, PolygonMode,
        PrimitiveState, RenderPipelineDescriptor, Shader, ShaderDefVal, ShaderRef, ShaderStages,
        ShaderType, SpecializedMeshPipeline, SpecializedMeshPipelineError,
        DynamicUniformBuffer, Extent3d, FragmentState, FrontFace, MultisampleState, PipelineCache,
        PolygonMode, PrimitiveState, RenderPipelineDescriptor, Shader, ShaderDefVal, ShaderRef,
        ShaderStages, ShaderType, SpecializedMeshPipeline, SpecializedMeshPipelineError,
        SpecializedMeshPipelines, StencilFaceState, StencilState, TextureDescriptor,
        TextureDimension, TextureFormat, TextureSampleType, TextureUsages, TextureViewDimension,
        VertexState,
        TextureDimension, TextureSampleType, TextureUsages, TextureViewDimension, VertexState,
    },
    renderer::RenderDevice,
    renderer::{RenderDevice, RenderQueue},
    texture::{FallbackImagesDepth, FallbackImagesMsaa, TextureCache},
    view::{ExtractedView, Msaa, ViewUniform, ViewUniformOffset, ViewUniforms, VisibleEntities},
    Extract, ExtractSchedule, Render, RenderApp, RenderSet,
};
use bevy_transform::prelude::GlobalTransform;
use bevy_utils::{tracing::error, HashMap};

use crate::{
    AlphaMode, DrawMesh, Material, MaterialPipeline, MaterialPipelineKey, MeshPipeline,
    MeshPipelineKey, MeshUniform, RenderMaterials, SetMaterialBindGroup, SetMeshBindGroup,
    MAX_CASCADES_PER_LIGHT, MAX_DIRECTIONAL_LIGHTS,
    prepare_lights, AlphaMode, DrawMesh, Material, MaterialPipeline, MaterialPipelineKey,
    MeshPipeline, MeshPipelineKey, MeshUniform, RenderMaterials, SetMaterialBindGroup,
    SetMeshBindGroup, MAX_CASCADES_PER_LIGHT, MAX_DIRECTIONAL_LIGHTS,
};

use std::{hash::Hash, marker::PhantomData};
@ -98,8 +100,8 @@ where
        );

        let Ok(render_app) = app.get_sub_app_mut(RenderApp) else {
            return;
        };
            return;
        };

        render_app
            .add_systems(
@ -108,7 +110,8 @@ where
            )
            .init_resource::<PrepassPipeline<M>>()
            .init_resource::<PrepassViewBindGroup>()
            .init_resource::<SpecializedMeshPipelines<PrepassPipeline<M>>>();
            .init_resource::<SpecializedMeshPipelines<PrepassPipeline<M>>>()
            .init_resource::<PreviousViewProjectionUniforms>();
    }
}

@ -128,33 +131,95 @@ where
    M::Data: PartialEq + Eq + Hash + Clone,
{
    fn build(&self, app: &mut bevy_app::App) {
        let no_prepass_plugin_loaded = app.world.get_resource::<AnyPrepassPluginLoaded>().is_none();

        if no_prepass_plugin_loaded {
            app.insert_resource(AnyPrepassPluginLoaded)
                .add_systems(Update, update_previous_view_projections)
                // At the start of each frame, last frame's GlobalTransforms become this frame's PreviousGlobalTransforms
                .add_systems(PreUpdate, update_mesh_previous_global_transforms);
        }

        let Ok(render_app) = app.get_sub_app_mut(RenderApp) else {
            return;
        };

        if no_prepass_plugin_loaded {
            render_app
                .init_resource::<DrawFunctions<Opaque3dPrepass>>()
                .init_resource::<DrawFunctions<AlphaMask3dPrepass>>()
                .add_systems(ExtractSchedule, extract_camera_prepass_phase)
                .add_systems(
                    Render,
                    (
                        prepare_prepass_textures
                            .in_set(RenderSet::Prepare)
                            .after(bevy_render::view::prepare_windows),
                        prepare_previous_view_projection_uniforms
                            .in_set(RenderSet::Prepare)
                            .after(PrepassLightsViewFlush),
                        apply_system_buffers
                            .in_set(RenderSet::Prepare)
                            .in_set(PrepassLightsViewFlush)
                            .after(prepare_lights),
                        sort_phase_system::<Opaque3dPrepass>.in_set(RenderSet::PhaseSort),
                        sort_phase_system::<AlphaMask3dPrepass>.in_set(RenderSet::PhaseSort),
                    ),
                );
        }

        render_app
            .add_systems(ExtractSchedule, extract_camera_prepass_phase)
            .add_render_command::<Opaque3dPrepass, DrawPrepass<M>>()
            .add_render_command::<AlphaMask3dPrepass, DrawPrepass<M>>()
            .add_systems(
                Render,
                (
                    prepare_prepass_textures
                        .in_set(RenderSet::Prepare)
                        .after(bevy_render::view::prepare_windows),
                    queue_prepass_material_meshes::<M>.in_set(RenderSet::Queue),
                    sort_phase_system::<Opaque3dPrepass>.in_set(RenderSet::PhaseSort),
                    sort_phase_system::<AlphaMask3dPrepass>.in_set(RenderSet::PhaseSort),
                ),
            )
            .init_resource::<DrawFunctions<Opaque3dPrepass>>()
            .init_resource::<DrawFunctions<AlphaMask3dPrepass>>()
            .add_render_command::<Opaque3dPrepass, DrawPrepass<M>>()
            .add_render_command::<AlphaMask3dPrepass, DrawPrepass<M>>();
                queue_prepass_material_meshes::<M>.in_set(RenderSet::Queue),
            );
    }
}

#[derive(Resource)]
struct AnyPrepassPluginLoaded;

#[derive(Component, ShaderType, Clone)]
pub struct PreviousViewProjection {
    pub view_proj: Mat4,
}

pub fn update_previous_view_projections(
    mut commands: Commands,
    query: Query<(Entity, &Camera, &GlobalTransform), (With<Camera3d>, With<MotionVectorPrepass>)>,
) {
    for (entity, camera, camera_transform) in &query {
        commands.entity(entity).insert(PreviousViewProjection {
            view_proj: camera.projection_matrix() * camera_transform.compute_matrix().inverse(),
        });
    }
}

#[derive(Component)]
pub struct PreviousGlobalTransform(pub Mat4);

pub fn update_mesh_previous_global_transforms(
    mut commands: Commands,
    views: Query<&Camera, (With<Camera3d>, With<MotionVectorPrepass>)>,
    meshes: Query<(Entity, &GlobalTransform), With<Handle<Mesh>>>,
) {
    let should_run = views.iter().any(|camera| camera.is_active);

    if should_run {
        for (entity, transform) in &meshes {
            commands
                .entity(entity)
                .insert(PreviousGlobalTransform(transform.compute_matrix()));
        }
    }
}

#[derive(Resource)]
pub struct PrepassPipeline<M: Material> {
    pub view_layout: BindGroupLayout,
    pub view_layout_motion_vectors: BindGroupLayout,
    pub view_layout_no_motion_vectors: BindGroupLayout,
    pub mesh_layout: BindGroupLayout,
    pub skinned_mesh_layout: BindGroupLayout,
    pub material_layout: BindGroupLayout,

@ -169,38 +234,80 @@ impl<M: Material> FromWorld for PrepassPipeline<M> {
        let render_device = world.resource::<RenderDevice>();
        let asset_server = world.resource::<AssetServer>();

        let view_layout = render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
            entries: &[
                // View
                BindGroupLayoutEntry {
                    binding: 0,
                    visibility: ShaderStages::VERTEX_FRAGMENT,
                    ty: BindingType::Buffer {
                        ty: BufferBindingType::Uniform,
                        has_dynamic_offset: true,
                        min_binding_size: Some(ViewUniform::min_size()),
        let view_layout_motion_vectors =
            render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
                entries: &[
                    // View
                    BindGroupLayoutEntry {
                        binding: 0,
                        visibility: ShaderStages::VERTEX | ShaderStages::FRAGMENT,
                        ty: BindingType::Buffer {
                            ty: BufferBindingType::Uniform,
                            has_dynamic_offset: true,
                            min_binding_size: Some(ViewUniform::min_size()),
                        },
                        count: None,
                    },
                    count: None,
                },
                // Globals
                BindGroupLayoutEntry {
                    binding: 1,
                    visibility: ShaderStages::VERTEX_FRAGMENT,
                    ty: BindingType::Buffer {
                        ty: BufferBindingType::Uniform,
                        has_dynamic_offset: false,
                        min_binding_size: Some(GlobalsUniform::min_size()),
                    // Globals
                    BindGroupLayoutEntry {
                        binding: 1,
                        visibility: ShaderStages::VERTEX_FRAGMENT,
                        ty: BindingType::Buffer {
                            ty: BufferBindingType::Uniform,
                            has_dynamic_offset: false,
                            min_binding_size: Some(GlobalsUniform::min_size()),
                        },
                        count: None,
                    },
                    count: None,
                },
            ],
            label: Some("prepass_view_layout"),
        });
                    // PreviousViewProjection
                    BindGroupLayoutEntry {
                        binding: 2,
                        visibility: ShaderStages::VERTEX | ShaderStages::FRAGMENT,
                        ty: BindingType::Buffer {
                            ty: BufferBindingType::Uniform,
                            has_dynamic_offset: true,
                            min_binding_size: Some(PreviousViewProjection::min_size()),
                        },
                        count: None,
                    },
                ],
                label: Some("prepass_view_layout_motion_vectors"),
            });

        let view_layout_no_motion_vectors =
            render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
                entries: &[
                    // View
                    BindGroupLayoutEntry {
                        binding: 0,
                        visibility: ShaderStages::VERTEX | ShaderStages::FRAGMENT,
                        ty: BindingType::Buffer {
                            ty: BufferBindingType::Uniform,
                            has_dynamic_offset: true,
                            min_binding_size: Some(ViewUniform::min_size()),
                        },
                        count: None,
                    },
                    // Globals
                    BindGroupLayoutEntry {
                        binding: 1,
                        visibility: ShaderStages::VERTEX_FRAGMENT,
                        ty: BindingType::Buffer {
                            ty: BufferBindingType::Uniform,
                            has_dynamic_offset: false,
                            min_binding_size: Some(GlobalsUniform::min_size()),
                        },
                        count: None,
                    },
                ],
                label: Some("prepass_view_layout_no_motion_vectors"),
            });

        let mesh_pipeline = world.resource::<MeshPipeline>();

        PrepassPipeline {
            view_layout,
            view_layout_motion_vectors,
            view_layout_no_motion_vectors,
            mesh_layout: mesh_pipeline.mesh_layout.clone(),
            skinned_mesh_layout: mesh_pipeline.skinned_mesh_layout.clone(),
            material_vertex_shader: match M::prepass_vertex_shader() {

@ -231,13 +338,20 @@ where
        key: Self::Key,
        layout: &MeshVertexBufferLayout,
    ) -> Result<RenderPipelineDescriptor, SpecializedMeshPipelineError> {
        let mut bind_group_layout = vec![self.view_layout.clone()];
        let mut bind_group_layouts = vec![if key
            .mesh_key