a919cb0a17

12 Commits


16d28ccb91


RenderGraph Labelization (#10644)

# Objective

Naming nodes and subgraphs in `RenderGraph` with `Cow<'static, str>` strings is a mess: the names are hardcoded and can overlap.

## Solution

Replace the hardcoded and potentially overlapping strings used for nodes and subgraphs inside `RenderGraph` with Bevy's label system.
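To make the difference from strings concrete, here is a minimal std-only sketch of label-based identity. The `Label` trait, its blanket impl, and the `Pass2d`/`Pass3d`/`MyRenderLabel` types are hypothetical; Bevy's real `RenderLabel` and `RenderSubGraph` traits are generated by its own label machinery and differ in detail. What the sketch shows is the core property: two labels are equal only if they have the same concrete type and the same value, so `Pass2d::Tonemapping` and `Pass3d::Tonemapping` stay distinct even though their `Debug` output is identical.

```rust
use std::any::{Any, TypeId};
use std::collections::HashSet;
use std::fmt::Debug;
use std::hash::{Hash, Hasher};

/// Simplified, hypothetical label trait (not Bevy's real `RenderLabel`):
/// any `'static` type that is `Debug + Hash + Eq` can be used as a label.
trait Label: Debug + 'static {
    fn as_any(&self) -> &dyn Any;
    fn dyn_eq(&self, other: &dyn Label) -> bool;
    fn dyn_hash(&self, state: &mut dyn Hasher);
}

impl<T: Debug + Hash + Eq + 'static> Label for T {
    fn as_any(&self) -> &dyn Any {
        self
    }

    fn dyn_eq(&self, other: &dyn Label) -> bool {
        // Equal only if `other` has the same concrete type *and* the same value.
        other.as_any().downcast_ref::<T>().map_or(false, |o| o == self)
    }

    fn dyn_hash(&self, mut state: &mut dyn Hasher) {
        // Hash the concrete type together with the value.
        TypeId::of::<T>().hash(&mut state);
        self.hash(&mut state);
    }
}

// Let boxed labels live in hash-based collections.
impl PartialEq for dyn Label {
    fn eq(&self, other: &Self) -> bool {
        self.dyn_eq(other)
    }
}

impl Eq for dyn Label {}

impl Hash for dyn Label {
    fn hash<H: Hasher>(&self, state: &mut H) {
        self.dyn_hash(state);
    }
}

// Hypothetical label types: both enums have a variant printed as "Tonemapping".
#[derive(Debug, Hash, PartialEq, Eq)]
enum Pass2d {
    Tonemapping,
}

#[derive(Debug, Hash, PartialEq, Eq)]
enum Pass3d {
    Tonemapping,
}

#[derive(Debug, Hash, PartialEq, Eq)]
struct MyRenderLabel;

/// Collects labels into a set keyed by (type, value) rather than by a name string.
fn label_set(labels: Vec<Box<dyn Label>>) -> HashSet<Box<dyn Label>> {
    labels.into_iter().collect()
}
```

With strings, two nodes both called `"tonemapping"` would collide; here the set keeps `Pass2d::Tonemapping`, `Pass3d::Tonemapping`, and `MyRenderLabel` as three separate entries and only deduplicates a true repeat of the same typed value.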

---

## Changelog

* Two new label traits: `RenderLabel` and `RenderSubGraph`.
* Replaced all uses of hardcoded strings with those labels.
* Moved the `Taa` label from its own mod into `Labels3d`, alongside all the other 3d labels.
* `add_render_graph_edges` now takes a tuple of labels.
* Moved the `ScreenSpaceAmbientOcclusion` label out of its own mod and, together with the `ShadowPass` label, into `LabelsPbr`.
* Removed `NodeId`.
* Renamed `Edges.id()` to `Edges.label()`.
* Removed `NodeLabel`.
* Changed examples according to the new label system.
* Introduced new `RenderLabel`s: `Labels2d`, `Labels3d`, `LabelsPbr`, `LabelsUi`.
* Introduced new `RenderSubGraph`s: `SubGraph2d`, `SubGraph3d`, `SubGraphUi`.
* Removed the `Reflect` and `Default` derives from the `CameraRenderGraph` component struct.
* Improved some error messages.

## Migration Guide

For nodes and subgraphs, instead of using hardcoded strings, you now pass labels, which can be derived on structs and enums.

```rs
// old
#[derive(Default)]
struct MyRenderNode;

impl MyRenderNode {
    pub const NAME: &'static str = "my_render_node";
}

render_app
    .add_render_graph_node::<ViewNodeRunner<MyRenderNode>>(
        core_3d::graph::NAME,
        MyRenderNode::NAME,
    )
    .add_render_graph_edges(
        core_3d::graph::NAME,
        &[
            core_3d::graph::node::TONEMAPPING,
            MyRenderNode::NAME,
            core_3d::graph::node::END_MAIN_PASS_POST_PROCESSING,
        ],
    );

// new
use bevy::core_pipeline::core_3d::graph::{Labels3d, SubGraph3d};
use bevy::render::render_graph::RenderLabel;

#[derive(Debug, Hash, PartialEq, Eq, Clone, RenderLabel)]
pub struct MyRenderLabel;

#[derive(Default)]
struct MyRenderNode;

render_app
    .add_render_graph_node::<ViewNodeRunner<MyRenderNode>>(
        SubGraph3d,
        MyRenderLabel,
    )
    .add_render_graph_edges(
        SubGraph3d,
        (
            Labels3d::Tonemapping,
            MyRenderLabel,
            Labels3d::EndMainPassPostProcessing,
        ),
    );
```
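Both the old slice and the new tuple describe a chain: each label gets an edge to the one after it, so the three labels above yield `Tonemapping -> MyRenderLabel` and `MyRenderLabel -> EndMainPassPostProcessing`. A minimal std-only sketch of that pairing, where `chain_edges` is a hypothetical helper and plain `&str` values stand in for labels (this is not Bevy's implementation):

```rust
/// Turns an ordered list of labels into the consecutive `(from, to)` edges
/// that a chained edge call describes: [a, b, c] -> [(a, b), (b, c)].
fn chain_edges<L: Clone>(labels: &[L]) -> Vec<(L, L)> {
    labels
        .windows(2)
        .map(|pair| (pair[0].clone(), pair[1].clone()))
        .collect()
}
```

A list with fewer than two labels produces no edges, since `windows(2)` yields nothing for it.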

### SubGraphs

#### in `bevy_core_pipeline::core_2d::graph`

| old string-based path | new label |
|-----------------------|-----------|
| `NAME` | `SubGraph2d` |

#### in `bevy_core_pipeline::core_3d::graph`

| old string-based path | new label |
|-----------------------|-----------|
| `NAME` | `SubGraph3d` |

#### in `bevy_ui::render`

| old string-based path | new label |
|-----------------------|-----------|
| `draw_ui_graph::NAME` | `graph::SubGraphUi` |

### Nodes

#### in `bevy_core_pipeline::core_2d::graph`

| old string-based path | new label |
|-----------------------|-----------|
| `node::MSAA_WRITEBACK` | `Labels2d::MsaaWriteback` |
| `node::MAIN_PASS` | `Labels2d::MainPass` |
| `node::BLOOM` | `Labels2d::Bloom` |
| `node::TONEMAPPING` | `Labels2d::Tonemapping` |
| `node::FXAA` | `Labels2d::Fxaa` |
| `node::UPSCALING` | `Labels2d::Upscaling` |
| `node::CONTRAST_ADAPTIVE_SHARPENING` | `Labels2d::ConstrastAdaptiveSharpening` |
| `node::END_MAIN_PASS_POST_PROCESSING` | `Labels2d::EndMainPassPostProcessing` |

#### in `bevy_core_pipeline::core_3d::graph`

| old string-based path | new label |
|-----------------------|-----------|
| `node::MSAA_WRITEBACK` | `Labels3d::MsaaWriteback` |
| `node::PREPASS` | `Labels3d::Prepass` |
| `node::DEFERRED_PREPASS` | `Labels3d::DeferredPrepass` |
| `node::COPY_DEFERRED_LIGHTING_ID` | `Labels3d::CopyDeferredLightingId` |
| `node::END_PREPASSES` | `Labels3d::EndPrepasses` |
| `node::START_MAIN_PASS` | `Labels3d::StartMainPass` |
| `node::MAIN_OPAQUE_PASS` | `Labels3d::MainOpaquePass` |
| `node::MAIN_TRANSMISSIVE_PASS` | `Labels3d::MainTransmissivePass` |
| `node::MAIN_TRANSPARENT_PASS` | `Labels3d::MainTransparentPass` |
| `node::END_MAIN_PASS` | `Labels3d::EndMainPass` |
| `node::BLOOM` | `Labels3d::Bloom` |
| `node::TONEMAPPING` | `Labels3d::Tonemapping` |
| `node::FXAA` | `Labels3d::Fxaa` |
| `node::UPSCALING` | `Labels3d::Upscaling` |
| `node::CONTRAST_ADAPTIVE_SHARPENING` | `Labels3d::ContrastAdaptiveSharpening` |
| `node::END_MAIN_PASS_POST_PROCESSING` | `Labels3d::EndMainPassPostProcessing` |

#### in `bevy_core_pipeline`

| old string-based path | new label |
|-----------------------|-----------|
| `taa::draw_3d_graph::node::TAA` | `Labels3d::Taa` |

#### in `bevy_pbr`

| old string-based path | new label |
|-----------------------|-----------|
| `draw_3d_graph::node::SHADOW_PASS` | `LabelsPbr::ShadowPass` |
| `ssao::draw_3d_graph::node::SCREEN_SPACE_AMBIENT_OCCLUSION` | `LabelsPbr::ScreenSpaceAmbientOcclusion` |
| `deferred::DEFFERED_LIGHTING_PASS` | `LabelsPbr::DeferredLightingPass` |

#### in `bevy_render`

| old string-based path | new label |
|-----------------------|-----------|
| `main_graph::node::CAMERA_DRIVER` | `graph::CameraDriverLabel` |

#### in `bevy_ui::render`

| old string-based path | new label |
|-----------------------|-----------|
| `draw_ui_graph::node::UI_PASS` | `graph::LabelsUi::UiPass` |

---

## Future work

* Make `NodeSlot`s use types as well. Ideally, there would be an enum with unit variants where each variant represents one slot. To make sure you are using the right slot enum, and to make rust-analyzer play nicely with it, we should add an associated type to the `Node` trait. With today's system, third-party slots can be introduced to a node, and I wasn't sure whether this was used, so I didn't do this in this PR.
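A minimal sketch of that idea, assuming a simplified, hypothetical `Node` trait (not Bevy's actual trait) whose associated `Slot` type is a per-node enum; `BlurNode`, `BlurSlot`, and `has_input` are illustrative names only:

```rust
use std::fmt::Debug;

/// Hypothetical simplified node trait: each node declares its own slot enum.
trait Node {
    type Slot: Copy + Eq + Debug;

    /// Input slots this node expects, expressed as enum variants instead of strings.
    fn input(&self) -> Vec<Self::Slot>;
}

/// Hypothetical slot enum: one unit variant per slot.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum BlurSlot {
    Source,
    Mask,
}

struct BlurNode;

impl Node for BlurNode {
    type Slot = BlurSlot;

    fn input(&self) -> Vec<BlurSlot> {
        vec![BlurSlot::Source, BlurSlot::Mask]
    }
}

/// Only accepts slots of the node's own `Slot` type, so passing
/// another node's slot enum is a compile error rather than a runtime lookup failure.
fn has_input<N: Node>(node: &N, slot: N::Slot) -> bool {
    node.input().contains(&slot)
}
```

The trade-off mentioned above shows up directly: a closed enum per node rules out third-party code attaching extra slots to an existing node.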

## Unresolved Questions

When looking at the `post_processing` example, we have one struct for the label and another struct for the node. This seems like boilerplate, and on Discord @IceSentry (sorry for the ping) [asked](https://discord.com/channels/691052431525675048/743663924229963868/1175197016947699742) whether a node could automatically introduce a label (or I completely misunderstood that). The problem is that nodes like `EmptyNode` appear multiple times *inside the same* (sub)graph, so we need external labels to distinguish between them. Hopefully we can find a way to reduce boilerplate while still keeping everything unique. For `EmptyNode`, we could maybe write a macro that implements an "empty node" for a type, but for nodes that contain code and need to be present multiple times, this could get nasty...
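One way the macro idea could look, as a hypothetical std-only sketch (the `Node` trait, the `empty_node!` macro, and the `EmptyStart`/`EmptyEnd` names are illustrative, not Bevy APIs): each invocation defines a distinct unit type that can double as its own label and implements a node that does nothing, so several empty nodes can coexist in one graph without a separate label struct for each.

```rust
/// Hypothetical minimal node trait; `run` does the node's work.
trait Node {
    fn run(&self) -> Result<(), String>;
}

/// Hypothetical macro: defines a unit struct usable as a label
/// and implements a node for it that does nothing.
macro_rules! empty_node {
    ($name:ident) => {
        #[derive(Debug, Default, Clone, Copy, Hash, PartialEq, Eq)]
        struct $name;

        impl Node for $name {
            fn run(&self) -> Result<(), String> {
                Ok(())
            }
        }
    };
}

// Two empty "sync point" nodes for the same graph, each with its own type identity.
empty_node!(EmptyStart);
empty_node!(EmptyEnd);
```

This only helps for nodes without behavior; as noted above, nodes that carry code and appear several times would still need a distinct label per instance.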

70a592f31a

Update to wgpu 0.18 (#10266)

# Objective

Keep up to date with wgpu.

## Solution

Update the wgpu version.

Currently blocked on naga_oil updating to naga 0.14 and releasing a new version.

3d scenes (or maybe any scene with lighting?) currently don't render anything due to:

```
error: naga_oil bug, please file a report: composer failed to build a valid header: Type [2] '' is invalid = Capability Capabilities(CUBE_ARRAY_TEXTURES) is required
```

I'm not sure what should be passed in for `wgpu::InstanceFlags`, or whether we want to make the gles3minorversion configurable (might be useful for debugging?).

Currently blocked on https://github.com/bevyengine/naga_oil/pull/63 and https://github.com/gfx-rs/wgpu/issues/4569 being fixed upstream in wgpu first.

## Known issues

AMD + Windows + Vulkan has issues with texture_binding_arrays (see the image [here](https://github.com/bevyengine/bevy/pull/10266#issuecomment-1819946278)), but that will be fixed in the next wgpu/naga version, and you can use DX12 as a workaround for now (AMD + Linux Mesa + Vulkan texture_binding_arrays are fixed, though).

---

## Changelog

Updated wgpu to 0.18, naga to 0.14.2, and naga_oil to 0.11.

- Windows desktop GL should now be less painful, as it no longer requires ANGLE.
- You can now toggle shader validation and debug information for debug and release builds using `WgpuSettings.instance_flags` and [InstanceFlags](https://docs.rs/wgpu/0.18.0/wgpu/struct.InstanceFlags.html).

## Migration Guide

- `RenderPassDescriptor` `color_attachments` (as well as `RenderPassColorAttachment` and `RenderPassDepthStencilAttachment`) now use `StoreOp::Store` or `StoreOp::Discard` instead of a `boolean` to declare whether or not they should be stored.
- `RenderPassDescriptor` now has `timestamp_writes` and `occlusion_query_set` fields. These can safely be set to `None`.
- `ComputePassDescriptor` now has a `timestamp_writes` field. This can be set to `None` for now.
- See the [wgpu changelog](https://github.com/gfx-rs/wgpu/blob/trunk/CHANGELOG.md#v0180-2023-10-25) for additional details.
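For code that previously passed a `bool`, the store-op migration is a direct mapping: `true` becomes `StoreOp::Store` and `false` becomes `StoreOp::Discard`. A tiny sketch of that mapping, using a local `StoreOp` enum as a stand-in that mirrors only the two wgpu 0.18 variants named above (it is not the wgpu type, so the snippet compiles without the crate; `store_op_from_bool` is a hypothetical helper):

```rust
/// Local stand-in mirroring the two variants of wgpu 0.18's `StoreOp`
/// (illustration only; use `wgpu::StoreOp` in real code).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum StoreOp {
    Store,
    Discard,
}

/// Converts the pre-0.18 `store: bool` flag into the enum form.
fn store_op_from_bool(store: bool) -> StoreOp {
    if store {
        StoreOp::Store
    } else {
        StoreOp::Discard
    }
}
```

In real wgpu 0.18 code, the resulting value goes into the `store` field of `wgpu::Operations`.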

75da2e7adf

Disable camera on window close (#8802)

# Objective

- When a window is closed, the associated camera keeps rendering even though its RenderTarget is no longer valid.
- This wastes a lot of performance.

## Solution

- Detect the window close event and disable any camera that used the window as its RenderTarget.

## Notes

It's possible a similar thing could be done for cameras that use an image handle, but I would fix that in a separate PR.
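A std-only sketch of the solution, using hypothetical stand-in types (`Camera`, `Target`, and plain `u32` window ids) rather than Bevy's ECS: on a close event, every active camera whose target is that window is deactivated, while cameras targeting other windows or images are left alone.

```rust
/// Hypothetical stand-in for a camera's render target.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Target {
    Window(u32),
    Image(u32),
}

/// Hypothetical stand-in for a camera component.
#[derive(Debug)]
struct Camera {
    target: Target,
    is_active: bool,
}

/// Disables every active camera rendering to one of the closed windows.
/// Returns how many cameras were switched off.
fn disable_cameras_on_window_close(cameras: &mut [Camera], closed_windows: &[u32]) -> usize {
    let mut disabled = 0;
    for camera in cameras.iter_mut() {
        if let Target::Window(id) = camera.target {
            if camera.is_active && closed_windows.contains(&id) {
                camera.is_active = false;
                disabled += 1;
            }
        }
    }
    disabled
}
```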

9db70da96f

Add screenshot api (#7163)

Fixes https://github.com/bevyengine/bevy/issues/1207

# Objective

Right now, it's impossible to capture a screenshot of the entire window without forking bevy. This is because:

- The swapchain texture never has the COPY_SRC usage
- It can't be accessed without taking ownership of it
- Taking ownership of it breaks *a lot* of stuff

## Solution

- Introduce a dedicated API for taking a screenshot of a given bevy window, and guarantee that this screenshot always matches what gets put on the screen.

---

## Changelog

- Added the `ScreenshotManager` resource with two functions, `take_screenshot` and `save_screenshot_to_disk`

2c21d423fd

Make render graph slots optional for most cases (#8109)

# Objective

- Currently, the render graph slots are only used to pass the view_entity around. This introduces significant boilerplate for very little value. Instead of using slots for this, make the view_entity part of the `RenderGraphContext`. This also means we won't need `IN_VIEW` on every node, and we'll be able to use the default impl of `Node::input()`.

## Solution

- Add `view_entity: Option<Entity>` to the `RenderGraphContext`

- Update all nodes to use this instead of entity slot input

---

## Changelog

- Add optional `view_entity` to `RenderGraphContext`

## Migration Guide

You can now get the view_entity directly from the `RenderGraphContext`.

When implementing the Node:

```rust
// 0.10
struct FooNode;

impl FooNode {
    const IN_VIEW: &'static str = "view";
}

impl Node for FooNode {
    fn input(&self) -> Vec<SlotInfo> {
        vec![SlotInfo::new(Self::IN_VIEW, SlotType::Entity)]
    }

    fn run(
        &self,
        graph: &mut RenderGraphContext,
        // ...
    ) -> Result<(), NodeRunError> {
        let view_entity = graph.get_input_entity(Self::IN_VIEW)?;
        // ...
        Ok(())
    }
}

// 0.11
struct FooNode;

impl Node for FooNode {
    fn run(
        &self,
        graph: &mut RenderGraphContext,
        // ...
    ) -> Result<(), NodeRunError> {
        let view_entity = graph.view_entity();
        // ...
        Ok(())
    }
}
```

When adding the node to the graph, you don't need to specify a slot_edge for the view_entity.

```rust
// 0.10
let mut graph = RenderGraph::default();
graph.add_node(FooNode::NAME, node);
let input_node_id = graph.set_input(vec![SlotInfo::new(
    graph::input::VIEW_ENTITY,
    SlotType::Entity,
)]);
graph.add_slot_edge(
    input_node_id,
    graph::input::VIEW_ENTITY,
    FooNode::NAME,
    FooNode::IN_VIEW,
);
// add_node_edge ...

// 0.11
let mut graph = RenderGraph::default();
graph.add_node(FooNode::NAME, node);
// add_node_edge ...
```

## Notes

This PR, paired with #8007, will help reduce a lot of annoying boilerplate in the render nodes. Depending on which one gets merged first, it will require a bit of cleanup work to make both compatible.

I tagged this as a breaking change because getting the view_entity through the old system no longer works: it's not a node input slot anymore.

## Notes for reviewers

A lot of the diffs are just removing the slots from every node and from graph creation. The important part is mostly in the graph_runner/CameraDriverNode.

abcb0661e3

Camera Output Modes, MSAA Writeback, and BlitPipeline (#7671)

# Objective

Alternative to #7490. I wrote all of the code in this PR, but I have added @robtfm as co-author on commits that build on ideas from #7490. I would not have been able to solve these problems on my own without much more time investment, and I'm largely just rephrasing the ideas from that PR.

Fixes #7435
Fixes #7361
Fixes #5721

## Solution

This implements the solution I [outlined here](https://github.com/bevyengine/bevy/pull/7490#issuecomment-1426580633).

* Adds "msaa writeback" as an explicit "msaa camera feature" and defaults to `msaa_writeback: true` for each camera. If this is true, the camera has MSAA enabled, and it isn't the first camera for the target, a writeback is added before that camera's main pass.
* Adds a `CameraOutputMode`, which can be used to configure if (and how) the results of a camera's rendering will be written to the final RenderTarget output texture (via the upscaling node). The `blend_state` and `color_attachment_load_op` are now configurable, giving much more control over how a camera writes to the output texture.
* Made cameras with the same target share the same main_texture tracker by using `Arc<AtomicUsize>`, which ensures continuity across cameras. This was previously broken and could produce weird results in some cases. `ViewTarget::main_texture()` is now correct in every context.
* Added a new generic/specializable `BlitPipeline`, which the new `MsaaWritebackNode` uses internally. The `UpscalingPipelineNode` now uses `BlitPipeline` instead of its own pipeline. We might ultimately need to fork this back out if we choose to add more configurability to the upscaling, but for now this saves on binary size by not embedding the same shader twice.
* Moved the "camera sorting" logic from the camera driver node to its own system. The results are now stored in the `SortedCameras` resource, which can be used anywhere in the renderer. MSAA writeback makes use of this.

---

## Changelog

- Added `Camera::msaa_writeback`, which can enable and disable MSAA writeback.
- Added a specializable `BlitPipeline` and ported the upscaling node to use it.
- Added `SortedCameras`, exposing information that was previously internal to the camera driver node.
- Made cameras with the same target share the same main_texture tracker, which ensures continuity across cameras.
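A std-only sketch of the writeback rule described above, using hypothetical stand-in types (`CameraInfo` and `cameras_needing_writeback` are illustrative, not Bevy's): cameras are first sorted, here simply by an `order` key as a stand-in for the camera sorting system, and a camera gets a writeback only if `msaa_writeback` is on, MSAA is enabled (sample count above 1), and an earlier camera already rendered to the same target.

```rust
use std::collections::HashSet;

/// Hypothetical stand-in for the camera data the rule needs.
#[derive(Debug, Clone)]
struct CameraInfo {
    name: &'static str,
    order: isize,
    target: u32,
    msaa_samples: u32,
    msaa_writeback: bool,
}

/// Returns the names of cameras that need an MSAA writeback before their main pass.
fn cameras_needing_writeback(cameras: &[CameraInfo]) -> Vec<&'static str> {
    // Sort by render order (stand-in for the camera sorting system).
    let mut sorted: Vec<&CameraInfo> = cameras.iter().collect();
    sorted.sort_by_key(|c| c.order);

    let mut seen_targets = HashSet::new();
    let mut result = Vec::new();
    for camera in sorted {
        // `insert` returns false if an earlier camera already used this target.
        let is_first_for_target = seen_targets.insert(camera.target);
        if camera.msaa_writeback && camera.msaa_samples > 1 && !is_first_for_target {
            result.push(camera.name);
        }
    }
    result
}
```

The first camera on a target has nothing to write back, which is why the check depends on the sorted order rather than on each camera alone.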

a85b740f24

Support recording multiple CommandBuffers in RenderContext (#7248)

# Objective

`RenderContext`, the core abstraction for running the render graph, currently only supports recording one `CommandBuffer` across the entire render graph. This means the entire buffer must be recorded sequentially, usually via the render graph itself. This prevents parallelization and forces users to encode their commands only in the render graph.

## Solution

Allow `RenderContext` to store a `Vec<CommandBuffer>` that it progressively appends to. By default, the context will not have a command encoder, but will create one as soon as either `begin_tracked_render_pass` or the `command_encoder` accessor is first called. `RenderContext::add_command_buffer` allows users to interrupt the current command encoder, flush it to the vec, append a user-provided `CommandBuffer`, and reset the command encoder to start a new buffer. Users or the render graph will call `RenderContext::finish` to retrieve the series of buffers for submitting to the queue.

This allows users to encode their own `CommandBuffer`s outside of the render graph, potentially in different threads, and store them in components or resources.

Ideally, in the future, the core pipeline passes can run in `RenderStage::Render` systems and end up saving the completed command buffers to either `Commands` or a field in `RenderPhase`.

## Alternatives

The alternative is to use wgpu's `RenderBundle`s, which can achieve similar results; however, they are not universally available (no OpenGL, WebGL, or DX11).

---

## Changelog

- Added: `RenderContext::new`
- Added: `RenderContext::add_command_buffer`
- Added: `RenderContext::finish`
- Changed: `RenderContext::render_device` is now private. Use the accessor `RenderContext::render_device()` instead.
- Changed: `RenderContext::command_encoder` is now private. Use the accessor `RenderContext::command_encoder()` instead.
- Changed: `RenderContext` now supports adding external `CommandBuffer`s for inclusion into the render graphs. These buffers can be encoded outside of the render graph (e.g. in a system).

## Migration Guide

`RenderContext`'s fields are now private. Use the accessors on `RenderContext` instead, and construct it with `RenderContext::new`.
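A std-only model of that control flow, with hypothetical stand-ins throughout: `RenderContextSketch`, `Encoder`, and `CommandBuffer` here just collect strings (they are not the Bevy or wgpu types), and `record` is an illustrative helper. The encoder is created lazily, `add_command_buffer` flushes the in-progress encoder before appending the external buffer, and `finish` flushes once more and returns the buffers in submission order.

```rust
/// Stand-in for a finished command buffer: just the recorded commands.
#[derive(Debug, Clone, PartialEq)]
struct CommandBuffer(Vec<String>);

/// Stand-in for a command encoder that records commands until finished.
#[derive(Default)]
struct Encoder(Vec<String>);

impl Encoder {
    fn finish(self) -> CommandBuffer {
        CommandBuffer(self.0)
    }
}

#[derive(Default)]
struct RenderContextSketch {
    encoder: Option<Encoder>,
    buffers: Vec<CommandBuffer>,
}

impl RenderContextSketch {
    /// Lazily creates the encoder on first use.
    fn command_encoder(&mut self) -> &mut Encoder {
        self.encoder.get_or_insert_with(Encoder::default)
    }

    /// Hypothetical helper: records one command into the current encoder.
    fn record(&mut self, command: &str) {
        self.command_encoder().0.push(command.to_string());
    }

    /// Flushes the in-progress encoder (if any), then appends an externally recorded buffer.
    fn add_command_buffer(&mut self, buffer: CommandBuffer) {
        self.flush_encoder();
        self.buffers.push(buffer);
    }

    /// Flushes any pending work and returns all buffers in submission order.
    fn finish(mut self) -> Vec<CommandBuffer> {
        self.flush_encoder();
        self.buffers
    }

    fn flush_encoder(&mut self) {
        if let Some(encoder) = self.encoder.take() {
            self.buffers.push(encoder.finish());
        }
    }
}
```

Because the external buffer is appended only after the current encoder is flushed, commands recorded before and after it keep their relative order in the final list.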

ddfafab971

Windows as Entities (#5589)

# Objective

Fix https://github.com/bevyengine/bevy/issues/4530

- Make it easier to open/close/modify windows by setting them up as `Entity`s with a `Window` component.
- Make multiple windows very simple to set up (just add a `Window` component to an entity and it should open).

## Solution

- Move all properties of window descriptor to ~components~ a component.
- Replace `WindowId` with `Entity`.
- ~Use change detection for components to update backend rather than events/commands. (The `CursorMoved`/`WindowResized`/... events are kept for user convenience.~ Check each field individually to see what we need to update; events are still kept for user convenience.

---

## Changelog

- `WindowDescriptor` renamed to `Window`.
- Width/height consolidated into a `WindowResolution` component.
- Requesting maximization/minimization is done on the [`Window::state`] field.
- `WindowId` is now `Entity`.

## Migration Guide

- Replace `WindowDescriptor` with `Window`.
- Move the `width` and `height` fields into a `WindowResolution`, either by doing

  ```rust
  WindowResolution::new(width, height) // Explicitly
  // or using From<_> for tuples for convenience
  (1920., 1080.).into()
  ```

- Replace any `WindowCommand` code by modifying the `Window`'s fields directly. Creating/closing windows is now done by spawning/despawning an entity with a `Window` component, like so:

  ```rust
  let window = commands.spawn(Window { ... }).id(); // open window
  commands.entity(window).despawn(); // close window
  ```

## Unresolved

- ~How do we tell when a window is minimized by a user?~ ~Currently using the `Resize(0, 0)` as an indicator of minimization.~ No longer attempting to tell, given how finicky this was across platforms; now the user can only request that a window be maximized/minimized.

## Future work

- Move `exit_on_close` functionality out from windowing and into app(?)
- https://github.com/bevyengine/bevy/issues/5621
- https://github.com/bevyengine/bevy/issues/7099
- https://github.com/bevyengine/bevy/issues/7098

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

8ad9a7c7c4

Rename camera "priority" to "order" (#6908)

# Objective

The documentation for camera priority is very confusing at the moment; it requires a bit of "double negative" thinking.

# Solution

Flip the wording in the documentation to reflect more common use cases, like having an overlay camera, and rename the field to "order", since "priority" implies that a camera will override the other camera rather than both of them running.

e5905379de

Use new let-else syntax where possible (#6463)

# Objective

Let-else syntax is now stable!

## Solution

Use it where possible!
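For reference, a small std-only example of the pattern (the `Shape` type and function name are illustrative only): `let PATTERN = EXPR else { ... };` binds the pattern's variables when it matches and requires the `else` branch to diverge (`return`, `continue`, `break`, or panic), which replaces the usual `match` or `if let` plus early-exit boilerplate. Let-else was stabilized in Rust 1.65.

```rust
/// Hypothetical shape type used only for this example.
#[derive(Debug)]
enum Shape {
    Circle { radius: f64 },
    Rect { width: f64, height: f64 },
}

/// Sums the diameters of all circles, skipping every other shape.
fn total_circle_diameter(shapes: &[Shape]) -> f64 {
    let mut total = 0.0;
    for shape in shapes {
        // Bind `radius` if this is a circle; otherwise skip to the next shape.
        let Shape::Circle { radius } = shape else {
            continue;
        };
        total += radius * 2.0;
    }
    total
}
```

Unlike `if let`, the bound `radius` stays in scope for the rest of the loop body, so the happy path is not nested one level deeper.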

814f8d1635

update wgpu to 0.13 (#5168)

# Objective

- Update wgpu to 0.13
- ~~Wait, is wgpu 0.13 released? No, but I had most of the changes already ready since playing with webgpu~~ well, it has been released now
- Also update parking_lot to 0.12 and naga to 0.9

## Solution

- Update syntax for WGSL shaders: https://github.com/gfx-rs/wgpu/blob/master/CHANGELOG.md#wgsl-syntax
- Add a few options, remove some references: https://github.com/gfx-rs/wgpu/blob/master/CHANGELOG.md#other-breaking-changes
- Fragment inputs should now exactly match vertex outputs for locations, so I added exports for those to be able to reuse them: https://github.com/gfx-rs/wgpu/pull/2704

f487407e07

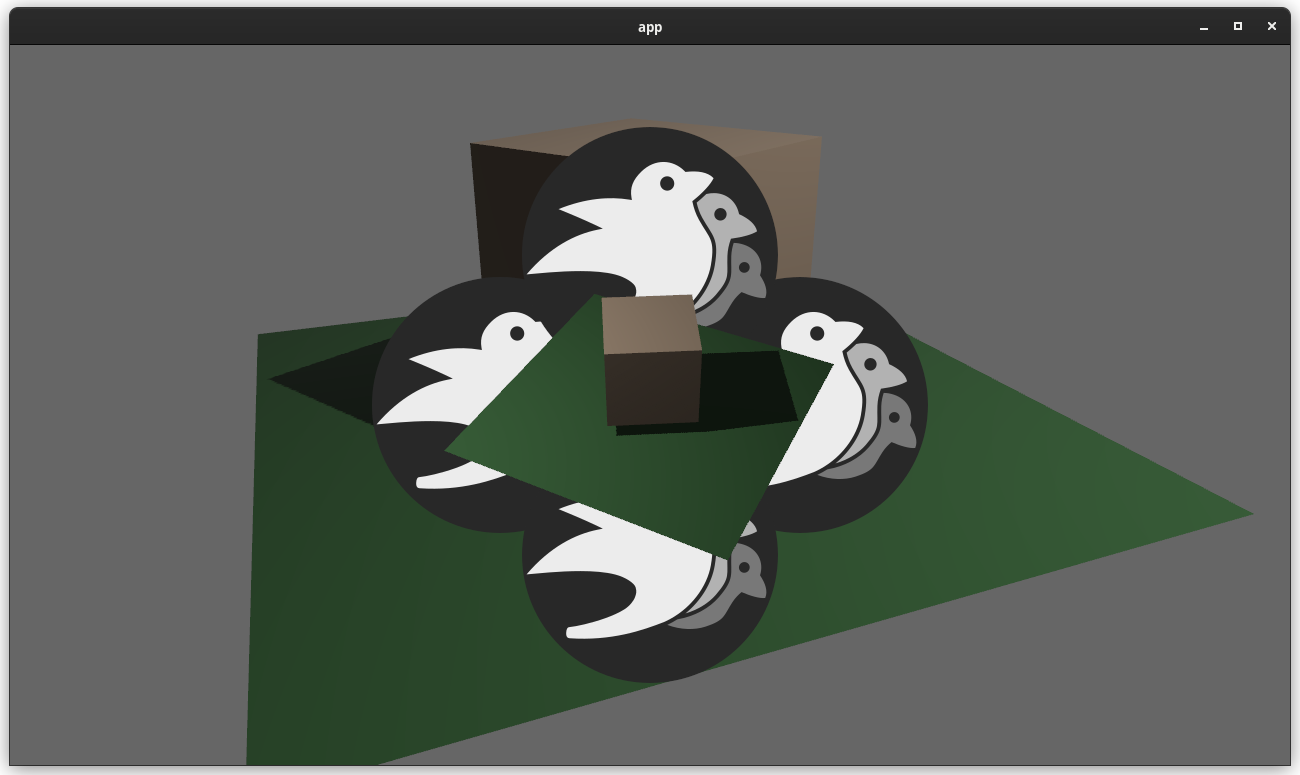

Camera Driven Rendering (#4745)

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915).

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work". Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer two cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }
    .into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components or using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup PR.
* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.
* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.
* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this PR.
* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.
* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:
    1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.
    2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)
* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)
* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).
* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.
* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.
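A std-only sketch of the priority ordering and the ambiguity warning described in this commit, using hypothetical stand-ins (`CameraEntry` records with a `u32` target id and `sort_cameras` are illustrative, not Bevy's camera components or systems): active cameras render in ascending priority, so a higher priority draws on top, and each camera that shares both target and priority with an earlier one is reported as ambiguous.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the data the check needs from each camera.
#[derive(Debug, Clone, Copy)]
struct CameraEntry {
    name: &'static str,
    target: u32,
    priority: isize,
    is_active: bool,
}

/// Returns active camera names in render order (lowest priority first), plus
/// (earlier, later) pairs for each camera whose (target, priority) repeats an earlier one.
fn sort_cameras(cameras: &[CameraEntry]) -> (Vec<&'static str>, Vec<(&'static str, &'static str)>) {
    let mut active: Vec<&CameraEntry> = cameras.iter().filter(|c| c.is_active).collect();
    // Stable sort: equal priorities keep their input order, which is exactly the
    // arbitrary tie-break that makes such cases ambiguous.
    active.sort_by_key(|c| c.priority);

    let mut first_seen: HashMap<(u32, isize), &'static str> = HashMap::new();
    let mut ambiguities = Vec::new();
    for camera in &active {
        let key = (camera.target, camera.priority);
        if let Some(&earlier) = first_seen.get(&key) {
            ambiguities.push((earlier, camera.name));
        } else {
            first_seen.insert(key, camera.name);
        }
    }

    (active.iter().map(|c| c.name).collect(), ambiguities)
}
```

Inactive cameras are filtered out before the check, so a disabled duplicate does not trigger a warning.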