e49527e34d

8 Commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

4dadebd9c4

|

Improve performance by binning together opaque items instead of sorting them. (#12453)

Today, we sort all entities added to all phases, even the phases that don't strictly need sorting, such as the opaque and shadow phases. This results in a performance loss because our `PhaseItem`s are rather large in memory, so sorting is slow. Additionally, determining the boundaries of batches is an O(n) process.

This commit makes Bevy instead place applicable phase items into *bins* keyed by *bin keys*, which have the invariant that everything in the same bin is potentially batchable. This makes determining batch boundaries O(1), because everything in the same bin can be batched. Instead of sorting each entity, we now sort only the bin keys. This drops the sorting time to near-zero on workloads with few bins like `many_cubes --no-frustum-culling`. Memory usage is improved too, with batch boundaries and dynamic indices now implicit instead of explicit. The improved memory usage results in a significant win even on unbatchable workloads like `many_cubes --no-frustum-culling --vary-material-data-per-instance`, presumably due to cache effects.

Not all phases can be binned; some, such as transparent and transmissive phases, must still be sorted. To handle this, this commit splits `PhaseItem` into `BinnedPhaseItem` and `SortedPhaseItem`. Most of the logic that today deals with `PhaseItem`s has been moved to `SortedPhaseItem`. `BinnedPhaseItem` has the new logic.

Frame time results (in ms/frame) are as follows:

| Benchmark | `binning` | `main` | Speedup |
| ------------------------ | --------- | ------- | ------- |
| `many_cubes -nfc -vpi` | 232.179 | 312.123 | 34.43% |
| `many_cubes -nfc` | 25.874 | 30.117 | 16.40% |
| `many_foxes` | 3.276 | 3.515 | 7.30% |

(`-nfc` is short for `--no-frustum-culling`; `-vpi` is short for `--vary-per-instance`.)

---

## Changelog

### Changed

* Render phases have been split into binned and sorted phases. Binned phases, such as the common opaque phase, achieve improved CPU performance by avoiding the sorting step.

## Migration Guide

- `PhaseItem` has been split into `BinnedPhaseItem` and `SortedPhaseItem`. If your code has custom `PhaseItem`s, you will need to migrate them to one of these two types. `SortedPhaseItem` requires the fewest code changes, but you may want to pick `BinnedPhaseItem` if your phase doesn't require sorting, as that enables higher performance.

## Tracy graphs

`many_cubes --no-frustum-culling`, `main` branch:

<img width="1064" alt="Screenshot 2024-03-12 180037" src="https://github.com/bevyengine/bevy/assets/157897/e1180ce8-8e89-46d2-85e3-f59f72109a55">

`many_cubes --no-frustum-culling`, this branch:

<img width="1064" alt="Screenshot 2024-03-12 180011" src="https://github.com/bevyengine/bevy/assets/157897/0899f036-6075-44c5-a972-44d95895f46c">

You can see that `batch_and_prepare_binned_render_phase` is a much smaller fraction of the time. Zooming in on that function, with yellow being this branch and red being `main`, we see:

<img width="1064" alt="Screenshot 2024-03-12 175832" src="https://github.com/bevyengine/bevy/assets/157897/0dfc8d3f-49f4-496e-8825-a66e64d356d0">

The binning happens in `queue_material_meshes`. Again with yellow being this branch and red being `main`:

<img width="1064" alt="Screenshot 2024-03-12 175755" src="https://github.com/bevyengine/bevy/assets/157897/b9b20dc1-11c8-400c-a6cc-1c2e09c1bb96">

We can see that there is a small regression in `queue_material_meshes` performance, but it's not nearly enough to outweigh the large gains in `batch_and_prepare_binned_render_phase`.

---------

Co-authored-by: James Liu <contact@jamessliu.com> |
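The binning idea in the commit message above can be sketched with the standard library alone. This is a minimal illustration, not Bevy's actual data structures: `BinKey`, `Entity`, and `bin_items` are hypothetical names, and a `BTreeMap` is used here simply because it keeps the keys ordered without ever sorting individual items.

```rust
use std::collections::BTreeMap;

/// Hypothetical stand-in for an entity ID.
type Entity = u32;

/// Hypothetical bin key: items sharing a key are assumed to be batchable together.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
struct BinKey {
    pipeline: u32,
    material: u32,
}

/// Groups entities by bin key. Only the keys are kept in order;
/// the entities inside a bin stay in insertion order and are never sorted.
fn bin_items(items: &[(BinKey, Entity)]) -> BTreeMap<BinKey, Vec<Entity>> {
    let mut bins: BTreeMap<BinKey, Vec<Entity>> = BTreeMap::new();
    for &(key, entity) in items {
        bins.entry(key).or_default().push(entity);
    }
    bins
}

fn main() {
    let a = BinKey { pipeline: 0, material: 1 };
    let b = BinKey { pipeline: 0, material: 2 };
    let items = [(b, 10), (a, 11), (b, 12), (a, 13)];

    // Each bin is one candidate batch, so no per-item boundary scan is needed.
    for (key, entities) in &bin_items(&items) {
        println!("{key:?}: {entities:?}");
    }
}
```

With few distinct keys, the ordering cost depends on the number of bins rather than the number of entities, which matches the near-zero sorting time reported for `many_cubes --no-frustum-culling`.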

||

|

|

b35974010b

|

Get Bevy building for WebAssembly with multithreading (#12205)

# Objective

This gets Bevy building on Wasm when the `atomics` flag is enabled. This does not yet multithread Bevy itself, but it allows Bevy users to use a crate like `wasm_thread` to spawn their own threads and manually parallelize work. This is a first step towards resolving #4078. Also fixes #9304.

This provides a foothold so that Bevy contributors can begin to think about multithreaded Wasm's constraints and Bevy can work towards changes to get the engine itself multithreaded.

Some flags need to be set on the Rust compiler when compiling for Wasm multithreading. Here's what my build script looks like, with the correct flags set, to test out Bevy examples on web:

```bash
set -e
RUSTFLAGS='-C target-feature=+atomics,+bulk-memory,+mutable-globals' \
    cargo build --example breakout --target wasm32-unknown-unknown -Z build-std=std,panic_abort --release
wasm-bindgen --out-name wasm_example \
    --out-dir examples/wasm/target \
    --target web target/wasm32-unknown-unknown/release/examples/breakout.wasm
devserver --header Cross-Origin-Opener-Policy='same-origin' --header Cross-Origin-Embedder-Policy='require-corp' --path examples/wasm
```

A few notes:

1. `cpal` crashes immediately when the `atomics` flag is set. That is patched in https://github.com/RustAudio/cpal/pull/837, but not yet in the latest crates.io release. That can be temporarily worked around by patching `cpal` like so:

```toml
[patch.crates-io]
cpal = { git = "https://github.com/RustAudio/cpal" }
```

2. When testing out `wasm_thread` you need to enable the `es_modules` feature.

## Solution

The largest obstacle to compiling Bevy with `atomics` on web is that `wgpu` types are _not_ Send and Sync. Longer term Bevy will need an approach to handle that, but in the near term Bevy is already configured to be single-threaded on web.

Therefore it is enough to wrap `wgpu` types in a `send_wrapper::SendWrapper` that _is_ Send / Sync, but panics if accessed off the `wgpu` thread.

---

## Changelog

- `wgpu` types that are not `Send` are wrapped in `send_wrapper::SendWrapper` on Wasm + 'atomics'
- CommandBuffers are not generated in parallel on Wasm + 'atomics'

## Questions

- Bevy should probably add CI checks to make sure this doesn't regress. Should that go in this PR or a separate PR? **Edit:** Added checks to build Wasm with atomics

---------

Co-authored-by: François <mockersf@gmail.com>
Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>
Co-authored-by: daxpedda <daxpedda@gmail.com>
Co-authored-by: François <francois.mockers@vleue.com> |
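To make the "is Send / Sync, but panics if accessed off the owning thread" idea concrete, here is a minimal std-only sketch. `ThreadBound` and `off_thread_access_panics` are hypothetical names, and this is not the `send_wrapper` crate's implementation. In particular, this sketch silently leaks the value when dropped off-thread, which is an assumption made to keep it short, and the real crate may behave differently.

```rust
use std::mem::ManuallyDrop;
use std::rc::Rc;
use std::sync::Arc;
use std::thread::{self, ThreadId};

/// Hypothetical wrapper that illustrates the idea only.
struct ThreadBound<T> {
    value: ManuallyDrop<T>,
    owner: ThreadId,
}

// SAFETY: `value` is only reachable through `get` and `drop`, and both touch
// it only on the thread that created the wrapper.
unsafe impl<T> Send for ThreadBound<T> {}
// SAFETY: same argument as for `Send`; `get` never hands out `&T` off-thread.
unsafe impl<T> Sync for ThreadBound<T> {}

impl<T> ThreadBound<T> {
    fn new(value: T) -> Self {
        Self { value: ManuallyDrop::new(value), owner: thread::current().id() }
    }

    /// Panics when called from any thread other than the creating one.
    fn get(&self) -> &T {
        assert_eq!(thread::current().id(), self.owner, "accessed off the owning thread");
        &self.value
    }
}

impl<T> Drop for ThreadBound<T> {
    fn drop(&mut self) {
        if thread::current().id() == self.owner {
            // SAFETY: this is the only place `value` is dropped, and it runs at most once.
            unsafe { ManuallyDrop::drop(&mut self.value) };
        }
        // Off-thread, the value is leaked instead of being dropped on the wrong thread.
    }
}

/// Returns true if reading the wrapper from a freshly spawned thread panics.
fn off_thread_access_panics(wrapped: Arc<ThreadBound<Rc<i32>>>) -> bool {
    thread::spawn(move || {
        let _ = wrapped.get();
    })
    .join()
    .is_err()
}

fn main() {
    // `Rc` is neither `Send` nor `Sync`, so it stands in for a thread-bound type.
    let wrapped = Arc::new(ThreadBound::new(Rc::new(5)));
    println!("on owner thread: {}", wrapped.get());
    println!("off-thread access panics: {}", off_thread_access_panics(Arc::clone(&wrapped)));
}
```

The wrapper lets the containing types satisfy `Send + Sync` bounds at compile time while turning any actual cross-thread access into a runtime panic, which is acceptable as long as the renderer stays single-threaded on web.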

||

|

|

6d547d7ce6

|

Allow Mesh-related queue phase systems to parallelize (#11804)

# Objective

Partially addresses #3548. `queue_shadows` and `queue_material_meshes` cannot parallelize because of the `ResMut<RenderMeshInstances>` parameter for `queue_material_meshes`.

## Solution

Change the `material_bind_group` field to use atomics instead of needing full mutable access. Change the `ResMut` to a `Res`, which should allow both sets of systems to parallelize without issue.

## Performance

Tested against `many_foxes`, this has a significant improvement over the entire render schedule. (Yellow is this PR, red is main)

The use of atomics does seem to have a negative effect on `queue_material_meshes` (roughly an 8.29% increase in time spent in the system).

`queue_shadows` seems to be ever so slightly slower (1.6% more time spent in the system).

`batch_and_prepare_render_phase` seems to be a mix, but overall seems to be slightly *faster* by about 5%. |
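The switch from exclusive to shared access can be illustrated without Bevy. In this sketch, `MeshInstance` and its fields are hypothetical, and the two functions only borrow the names of the systems mentioned above; their bodies are placeholders. The point is that a field stored as an atomic can be written through `&self`, so two threads can hold shared references to the same data at once.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::thread;

/// Hypothetical per-mesh record; the field names are illustrative.
struct MeshInstance {
    shadow_caster: bool,
    material_bind_group_id: AtomicU32,
}

/// Writes a bind group ID into every instance through a shared reference.
fn queue_material_meshes(instances: &[MeshInstance]) {
    for (index, instance) in instances.iter().enumerate() {
        instance
            .material_bind_group_id
            .store(index as u32 + 1, Ordering::Relaxed);
    }
}

/// Reads unrelated data from the same instances.
fn queue_shadows(instances: &[MeshInstance]) -> usize {
    instances.iter().filter(|instance| instance.shadow_caster).count()
}

/// Runs both functions concurrently; neither needs `&mut` access.
fn run_both(instances: &[MeshInstance]) -> usize {
    thread::scope(|scope| {
        let materials = scope.spawn(|| queue_material_meshes(instances));
        let shadows = scope.spawn(|| queue_shadows(instances));
        materials.join().unwrap();
        shadows.join().unwrap()
    })
}

fn main() {
    let instances: Vec<MeshInstance> = (0..4)
        .map(|i| MeshInstance {
            shadow_caster: i % 2 == 0,
            material_bind_group_id: AtomicU32::new(0),
        })
        .collect();
    let shadow_casters = run_both(&instances);
    println!("shadow casters: {shadow_casters}");
}
```

The trade-off reported in the performance notes is consistent with this shape: atomic stores cost somewhat more than plain writes, but removing the exclusive borrow lets the scheduler overlap the two systems.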

||

|

|

e67cfdf82b

|

Enable clippy::undocumented_unsafe_blocks warning across the workspace (#10646)

# Objective

Enables warning on `clippy::undocumented_unsafe_blocks` across the workspace rather than only in `bevy_ecs`, `bevy_transform` and `bevy_utils`. This adds a little awkwardness in a few areas of code that have trivial safety or that explain safety for multiple unsafe blocks with one comment; however, it automatically prevents these comments from being missed.

## Solution

This adds `undocumented_unsafe_blocks = "warn"` to the workspace `Cargo.toml` and fixes / adds a few missed safety comments. I also added `#[allow(clippy::undocumented_unsafe_blocks)]` where the safety is explained somewhere above.

There are a couple of safety comments I added that I'm not 100% sure about in `bevy_animation` and `bevy_render/src/view`, and I'm not sure about the use of `#[allow(clippy::undocumented_unsafe_blocks)]` compared to adding comments like `// SAFETY: See above`. |
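For readers unfamiliar with the lint, the following sketch shows the comment shape it looks for. `first_byte` is a hypothetical function written for this illustration and is not taken from the Bevy codebase; the attribute on it opts this one item into the warning, whereas the commit enables it workspace-wide.

```rust
/// Returns the first byte of a slice, or `None` if the slice is empty.
#[warn(clippy::undocumented_unsafe_blocks)]
fn first_byte(bytes: &[u8]) -> Option<u8> {
    if bytes.is_empty() {
        return None;
    }
    // SAFETY: the slice was just checked to be non-empty, so index 0 is in bounds.
    Some(unsafe { *bytes.get_unchecked(0) })
}

fn main() {
    println!("{:?}", first_byte(b"bevy"));
    println!("{:?}", first_byte(&[]));
}
```

Removing the `// SAFETY:` line would make Clippy warn on the `unsafe` block, which is the behavior the commit relies on to keep such comments from being missed.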

||

|

|

8f81be9845 |

remove potential ub in render_resource_wrapper (#7279)

# Objective [as noted](https://github.com/bevyengine/bevy/pull/5950#discussion_r1080762807) by james, transmuting arcs may be UB. we now store a `*const ()` pointer internally, and only rely on `ptr.cast::<()>().cast::<T>() == ptr`. as a happy side effect this removes the need for boxing the value, so todo: potentially use this for release mode as well |
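The pointer round trip described above can be sketched as follows. `ErasedArc` is a hypothetical type for illustration, not the macro-generated wrapper in `bevy_render`. It stores the result of `Arc::into_raw` as `*const ()` and casts back on the way out, and the caller takes on the obligation to name the original type.

```rust
use std::sync::Arc;

/// Hypothetical type-erased handle; it leaks the value if never restored.
struct ErasedArc {
    ptr: *const (),
}

impl ErasedArc {
    /// Consumes the `Arc` and keeps only an untyped pointer to its contents.
    fn new<T>(value: Arc<T>) -> Self {
        Self { ptr: Arc::into_raw(value).cast::<()>() }
    }

    /// Rebuilds the original `Arc`.
    ///
    /// # Safety
    /// `T` must be exactly the type that was passed to `new`.
    unsafe fn into_arc<T>(self) -> Arc<T> {
        // SAFETY: `ptr` came from `Arc::<T>::into_raw` (caller's contract), and
        // casting a thin pointer to `*const ()` and back yields the same pointer.
        unsafe { Arc::from_raw(self.ptr.cast::<T>()) }
    }
}

fn main() {
    let original = Arc::new(String::from("pipeline"));
    let erased = ErasedArc::new(original);
    // SAFETY: the handle was created from an `Arc<String>` just above.
    let restored: Arc<String> = unsafe { erased.into_arc::<String>() };
    println!("{restored}");
}
```

Unlike a transmute of the `Arc` itself, this relies only on the documented `into_raw` / `from_raw` pairing and on pointer casts.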

||

|

|

bef9bc1844 |

Reduce branching in TrackedRenderPass (#7053)

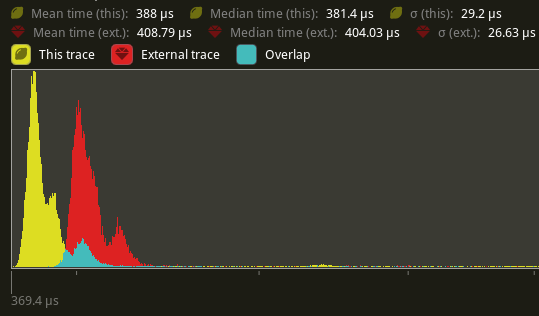

# Objective

Speed up the render phase for rendering.

## Solution

- Follow up #6988 and make the internals of atomic IDs `NonZeroU32`. This niches the `Option`s of the IDs in draw state, which reduces the size and branching behavior when evaluating for equality.
- Require `&RenderDevice` to get the device's `Limits` when initializing a `TrackedRenderPass` to preallocate the bind groups and vertex buffer state in `DrawState`; this removes the branch on needing to resize those `Vec`s.

## Performance

This produces a speedup similar to that of #6885. This shows an approximate 6% speedup in `main_opaque_pass_3d` on `many_foxes` (408.79 us -> 388 us). This should be orthogonal to the gains seen there.

---

## Changelog

Added: `RenderContext::begin_tracked_render_pass`.
Changed: `TrackedRenderPass` now requires a `&RenderDevice` on construction.
Removed: `bevy_render::render_phase::DrawState`. It was not usable in any form outside of `bevy_render`.

## Migration Guide

TODO |
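The niche optimization mentioned in the first solution bullet can be observed directly with the standard library. `BindGroupId` below is a hypothetical newtype for illustration, not the actual Bevy ID type.

```rust
use std::mem::size_of;
use std::num::NonZeroU32;

/// Hypothetical ID type; zero is never a valid ID.
#[repr(transparent)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct BindGroupId(NonZeroU32);

fn main() {
    // `None` can be stored as the otherwise-impossible value 0, so no separate tag is needed.
    println!("Option<BindGroupId>: {} bytes", size_of::<Option<BindGroupId>>());
    // A plain `u32` has no spare bit pattern, so the `Option` needs extra space for a tag.
    println!("Option<u32>: {} bytes", size_of::<Option<u32>>());

    let bound: Option<BindGroupId> = NonZeroU32::new(7).map(BindGroupId);
    let unbound: Option<BindGroupId> = None;
    println!("same binding: {}", bound == unbound);
}
```

Because the `Option` has the same size as the bare integer, storing optional IDs in draw state takes less memory than it would with a tagged `Option<u32>`.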

||

|

|

965ebeff59 |

Replace UUID based IDs with atomic-counted ones (#6988)

# Objective

- alternative to #2895
- as mentioned in #2535 the uuid based ids in the render module should be replaced with atomic-counted ones

## Solution

- instead of generating a random UUID for each render resource, this implementation increases an atomic counter
- this might be replaced by the ids of wgpu if they expose them directly in the future
- I have not benchmarked this solution yet, but this should be slightly faster in theory.
- Bevymark does not seem to be affected much by this change, which is to be expected.
- Nothing of our API has changed, other than that the IDs have lost their IMO rather insignificant documentation.
- Maybe the documentation could be added back into the macro, but this would complicate the code. |
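A minimal sketch of an atomic-counted ID, assuming a single process-wide counter per ID type. `ResourceId` and `ids_from_threads` are hypothetical names. The commit generates its ID types through a macro, which is not reproduced here.

```rust
use std::collections::HashSet;
use std::sync::atomic::{AtomicU32, Ordering};
use std::thread;

/// Hypothetical render-resource ID backed by a global counter.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct ResourceId(u32);

impl ResourceId {
    /// Hands out the next ID. The counter wraps on overflow, which this sketch ignores.
    fn next() -> Self {
        static COUNTER: AtomicU32 = AtomicU32::new(0);
        ResourceId(COUNTER.fetch_add(1, Ordering::Relaxed))
    }
}

/// Generates IDs from several threads at once and returns all of them.
fn ids_from_threads(threads: usize, per_thread: usize) -> Vec<ResourceId> {
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            thread::spawn(move || {
                (0..per_thread).map(|_| ResourceId::next()).collect::<Vec<_>>()
            })
        })
        .collect();
    handles
        .into_iter()
        .flat_map(|handle| handle.join().unwrap())
        .collect()
}

fn main() {
    let ids = ids_from_threads(4, 100);
    let unique: HashSet<ResourceId> = ids.iter().copied().collect();
    println!("generated {} ids, {} unique", ids.len(), unique.len());
}
```

Compared with a random UUID, a `fetch_add` needs no random number generation and yields a much smaller ID.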

||

|

|

2cd0bd7575 |

improve compile time by type-erasing wgpu structs (#5950)

# Objective

structs containing wgpu types take a long time to compile. this is particularly bad for generics containing the wgpu structs (like the depth pipeline builder with `#[derive(SystemParam)]` i've been working on).

we can avoid that by boxing and type-erasing in the bevy `render_resource` wrappers.

type system magic is not a strength of mine so i guess there will be a cleaner way to achieve this, happy to take feedback or for it to be taken as a proof of concept if someone else wants to do a better job.

## Solution

- add macros to box and type-erase in debug mode
- leave current impl for release mode

timings:

| | current | with type-erasing | diff |
| -- | -- | -- | -- |
| Total time | 64.9s | 49.0s | -24% |
| bevy_render v0.9.0-dev | 17.0s | 12.0s | -38% |
| bevy_pbr v0.9.0-dev | 19.2s | 8.7s | -49% |
| bevy_sprite v0.9.0-dev | 15.1s | 6.1s | -60% |
| DepthPipelineBuilder | 18.7s | 1.2s | -94% |

the depth pipeline builder is a binary with body:

```rust
use std::{hash::Hash, marker::PhantomData};

use bevy::{
    ecs::system::SystemParam,
    pbr::{MaterialPipeline, RenderMaterials, ShadowPipeline},
    prelude::*,
    render::{
        render_asset::RenderAssets,
        render_resource::{PipelineCache, SpecializedMeshPipelines},
        renderer::RenderDevice,
    },
};

fn main() {
    println!("Hello, world p!\n");
}

#[derive(SystemParam)]
pub struct DepthPipelineBuilder<'w, 's, M: Material>
where
    M::Data: Eq + Hash + Clone,
{
    render_device: Res<'w, RenderDevice>,
    material_pipeline: Res<'w, MaterialPipeline<M>>,
    material_pipelines: ResMut<'w, SpecializedMeshPipelines<MaterialPipeline<M>>>,
    shadow_pipeline: Res<'w, ShadowPipeline>,
    pipeline_cache: ResMut<'w, PipelineCache>,
    render_meshes: Res<'w, RenderAssets<Mesh>>,
    render_materials: Res<'w, RenderMaterials<M>>,
    msaa: Res<'w, Msaa>,
    #[system_param(ignore)]
    _p: PhantomData<&'s M>,
}
``` |