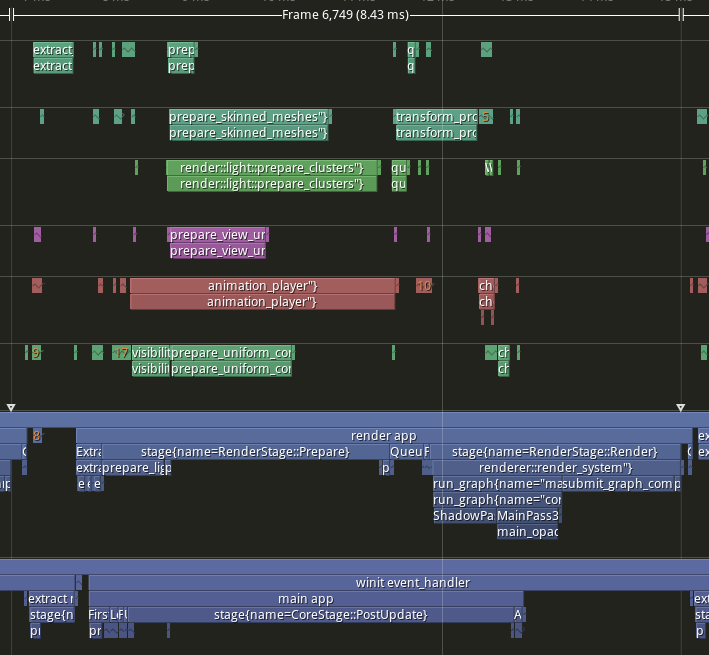

# Objective

- Implement pipelined rendering
- Fixes #5082
- Fixes #4718

## User Facing Description

Bevy now implements pipelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads, leading to large gains in performance.

*tracy capture of many_foxes example*

To use pipelined rendering, you just need to add the `PipelinedRenderingPlugin`. If you're using `DefaultPlugins`, it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm, which this feature needs in order to work. If you aren't using `DefaultPlugins`, you can add the plugin manually.

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;
use bevy::render::RenderPlugin;

fn main() {
    App::new()
        // whatever other plugins you need
        .add_plugin(RenderPlugin)
        // needs to be added after RenderPlugin
        .add_plugin(PipelinedRenderingPlugin)
        .run();
}
```

If for some reason pipelined rendering needs to be removed, you can disable the plugin the normal way:

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {
    App::new()
        .add_plugins(DefaultPlugins.build().disable::<PipelinedRenderingPlugin>())
        .run();
}
```

### A setup function was added to plugins

An optional plugin lifecycle function was added to the `Plugin` trait. This function is called after all plugins have been built, but before the app runner is called, which allows some final setup to be done. In the case of pipelined rendering, the function removes the render sub app from the main app and sends it to the render thread.

```rust
use bevy::prelude::*;

struct MyPlugin;

impl Plugin for MyPlugin {
    fn build(&self, app: &mut App) {}

    // optional function
    fn setup(&self, app: &mut App) {
        // do some final setup before runner is called
    }
}
```

### A Stage for Frame Pacing

In the `RenderExtractApp` there is a stage labelled `BeforeIoAfterRenderStart` that systems can be added to.
The specific use case for this stage is a frame pacing system that can delay the start of main app processing in render-bound apps to reduce input latency, i.e. "frame pacing". This is not currently built into Bevy, but exists as `bevy`.

```text
|-------------------------------------------------------------------|
|         | BeforeIoAfterRenderStart | winit events | main schedule |
| extract |---------------------------------------------------------|
|         | extract commands | rendering schedule                   |
|-------------------------------------------------------------------|
```

### Small API additions

* `Schedule::remove_stage`
* `App::insert_sub_app`
* `App::remove_sub_app`
* `TaskPool::scope_with_executor`

## Problems and Solutions

### Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels, which seems to work OK. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow rendering to start before I/O processing, so I switched to using channels. The coordination this requires is quite complex, but it's worth it. Because rendering now runs during I/O processing, frame times should be much more consistent in render-bound apps. See https://github.com/bevyengine/bevy/issues/4691.

### Unsoundness with Sending World with NonSend resources

Dropping `!Send` things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any NonSend resources, so if we tell users to "pretty please don't spawn NonSend resources on the render world", we can avoid this problem. More seriously, PR https://github.com/bevyengine/bevy/pull/6534 patches the unsoundness by aborting the app if a NonSend resource is dropped on the wrong thread.
~~That PR should probably be merged before this one.~~ For a longer-term solution, there is an ongoing discussion at https://github.com/bevyengine/bevy/discussions/6552.

### NonSend Systems in render world

The render world doesn't have any `!Send` resources, but it does have a NonSend system. While `Window` is `Send`, winit has some APIs that can only be accessed on the main thread, so `prepare_windows` in the render schedule needs to be scheduled on the main thread. Currently we run NonSend systems on the thread that `TaskPool::scope` runs on. Once rendering moves to another thread, this no longer works.

To fix this, a new `scope_with_executor` method was added. It takes an optional `ThreadExecutor`, which can only be ticked on the thread it was initialized on. The render world then holds a `MainThreadExecutor` resource. This resource can be passed to the scope in the parallel executor, which uses it to spawn its NonSend systems.

### Scope executors between render and main should not share tasks

The render world and the app world share the `ComputeTaskPool`. Because `scope` has executors for the `ComputeTaskPool`, a system from the main world could run on the render thread, or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, `TaskPool::scope` has been changed to not tick the `ComputeTaskPool` when it's used by the parallel executor. In the future, as we move closer to a one-thread-per-logical-core model, we may want to overprovision threads, because the render and main app threads don't do much while executing the schedule.
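
The thread-affinity rule behind `ThreadExecutor` can be illustrated with a small std-only model. The rule is that work may be queued from any thread, but only the thread that created the executor may run it. `ThreadBoundExecutor`, `spawn`, and `tick` are hypothetical names for this sketch, not Bevy's API. The sketch also assumes a plain queue of closures rather than futures, so it shows only the "run on the owning thread" check, not how Bevy's parallel executor drives it.

```rust
use std::collections::VecDeque;
use std::sync::{Arc, Mutex};
use std::thread::{self, ThreadId};

// Hypothetical sketch: a queue of closures bound to the thread that created it.
type Job = Box<dyn FnOnce() + Send>;

#[derive(Clone)]
pub struct ThreadBoundExecutor {
    owner: ThreadId,
    queue: Arc<Mutex<VecDeque<Job>>>,
}

impl ThreadBoundExecutor {
    /// Binds the executor to the calling thread.
    pub fn new() -> Self {
        Self {
            owner: thread::current().id(),
            queue: Arc::new(Mutex::new(VecDeque::new())),
        }
    }

    /// Queues a job. Safe to call from any thread.
    pub fn spawn(&self, job: impl FnOnce() + Send + 'static) {
        self.queue.lock().unwrap().push_back(Box::new(job));
    }

    /// Runs all queued jobs and returns how many ran, or `None` when called
    /// from a thread other than the owner (the jobs stay queued).
    pub fn tick(&self) -> Option<usize> {
        if thread::current().id() != self.owner {
            return None;
        }
        let mut ran = 0;
        loop {
            // Take one job while holding the lock, then release the lock
            // before running it so the job itself may queue more work.
            let next = self.queue.lock().unwrap().pop_front();
            match next {
                Some(job) => {
                    job();
                    ran += 1;
                }
                None => return Some(ran),
            }
        }
    }
}

fn main() {
    // Created on the main thread, so only the main thread can tick it.
    let exec = ThreadBoundExecutor::new();
    let log = Arc::new(Mutex::new(Vec::new()));

    let (worker_exec, worker_log) = (exec.clone(), Arc::clone(&log));
    let worker = thread::spawn(move || {
        // A worker thread can hand work to the main thread...
        worker_exec.spawn(move || {
            worker_log.lock().unwrap().push(thread::current().id());
        });
        // ...but cannot run it itself.
        worker_exec.tick()
    });
    assert_eq!(worker.join().unwrap(), None);

    // Back on the main thread, the queued job runs here.
    assert_eq!(exec.tick(), Some(1));
    assert_eq!(*log.lock().unwrap(), vec![thread::current().id()]);
}
```

A job queued from a worker stays pending until the owning thread ticks the executor. That is the same guarantee the NonSend render systems need: they may be scheduled from anywhere but must run on the main thread.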

## Performance

My machine is Windows 11, AMD Ryzen 5 5600X, RX 6600.

### Examples

#### This PR with pipelining vs Main

> Note that these were run on an older version of main, and the performance profile has probably changed due to optimizations.

Seeing a perf gain from 29% on many lights to 7% on many sprites.

| tracy frame time | Percent mean | Percent median | Percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR mean | PR median | PR sigma |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| many foxes | 27.01% | 27.34% | -47.09% | 1.58 | 1.55 | -1.78 | 5.85 | 5.67 | 3.78 | 4.27 | 4.12 | 5.56 |
| many lights | 29.35% | 29.94% | -10.84% | 3.02 | 3.03 | -0.57 | 10.29 | 10.12 | 5.26 | 7.27 | 7.09 | 5.83 |
| many animated sprites | 13.97% | 15.69% | 14.20% | 3.79 | 4.17 | 1.41 | 27.12 | 26.57 | 9.93 | 23.33 | 22.4 | 8.52 |
| 3d scene | 25.79% | 26.78% | 7.46% | 0.49 | 0.49 | 0.15 | 1.9 | 1.83 | 2.01 | 1.41 | 1.34 | 1.86 |
| many cubes | 11.97% | 11.28% | 14.51% | 1.93 | 1.78 | 1.31 | 16.13 | 15.78 | 9.03 | 14.2 | 14 | 7.72 |
| many sprites | 7.14% | 9.42% | -85.42% | 1.72 | 2.23 | -6.15 | 24.09 | 23.68 | 7.2 | 22.37 | 21.45 | 13.35 |

#### This PR with pipelining disabled vs Main

Mostly regressions here. I don't think this should be a problem, as users who disable pipelined rendering are probably running single-threaded and not using the parallel executor. The regression is probably mostly due to the switch to `async_executor::run` instead of `try_tick`, and to having one less thread to run systems on. I'll do a writeup on why switching to `run` causes regressions, so we can try to eventually fix it.
Using `try_tick` causes issues when pipelined rendering is enabled, as seen [here](https://github.com/bevyengine/bevy/pull/6503#issuecomment-1380803518).

| tracy frame time | Percent mean | Percent median | Percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR no pipelining mean | PR no pipelining median | PR no pipelining sigma |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| many foxes | -3.72% | -4.42% | -1.07% | -0.21 | -0.24 | -0.04 | 5.64 | 5.43 | 3.74 | 5.85 | 5.67 | 3.78 |
| many lights | 0.29% | -0.30% | 4.75% | 0.03 | -0.03 | 0.25 | 10.29 | 10.12 | 5.26 | 10.26 | 10.15 | 5.01 |
| many animated sprites | 0.22% | 1.81% | -2.72% | 0.06 | 0.48 | -0.27 | 27.12 | 26.57 | 9.93 | 27.06 | 26.09 | 10.2 |
| 3d scene | -15.79% | -14.75% | -31.34% | -0.3 | -0.27 | -0.63 | 1.9 | 1.83 | 2.01 | 2.2 | 2.1 | 2.64 |
| many cubes | -2.85% | -3.30% | 0.00% | -0.46 | -0.52 | 0 | 16.13 | 15.78 | 9.03 | 16.59 | 16.3 | 9.03 |
| many sprites | 2.49% | 2.41% | 0.69% | 0.6 | 0.57 | 0.05 | 24.09 | 23.68 | 7.2 | 23.49 | 23.11 | 7.15 |

### Benchmarks

Mostly the same, except `empty_systems` has gotten a touch slower. The `maybe-pipelining+1` column has the compute task pool with one extra thread added over the default. This is because pipelining leaves one fewer thread than main for executing systems, since the main thread no longer runs normal systems.

<details>
<summary>Click Me</summary>

```text
group                                   main                            maybe-pipelining+1
-----                                   -------------------------       ------------------
busy_systems/01x_entities_03_systems    1.07     30.7±1.32µs ? ?/sec    1.00     28.6±1.35µs ? ?/sec
busy_systems/01x_entities_06_systems    1.10     52.1±1.10µs ? ?/sec    1.00     47.2±1.08µs ? ?/sec
busy_systems/01x_entities_09_systems    1.00     74.6±1.36µs ? ?/sec    1.00     75.0±1.93µs ? ?/sec
busy_systems/01x_entities_12_systems    1.03    100.6±6.68µs ? ?/sec    1.00     98.0±1.46µs ? ?/sec
busy_systems/01x_entities_15_systems    1.11    128.5±3.53µs ? ?/sec    1.00    115.5±1.02µs ? ?/sec
busy_systems/02x_entities_03_systems    1.16     50.4±2.56µs ? ?/sec    1.00     43.5±3.00µs ? ?/sec
busy_systems/02x_entities_06_systems    1.00     87.1±1.27µs ? ?/sec    1.05     91.5±7.15µs ? ?/sec
busy_systems/02x_entities_09_systems    1.04    139.9±6.37µs ? ?/sec    1.00    134.0±1.06µs ? ?/sec
busy_systems/02x_entities_12_systems    1.05    179.2±3.47µs ? ?/sec    1.00    170.1±3.17µs ? ?/sec
busy_systems/02x_entities_15_systems    1.01    219.6±3.75µs ? ?/sec    1.00    218.1±2.55µs ? ?/sec
busy_systems/03x_entities_03_systems    1.10     70.6±2.33µs ? ?/sec    1.00     64.3±0.69µs ? ?/sec
busy_systems/03x_entities_06_systems    1.02    130.2±3.11µs ? ?/sec    1.00    128.0±1.34µs ? ?/sec
busy_systems/03x_entities_09_systems    1.00   195.0±10.11µs ? ?/sec    1.00    194.8±1.41µs ? ?/sec
busy_systems/03x_entities_12_systems    1.01    261.7±4.05µs ? ?/sec    1.00    259.8±4.11µs ? ?/sec
busy_systems/03x_entities_15_systems    1.00    318.0±3.04µs ? ?/sec    1.06   338.3±20.25µs ? ?/sec
busy_systems/04x_entities_03_systems    1.00     82.9±0.63µs ? ?/sec    1.02     84.3±0.63µs ? ?/sec
busy_systems/04x_entities_06_systems    1.01    181.7±3.65µs ? ?/sec    1.00    179.8±1.76µs ? ?/sec
busy_systems/04x_entities_09_systems    1.04    265.0±4.68µs ? ?/sec    1.00    255.3±1.98µs ? ?/sec
busy_systems/04x_entities_12_systems    1.00    335.9±3.00µs ? ?/sec    1.05   352.6±15.84µs ? ?/sec
busy_systems/04x_entities_15_systems    1.00   418.6±10.26µs ? ?/sec    1.08   450.2±39.58µs ? ?/sec
busy_systems/05x_entities_03_systems    1.07    114.3±0.95µs ? ?/sec    1.00    106.9±1.52µs ? ?/sec
busy_systems/05x_entities_06_systems    1.08    229.8±2.90µs ? ?/sec    1.00    212.3±4.18µs ? ?/sec
busy_systems/05x_entities_09_systems    1.03    329.3±1.99µs ? ?/sec    1.00    319.2±2.43µs ? ?/sec
busy_systems/05x_entities_12_systems    1.06    454.7±6.77µs ? ?/sec    1.00    430.1±3.58µs ? ?/sec
busy_systems/05x_entities_15_systems    1.03    554.6±6.15µs ? ?/sec    1.00   538.4±23.87µs ? ?/sec
contrived/01x_entities_03_systems       1.00     14.0±0.15µs ? ?/sec    1.08     15.1±0.21µs ? ?/sec
contrived/01x_entities_06_systems       1.04     28.5±0.37µs ? ?/sec    1.00     27.4±0.44µs ? ?/sec
contrived/01x_entities_09_systems       1.00     41.5±4.38µs ? ?/sec    1.02     42.2±2.24µs ? ?/sec
contrived/01x_entities_12_systems       1.06     55.9±1.49µs ? ?/sec    1.00     52.6±1.36µs ? ?/sec
contrived/01x_entities_15_systems       1.02     68.0±2.00µs ? ?/sec    1.00     66.5±0.78µs ? ?/sec
contrived/02x_entities_03_systems       1.03     25.2±0.38µs ? ?/sec    1.00     24.6±0.52µs ? ?/sec
contrived/02x_entities_06_systems       1.00     46.3±0.49µs ? ?/sec    1.04     48.1±4.13µs ? ?/sec
contrived/02x_entities_09_systems       1.02     70.4±0.99µs ? ?/sec    1.00     68.8±1.04µs ? ?/sec
contrived/02x_entities_12_systems       1.06     96.8±1.49µs ? ?/sec    1.00     91.5±0.93µs ? ?/sec
contrived/02x_entities_15_systems       1.02    116.2±0.95µs ? ?/sec    1.00    114.2±1.42µs ? ?/sec
contrived/03x_entities_03_systems       1.00     33.2±0.38µs ? ?/sec    1.01     33.6±0.45µs ? ?/sec
contrived/03x_entities_06_systems       1.00     62.4±0.73µs ? ?/sec    1.01     63.3±1.05µs ? ?/sec
contrived/03x_entities_09_systems       1.02     96.4±0.85µs ? ?/sec    1.00     94.8±3.02µs ? ?/sec
contrived/03x_entities_12_systems       1.01    126.3±4.67µs ? ?/sec    1.00    125.6±2.27µs ? ?/sec
contrived/03x_entities_15_systems       1.03    160.2±9.37µs ? ?/sec    1.00    156.0±1.53µs ? ?/sec
contrived/04x_entities_03_systems       1.02     41.4±3.39µs ? ?/sec    1.00     40.5±0.52µs ? ?/sec
contrived/04x_entities_06_systems       1.00     78.9±1.61µs ? ?/sec    1.02     80.3±1.06µs ? ?/sec
contrived/04x_entities_09_systems       1.02    121.8±3.97µs ? ?/sec    1.00    119.2±1.46µs ? ?/sec
contrived/04x_entities_12_systems       1.00    157.8±1.48µs ? ?/sec    1.01    160.1±1.72µs ? ?/sec
contrived/04x_entities_15_systems       1.00    197.9±1.47µs ? ?/sec    1.08   214.2±34.61µs ? ?/sec
contrived/05x_entities_03_systems       1.00     49.1±0.33µs ? ?/sec    1.01     49.7±0.75µs ? ?/sec
contrived/05x_entities_06_systems       1.00     95.0±0.93µs ? ?/sec    1.00     94.6±0.94µs ? ?/sec
contrived/05x_entities_09_systems       1.01    143.2±1.68µs ? ?/sec    1.00    142.2±2.00µs ? ?/sec
contrived/05x_entities_12_systems       1.00    191.8±2.03µs ? ?/sec    1.01    192.7±7.88µs ? ?/sec
contrived/05x_entities_15_systems       1.02    239.7±3.71µs ? ?/sec    1.00    235.8±4.11µs ? ?/sec
empty_systems/000_systems               1.01     47.8±0.67ns ? ?/sec    1.00     47.5±2.02ns ? ?/sec
empty_systems/001_systems               1.00 1743.2±126.14ns ? ?/sec    1.01  1761.1±70.10ns ? ?/sec
empty_systems/002_systems               1.01      2.2±0.04µs ? ?/sec    1.00      2.2±0.02µs ? ?/sec
empty_systems/003_systems               1.02      2.7±0.09µs ? ?/sec    1.00      2.7±0.16µs ? ?/sec
empty_systems/004_systems               1.00      3.1±0.11µs ? ?/sec    1.00      3.1±0.24µs ? ?/sec
empty_systems/005_systems               1.00      3.5±0.05µs ? ?/sec    1.11      3.9±0.70µs ? ?/sec
empty_systems/010_systems               1.00      5.5±0.12µs ? ?/sec    1.03      5.7±0.17µs ? ?/sec
empty_systems/015_systems               1.00      7.9±0.19µs ? ?/sec    1.06      8.4±0.16µs ? ?/sec
empty_systems/020_systems               1.00     10.4±1.25µs ? ?/sec    1.02     10.6±0.18µs ? ?/sec
empty_systems/025_systems               1.00     12.4±0.39µs ? ?/sec    1.14     14.1±1.07µs ? ?/sec
empty_systems/030_systems               1.00     15.1±0.39µs ? ?/sec    1.05     15.8±0.62µs ? ?/sec
empty_systems/035_systems               1.00     16.9±0.47µs ? ?/sec    1.07     18.0±0.37µs ? ?/sec
empty_systems/040_systems               1.00     19.3±0.41µs ? ?/sec    1.05     20.3±0.39µs ? ?/sec
empty_systems/045_systems               1.00     22.4±1.67µs ? ?/sec    1.02     22.9±0.51µs ? ?/sec
empty_systems/050_systems               1.00     24.4±1.67µs ? ?/sec    1.01     24.7±0.40µs ? ?/sec
empty_systems/055_systems               1.05     28.6±5.27µs ? ?/sec    1.00     27.2±0.70µs ? ?/sec
empty_systems/060_systems               1.02     29.9±1.64µs ? ?/sec    1.00     29.3±0.66µs ? ?/sec
empty_systems/065_systems               1.02     32.7±3.15µs ? ?/sec    1.00     32.1±0.98µs ? ?/sec
empty_systems/070_systems               1.00     33.0±1.42µs ? ?/sec    1.03     34.1±1.44µs ? ?/sec
empty_systems/075_systems               1.00     34.8±0.89µs ? ?/sec    1.04     36.2±0.70µs ? ?/sec
empty_systems/080_systems               1.00     37.0±1.82µs ? ?/sec    1.05     38.7±1.37µs ? ?/sec
empty_systems/085_systems               1.00     38.7±0.76µs ? ?/sec    1.05     40.8±0.83µs ? ?/sec
empty_systems/090_systems               1.00     41.5±1.09µs ? ?/sec    1.04     43.2±0.82µs ? ?/sec
empty_systems/095_systems               1.00     43.6±1.10µs ? ?/sec    1.04     45.2±0.99µs ? ?/sec
empty_systems/100_systems               1.00     46.7±2.27µs ? ?/sec    1.03     48.1±1.25µs ? ?/sec
```

</details>

## Migration Guide

### App `runner` and SubApp `extract` functions are now required to be `Send`

This was changed to enable pipelined rendering. If this breaks your use case, please report it, as these new bounds might be able to be relaxed.

## ToDo

* [x] redo benchmarking
* [x] reinvestigate the perf of the `try_tick` -> `run` change for task pool scope
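
The channel-based hand-off described under "Moving render app to another thread" can be sketched with `std::sync::mpsc`. This is a simplified model, not Bevy's implementation. `RenderApp`, `run_pipelined`, and the integer "world state" are hypothetical stand-ins, and frame pacing, I/O, and the real extract schedule are omitted. It also hints at why the Migration Guide adds `Send` bounds: anything moved through the channel to the render thread must be `Send`.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for the render sub app.
#[derive(Default)]
struct RenderApp {
    extracted: u64,     // data copied out of the main world this frame
    rendered: Vec<u64>, // record of what each frame rendered
}

impl RenderApp {
    fn render(&mut self) {
        self.rendered.push(self.extracted);
    }
}

/// Runs `frames` frames, overlapping the main schedule of frame N + 1 with
/// the rendering of frame N, and returns what was rendered.
fn run_pipelined(frames: u64) -> Vec<u64> {
    let (to_render, render_rx) = mpsc::channel::<RenderApp>();
    let (to_main, main_rx) = mpsc::channel::<RenderApp>();

    // Render thread: receive the app, render, send it back.
    // The loop ends once the main thread drops `to_render`.
    let render_thread = thread::spawn(move || {
        for mut app in render_rx {
            app.render();
            to_main.send(app).expect("main thread hung up");
        }
    });

    let mut world_state = 0u64; // stands in for the main world
    let mut idle_app = Some(RenderApp::default()); // `Some` only until the first frame
    for _ in 0..frames {
        // Main schedule. From the second frame on, this runs while the
        // render thread is still busy with the previous frame.
        world_state += 1;

        // Get the render app back; blocks only if the previous frame is still rendering.
        let mut app = match idle_app.take() {
            Some(app) => app,
            None => main_rx.recv().expect("render thread hung up"),
        };

        // Extract on the main thread while the render thread is idle.
        app.extracted = world_state;
        to_render.send(app).expect("render thread hung up");
    }

    // Shutdown: reclaim the app (waiting for the last frame if one is in
    // flight), then close the channel so the render thread exits.
    let app = match idle_app {
        Some(app) => app,
        None => main_rx.recv().expect("render thread hung up"),
    };
    drop(to_render);
    render_thread.join().expect("render thread panicked");
    app.rendered
}

fn main() {
    assert_eq!(run_pipelined(3), vec![1, 2, 3]);
    assert!(run_pipelined(0).is_empty());
}
```

The only point where both "worlds" are touched is the extract step, which runs while the render thread is waiting on the channel. Everything else on the main thread can overlap with rendering.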

206 lines

7.0 KiB

Rust


use std::{
    future::Future,
    marker::PhantomData,
    mem,
    sync::{Arc, Mutex},
};

/// Used to create a TaskPool
#[derive(Debug, Default, Clone)]
pub struct TaskPoolBuilder {}

/// This is a dummy struct for wasm support to provide the same api as with the multithreaded
/// task pool. In the case of the multithreaded task pool this struct is used to spawn
/// tasks on a specific thread. But the wasm task pool just calls
/// [`wasm_bindgen_futures::spawn_local`] for spawning which just runs tasks on the main thread
/// and so the [`ThreadExecutor`] does nothing.
#[derive(Default)]
pub struct ThreadExecutor<'a>(PhantomData<&'a ()>);
impl<'a> ThreadExecutor<'a> {
    /// Creates a new `ThreadExecutor`
    pub fn new() -> Self {
        Self(PhantomData::default())
    }
}

impl TaskPoolBuilder {
    /// Creates a new TaskPoolBuilder instance
    pub fn new() -> Self {
        Self::default()
    }

    /// No op on the single threaded task pool
    pub fn num_threads(self, _num_threads: usize) -> Self {
        self
    }

    /// No op on the single threaded task pool
    pub fn stack_size(self, _stack_size: usize) -> Self {
        self
    }

    /// No op on the single threaded task pool
    pub fn thread_name(self, _thread_name: String) -> Self {
        self
    }

    /// Creates a new [`TaskPool`]
    pub fn build(self) -> TaskPool {
        TaskPool::new_internal()
    }
}

/// A thread pool for executing tasks. Tasks are futures that are being automatically driven by
/// the pool on threads owned by the pool. In this case - main thread only.
#[derive(Debug, Default, Clone)]
pub struct TaskPool {}

impl TaskPool {
    /// Create a `TaskPool` with the default configuration.
    pub fn new() -> Self {
        TaskPoolBuilder::new().build()
    }

    #[allow(unused_variables)]
    fn new_internal() -> Self {
        Self {}
    }

    /// Return the number of threads owned by the task pool
    pub fn thread_num(&self) -> usize {
        1
    }

    /// Allows spawning non-`'static` futures on the thread pool. The function takes a callback,
    /// passing a scope object into it. The scope object provided to the callback can be used
    /// to spawn tasks. This function will await the completion of all tasks before returning.
    ///
    /// This is similar to `rayon::scope` and `crossbeam::scope`
    pub fn scope<'env, F, T>(&self, f: F) -> Vec<T>
    where
        F: for<'scope> FnOnce(&'env mut Scope<'scope, 'env, T>),
        T: Send + 'static,
    {
        self.scope_with_executor(false, None, f)
    }

    /// Allows spawning non-`'static` futures on the thread pool. The function takes a callback,
    /// passing a scope object into it. The scope object provided to the callback can be used
    /// to spawn tasks. This function will await the completion of all tasks before returning.
    ///
    /// This is similar to `rayon::scope` and `crossbeam::scope`
    ///
    /// On the single threaded task pool, `_tick_task_pool_executor` and `_thread_executor`
    /// are ignored.
    pub fn scope_with_executor<'env, F, T>(
        &self,
        _tick_task_pool_executor: bool,
        _thread_executor: Option<&ThreadExecutor>,
        f: F,
    ) -> Vec<T>
    where
        F: for<'scope> FnOnce(&'env mut Scope<'scope, 'env, T>),
        T: Send + 'static,
    {
        let executor = &async_executor::LocalExecutor::new();
        let executor: &'env async_executor::LocalExecutor<'env> =
            unsafe { mem::transmute(executor) };

        let results: Mutex<Vec<Arc<Mutex<Option<T>>>>> = Mutex::new(Vec::new());
        let results: &'env Mutex<Vec<Arc<Mutex<Option<T>>>>> = unsafe { mem::transmute(&results) };

        let mut scope = Scope {
            executor,
            results,
            scope: PhantomData,
            env: PhantomData,
        };

        let scope_ref: &'env mut Scope<'_, 'env, T> = unsafe { mem::transmute(&mut scope) };

        f(scope_ref);

        // Loop until all tasks are done
        while executor.try_tick() {}

        let results = scope.results.lock().unwrap();
        results
            .iter()
            .map(|result| result.lock().unwrap().take().unwrap())
            .collect()
    }

    /// Spawns a static future onto the JS event loop. For now it is returning FakeTask
    /// instance with no-op detach method. Returning real Task is possible here, but tricky:
    /// future is running on JS event loop, Task is running on async_executor::LocalExecutor
    /// so some proxy future is needed. Moreover currently we don't have long-living
    /// LocalExecutor here (above `spawn` implementation creates temporary one)
    /// But for typical use cases it seems that current implementation should be sufficient:
    /// caller can spawn long-running future writing results to some channel / event queue
    /// and simply call detach on returned Task (like AssetServer does) - spawned future
    /// can write results to some channel / event queue.
    pub fn spawn<T>(&self, future: impl Future<Output = T> + 'static) -> FakeTask
    where
        T: 'static,
    {
        wasm_bindgen_futures::spawn_local(async move {
            future.await;
        });
        FakeTask
    }

    /// Spawns a static future on the JS event loop. This is exactly the same as [`TaskPool::spawn`].
    pub fn spawn_local<T>(&self, future: impl Future<Output = T> + 'static) -> FakeTask
    where
        T: 'static,
    {
        self.spawn(future)
    }
}

#[derive(Debug)]
pub struct FakeTask;

impl FakeTask {
    /// No op on the single threaded task pool
    pub fn detach(self) {}
}

/// A `TaskPool` scope for running one or more non-`'static` futures.
///
/// For more information, see [`TaskPool::scope`].
#[derive(Debug)]
pub struct Scope<'scope, 'env: 'scope, T> {
    executor: &'env async_executor::LocalExecutor<'env>,
    // Vector to gather results of all futures spawned during scope run
    results: &'env Mutex<Vec<Arc<Mutex<Option<T>>>>>,

    // make `Scope` invariant over 'scope and 'env
    scope: PhantomData<&'scope mut &'scope ()>,
    env: PhantomData<&'env mut &'env ()>,
}

impl<'scope, 'env, T: Send + 'env> Scope<'scope, 'env, T> {
    /// Spawns a scoped future onto the thread-local executor. The scope *must* outlive
    /// the provided future. The results of the future will be returned as a part of
    /// [`TaskPool::scope`]'s return value.
    ///
    /// On the single threaded task pool, it just calls [`Scope::spawn_on_scope`].
    ///
    /// For more information, see [`TaskPool::scope`].
    pub fn spawn<Fut: Future<Output = T> + 'env>(&self, f: Fut) {
        self.spawn_on_scope(f);
    }

    /// Spawns a scoped future that runs on the thread the scope was called from. The
    /// scope *must* outlive the provided future. The results of the future will be
    /// returned as a part of [`TaskPool::scope`]'s return value.
    ///
    /// For more information, see [`TaskPool::scope`].
    pub fn spawn_on_scope<Fut: Future<Output = T> + 'env>(&self, f: Fut) {
        let result = Arc::new(Mutex::new(None));
        self.results.lock().unwrap().push(result.clone());
        let f = async move {
            result.lock().unwrap().replace(f.await);
        };
        self.executor.spawn(f).detach();
    }
}
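
In `scope_with_executor` above, `spawn_on_scope` pushes an `Arc<Mutex<Option<T>>>` slot onto `results` before the task runs. The final `collect` then reads the slots back in that order, so results come out in spawn order rather than completion order. The sketch below models just that bookkeeping. `scoped_results` is a hypothetical helper. `std::thread::scope` (Rust 1.63+) is swapped in for the `LocalExecutor` and `try_tick` loop, so unlike the wasm pool it really does run tasks on several threads.

```rust
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

/// Hypothetical helper modelling the result slots of `Scope::spawn_on_scope`:
/// one slot per task, pushed in spawn order before the task runs.
fn scoped_results<T, F>(tasks: Vec<F>) -> Vec<T>
where
    T: Send,
    F: FnOnce() -> T + Send,
{
    let results: Mutex<Vec<Arc<Mutex<Option<T>>>>> = Mutex::new(Vec::new());
    thread::scope(|s| {
        for task in tasks {
            let slot = Arc::new(Mutex::new(None));
            results.lock().unwrap().push(Arc::clone(&slot));
            s.spawn(move || {
                let value = task();
                *slot.lock().unwrap() = Some(value);
            });
        }
    }); // every spawned thread has been joined once `scope` returns

    let slots = results.into_inner().unwrap();
    slots
        .iter()
        .map(|slot| slot.lock().unwrap().take().unwrap())
        .collect()
}

fn main() {
    // The first task finishes last, yet its result still comes first.
    let tasks: Vec<Box<dyn FnOnce() -> u32 + Send>> = vec![
        Box::new(|| {
            thread::sleep(Duration::from_millis(50));
            1
        }),
        Box::new(|| 2),
        Box::new(|| 3),
    ];
    assert_eq!(scoped_results(tasks), vec![1, 2, 3]);
}
```

Reserving the slot before the task starts is what makes the ordering independent of scheduling. Each task only ever writes to its own slot, so no task needs to know where it sits in the output.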