# Objective

Document `PipelineCache` and a few other related types.

## Solution

Add documenting comments to `PipelineCache` and a few other related types in the same file.

# Objective

- In WASM, creating a pipeline can easily take 2 seconds, freezing the game while doing so

- Preloading pipelines can be done during a "loading" state, but it is not trivial to know which pipeline to preload, or when it's done

## Solution

- Add a log with shaders being loaded and their shader defs

- add a function on `PipelineCache` to return the number of ready pipelines
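A ready-pipeline count like the one described above could drive a "loading" state. The following is a minimal, engine-free sketch of that idea; `CachedPipelineState`, `ToyPipelineCache`, `ready_count`, and `all_ready` are hypothetical names chosen for illustration and are not the actual `PipelineCache` API.

```rust
/// Hypothetical stand-in for the state of one cached pipeline.
#[derive(Debug, Clone, PartialEq)]
enum CachedPipelineState {
    /// Waiting to be compiled.
    Queued,
    /// Compiled and usable.
    Ok,
    /// Compilation failed.
    Failed,
}

/// Toy cache that only tracks per-pipeline state (assumption: the real
/// cache also stores descriptors, shaders, and the compiled pipelines).
#[derive(Default)]
struct ToyPipelineCache {
    pipelines: Vec<CachedPipelineState>,
}

impl ToyPipelineCache {
    /// Queues a pipeline and returns its index.
    fn queue(&mut self) -> usize {
        self.pipelines.push(CachedPipelineState::Queued);
        self.pipelines.len() - 1
    }

    /// Marks a queued pipeline as compiled.
    fn mark_ready(&mut self, id: usize) {
        self.pipelines[id] = CachedPipelineState::Ok;
    }

    /// Marks a queued pipeline as failed.
    fn mark_failed(&mut self, id: usize) {
        self.pipelines[id] = CachedPipelineState::Failed;
    }

    /// Number of pipelines that are ready to use.
    fn ready_count(&self) -> usize {
        self.pipelines
            .iter()
            .filter(|state| matches!(state, CachedPipelineState::Ok))
            .count()
    }

    /// True once every queued pipeline has compiled successfully.
    fn all_ready(&self) -> bool {
        self.ready_count() == self.pipelines.len()
    }
}
```

A loading screen could poll `ready_count()` each frame and leave the loading state once it reaches the number of pipelines the game expects to need.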

# Objective

`ShaderData` is marked as public, but is an internal type only used by one other internal type, so it should be made private.

## Solution

`ShaderData` is only used in `ShaderCache`, and the latter is private, so there is no need to make the former public. This change removes the `pub` keyword from `ShaderData`, hiding it as the implementation detail it is.

Split from #5600

*This PR description is an edited copy of #5007, written by @alice-i-cecile.*

# Objective

Follow-up to https://github.com/bevyengine/bevy/pull/2254. The `Resource` trait currently has a blanket implementation for all types that meet its bounds.

While ergonomic, this results in several drawbacks:

* it is possible to make confusing, silent mistakes such as inserting a function pointer (Foo) rather than a value (Foo::Bar) as a resource

* it is challenging to discover if a type is intended to be used as a resource

* we cannot later add customization options (see the [RFC](https://github.com/bevyengine/rfcs/blob/main/rfcs/27-derive-component.md) for the equivalent choice for Component).

* dependencies can use the same Rust type as a resource in invisibly conflicting ways

* raw Rust types used as resources cannot preserve privacy appropriately, as anyone able to access that type can read and write to internal values

* we cannot capture a definitive list of possible resources to display to users in an editor

## Notes to reviewers

* Review this commit-by-commit; there's effectively no back-tracking and there's a lot of churn in some of these commits.

*ira: My commits are not as well organized :')*

* I've relaxed the bound on Local to Send + Sync + 'static: I don't think these concerns apply there, so this can keep things simple. Storing e.g. a u32 in a Local is fine, because there's a variable name attached explaining what it does.

* I think this is a bad place for the Resource trait to live, but I've left it in place to make reviewing easier. IMO that's best tackled with https://github.com/bevyengine/bevy/issues/4981.

## Changelog

`Resource` is no longer automatically implemented for all matching types. Instead, use the new `#[derive(Resource)]` macro.

## Migration Guide

Add `#[derive(Resource)]` to all types you are using as a resource.

If you are using a third party type as a resource, wrap it in a tuple struct to bypass orphan rules. Consider deriving `Deref` and `DerefMut` to improve ergonomics.
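The wrapping pattern can be sketched without the engine. In Bevy you would write `#[derive(Resource, Deref, DerefMut)]` on the wrapper; here a hypothetical local `Resource` marker trait and hand-written `Deref`/`DerefMut` impls stand in so that the example compiles with the standard library only. `ThirdPartyConfig` and `AudioConfig` are made-up names.

```rust
use std::ops::{Deref, DerefMut};

/// Hypothetical marker trait standing in for Bevy's `Resource`.
trait Resource: Send + Sync + 'static {}

/// Stand-in for a type defined in a third-party crate.
struct ThirdPartyConfig {
    volume: u32,
}

/// Local tuple struct wrapping the foreign type. Because the wrapper is
/// defined in our own crate, we are free to derive or implement traits on it.
struct AudioConfig(ThirdPartyConfig);

impl Resource for AudioConfig {}

impl Deref for AudioConfig {
    type Target = ThirdPartyConfig;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

impl DerefMut for AudioConfig {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}

/// Compiles only for types that opted in to being a resource.
fn is_resource<T: Resource>(_value: &T) -> bool {
    true
}
```

With the `Deref` impls in place, fields and methods of the inner type remain reachable through the wrapper (`config.volume`), so call sites change very little.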

`ClearColor` no longer implements `Component`. Using `ClearColor` as a component in 0.8 did nothing.

Use the `ClearColorConfig` in the `Camera3d` and `Camera2d` components instead.

Co-authored-by: Alice <alice.i.cecile@gmail.com>

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: devil-ira <justthecooldude@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

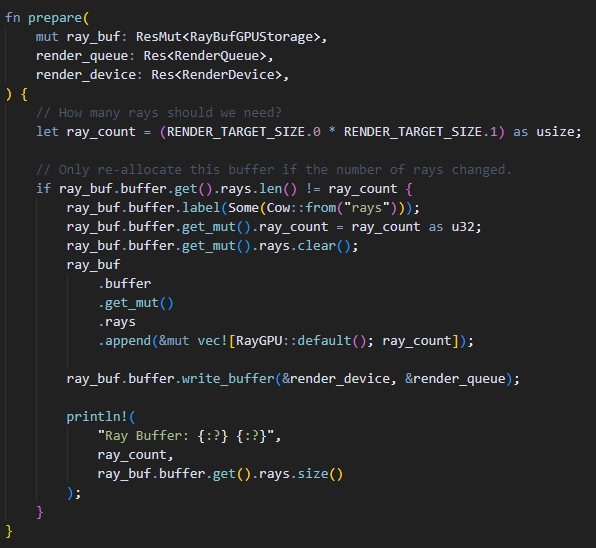

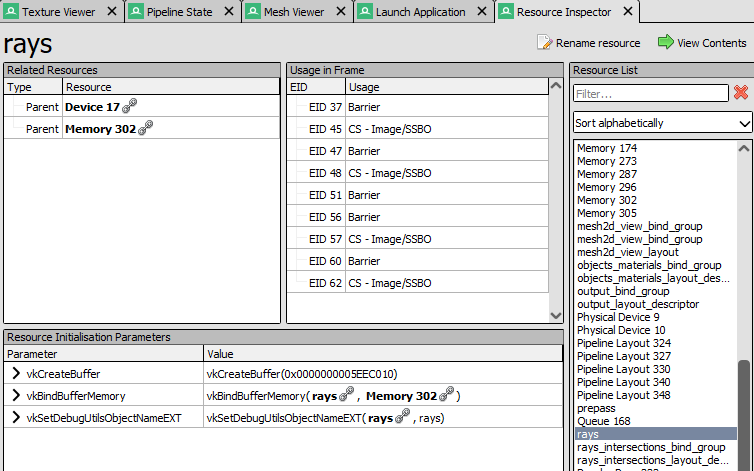

# Objective

- Expose the wgpu debug label on storage buffer types.

## Solution

🐄

- Add an optional cow static string and pass that to the label field of create_buffer_with_data

- This pattern is already used by Bevy for debug tags on bind group and layout descriptors.
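The "optional cow static string" pattern can be sketched as follows. `LabeledBuffer` and its methods are hypothetical names for illustration, not the storage buffer types touched by this PR; the point is only that `Option<Cow<'static, str>>` accepts both string literals and owned strings, and can be borrowed as the `Option<&str>` that a descriptor label field takes.

```rust
use std::borrow::Cow;

/// Hypothetical buffer wrapper carrying an optional debug label.
#[derive(Default)]
struct LabeledBuffer {
    label: Option<Cow<'static, str>>,
}

impl LabeledBuffer {
    /// Sets the debug label; accepts a `&'static str` or an owned `String`.
    fn set_label(&mut self, label: impl Into<Cow<'static, str>>) {
        self.label = Some(label.into());
    }

    /// Borrows the label as `Option<&str>`, ready to hand to a descriptor.
    fn label(&self) -> Option<&str> {
        self.label.as_deref()
    }
}
```

Using `Cow<'static, str>` avoids an allocation for the common case of a literal label while still allowing labels built at runtime.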

---

Example Usage:

A buffer is given a label using the label function. Alternatively a buffer may be labeled when it is created if the default() convention is not used.

Here is the buffer appearing with the correct name in RenderDoc. Previously the buffer would have an anonymous name such as "Buffer223":

Co-authored-by: rebelroad-reinhart <reinhart@rebelroad.gg>

# Objective

- Currently, the `Extract` `RenderStage` is executed on the main world, with the render world available as a resource.

- However, when needing access to resources in the render world (e.g. to mutate them), the only way to do so was to get exclusive access to the whole `RenderWorld` resource.

- This meant that effectively only one extract which wrote to resources could run at a time.

- Previously, writing to the world during `Extract` was not made a non-happy path, even though we want to discourage it.

## Solution

- Move the extract stage to run on the render world.

- Add the main world as a `MainWorld` resource.

- Add an `Extract` `SystemParam` as a convenience to access a (read only) `SystemParam` in the main world during `Extract`.

## Future work

It should be possible to avoid needing to use `get_or_spawn` for the render commands, since now the `Commands`' `Entities` matches up with the world being executed on.

We need to determine how this interacts with https://github.com/bevyengine/bevy/pull/3519

It's theoretically possible to remove the need for the `value` method on `Extract`. However, that requires slightly changing the `SystemParam` interface, which would make it more complicated. That would probably mess up the `SystemState` api too.

## Todo

I still need to add doc comments to `Extract`.

---

## Changelog

### Changed

- The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase.

Resources on the render world can now be accessed using `ResMut` during extract.

### Removed

- `Commands::spawn_and_forget`. Use `Commands::get_or_spawn(e).insert_bundle(bundle)` instead

## Migration Guide

The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase. `Extract` takes a single type parameter, which is any system parameter (such as `Res`, `Query` etc.). It will extract this from the main world, and returns the result of this extraction when `value` is called on it.

For example, if previously your extract system looked like:

```rust
fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
    for cloud in clouds.iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

the new version would be:

```rust
fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
    for cloud in clouds.value().iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

The diff is:

```diff
--- a/src/clouds.rs
+++ b/src/clouds.rs
@@ -1,5 +1,5 @@
-fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
-    for cloud in clouds.iter() {
+fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
+    for cloud in clouds.value().iter() {
         commands.get_or_spawn(cloud).insert(Cloud);
     }
 }
```

You can now also access resources from the render world using the normal system parameters during `Extract`:

```rust
fn extract_assets(mut render_assets: ResMut<MyAssets>, source_assets: Extract<Res<MyAssets>>) {
    *render_assets = source_assets.clone();
}
```

Please note that all existing extract systems need to be updated to match this new style; even if they currently compile they will not run as expected. A warning will be emitted on a best-effort basis if this is not met.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Removed `const_vec2`/`const_vec3` and replaced with equivalent `.from_array`.

# Objective

Fixes #5112

## Solution

- `encase` needs to update to `glam` as well. See teoxoy/encase#4 on progress on that.

- `hexasphere` also needs to be updated, see OptimisticPeach/hexasphere#12.

# Objective

- Nightly clippy lints should be fixed before they get stable and break CI

## Solution

- fix new clippy lints

- ignore `significant_drop_in_scrutinee` since it isn't relevant in our loop https://github.com/rust-lang/rust-clippy/issues/8987

```rust
for line in io::stdin().lines() {
    ...
}
```

Co-authored-by: Jakob Hellermann <hellermann@sipgate.de>

# Objective

This PR reworks Bevy's Material system, making the user experience of defining Materials _much_ nicer. Bevy's previous material system leaves a lot to be desired:

* Materials require manually implementing the `RenderAsset` trait, which involves manually generating the bind group, handling gpu buffer data transfer, looking up image textures, etc. Even the simplest single-texture material involves writing ~80 unnecessary lines of code. This was never the long term plan.

* There are two material traits, which is confusing, hard to document, and often redundant: `Material` and `SpecializedMaterial`. `Material` implicitly implements `SpecializedMaterial`, and `SpecializedMaterial` is used in most high level apis to support both use cases. Most users shouldn't need to think about specialization at all (I consider it a "power-user tool"), so the fact that `SpecializedMaterial` is front-and-center in our apis is a miss.

* Implementing either material trait involves a lot of "type soup". The "prepared asset" parameter is particularly heinous: `&<Self as RenderAsset>::PreparedAsset`. Defining vertex and fragment shaders is also more verbose than it needs to be.

## Solution

Say hello to the new `Material` system:

```rust
#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}

impl Material for CoolMaterial {
    fn fragment_shader() -> ShaderRef {
        "cool_material.wgsl".into()
    }
}
```

That's it! This same material would have required [~80 lines of complicated "type heavy" code](https://github.com/bevyengine/bevy/blob/v0.7.0/examples/shader/shader_material.rs) in the old Material system. Now it is just 14 lines of simple, readable code.

This is thanks to a new consolidated `Material` trait and the new `AsBindGroup` trait / derive.

### The new `Material` trait

The old "split" `Material` and `SpecializedMaterial` traits have been removed in favor of a new consolidated `Material` trait. All of the functions on the trait are optional.

The difficulty of implementing `Material` has been reduced by simplifying dataflow and removing type complexity:

```rust
// Old
impl Material for CustomMaterial {
    fn fragment_shader(asset_server: &AssetServer) -> Option<Handle<Shader>> {
        Some(asset_server.load("custom_material.wgsl"))
    }

    fn alpha_mode(render_asset: &<Self as RenderAsset>::PreparedAsset) -> AlphaMode {
        render_asset.alpha_mode
    }
}

// New
impl Material for CustomMaterial {
    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn alpha_mode(&self) -> AlphaMode {
        self.alpha_mode
    }
}
```

Specialization is still supported, but it is hidden by default under the `specialize()` function (more on this later).

### The `AsBindGroup` trait / derive

The `Material` trait now requires the `AsBindGroup` derive. This can be implemented manually relatively easily, but deriving it will almost always be preferable.

Field attributes like `uniform` and `texture` are used to define which fields should be bindings, what their binding type is, and what index they should be bound at:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}
```

In WGSL shaders, the binding looks like this:

```wgsl
struct CoolMaterial {
    color: vec4<f32>;
};

[[group(1), binding(0)]]
var<uniform> material: CoolMaterial;

[[group(1), binding(1)]]
var color_texture: texture_2d<f32>;

[[group(1), binding(2)]]
var color_sampler: sampler;
```

Note that the "group" index is determined by the usage context. It is not defined in `AsBindGroup`. Bevy material bind groups are bound to group 1.

The following field-level attributes are supported:

* `uniform(BINDING_INDEX)`
    * The field will be converted to a shader-compatible type using the `ShaderType` trait, written to a `Buffer`, and bound as a uniform. `ShaderType` can also be derived for custom structs.
* `texture(BINDING_INDEX)`
    * This field's `Handle<Image>` will be used to look up the matching `Texture` gpu resource, which will be bound as a texture in shaders. The field will be assumed to implement `Into<Option<Handle<Image>>>`. In practice, most fields should be a `Handle<Image>` or `Option<Handle<Image>>`. If the value of an `Option<Handle<Image>>` is `None`, the new `FallbackImage` resource will be used instead. This attribute can be used in conjunction with a `sampler` binding attribute (with a different binding index).
* `sampler(BINDING_INDEX)`
    * Behaves exactly like the `texture` attribute, but sets the Image's sampler binding instead of the texture.

Note that fields without field-level binding attributes will be ignored.

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    this_field_is_ignored: String,
}
```

As mentioned above, `Option<Handle<Image>>` is also supported:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Option<Handle<Image>>,
}
```

This is useful if you want a texture to be optional. When the value is `None`, the `FallbackImage` will be used for the binding instead, which defaults to "pure white".

Field uniforms with the same binding index will be combined into a single binding:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[uniform(0)]
    roughness: f32,
}
```

In WGSL shaders, the binding would look like this:

```wgsl
struct CoolMaterial {
    color: vec4<f32>;
    roughness: f32;
};

[[group(1), binding(0)]]
var<uniform> material: CoolMaterial;
```

Some less common scenarios will require "struct-level" attributes. These are the currently supported struct-level attributes:

* `uniform(BINDING_INDEX, ConvertedShaderType)`
    * Similar to the field-level `uniform` attribute, but instead the entire `AsBindGroup` value is converted to `ConvertedShaderType`, which must implement `ShaderType`. This is useful if more complicated conversion logic is required.
* `bind_group_data(DataType)`
    * The `AsBindGroup` type will be converted to some `DataType` using `Into<DataType>` and stored as `AsBindGroup::Data` as part of the `AsBindGroup::as_bind_group` call. This is useful if data needs to be stored alongside the generated bind group, such as a unique identifier for a material's bind group. The most common use case for this attribute is "shader pipeline specialization".

The previous `CoolMaterial` example illustrating "combining multiple field-level uniform attributes with the same binding index" can also be equivalently represented with a single struct-level uniform attribute:

```rust
#[derive(AsBindGroup)]
#[uniform(0, CoolMaterialUniform)]
struct CoolMaterial {
    color: Color,
    roughness: f32,
}

#[derive(ShaderType)]
struct CoolMaterialUniform {
    color: Color,
    roughness: f32,
}

impl From<&CoolMaterial> for CoolMaterialUniform {
    fn from(material: &CoolMaterial) -> CoolMaterialUniform {
        CoolMaterialUniform {
            color: material.color,
            roughness: material.roughness,
        }
    }
}
```

### Material Specialization

Material shader specialization is now _much_ simpler:

```rust
#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
#[bind_group_data(CoolMaterialKey)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    is_red: bool,
}

#[derive(Copy, Clone, Hash, Eq, PartialEq)]
struct CoolMaterialKey {
    is_red: bool,
}

impl From<&CoolMaterial> for CoolMaterialKey {
    fn from(material: &CoolMaterial) -> CoolMaterialKey {
        CoolMaterialKey {
            is_red: material.is_red,
        }
    }
}

impl Material for CoolMaterial {
    fn fragment_shader() -> ShaderRef {
        "cool_material.wgsl".into()
    }

    fn specialize(
        pipeline: &MaterialPipeline<Self>,
        descriptor: &mut RenderPipelineDescriptor,
        layout: &MeshVertexBufferLayout,
        key: MaterialPipelineKey<Self>,
    ) -> Result<(), SpecializedMeshPipelineError> {
        if key.bind_group_data.is_red {
            let fragment = descriptor.fragment.as_mut().unwrap();
            fragment.shader_defs.push("IS_RED".to_string());
        }
        Ok(())
    }
}
```

Setting `bind_group_data` is not required for specialization (it defaults to `()`). Scenarios like "custom vertex attributes" also benefit from this system:

```rust
impl Material for CustomMaterial {
    fn vertex_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn specialize(
        pipeline: &MaterialPipeline<Self>,
        descriptor: &mut RenderPipelineDescriptor,
        layout: &MeshVertexBufferLayout,
        key: MaterialPipelineKey<Self>,
    ) -> Result<(), SpecializedMeshPipelineError> {
        let vertex_layout = layout.get_layout(&[
            Mesh::ATTRIBUTE_POSITION.at_shader_location(0),
            ATTRIBUTE_BLEND_COLOR.at_shader_location(1),
        ])?;
        descriptor.vertex.buffers = vec![vertex_layout];
        Ok(())
    }
}
```

### Ported `StandardMaterial` to the new `Material` system

Bevy's built-in PBR material uses the new Material system (including the AsBindGroup derive):

```rust
#[derive(AsBindGroup, Debug, Clone, TypeUuid)]
#[uuid = "7494888b-c082-457b-aacf-517228cc0c22"]
#[bind_group_data(StandardMaterialKey)]
#[uniform(0, StandardMaterialUniform)]
pub struct StandardMaterial {
    pub base_color: Color,
    #[texture(1)]
    #[sampler(2)]
    pub base_color_texture: Option<Handle<Image>>,
    /* other fields omitted for brevity */
```

### Ported Bevy examples to the new `Material` system

The overall complexity of Bevy's "custom shader examples" has gone down significantly. Take a look at the diffs if you want a dopamine spike.

Please note that while this PR has a net increase in "lines of code", most of those extra lines come from added documentation. There is a significant reduction in the overall complexity of the code (even accounting for the new derive logic).

---

## Changelog

### Added

* `AsBindGroup` trait and derive, which make it much easier to transfer data to the gpu and generate bind groups for a given type.

### Changed

* The old `Material` and `SpecializedMaterial` traits have been replaced by a consolidated (much simpler) `Material` trait. Materials no longer implement `RenderAsset`.

* `StandardMaterial` was ported to the new material system. There are no user-facing api changes to the `StandardMaterial` struct api, but it now implements `AsBindGroup` and `Material` instead of `RenderAsset` and `SpecializedMaterial`.

## Migration Guide

The Material system has been reworked to be much simpler. We've removed a lot of boilerplate with the new `AsBindGroup` derive and the `Material` trait is simpler as well!

### Bevy 0.7 (old)

```rust
#[derive(Debug, Clone, TypeUuid)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CustomMaterial {
    color: Color,
    color_texture: Handle<Image>,
}

#[derive(Clone)]
pub struct GpuCustomMaterial {
    _buffer: Buffer,
    bind_group: BindGroup,
}

impl RenderAsset for CustomMaterial {
    type ExtractedAsset = CustomMaterial;
    type PreparedAsset = GpuCustomMaterial;
    type Param = (
        SRes<RenderDevice>,
        SRes<MaterialPipeline<Self>>,
        SRes<RenderAssets<Image>>,
    );

    fn extract_asset(&self) -> Self::ExtractedAsset {
        self.clone()
    }

    fn prepare_asset(
        extracted_asset: Self::ExtractedAsset,
        (render_device, material_pipeline, gpu_images): &mut SystemParamItem<Self::Param>,
    ) -> Result<Self::PreparedAsset, PrepareAssetError<Self::ExtractedAsset>> {
        let color = Vec4::from_slice(&extracted_asset.color.as_linear_rgba_f32());

        let byte_buffer = [0u8; Vec4::SIZE.get() as usize];
        let mut buffer = encase::UniformBuffer::new(byte_buffer);
        buffer.write(&color).unwrap();

        let buffer = render_device.create_buffer_with_data(&BufferInitDescriptor {
            contents: buffer.as_ref(),
            label: None,
            usage: BufferUsages::UNIFORM | BufferUsages::COPY_DST,
        });

        let (texture_view, texture_sampler) = if let Some(result) = material_pipeline
            .mesh_pipeline
            .get_image_texture(gpu_images, &Some(extracted_asset.color_texture.clone()))
        {
            result
        } else {
            return Err(PrepareAssetError::RetryNextUpdate(extracted_asset));
        };

        let bind_group = render_device.create_bind_group(&BindGroupDescriptor {
            entries: &[
                BindGroupEntry {
                    binding: 0,
                    resource: buffer.as_entire_binding(),
                },
                BindGroupEntry {
                    binding: 1,
                    resource: BindingResource::TextureView(texture_view),
                },
                BindGroupEntry {
                    binding: 2,
                    resource: BindingResource::Sampler(texture_sampler),
                },
            ],
            label: None,
            layout: &material_pipeline.material_layout,
        });

        Ok(GpuCustomMaterial {
            _buffer: buffer,
            bind_group,
        })
    }
}

impl Material for CustomMaterial {
    fn fragment_shader(asset_server: &AssetServer) -> Option<Handle<Shader>> {
        Some(asset_server.load("custom_material.wgsl"))
    }

    fn bind_group(render_asset: &<Self as RenderAsset>::PreparedAsset) -> &BindGroup {
        &render_asset.bind_group
    }

    fn bind_group_layout(render_device: &RenderDevice) -> BindGroupLayout {
        render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
            entries: &[
                BindGroupLayoutEntry {
                    binding: 0,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Buffer {
                        ty: BufferBindingType::Uniform,
                        has_dynamic_offset: false,
                        min_binding_size: Some(Vec4::min_size()),
                    },
                    count: None,
                },
                BindGroupLayoutEntry {
                    binding: 1,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Texture {
                        multisampled: false,
                        sample_type: TextureSampleType::Float { filterable: true },
                        view_dimension: TextureViewDimension::D2Array,
                    },
                    count: None,
                },
                BindGroupLayoutEntry {
                    binding: 2,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Sampler(SamplerBindingType::Filtering),
                    count: None,
                },
            ],
            label: None,
        })
    }
}
```

### Bevy 0.8 (new)

```rust
impl Material for CustomMaterial {
    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }
}

#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CustomMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}
```

## Future Work

* Add support for more binding types (cubemaps, buffers, etc). This PR intentionally includes a bare minimum number of binding types to keep "reviewability" in check.

* Consider optionally eliding binding indices using binding names. `AsBindGroup` could pass in (optional?) reflection info as a "hint".

    * This would make it possible for the derive to do this:
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            #[uniform]
            color: Color,
            #[texture]
            #[sampler]
            color_texture: Option<Handle<Image>>,
            alpha_mode: AlphaMode,
        }
        ```
    * Or this
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            #[binding]
            color: Color,
            #[binding]
            color_texture: Option<Handle<Image>>,
            alpha_mode: AlphaMode,
        }
        ```
    * Or even this (if we flip to "include bindings by default")
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            color: Color,
            color_texture: Option<Handle<Image>>,
            #[binding(ignore)]
            alpha_mode: AlphaMode,
        }
        ```

* If we add the option to define custom draw functions for materials (which could be done in a type-erased way), I think that would be enough to support extra non-material bindings. Worth considering!

# Objective

Documents the `BufferVec` render resource.

`BufferVec` is a fairly low-level object that will likely be managed by a higher-level API (e.g. through [`encase`](https://github.com/bevyengine/bevy/issues/4272)) in the future. For now, since it is still used by some simple example crates (e.g. [bevy-vertex-pulling](https://github.com/superdump/bevy-vertex-pulling)), it will be helpful to provide some simple documentation on what `BufferVec` does.

## Solution

I looked through Discord discussion on `BufferVec`, and found [a comment](https://discord.com/channels/691052431525675048/953222550568173580/956596218857918464) by @superdump to be particularly helpful, in the general discussion around `encase`.

I have taken care to clarify where the data is stored (host-side), when the device-side buffer is created (through calls to `reserve`), and when data writes from host to device are scheduled (using `write_buffer` calls).

---

## Changelog

- Added doc string for `BufferVec` and two of its methods: `reserve` and `write_buffer`.

Co-authored-by: Brian Merchant <bhmerchant@gmail.com>

# Objective

Fixes #4958

There were 4 issues:

- this is not true in WASM and on macOS: f28b921209/examples/3d/split_screen.rs (L90)
  - ~~I made sure the system was running at least once~~
  - I'm sending the event on window creation
- in webgl, setting a viewport has impacts on other render passes
  - only in webgl and when there is a custom viewport, I added a render pass without a custom viewport
- shaderdef NO_ARRAY_TEXTURES_SUPPORT was not used by the 2d pipeline
  - webgl feature was used but not declared in bevy_sprite, I added it to the Cargo.toml
- shaderdef NO_STORAGE_BUFFERS_SUPPORT was not used by the 2d pipeline
  - I added it based on the BufferBindingType

The last commit changes the two last fixes to add the shaderdefs in the shader cache directly instead of needing to do it in each pipeline

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Most of our `Iterator` impls satisfy the requirements of `std::iter::FusedIterator`, which has internal specialization that optimizes `Iterator::fuse`. The std lib iterator combinators do have a few that rely on `fuse`, so this could optimize those use cases. I don't think we're using any of them in the engine itself, but beyond a light increase in compile time, it doesn't hurt to implement the trait.

## Solution

Implement the trait for all eligible iterators in first party crates. Also add a missing `ExactSizeIterator` on an iterator that could use it.
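As an illustration of what implementing these traits involves, here is a minimal sketch on a made-up iterator. `Countdown` is a hypothetical type, not one of the engine's iterators; the only requirement for `FusedIterator` is that `next` keeps returning `None` after the first `None`, and for `ExactSizeIterator` that `size_hint` is exact.

```rust
use std::iter::FusedIterator;

/// Hypothetical iterator yielding `remaining`, `remaining - 1`, ..., 1.
struct Countdown {
    remaining: usize,
}

impl Iterator for Countdown {
    type Item = usize;

    fn next(&mut self) -> Option<usize> {
        if self.remaining == 0 {
            return None;
        }
        let current = self.remaining;
        self.remaining -= 1;
        Some(current)
    }

    fn size_hint(&self) -> (usize, Option<usize>) {
        (self.remaining, Some(self.remaining))
    }
}

// Sound because `next` keeps returning `None` once `remaining` hits zero.
impl FusedIterator for Countdown {}

// Sound because `size_hint` reports the exact number of remaining items.
impl ExactSizeIterator for Countdown {}
```

Both impls are empty marker-style impls: the work is in verifying that the existing `next` and `size_hint` already satisfy the contracts.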

# Objective

At the moment all extra capabilities are disabled when validating shaders with naga:

c7c08f95cb/crates/bevy_render/src/render_resource/shader.rs (L146-L149)

This means these features can't be used even if the corresponding wgpu features are active.

## Solution

With these changes capabilities are now set corresponding to `RenderDevice::features`.

---

I have validated these changes for push constants with a project I am currently working on. However, Bevy does not yet support creating pipelines with push constants, so I was only able to verify that shaders are validated and compiled as expected.

# Objective

Fixes #4556

## Solution

StorageBuffer must use the Size of the std430 representation to calculate the buffer size, as the std430 representation is the data that will be written to it.

# Objective

- Make use of storage buffers, where they are available, for clustered forward bindings to support far more point lights in a scene

- Fixes #3605

- Based on top of #4079

This branch on an M1 Max can keep 60fps with about 2150 point lights of radius 1m in the Sponza scene where I've been testing. The bottleneck is mostly assigning lights to clusters which grows faster than linearly (I think 1000 lights was about 1.5ms and 5000 was 7.5ms). I have seen papers and presentations leveraging compute shaders that can get this up to over 1 million. That said, I think any further optimisations should probably be done in a separate PR.

## Solution

- Add `RenderDevice` to the `Material` and `SpecializedMaterial` trait `::key()` functions to allow setting flags on the keys depending on feature/limit availability

- Make `GpuPointLights` and `ViewClusterBuffers` into enums containing `UniformVec` and `StorageBuffer` variants. Implement the necessary API on them to make usage the same for both cases, and the only difference is at initialisation time.

- Appropriate shader defs in the shader code to handle the two cases

## Context on some decisions / open questions

- I'm using `max_storage_buffers_per_shader_stage >= 3` as a check to see if storage buffers are supported. I was thinking about diving into 'binding resource management' but it feels like we don't have enough use cases to understand the problem yet, and it is mostly a separate concern to this PR, so I think it should be handled separately.

- Should `ViewClusterBuffers` and `ViewClusterBindings` be merged, duplicating the count variables into the enum variants?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

related: https://github.com/bevyengine/bevy/pull/3289

In addition to validating shaders early when debug assertions are enabled, use the new [error scopes](https://gpuweb.github.io/gpuweb/#error-scopes) API when creating a shader module.

I chose to keep the early validation (and thereby parsing twice) when debug assertions are enabled, because it lets us handle errors ourselves and display them with pretty colors, while the error scopes API just gives us a string we can display.

This change pulls in `futures-util` as a new dependency for `future.now_or_never()`. I can inline that part of `futures-util` into `bevy_render` to keep the compilation time lower if that's preferred.

# Objective

- Fixes #3970

- To support Bevy's shader abstraction (shader defs, shader imports and hot shader reloading) for compute shaders, I have followed cart's advice and changed the `PipelineCache` to accommodate both compute and render pipelines.

## Solution

- renamed `RenderPipelineCache` to `PipelineCache`

- Cached Pipelines are now represented by an enum (render, compute)

- split the `SpecializedPipelines` into `SpecializedRenderPipelines` and `SpecializedComputePipelines`

- updated the game of life example

## Open Questions

- should `SpecializedRenderPipelines` and `SpecializedComputePipelines` be merged and how would we do that?

- should the `get_render_pipeline` and `get_compute_pipeline` methods be merged?

- is pipeline specialization for different entry points a good pattern

Co-authored-by: Kurt Kühnert <51823519+Ku95@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Add a helper for storage buffers similar to `UniformVec`

## Solution

- Add a `StorageBuffer<T, U>` where `T` is the main body of the shader struct without any final variable-sized array member, and `U` is the type of the items in a variable-sized array.

- Use `()` as the type for unwanted parts, e.g. `StorageBuffer<(), Vec4>::default()` would construct a binding that would work with `struct MyType { data: array<vec4<f32>>; }` in WGSL and `StorageBuffer<MyType, ()>::default()` would work with `struct MyType { ... }` in WGSL as long as there are no variable-sized arrays.

- Std430 requires that there is at most one variable-sized array in a storage buffer, that if there is one it is the last member of the binding, and that it has at least one item. `StorageBuffer` handles all of these constraints.

# Objective

- In the large majority of cases, users were calling `.unwrap()` immediately after `.get_resource`.

- Attempting to add more helpful error messages here resulted in endless manual boilerplate (see #3899 and the linked PRs).

## Solution

- Add an infallible variant named `.resource` and so on.

- Use these infallible variants over `.get_resource().unwrap()` across the code base.

## Notes

I did not provide equivalent methods on `WorldCell`, in favor of removing it entirely in #3939.

## Migration Guide

Infallible variants of `.get_resource` have been added that implicitly panic, rather than needing to be unwrapped.

Replace `world.get_resource::<Foo>().unwrap()` with `world.resource::<Foo>()`.
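The relationship between the fallible and infallible accessors can be sketched with a toy, engine-free world. `ToyWorld` is a hypothetical type keyed by `TypeId`, and the panic message is an assumption for illustration; it is not Bevy's `World` implementation.

```rust
use std::any::{type_name, Any, TypeId};
use std::collections::HashMap;

/// Hypothetical, minimal resource store keyed by type.
#[derive(Default)]
struct ToyWorld {
    resources: HashMap<TypeId, Box<dyn Any>>,
}

impl ToyWorld {
    /// Inserts (or overwrites) the resource of type `R`.
    fn insert_resource<R: Any>(&mut self, value: R) {
        self.resources.insert(TypeId::of::<R>(), Box::new(value));
    }

    /// Fallible accessor: `None` if the resource was never inserted.
    fn get_resource<R: Any>(&self) -> Option<&R> {
        self.resources
            .get(&TypeId::of::<R>())
            .and_then(|boxed| boxed.downcast_ref::<R>())
    }

    /// Infallible accessor: panics with a message naming the missing type.
    fn resource<R: Any>(&self) -> &R {
        match self.get_resource::<R>() {
            Some(value) => value,
            None => panic!("Requested resource {} does not exist", type_name::<R>()),
        }
    }
}
```

Centralising the panic in one method is what allows a helpful error message to be written once, instead of at every `.get_resource().unwrap()` call site.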

## Impact

- `.unwrap` search results before: 1084

- `.unwrap` search results after: 942

- internal `unwrap_or_else` calls added: 4

- trivial unwrap calls removed from tests and code: 146

- uses of the new `try_get_resource` API: 11

- percentage of the time the unwrapping API was used internally: 93%

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick and dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
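The de-duplication described in the last bullet can be sketched roughly like this. Everything here is a simplified assumption for illustration: attributes are plain strings, `ToyPipelineCache` is hypothetical, and a pipeline is just an integer id. The point is only that the cache is keyed on the final per-pipeline layout rather than on the mesh layout.

```rust
use std::collections::HashMap;

/// Hypothetical cache: maps the FINAL vertex buffer layout to a pipeline id.
#[derive(Default)]
struct ToyPipelineCache {
    by_vertex_layout: HashMap<Vec<&'static str>, usize>,
}

impl ToyPipelineCache {
    /// Returns a pipeline id, or `None` if the mesh lacks a required attribute.
    fn specialize(
        &mut self,
        mesh: &[&'static str],
        required: &[&'static str],
    ) -> Option<usize> {
        if !required.iter().all(|attr| mesh.contains(attr)) {
            return None;
        }
        // Only the attributes the pipeline asked for end up in the key, so
        // meshes with different extra attributes share one entry.
        let next_id = self.by_vertex_layout.len();
        Some(*self.by_vertex_layout.entry(required.to_vec()).or_insert(next_id))
    }

    fn pipeline_count(&self) -> usize {
        self.by_vertex_layout.len()
    }
}

fn main() {
    let mut cache = ToyPipelineCache::default();
    let required = ["position", "normal"];

    let a = cache.specialize(&["position", "normal", "uv"], &required);
    let b = cache.specialize(&["position", "normal", "other_vec2"], &required);

    // Both meshes resolve to the same (position, normal) pipeline.
    assert_eq!(a, b);
    println!("pipelines created: {}", cache.pipeline_count());
}
```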

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust
impl SpecializedMeshPipeline for MeshPipeline {
    type Key = MeshPipelineKey;

    fn specialize(
        &self,
        key: Self::Key,
        layout: &MeshVertexBufferLayout,
    ) -> RenderPipelineDescriptor {
        let mut vertex_attributes = vec![
            Mesh::ATTRIBUTE_POSITION.at_shader_location(0),
            Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),
            Mesh::ATTRIBUTE_UV_0.at_shader_location(2),
        ];

        let mut shader_defs = Vec::new();
        if layout.contains(Mesh::ATTRIBUTE_TANGENT) {
            shader_defs.push(String::from("VERTEX_TANGENTS"));
            vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));
        }

        let vertex_buffer_layout = layout
            .get_layout(&vertex_attributes)
            .expect("Mesh is missing a vertex attribute");
```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and uvs. We even got to remove `HAS_TANGENTS` from `MeshPipelineKey` and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in peoples' hands asap.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This enables shaders to (optionally) define their import path inside their source. This has a number of benefits:

1. enables users to define their own custom paths directly in their assets

2. moves the import path "close" to the asset instead of centralized in the plugin definition, which seems "better" to me.

3. makes "internal hot shader reloading" way more reasonable (see #3966)

4. logically opens the door to importing "parts" of a shader by defining "import_path blocks".

```wgsl
#define_import_path bevy_pbr::mesh_struct

struct Mesh {
    model: mat4x4<f32>;
    inverse_transpose_model: mat4x4<f32>;
    // 'flags' is a bit field indicating various options. u32 is 32 bits so we have up to 32 options.
    flags: u32;
};

let MESH_FLAGS_SHADOW_RECEIVER_BIT: u32 = 1u;
```
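To make the idea concrete, here is a minimal sketch of how a loader could pull the declared path out of the shader source. The function name `declared_import_path` is an assumption for illustration and is not taken from Bevy; the only thing borrowed from the description above is the `#define_import_path` directive itself.

```rust
/// Returns the path declared by the first `#define_import_path` line, if any.
/// Hypothetical helper; Bevy's real shader processing may differ.
fn declared_import_path(source: &str) -> Option<&str> {
    source.lines().find_map(|line| {
        let mut words = line.split_whitespace();
        match (words.next(), words.next()) {
            (Some("#define_import_path"), Some(path)) => Some(path),
            _ => None,
        }
    })
}

fn main() {
    let source = "#define_import_path bevy_pbr::mesh_struct\n\nstruct Mesh {\n};\n";
    match declared_import_path(source) {
        Some(path) => println!("shader registers itself as `{}`", path),
        None => println!("shader declares no import path"),
    }
}
```

Because the path lives in the asset, a plugin no longer has to know it up front; it can be discovered when the shader is loaded.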

For some keys, it is too expensive to hash them on every lookup. Historically in Bevy, we have regrettably done the "wrong" thing in these cases (pre-computing hashes, then re-hashing them) because Rust's built in hashed collections don't give us the tools we need to do otherwise. Doing this is "wrong" because two different values can result in the same hash. Hashed collections generally get around this by falling back to equality checks on hash collisions. You can't do that if the key _is_ the hash. Additionally, re-hashing a hash increases the odds of collision!

#3959 needs pre-hashing to be viable, so I decided to finally properly solve the problem. The solution involves two different changes:

1. A new generalized "pre-hashing" solution in bevy_utils: `Hashed<T>` types, which store a value alongside a pre-computed hash, and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). `PreHashMap` is just an alias for a normal HashMap that uses `Hashed<T>` as the key and a new `PassHash` implementation as the Hasher.

2. Replacing the `std::collections` re-exports in `bevy_utils` with equivalent `hashbrown` impls. Avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. The latest version of `hashbrown` adds support for the `entry_ref` api, so we can move to that in preparation for an std migration, if that's the direction they seem to be going in. Note that adding hashbrown doesn't increase our dependency count because it was already in our tree.
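The first item can be sketched with the standard library alone. This is an approximation under stated assumptions, not the code in `bevy_utils`: it uses std's `HashMap` rather than `hashbrown`, the hasher is named `PassHasher` here, and the `raw_entry_mut` part is not shown. What it does show is why keeping the value next to its hash matters: equality still falls back to the value when two hashes collide.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

/// A value stored next to its pre-computed hash.
#[derive(Debug, Clone, PartialEq, Eq)]
struct Hashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> Hashed<T> {
    /// Hashes `value` once, up front.
    fn new(value: T) -> Self {
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Self { hash: hasher.finish(), value }
    }
}

// Hashing a `Hashed<T>` only feeds the stored u64 to the hasher;
// the (expensive) value is never re-hashed.
impl<T> Hash for Hashed<T> {
    fn hash<H: Hasher>(&self, state: &mut H) {
        state.write_u64(self.hash);
    }
}

/// Hasher that passes the pre-computed u64 straight through.
#[derive(Default)]
struct PassHasher(u64);

impl Hasher for PassHasher {
    fn write(&mut self, _bytes: &[u8]) {
        panic!("PassHasher only accepts a pre-computed u64");
    }
    fn write_u64(&mut self, value: u64) {
        self.0 = value;
    }
    fn finish(&self) -> u64 {
        self.0
    }
}

/// Alias for a normal map keyed by `Hashed<K>` with the pass-through hasher.
type PreHashMap<K, V> = HashMap<Hashed<K>, V, BuildHasherDefault<PassHasher>>;

fn main() {
    let mut map: PreHashMap<Vec<u32>, &str> = PreHashMap::default();
    let key = Hashed::new(vec![1, 2, 3]); // hashed once, here
    map.insert(key.clone(), "layout A");
    println!("{:?}", map.get(&key));
}
```

Using a bare `u64` as the key would lose the equality fallback, which is exactly the failure mode described above.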

In addition to providing these core tools, I also ported the "table identity hashing" in `bevy_ecs` to `raw_entry_mut`, which was a particularly egregious case.

The biggest outstanding case is `AssetPathId`, which stores a pre-hash. We need AssetPathId to be cheaply clone-able (and ideally Copy), but `Hashed<AssetPath>` requires ownership of the AssetPath, which makes cloning ids way more expensive. We could consider doing `Hashed<Arc<AssetPath>>`, but cloning an arc is still a non-trivial expense that needs to be considered. I would like to handle this in a separate PR. And given that we will be re-evaluating the Bevy Assets implementation in the very near future, I'd prefer to hold off until after that conversation is concluded.

# Objective

- `WgpuOptions` is mutated to be updated with the actual device limits and features, but this information is readily available to both the main and render worlds through the `RenderDevice`, which has `.limits()` and `.features()` methods

- Information about the adapter (its name, the backend in use, etc.) was not exposed, but it has clear use cases for deciding which rendering code to use. For example, something may work well on AMD GPUs but poorly on Intel GPUs, or work well in Vulkan but poorly in DX12.

## Solution

- Stop mutating `WgpuOptions` and don't insert the updated values into the main and render worlds

- Return `AdapterInfo` from `initialize_renderer` and insert it into the main and render worlds

- Use `RenderDevice` limits in the lighting code that was using `WgpuOptions.limits`.

- Renamed `WgpuOptions` to `WgpuSettings`

What it says on the tin.

This has got more to do with making `clippy` slightly more *quiet* than it does with changing anything that might greatly impact readability or performance.

That said, deriving `Default` for a couple of structs is a nice easy win.

# Objective

The docs for `{VertexState, FragmentState}::entry_point` stipulate that the entry point function in the shader must return void. This seems to be specific to GLSL; WGSL has no `void` type and its entry point functions return values that describe their output.

## Solution

Remove the mention of the `void` return type.

## Objective

When printing shader validation error messages, we didn't print the sources and error message text, which led to some confusing error messages.

```cs
error:
   ┌─ wgsl:15:11
   │
15 │     return material.color + 1u;
   │            ^^^^^^^^^^^^^^^^^^^^ naga::Expression [11]
```

## Solution

New error message:

```cs
error: Entry point fragment at Vertex is invalid
   ┌─ wgsl:15:11
   │
15 │     return material.color + 1u;
   │            ^^^^^^^^^^^^^^^^^^^^ naga::Expression [11]
   │
   = Expression [11] is invalid
   = Operation Add can't work with [8] and [10]
```

# Objective

In order to create a GLSL shader, we must provide the `naga::ShaderStage` type, which is not exported by Bevy, meaning a user would have to manually include naga just to access this type.

`pub fn from_glsl(source: impl Into<Cow<'static, str>>, stage: naga::ShaderStage) -> Shader {`

## Solution

Re-export `naga::ShaderStage` from `render_resources`

#3457 adds the `doc_markdown` clippy lint, which checks doc comments to make sure code identifiers are escaped with backticks. This causes a lot of lint errors, so this is one of a number of PRs that will fix those lint errors one crate at a time.

This PR fixes lints in the `bevy_render` crate.

# Objective

The current 2d rendering is specialized to render sprites. We need a generic way to render 2d items, using meshes and materials like we have for 3d.

## Solution

I cloned a good part of `bevy_pbr` into `bevy_sprite/src/mesh2d`, removed lighting and pbr itself, adapted it to 2d rendering, added a `ColorMaterial`, and modified the sprite rendering to break batches around 2d meshes.

~~The PR is a bit crude; I tried to change as little as I could in both the parts copied from 3d and the current sprite rendering to make reviewing easier. In the future, I expect we could make the sprite rendering a normal 2d material, cleanly integrated with the rest.~~ _edit: see <https://github.com/bevyengine/bevy/pull/3460#issuecomment-1003605194>_

## Remaining work

- ~~don't require mesh normals~~ _out of scope_

- ~~add an example~~ _done_

- support 2d meshes & materials in the UI?

- bikeshed names (I didn't think hard about naming, please check if it's fine)

## Remaining questions

- ~~should we add a depth buffer to 2d now that there are 2d meshes?~~ _let's revisit that when we have an opaque render phase_

- ~~should we add MSAA support to the sprites, or remove it from the 2d meshes?~~ _I added MSAA to sprites since it's really needed for 2d meshes_

- ~~how to customize vertex attributes?~~ _#3120_

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- I want to port `bevy_egui` to Bevy main and only reuse re-exports from Bevy

## Solution

- Add exports for `BufferBinding` and `BufferDescriptor`

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

# Objective

- Our crevice is still called "crevice", which we can't use for a release

- Users would need to use our "crevice" directly to be able to use the derive macro

## Solution

- Rename crevice to bevy_crevice, and crevice-derive to bevy-crevice-derive

- Re-export it from bevy_render, and use it from bevy_render everywhere

- Fix derive macro to work either from bevy_render, from bevy_crevice, or from bevy

## Remaining

- It is currently re-exported as `bevy::render::bevy_crevice`, is it the path we want?

- After a brief suggestion to Cart, I changed the version to follow Bevy version instead of crevice, do we want that?

- Crevice README.md needs to be updated

- in the `Cargo.toml`, there are a few things to change. How do we want to change them? How do we keep attributions to original Crevice?

```
authors = ["Lucien Greathouse <me@lpghatguy.com>"]
documentation = "https://docs.rs/crevice"
homepage = "https://github.com/LPGhatguy/crevice"
repository = "https://github.com/LPGhatguy/crevice"
```

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

### Problem

- shader processing errors are not displayed

- during hot reloading when encountering a shader with errors, the whole app crashes

### Solution

- log `error!`s for shader processing errors

- when `cfg(debug_assertions)` is enabled (i.e. you're running in `debug` mode), parse shaders before passing them to wgpu. This lets us handle errors early.
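A minimal sketch of the debug-only early check might look like the following. `parse_shader` is a deliberately trivial, hypothetical stand-in for a real shader parser (it only counts braces), and `check_before_upload` is an invented name; the only part taken from the description is gating the check on `cfg(debug_assertions)` and logging instead of crashing.

```rust
/// Hypothetical validator standing in for a real shader parser.
/// It only checks that braces are balanced in number.
fn parse_shader(source: &str) -> Result<(), String> {
    let opens = source.matches('{').count();
    let closes = source.matches('}').count();
    if opens == closes {
        Ok(())
    } else {
        Err(format!("unbalanced braces: {} '{{' vs {} '}}'", opens, closes))
    }
}

/// Returns `true` if the shader may be handed on to the GPU backend.
fn check_before_upload(source: &str) -> bool {
    // `cfg!(debug_assertions)` is true in debug builds by default, so the
    // extra parse costs nothing in release builds.
    if cfg!(debug_assertions) {
        if let Err(message) = parse_shader(source) {
            // Log the problem and skip the shader instead of crashing.
            eprintln!("error: failed to process shader: {}", message);
            return false;
        }
    }
    true
}

fn main() {
    println!("valid shader accepted: {}", check_before_upload("fn main() {}"));
    println!("broken shader accepted: {}", check_before_upload("fn main() {"));
}
```

In a hot-reloading setup, returning early like this would let the previously working shader stay in place while the error is reported.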

# Objective

- I want to be able to use `#ifdef` and other processor directives in an imported shader

## Solution

- Process imported shader strings
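The idea can be sketched with a toy preprocessor in which imported source goes through the same function as the importing source, so `#ifdef` blocks inside an import are honored. This is an illustrative assumption, not Bevy's shader processor: `process`, the `imports` map, and the error strings are invented here, only `#ifdef`/`#endif`/`#import` are handled, and cyclic imports are not detected.

```rust
use std::collections::{HashMap, HashSet};

/// Toy preprocessor: resolves `#import` and `#ifdef`/`#endif`.
/// Imported strings are processed recursively with the same shader defs.
fn process(
    source: &str,
    defs: &HashSet<&str>,
    imports: &HashMap<&str, &str>,
) -> Result<String, String> {
    let mut out = String::new();
    // Stack of "is the current #ifdef scope active?" flags.
    let mut scopes = vec![true];
    for line in source.lines() {
        let active = *scopes.last().unwrap();
        let mut words = line.split_whitespace();
        match (words.next(), words.next()) {
            (Some("#ifdef"), Some(def)) => scopes.push(active && defs.contains(def)),
            (Some("#endif"), _) => {
                if scopes.len() == 1 {
                    return Err("unmatched #endif".to_string());
                }
                scopes.pop();
            }
            (Some("#import"), Some(path)) if active => {
                let imported = imports
                    .get(path)
                    .ok_or_else(|| format!("unknown import {}", path))?;
                // The key step: the imported string is processed too.
                out.push_str(&process(imported, defs, imports)?);
            }
            _ if active => {
                out.push_str(line);
                out.push('\n');
            }
            _ => {}
        }
    }
    if scopes.len() != 1 {
        return Err("missing #endif".to_string());
    }
    Ok(out)
}

fn main() {
    let mut imports = HashMap::new();
    imports.insert("lib", "#ifdef FAST\nfast();\n#endif\nalways();");
    let mut defs = HashSet::new();
    defs.insert("FAST");
    print!("{}", process("#import lib\nmain();", &defs, &imports).unwrap());
}
```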

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

# Objective

- Only bevy_render should depend directly on wgpu

- This helps to make sure bevy_render re-exports everything needed from wgpu

## Solution

- Remove bevy_pbr, bevy_sprite and bevy_ui dependency on wgpu

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

# Objective

Fixes #3352

Fixes #3208

## Solution

- Update wgpu to 0.12

- Update naga to 0.8

- Resolve compilation errors

- Remove `[[block]]` from WGSL shaders (because it is deprecated and wgpu can no longer parse it)

- Replace `elseif` with `else if` in pbr.wgsl

# Objective

- There are a few warnings when building Bevy docs for dead links

- CI seems to not catch those warnings when it should

## Solution

- Enable doc CI on all Bevy workspace

- Fix warnings

- Also noticed plugin GilrsPlugin was not added anymore when feature was enabled

First commit to check that CI would actually fail with it: https://github.com/bevyengine/bevy/runs/4532652688?check_suite_focus=true

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

This makes the [New Bevy Renderer](#2535) the default (and only) renderer. The new renderer isn't _quite_ ready for the final release yet, but I want as many people as possible to start testing it so we can identify bugs and address feedback prior to release.

The examples are all ported over and operational with a few exceptions:

* I removed a good portion of the examples in the `shader` folder. We still have some work to do in order to make these examples possible / ergonomic / worthwhile: #3120 and "high level shader material plugins" are the big ones. This is a temporary measure.

* Temporarily removed the multiple_windows example: doing this properly in the new renderer will require the upcoming "render targets" changes. Same goes for the render_to_texture example.

* Removed z_sort_debug: entity visibility sort info is no longer available in app logic. We could do this on the "render app" side, but I don't consider it a priority.