## Objective

A major critique of Bevy at the moment is how boilerplatey it is to

compose (and read) entity hierarchies:

```rust

commands

.spawn(Foo)

.with_children(|p| {

p.spawn(Bar).with_children(|p| {

p.spawn(Baz);

});

p.spawn(Bar).with_children(|p| {

p.spawn(Baz);

});

});

```

There is also currently no good way to statically define and return an

entity hierarchy from a function. Instead, people often do this

"internally" with a Commands function that returns nothing, making it

impossible to spawn the hierarchy in other cases (direct World spawns,

ChildSpawner, etc).

Additionally, because this style of API results in creating the

hierarchy bits _after_ the initial spawn of a bundle, it causes ECS

archetype changes (and often expensive table moves).

Because children are initialized after the fact, we also can't count

them to pre-allocate space. This means each time a child inserts itself,

it has a high chance of overflowing the currently allocated capacity in

the `RelationshipTarget` collection, causing literal worst-case

reallocations.

We can do better!

## Solution

The Bundle trait has been extended to support an optional

`BundleEffect`. This is applied directly to World immediately _after_

the Bundle has fully inserted. Note that this is

[intentionally](https://github.com/bevyengine/bevy/discussions/16920)

_not done via a deferred Command_, which would require repeatedly

copying each remaining subtree of the hierarchy to a new command as we

walk down the tree (_not_ good performance).

This allows us to implement the new `SpawnRelated` trait for all

`RelationshipTarget` impls, which looks like this in practice:

```rust

world.spawn((

Foo,

Children::spawn((

Spawn((

Bar,

Children::spawn(Spawn(Baz)),

)),

Spawn((

Bar,

Children::spawn(Spawn(Baz)),

)),

))

))

```

`Children::spawn` returns `SpawnRelatedBundle<Children, L:

SpawnableList>`, which is a `Bundle` that inserts `Children`

(preallocated to the size of the `SpawnableList::size_hint()`).

`Spawn<B: Bundle>(pub B)` implements `SpawnableList` with a size of 1.

`SpawnableList` is also implemented for tuples of `SpawnableList` (same

general pattern as the Bundle impl).

There are currently three built-in `SpawnableList` implementations:

```rust

world.spawn((

Foo,

Children::spawn((

Spawn(Name::new("Child1")),

SpawnIter(["Child2", "Child3"].into_iter().map(Name::new),

SpawnWith(|parent: &mut ChildSpawner| {

parent.spawn(Name::new("Child4"));

parent.spawn(Name::new("Child5"));

})

)),

))

```

We get the benefits of "structured init", but we have nice flexibility

where it is required!

Some readers' first instinct might be to try to remove the need for the

`Spawn` wrapper. This is impossible in the Rust type system, as a tuple

of "child Bundles to be spawned" and a "tuple of Components to be added

via a single Bundle" is ambiguous in the Rust type system. There are two

ways to resolve that ambiguity:

1. By adding support for variadics to the Rust type system (removing the

need for nested bundles). This is out of scope for this PR :)

2. Using wrapper types to resolve the ambiguity (this is what I did in

this PR).

For the single-entity spawn cases, `Children::spawn_one` does also

exist, which removes the need for the wrapper:

```rust

world.spawn((

Foo,

Children::spawn_one(Bar),

))

```

## This works for all Relationships

This API isn't just for `Children` / `ChildOf` relationships. It works

for any relationship type, and they can be mixed and matched!

```rust

world.spawn((

Foo,

Observers::spawn((

Spawn(Observer::new(|trigger: Trigger<FuseLit>| {})),

Spawn(Observer::new(|trigger: Trigger<Exploded>| {})),

)),

OwnerOf::spawn(Spawn(Bar))

Children::spawn(Spawn(Baz))

))

```

## Macros

While `Spawn` is necessary to satisfy the type system, we _can_ remove

the need to express it via macros. The example above can be expressed

more succinctly using the new `children![X]` macro, which internally

produces `Children::spawn(Spawn(X))`:

```rust

world.spawn((

Foo,

children![

(

Bar,

children![Baz],

),

(

Bar,

children![Baz],

),

]

))

```

There is also a `related!` macro, which is a generic version of the

`children!` macro that supports any relationship type:

```rust

world.spawn((

Foo,

related!(Children[

(

Bar,

related!(Children[Baz]),

),

(

Bar,

related!(Children[Baz]),

),

])

))

```

## Returning Hierarchies from Functions

Thanks to these changes, the following pattern is now possible:

```rust

fn button(text: &str, color: Color) -> impl Bundle {

(

Node {

width: Val::Px(300.),

height: Val::Px(100.),

..default()

},

BackgroundColor(color),

children![

Text::new(text),

]

)

}

fn ui() -> impl Bundle {

(

Node {

width: Val::Percent(100.0),

height: Val::Percent(100.0),

..default(),

},

children![

button("hello", BLUE),

button("world", RED),

]

)

}

// spawn from a system

fn system(mut commands: Commands) {

commands.spawn(ui());

}

// spawn directly on World

world.spawn(ui());

```

## Additional Changes and Notes

* `Bundle::from_components` has been split out into

`BundleFromComponents::from_components`, enabling us to implement

`Bundle` for types that cannot be "taken" from the ECS (such as the new

`SpawnRelatedBundle`).

* The `NoBundleEffect` trait (which implements `BundleEffect`) is

implemented for empty tuples (and tuples of empty tuples), which allows

us to constrain APIs to only accept bundles that do not have effects.

This is critical because the current batch spawn APIs cannot efficiently

apply BundleEffects in their current form (as doing so in-place could

invalidate the cached raw pointers). We could consider allocating a

buffer of the effects to be applied later, but that does have

performance implications that could offset the balance and value of the

batched APIs (and would likely require some refactors to the underlying

code). I've decided to be conservative here. We can consider relaxing

that requirement on those APIs later, but that should be done in a

followup imo.

* I've ported a few examples to illustrate real-world usage. I think in

a followup we should port all examples to the `children!` form whenever

possible (and for cases that require things like SpawnIter, use the raw

APIs).

* Some may ask "why not use the `Relationship` to spawn (ex:

`ChildOf::spawn(Foo)`) instead of the `RelationshipTarget` (ex:

`Children::spawn(Spawn(Foo))`)?". That _would_ allow us to remove the

`Spawn` wrapper. I've explicitly chosen to disallow this pattern.

`Bundle::Effect` has the ability to create _significant_ weirdness.

Things in `Bundle` position look like components. For example

`world.spawn((Foo, ChildOf::spawn(Bar)))` _looks and reads_ like Foo is

a child of Bar. `ChildOf` is in Foo's "component position" but it is not

a component on Foo. This is a huge problem. Now that `Bundle::Effect`

exists, we should be _very_ principled about keeping the "weird and

unintuitive behavior" to a minimum. Things that read like components

_should be the components they appear to be".

## Remaining Work

* The macros are currently trivially implemented using macro_rules and

are currently limited to the max tuple length. They will require a

proc_macro implementation to work around the tuple length limit.

## Next Steps

* Port the remaining examples to use `children!` where possible and raw

`Spawn` / `SpawnIter` / `SpawnWith` where the flexibility of the raw API

is required.

## Migration Guide

Existing spawn patterns will continue to work as expected.

Manual Bundle implementations now require a `BundleEffect` associated

type. Exisiting bundles would have no bundle effect, so use `()`.

Additionally `Bundle::from_components` has been moved to the new

`BundleFromComponents` trait.

```rust

// Before

unsafe impl Bundle for X {

unsafe fn from_components<T, F>(ctx: &mut T, func: &mut F) -> Self {

}

/* remaining bundle impl here */

}

// After

unsafe impl Bundle for X {

type Effect = ();

/* remaining bundle impl here */

}

unsafe impl BundleFromComponents for X {

unsafe fn from_components<T, F>(ctx: &mut T, func: &mut F) -> Self {

}

}

```

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: Gino Valente <49806985+MrGVSV@users.noreply.github.com>

Co-authored-by: Emerson Coskey <emerson@coskey.dev>

This pr uses the `extern crate self as` trick to make proc macros behave

the same way inside and outside bevy.

# Objective

- Removes noise introduced by `crate as` in the whole bevy repo.

- Fixes#17004.

- Hardens proc macro path resolution.

## TODO

- [x] `BevyManifest` needs cleanup.

- [x] Cleanup remaining `crate as`.

- [x] Add proper integration tests to the ci.

## Notes

- `cargo-manifest-proc-macros` is written by me and based/inspired by

the old `BevyManifest` implementation and

[`bkchr/proc-macro-crate`](https://github.com/bkchr/proc-macro-crate).

- What do you think about the new integration test machinery I added to

the `ci`?

More and better integration tests can be added at a later stage.

The goal of these integration tests is to simulate an actual separate

crate that uses bevy. Ideally they would lightly touch all bevy crates.

## Testing

- Needs RA test

- Needs testing from other users

- Others need to run at least `cargo run -p ci integration-test` and

verify that they work.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Didn't remove WgpuWrapper. Not sure if it's needed or not still.

## Testing

- Did you test these changes? If so, how? Example runner

- Are there any parts that need more testing? Web (portable atomics

thingy?), DXC.

## Migration Guide

- Bevy has upgraded to [wgpu

v24](https://github.com/gfx-rs/wgpu/blob/trunk/CHANGELOG.md#v2400-2025-01-15).

- When using the DirectX 12 rendering backend, the new priority system

for choosing a shader compiler is as follows:

- If the `WGPU_DX12_COMPILER` environment variable is set at runtime, it

is used

- Else if the new `statically-linked-dxc` feature is enabled, a custom

version of DXC will be statically linked into your app at compile time.

- Else Bevy will look in the app's working directory for

`dxcompiler.dll` and `dxil.dll` at runtime.

- Else if they are missing, Bevy will fall back to FXC (not recommended)

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: François Mockers <francois.mockers@vleue.com>

# Objective

- publish script copy the license files to all subcrates, meaning that

all publish are dirty. this breaks git verification of crates

- the order and list of crates to publish is manually maintained,

leading to error. cargo 1.84 is more strict and the list is currently

wrong

## Solution

- duplicate all the licenses to all crates and remove the

`--allow-dirty` flag

- instead of a manual list of crates, get it from `cargo package

--workspace`

- remove the `--no-verify` flag to... verify more things?

PR #17684 broke occlusion culling because it neglected to set the

indirect parameter offsets for the late mesh preprocessing stage if the

work item buffers were already set. This PR moves the update of those

values to a new function, `init_work_item_buffers`, which is

unconditionally called for every phase every frame.

Note that there's some complexity in order to handle the case in which

occlusion culling was enabled on one frame and disabled on the next, or

vice versa. This was necessary in order to make the occlusion culling

toggle in the `occlusion_culling` example work again.

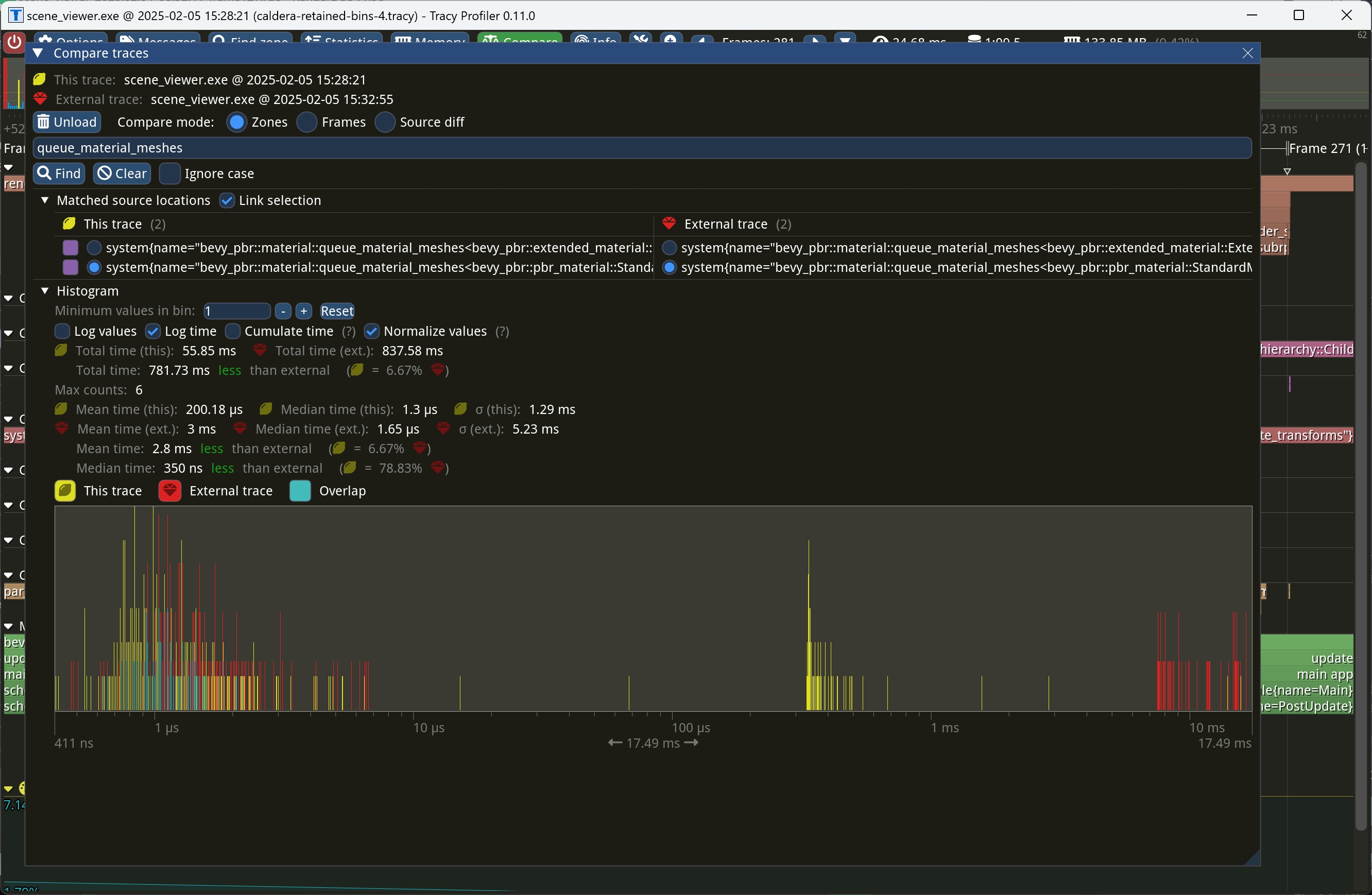

This PR makes Bevy keep entities in bins from frame to frame if they

haven't changed. This reduces the time spent in `queue_material_meshes`

and related functions to near zero for static geometry. This patch uses

the same change tick technique that #17567 uses to detect when meshes

have changed in such a way as to require re-binning.

In order to quickly find the relevant bin for an entity when that entity

has changed, we introduce a new type of cache, the *bin key cache*. This

cache stores a mapping from main world entity ID to cached bin key, as

well as the tick of the most recent change to the entity. As we iterate

through the visible entities in `queue_material_meshes`, we check the

cache to see whether the entity needs to be re-binned. If it doesn't,

then we mark it as clean in the `valid_cached_entity_bin_keys` bit set.

If it does, then we insert it into the correct bin, and then mark the

entity as clean. At the end, all entities not marked as clean are

removed from the bins.

This patch has a dramatic effect on the rendering performance of most

benchmarks, as it effectively eliminates `queue_material_meshes` from

the profile. Note, however, that it generally simultaneously regresses

`batch_and_prepare_binned_render_phase` by a bit (not by enough to

outweigh the win, however). I believe that's because, before this patch,

`queue_material_meshes` put the bins in the CPU cache for

`batch_and_prepare_binned_render_phase` to use, while with this patch,

`batch_and_prepare_binned_render_phase` must load the bins into the CPU

cache itself.

On Caldera, this reduces the time spent in `queue_material_meshes` from

5+ ms to 0.2ms-0.3ms. Note that benchmarking on that scene is very noisy

right now because of https://github.com/bevyengine/bevy/issues/17535.

# Objective

- Make use of the new `weak_handle!` macro added in

https://github.com/bevyengine/bevy/pull/17384

## Solution

- Migrate bevy from `Handle::weak_from_u128` to the new `weak_handle!`

macro that takes a random UUID

- Deprecate `Handle::weak_from_u128`, since there are no remaining use

cases that can't also be addressed by constructing the type manually

## Testing

- `cargo run -p ci -- test`

---

## Migration Guide

Replace `Handle::weak_from_u128` with `weak_handle!` and a random UUID.

# Objective

Fixes#17662

## Solution

Moved `Item` and `fetch` from `WorldQuery` to `QueryData`, and adjusted

their implementations accordingly.

Currently, documentation related to `fetch` is written under

`WorldQuery`. It would be more appropriate to move it to the `QueryData`

documentation for clarity.

I am not very experienced with making contributions. If there are any

mistakes or areas for improvement, I would appreciate any suggestions

you may have.

## Migration Guide

The `WorldQuery::Item` type and `WorldQuery::fetch` method have been

moved to `QueryData`, as they were not useful for `QueryFilter` types.

---------

Co-authored-by: Chris Russell <8494645+chescock@users.noreply.github.com>

# Objective

Simplify and expand the API for `QueryState`.

`QueryState` has a lot of methods that mirror those on `Query`. These

are then multiplied by variants that take `&World`, `&mut World`, and

`UnsafeWorldCell`. In addition, many of them have `_manual` variants

that take `&QueryState` and avoid calling `update_archetypes()`. Not all

of the combinations exist, however, so some operations are not possible.

## Solution

Introduce methods to get a `Query` from a `QueryState`. That will reduce

duplication between the types, and ensure that the full `Query` API is

always available for `QueryState`.

Introduce methods on `Query` that consume the query to return types with

the full `'w` lifetime. This avoids issues with borrowing where things

like `query_state.query(&world).get(entity)` don't work because they

borrow from the temporary `Query`.

Finally, implement `Copy` for read-only `Query`s. `get_inner` and

`iter_inner` currently take `&self`, so changing them to consume `self`

would be a breaking change. By making `Query: Copy`, they can consume a

copy of `self` and continue to work.

The consuming methods also let us simplify the implementation of methods

on `Query`, by doing `fn foo(&self) { self.as_readonly().foo_inner() }`

and `fn foo_mut(&mut self) { self.reborrow().foo_inner() }`. That

structure makes it more difficult to accidentally extend lifetimes,

since the safe `as_readonly()` and `reborrow()` methods shrink them

appropriately. The optimizer is able to see that they are both identity

functions and inline them, so there should be no performance cost.

Note that this change would conflict with #15848. If `QueryState` is

stored as a `Cow`, then the consuming methods cannot be implemented, and

`Copy` cannot be implemented.

## Future Work

The next step is to mark the methods on `QueryState` as `#[deprecated]`,

and move the implementations into `Query`.

## Migration Guide

`Query::to_readonly` has been renamed to `Query::as_readonly`.

# Cold Specialization

## Objective

An ongoing part of our quest to retain everything in the render world,

cold-specialization aims to cache pipeline specialization so that

pipeline IDs can be recomputed only when necessary, rather than every

frame. This approach reduces redundant work in stable scenes, while

still accommodating scenarios in which materials, views, or visibility

might change, as well as unlocking future optimization work like

retaining render bins.

## Solution

Queue systems are split into a specialization system and queue system,

the former of which only runs when necessary to compute a new pipeline

id. Pipelines are invalidated using a combination of change detection

and ECS ticks.

### The difficulty with change detection

Detecting “what changed” can be tricky because pipeline specialization

depends not only on the entity’s components (e.g., mesh, material, etc.)

but also on which view (camera) it is rendering in. In other words, the

cache key for a given pipeline id is a view entity/render entity pair.

As such, it's not sufficient simply to react to change detection in

order to specialize -- an entity could currently be out of view or could

be rendered in the future in camera that is currently disabled or hasn't

spawned yet.

### Why ticks?

Ticks allow us to ensure correctness by allowing us to compare the last

time a view or entity was updated compared to the cached pipeline id.

This ensures that even if an entity was out of view or has never been

seen in a given camera before we can still correctly determine whether

it needs to be re-specialized or not.

## Testing

TODO: Tested a bunch of different examples, need to test more.

## Migration Guide

TODO

- `AssetEvents` has been moved into the `PostUpdate` schedule.

---------

Co-authored-by: Patrick Walton <pcwalton@mimiga.net>

We were calling `clear()` on the work item buffer table, which caused us

to deallocate all the CPU side buffers. This patch changes the logic to

instead just clear the buffers individually, but leave their backing

stores. This has two consequences:

1. To effectively retain work item buffers from frame to frame, we need

to key them off `RetainedViewEntity` values and not the render world

`Entity`, which is transient. This PR changes those buffers accordingly.

2. We need to clean up work item buffers that belong to views that went

away. Amusingly enough, we actually have a system,

`delete_old_work_item_buffers`, that tries to do this already, but it

wasn't doing anything because the `clear_batched_gpu_instance_buffers`

system already handled that. This patch actually makes the

`delete_old_work_item_buffers` system useful, by removing the clearing

behavior from `clear_batched_gpu_instance_buffers` and instead making

`delete_old_work_item_buffers` delete buffers corresponding to

nonexistent views.

On Bistro, this PR improves the performance of

`batch_and_prepare_binned_render_phase` from 61.2 us to 47.8 us, a 28%

speedup.

Data for the other batches is only accessed by the GPU, not the CPU, so

it's a waste of time and memory to store information relating to those

other batches.

On Bistro, this reduces time spent in

`batch_and_prepare_binned_render_phase` from 85.9 us to 61.2 us, a 40%

speedup.

# Objective

- Most of the `*MeshBuilder` classes are not implementing `Reflect`

## Solution

- Implementing `Reflect` for all `*MeshBuilder` were is possible.

- Make sure all `*MeshBuilder` implements `Default`.

- Adding new `MeshBuildersPlugin` that registers all `*MeshBuilder`

types.

## Testing

- `cargo run -p ci`

- Tested some examples like `3d_scene` just in case something was

broken.

# Objective

- Fix the atmosphere LUT parameterization in the aerial -view and

sky-view LUTs

- Correct the light accumulation according to a ray-marched reference

- Avoid negative values of the sun disk illuminance when the sun disk is

below the horizon

## Solution

- Adding a Newton's method iteration to `fast_sqrt` function

- Switched to using `fast_acos_4` for better precision of the sun angle

towards the horizon (view mu angle = 0)

- Simplified the function for mapping to and from the Sky View UV

coordinates by removing an if statement and correctly apply the method

proposed by the [Hillarie

paper](https://sebh.github.io/publications/egsr2020.pdf) detailed in

section 5.3 and 5.4.

- Replaced the `ray_dir_ws.y` term with a shadow factor in the

`sample_sun_illuminance` function that correctly approximates the sun

disk occluded by the earth from any view point

## Testing

- Ran the atmosphere and SSAO examples to make sure the shaders still

compile and run as expected.

---

## Showcase

<img width="1151" alt="showcase-img"

src="https://github.com/user-attachments/assets/de875533-42bd-41f9-9fd0-d7cc57d6e51c"

/>

---------

Co-authored-by: Emerson Coskey <emerson@coskey.dev>

# Objective

- Linking to a specific AsBindGroup attribute is hard because it doesn't

use any headers and all the docs is in a giant block

## Solution

- Make each attribute it's own sub-header so they can be easily linked

---

## Showcase

Here's what the rustdoc output looks like with this change

## Notes

I kept the bullet point so the text is still indented like before. Not

sure if we should keep that or not

Minor improvement to the render_resource doc comments; specifically, the

gpu buffer types

- makes them consistently reference each other

- reorders them to be alphabetical

- removes duplicated entries

*Occlusion culling* allows the GPU to skip the vertex and fragment

shading overhead for objects that can be quickly proved to be invisible

because they're behind other geometry. A depth prepass already

eliminates most fragment shading overhead for occluded objects, but the

vertex shading overhead, as well as the cost of testing and rejecting

fragments against the Z-buffer, is presently unavoidable for standard

meshes. We currently perform occlusion culling only for meshlets. But

other meshes, such as skinned meshes, can benefit from occlusion culling

too in order to avoid the transform and skinning overhead for unseen

meshes.

This commit adapts the same [*two-phase occlusion culling*] technique

that meshlets use to Bevy's standard 3D mesh pipeline when the new

`OcclusionCulling` component, as well as the `DepthPrepass` component,

are present on the camera. It has these steps:

1. *Early depth prepass*: We use the hierarchical Z-buffer from the

previous frame to cull meshes for the initial depth prepass, effectively

rendering only the meshes that were visible in the last frame.

2. *Early depth downsample*: We downsample the depth buffer to create

another hierarchical Z-buffer, this time with the current view

transform.

3. *Late depth prepass*: We use the new hierarchical Z-buffer to test

all meshes that weren't rendered in the early depth prepass. Any meshes

that pass this check are rendered.

4. *Late depth downsample*: Again, we downsample the depth buffer to

create a hierarchical Z-buffer in preparation for the early depth

prepass of the next frame. This step is done after all the rendering, in

order to account for custom phase items that might write to the depth

buffer.

Note that this patch has no effect on the per-mesh CPU overhead for

occluded objects, which remains high for a GPU-driven renderer due to

the lack of `cold-specialization` and retained bins. If

`cold-specialization` and retained bins weren't on the horizon, then a

more traditional approach like potentially visible sets (PVS) or low-res

CPU rendering would probably be more efficient than the GPU-driven

approach that this patch implements for most scenes. However, at this

point the amount of effort required to implement a PVS baking tool or a

low-res CPU renderer would probably be greater than landing

`cold-specialization` and retained bins, and the GPU driven approach is

the more modern one anyway. It does mean that the performance

improvements from occlusion culling as implemented in this patch *today*

are likely to be limited, because of the high CPU overhead for occluded

meshes.

Note also that this patch currently doesn't implement occlusion culling

for 2D objects or shadow maps. Those can be addressed in a follow-up.

Additionally, note that the techniques in this patch require compute

shaders, which excludes support for WebGL 2.

This PR is marked experimental because of known precision issues with

the downsampling approach when applied to non-power-of-two framebuffer

sizes (i.e. most of them). These precision issues can, in rare cases,

cause objects to be judged occluded that in fact are not. (I've never

seen this in practice, but I know it's possible; it tends to be likelier

to happen with small meshes.) As a follow-up to this patch, we desire to

switch to the [SPD-based hi-Z buffer shader from the Granite engine],

which doesn't suffer from these problems, at which point we should be

able to graduate this feature from experimental status. I opted not to

include that rewrite in this patch for two reasons: (1) @JMS55 is

planning on doing the rewrite to coincide with the new availability of

image atomic operations in Naga; (2) to reduce the scope of this patch.

A new example, `occlusion_culling`, has been added. It demonstrates

objects becoming quickly occluded and disoccluded by dynamic geometry

and shows the number of objects that are actually being rendered. Also,

a new `--occlusion-culling` switch has been added to `scene_viewer`, in

order to make it easy to test this patch with large scenes like Bistro.

[*two-phase occlusion culling*]:

https://medium.com/@mil_kru/two-pass-occlusion-culling-4100edcad501

[Aaltonen SIGGRAPH 2015]:

https://www.advances.realtimerendering.com/s2015/aaltonenhaar_siggraph2015_combined_final_footer_220dpi.pdf

[Some literature]:

https://gist.github.com/reduz/c5769d0e705d8ab7ac187d63be0099b5?permalink_comment_id=5040452#gistcomment-5040452

[SPD-based hi-Z buffer shader from the Granite engine]:

https://github.com/Themaister/Granite/blob/master/assets/shaders/post/hiz.comp

## Migration guide

* When enqueuing a custom mesh pipeline, work item buffers are now

created with

`bevy::render::batching::gpu_preprocessing::get_or_create_work_item_buffer`,

not `PreprocessWorkItemBuffers::new`. See the

`specialized_mesh_pipeline` example.

## Showcase

Occlusion culling example:

Bistro zoomed out, before occlusion culling:

Bistro zoomed out, after occlusion culling:

In this scene, occlusion culling reduces the number of meshes Bevy has

to render from 1591 to 585.

# Objective

Implement `Eq` and `Hash` for the `BindGroup` and `BindGroupLayout`

wrappers.

## Solution

Implement based on the same assumption that the ID is unique, for

consistency with `PartialEq`.

## Testing

None; this should be straightforward. If there's an issue that would be

a design one.

Implement procedural atmospheric scattering from [Sebastien Hillaire's

2020 paper](https://sebh.github.io/publications/egsr2020.pdf). This

approach should scale well even down to mobile hardware, and is

physically accurate.

## Co-author: @mate-h

He helped massively with getting this over the finish line, ensuring

everything was physically correct, correcting several places where I had

misunderstood or misapplied the paper, and improving the performance in

several places as well. Thanks!

## Credits

@aevyrie: helped find numerous bugs and improve the example to best show

off this feature :)

Built off of @mtsr's original branch, which handled the transmittance

lut (arguably the most important part)

## Showcase:

## For followup

- Integrate with pcwalton's volumetrics code

- refactor/reorganize for better integration with other effects

- have atmosphere transmittance affect directional lights

- add support for generating skybox/environment map

---------

Co-authored-by: Emerson Coskey <56370779+EmersonCoskey@users.noreply.github.com>

Co-authored-by: atlv <email@atlasdostal.com>

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

Co-authored-by: Emerson Coskey <coskey@emerlabs.net>

Co-authored-by: Máté Homolya <mate.homolya@gmail.com>

# Objective

- Contributes to #16877

## Solution

- Moved `hashbrown`, `foldhash`, and related types out of `bevy_utils`

and into `bevy_platform_support`

- Refactored the above to match the layout of these types in `std`.

- Updated crates as required.

## Testing

- CI

---

## Migration Guide

- The following items were moved out of `bevy_utils` and into

`bevy_platform_support::hash`:

- `FixedState`

- `DefaultHasher`

- `RandomState`

- `FixedHasher`

- `Hashed`

- `PassHash`

- `PassHasher`

- `NoOpHash`

- The following items were moved out of `bevy_utils` and into

`bevy_platform_support::collections`:

- `HashMap`

- `HashSet`

- `bevy_utils::hashbrown` has been removed. Instead, import from

`bevy_platform_support::collections` _or_ take a dependency on

`hashbrown` directly.

- `bevy_utils::Entry` has been removed. Instead, import from

`bevy_platform_support::collections::hash_map` or

`bevy_platform_support::collections::hash_set` as appropriate.

- All of the above equally apply to `bevy::utils` and

`bevy::platform_support`.

## Notes

- I left `PreHashMap`, `PreHashMapExt`, and `TypeIdMap` in `bevy_utils`

as they might be candidates for micro-crating. They can always be moved

into `bevy_platform_support` at a later date if desired.

# Objective

- Make the function signature for `ComponentHook` less verbose

## Solution

- Refactored `Entity`, `ComponentId`, and `Option<&Location>` into a new

`HookContext` struct.

## Testing

- CI

---

## Migration Guide

Update the function signatures for your component hooks to only take 2

arguments, `world` and `context`. Note that because `HookContext` is

plain data with all members public, you can use de-structuring to

simplify migration.

```rust

// Before

fn my_hook(

mut world: DeferredWorld,

entity: Entity,

component_id: ComponentId,

) { ... }

// After

fn my_hook(

mut world: DeferredWorld,

HookContext { entity, component_id, caller }: HookContext,

) { ... }

```

Likewise, if you were discarding certain parameters, you can use `..` in

the de-structuring:

```rust

// Before

fn my_hook(

mut world: DeferredWorld,

entity: Entity,

_: ComponentId,

) { ... }

// After

fn my_hook(

mut world: DeferredWorld,

HookContext { entity, .. }: HookContext,

) { ... }

```

# Objective

Fixes#14708

Also fixes some commands not updating tracked location.

## Solution

`ObserverTrigger` has a new `caller` field with the

`track_change_detection` feature;

hooks take an additional caller parameter (which is `Some(…)` or `None`

depending on the feature).

## Testing

See the new tests in `src/observer/mod.rs`

---

## Showcase

Observers now know from where they were triggered (if

`track_change_detection` is enabled):

```rust

world.observe(move |trigger: Trigger<OnAdd, Foo>| {

println!("Added Foo from {}", trigger.caller());

});

```

## Migration

- hooks now take an additional `Option<&'static Location>` argument

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

This commit makes Bevy use change detection to only update

`RenderMaterialInstances` and `RenderMeshMaterialIds` when meshes have

been added, changed, or removed. `extract_mesh_materials`, the system

that extracts these, now follows the pattern that

`extract_meshes_for_gpu_building` established.

This improves frame time of `many_cubes` from 3.9ms to approximately

3.1ms, which slightly surpasses the performance of Bevy 0.14.

(Resubmitted from #16878 to clean up history.)

---------

Co-authored-by: Charlotte McElwain <charlotte.c.mcelwain@gmail.com>

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

`bevy_ecs`'s `system` module is something of a grab bag, and *very*

large. This is particularly true for the `system_param` module, which is

more than 2k lines long!

While it could be defensible to put `Res` and `ResMut` there (lol no

they're in change_detection.rs, obviously), it doesn't make any sense to

put the `Resource` trait there. This is confusing to navigate (and

painful to work on and review).

## Solution

- Create a root level `bevy_ecs/resource.rs` module to mirror

`bevy_ecs/component.rs`

- move the `Resource` trait to that module

- move the `Resource` derive macro to that module as well (Rust really

likes when you pun on the names of the derive macro and trait and put

them in the same path)

- fix all of the imports

## Notes to reviewers

- We could probably move more stuff into here, but I wanted to keep this

PR as small as possible given the absurd level of import changes.

- This PR is ground work for my upcoming attempts to store resource data

on components (resources-as-entities). Splitting this code out will make

the work and review a bit easier, and is the sort of overdue refactor

that's good to do as part of more meaningful work.

## Testing

cargo build works!

## Migration Guide

`bevy_ecs::system::Resource` has been moved to

`bevy_ecs::resource::Resource`.

Fixes#17412

## Objective

`Parent` uses the "has a X" naming convention. There is increasing

sentiment that we should use the "is a X" naming convention for

relationships (following #17398). This leaves `Children` as-is because

there is prevailing sentiment that `Children` is clearer than `ParentOf`

in many cases (especially when treating it like a collection).

This renames `Parent` to `ChildOf`.

This is just the implementation PR. To discuss the path forward, do so

in #17412.

## Migration Guide

- The `Parent` component has been renamed to `ChildOf`.

# Objective

Diagnostics for labels don't suggest how to best implement them.

```

error[E0277]: the trait bound `Label: ScheduleLabel` is not satisfied

--> src/main.rs:15:35

|

15 | let mut sched = Schedule::new(Label);

| ------------- ^^^^^ the trait `ScheduleLabel` is not implemented for `Label`

| |

| required by a bound introduced by this call

|

= help: the trait `ScheduleLabel` is implemented for `Interned<(dyn ScheduleLabel + 'static)>`

note: required by a bound in `bevy_ecs::schedule::Schedule::new`

--> /home/vj/workspace/rust/bevy/crates/bevy_ecs/src/schedule/schedule.rs:297:28

|

297 | pub fn new(label: impl ScheduleLabel) -> Self {

| ^^^^^^^^^^^^^ required by this bound in `Schedule::new`

```

## Solution

`diagnostics::on_unimplemented` and `diagnostics::do_not_recommend`

## Showcase

New error message:

```

error[E0277]: the trait bound `Label: ScheduleLabel` is not satisfied

--> src/main.rs:15:35

|

15 | let mut sched = Schedule::new(Label);

| ------------- ^^^^^ the trait `ScheduleLabel` is not implemented for `Label`

| |

| required by a bound introduced by this call

|

= note: consider annotating `Label` with `#[derive(ScheduleLabel)]`

note: required by a bound in `bevy_ecs::schedule::Schedule::new`

--> /home/vj/workspace/rust/bevy/crates/bevy_ecs/src/schedule/schedule.rs:297:28

|

297 | pub fn new(label: impl ScheduleLabel) -> Self {

| ^^^^^^^^^^^^^ required by this bound in `Schedule::new`

```

# Objective

The existing `RelationshipSourceCollection` uses `Vec` as the only

possible backing for our relationships. While a reasonable choice,

benchmarking use cases might reveal that a different data type is better

or faster.

For example:

- Not all relationships require a stable ordering between the

relationship sources (i.e. children). In cases where we a) have many

such relations and b) don't care about the ordering between them, a hash

set is likely a better datastructure than a `Vec`.

- The number of children-like entities may be small on average, and a

`smallvec` may be faster

## Solution

- Implement `RelationshipSourceCollection` for `EntityHashSet`, our

custom entity-optimized `HashSet`.

-~~Implement `DoubleEndedIterator` for `EntityHashSet` to make things

compile.~~

- This implementation was cursed and very surprising.

- Instead, by moving the iterator type on `RelationshipSourceCollection`

from an erased RPTIT to an explicit associated type we can add a trait

bound on the offending methods!

- Implement `RelationshipSourceCollection` for `SmallVec`

## Testing

I've added a pair of new tests to make sure this pattern compiles

successfully in practice!

## Migration Guide

`EntityHashSet` and `EntityHashMap` are no longer re-exported in

`bevy_ecs::entity` directly. If you were not using `bevy_ecs` / `bevy`'s

`prelude`, you can access them through their now-public modules,

`hash_set` and `hash_map` instead.

## Notes to reviewers

The `EntityHashSet::Iter` type needs to be public for this impl to be

allowed. I initially renamed it to something that wasn't ambiguous and

re-exported it, but as @Victoronz pointed out, that was somewhat

unidiomatic.

In

1a8564898f,

I instead made the `entity_hash_set` public (and its `entity_hash_set`)

sister public, and removed the re-export. I prefer this design (give me

module docs please), but it leads to a lot of churn in this PR.

Let me know which you'd prefer, and if you'd like me to split that

change out into its own micro PR.

# Objective

- Contributes to #16877

## Solution

- Initial creation of `bevy_platform_support` crate.

- Moved `bevy_utils::Instant` into new `bevy_platform_support` crate.

- Moved `portable-atomic`, `portable-atomic-util`, and

`critical-section` into new `bevy_platform_support` crate.

## Testing

- CI

---

## Showcase

Instead of needing code like this to import an `Arc`:

```rust

#[cfg(feature = "portable-atomic")]

use portable_atomic_util::Arc;

#[cfg(not(feature = "portable-atomic"))]

use alloc::sync::Arc;

```

We can now use:

```rust

use bevy_platform_support::sync::Arc;

```

This applies to many other types, but the goal is overall the same:

allowing crates to use `std`-like types without the boilerplate of

conditional compilation and platform-dependencies.

## Migration Guide

- Replace imports of `bevy_utils::Instant` with

`bevy_platform_support::time::Instant`

- Replace imports of `bevy::utils::Instant` with

`bevy::platform_support::time::Instant`

## Notes

- `bevy_platform_support` hasn't been reserved on `crates.io`

- ~~`bevy_platform_support` is not re-exported from `bevy` at this time.

It may be worthwhile exporting this crate, but I am unsure of a

reasonable name to export it under (`platform_support` may be a bit

wordy for user-facing).~~

- I've included an implementation of `Instant` which is suitable for

`no_std` platforms that are not Wasm for the sake of eliminating feature

gates around its use. It may be a controversial inclusion, so I'm happy

to remove it if required.

- There are many other items (`spin`, `bevy_utils::Sync(Unsafe)Cell`,

etc.) which should be added to this crate. I have kept the initial scope

small to demonstrate utility without making this too unwieldy.

---------

Co-authored-by: TimJentzsch <TimJentzsch@users.noreply.github.com>

Co-authored-by: Chris Russell <8494645+chescock@users.noreply.github.com>

Co-authored-by: François Mockers <francois.mockers@vleue.com>

This adds support for one-to-many non-fragmenting relationships (with

planned paths for fragmenting and non-fragmenting many-to-many

relationships). "Non-fragmenting" means that entities with the same

relationship type, but different relationship targets, are not forced

into separate tables (which would cause "table fragmentation").

Functionally, this fills a similar niche as the current Parent/Children

system. The biggest differences are:

1. Relationships have simpler internals and significantly improved

performance and UX. Commands and specialized APIs are no longer

necessary to keep everything in sync. Just spawn entities with the

relationship components you want and everything "just works".

2. Relationships are generalized. Bevy can provide additional built in

relationships, and users can define their own.

**REQUEST TO REVIEWERS**: _please don't leave top level comments and

instead comment on specific lines of code. That way we can take

advantage of threaded discussions. Also dont leave comments simply

pointing out CI failures as I can read those just fine._

## Built on top of what we have

Relationships are implemented on top of the Bevy ECS features we already

have: components, immutability, and hooks. This makes them immediately

compatible with all of our existing (and future) APIs for querying,

spawning, removing, scenes, reflection, etc. The fewer specialized APIs

we need to build, maintain, and teach, the better.

## Why focus on one-to-many non-fragmenting first?

1. This allows us to improve Parent/Children relationships immediately,

in a way that is reasonably uncontroversial. Switching our hierarchy to

fragmenting relationships would have significant performance

implications. ~~Flecs is heavily considering a switch to non-fragmenting

relations after careful considerations of the performance tradeoffs.~~

_(Correction from @SanderMertens: Flecs is implementing non-fragmenting

storage specialized for asset hierarchies, where asset hierarchies are

many instances of small trees that have a well defined structure)_

2. Adding generalized one-to-many relationships is currently a priority

for the [Next Generation Scene / UI

effort](https://github.com/bevyengine/bevy/discussions/14437).

Specifically, we're interested in building reactions and observers on

top.

## The changes

This PR does the following:

1. Adds a generic one-to-many Relationship system

3. Ports the existing Parent/Children system to Relationships, which now

lives in `bevy_ecs::hierarchy`. The old `bevy_hierarchy` crate has been

removed.

4. Adds on_despawn component hooks

5. Relationships can opt-in to "despawn descendants" behavior, meaning

that the entire relationship hierarchy is despawned when

`entity.despawn()` is called. The built in Parent/Children hierarchies

enable this behavior, and `entity.despawn_recursive()` has been removed.

6. `world.spawn` now applies commands after spawning. This ensures that

relationship bookkeeping happens immediately and removes the need to

manually flush. This is in line with the equivalent behaviors recently

added to the other APIs (ex: insert).

7. Removes the ValidParentCheckPlugin (system-driven / poll based) in

favor of a `validate_parent_has_component` hook.

## Using Relationships

The `Relationship` trait looks like this:

```rust

pub trait Relationship: Component + Sized {

type RelationshipSources: RelationshipSources<Relationship = Self>;

fn get(&self) -> Entity;

fn from(entity: Entity) -> Self;

}

```

A relationship is a component that:

1. Is a simple wrapper over a "target" Entity.

2. Has a corresponding `RelationshipSources` component, which is a

simple wrapper over a collection of entities. Every "target entity"

targeted by a "source entity" with a `Relationship` has a

`RelationshipSources` component, which contains every "source entity"

that targets it.

For example, the `Parent` component (as it currently exists in Bevy) is

the `Relationship` component and the entity containing the Parent is the

"source entity". The entity _inside_ the `Parent(Entity)` component is

the "target entity". And that target entity has a `Children` component

(which implements `RelationshipSources`).

In practice, the Parent/Children relationship looks like this:

```rust

#[derive(Relationship)]

#[relationship(relationship_sources = Children)]

pub struct Parent(pub Entity);

#[derive(RelationshipSources)]

#[relationship_sources(relationship = Parent)]

pub struct Children(Vec<Entity>);

```

The Relationship and RelationshipSources derives automatically implement

Component with the relevant configuration (namely, the hooks necessary

to keep everything in sync).

The most direct way to add relationships is to spawn entities with

relationship components:

```rust

let a = world.spawn_empty().id();

let b = world.spawn(Parent(a)).id();

assert_eq!(world.entity(a).get::<Children>().unwrap(), &[b]);

```

There are also convenience APIs for spawning more than one entity with

the same relationship:

```rust

world.spawn_empty().with_related::<Children>(|s| {

s.spawn_empty();

s.spawn_empty();

})

```

The existing `with_children` API is now a simpler wrapper over

`with_related`. This makes this change largely non-breaking for existing

spawn patterns.

```rust

world.spawn_empty().with_children(|s| {

s.spawn_empty();

s.spawn_empty();

})

```

There are also other relationship APIs, such as `add_related` and

`despawn_related`.

## Automatic recursive despawn via the new on_despawn hook

`RelationshipSources` can opt-in to "despawn descendants" behavior,

which will despawn all related entities in the relationship hierarchy:

```rust

#[derive(RelationshipSources)]

#[relationship_sources(relationship = Parent, despawn_descendants)]

pub struct Children(Vec<Entity>);

```

This means that `entity.despawn_recursive()` is no longer required.

Instead, just use `entity.despawn()` and the relevant related entities

will also be despawned.

To despawn an entity _without_ despawning its parent/child descendants,

you should remove the `Children` component first, which will also remove

the related `Parent` components:

```rust

entity

.remove::<Children>()

.despawn()

```

This builds on the on_despawn hook introduced in this PR, which is fired

when an entity is despawned (before other hooks).

## Relationships are the source of truth

`Relationship` is the _single_ source of truth component.

`RelationshipSources` is merely a reflection of what all the

`Relationship` components say. By embracing this, we are able to

significantly improve the performance of the system as a whole. We can

rely on component lifecycles to protect us against duplicates, rather

than needing to scan at runtime to ensure entities don't already exist

(which results in quadratic runtime). A single source of truth gives us

constant-time inserts. This does mean that we cannot directly spawn

populated `Children` components (or directly add or remove entities from

those components). I personally think this is a worthwhile tradeoff,

both because it makes the performance much better _and_ because it means

theres exactly one way to do things (which is a philosophy we try to

employ for Bevy APIs).

As an aside: treating both sides of the relationship as "equivalent

source of truth relations" does enable building simple and flexible

many-to-many relationships. But this introduces an _inherent_ need to

scan (or hash) to protect against duplicates.

[`evergreen_relations`](https://github.com/EvergreenNest/evergreen_relations)

has a very nice implementation of the "symmetrical many-to-many"

approach. Unfortunately I think the performance issues inherent to that

approach make it a poor choice for Bevy's default relationship system.

## Followup Work

* Discuss renaming `Parent` to `ChildOf`. I refrained from doing that in

this PR to keep the diff reasonable, but I'm personally biased toward

this change (and using that naming pattern generally for relationships).

* [Improved spawning

ergonomics](https://github.com/bevyengine/bevy/discussions/16920)

* Consider adding relationship observers/triggers for "relationship

targets" whenever a source is added or removed. This would replace the

current "hierarchy events" system, which is unused upstream but may have

existing users downstream. I think triggers are the better fit for this

than a buffered event queue, and would prefer not to add that back.

* Fragmenting relations: My current idea hinges on the introduction of

"value components" (aka: components whose type _and_ value determines

their ComponentId, via something like Hashing / PartialEq). By labeling

a Relationship component such as `ChildOf(Entity)` as a "value

component", `ChildOf(e1)` and `ChildOf(e2)` would be considered

"different components". This makes the transition between fragmenting

and non-fragmenting a single flag, and everything else continues to work

as expected.

* Many-to-many support

* Non-fragmenting: We can expand Relationship to be a list of entities

instead of a single entity. I have largely already written the code for

this.

* Fragmenting: With the "value component" impl mentioned above, we get

many-to-many support "for free", as it would allow inserting multiple

copies of a Relationship component with different target entities.

Fixes#3742 (If this PR is merged, I think we should open more targeted

followup issues for the work above, with a fresh tracking issue free of

the large amount of less-directed historical context)

Fixes#17301Fixes#12235Fixes#15299Fixes#15308

## Migration Guide

* Replace `ChildBuilder` with `ChildSpawnerCommands`.

* Replace calls to `.set_parent(parent_id)` with

`.insert(Parent(parent_id))`.

* Replace calls to `.replace_children()` with `.remove::<Children>()`

followed by `.add_children()`. Note that you'll need to manually despawn

any children that are not carried over.

* Replace calls to `.despawn_recursive()` with `.despawn()`.

* Replace calls to `.despawn_descendants()` with

`.despawn_related::<Children>()`.

* If you have any calls to `.despawn()` which depend on the children

being preserved, you'll need to remove the `Children` component first.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

Fixes https://github.com/bevyengine/bevy/issues/17111

## Solution

Move `#![warn(clippy::allow_attributes,

clippy::allow_attributes_without_reason)]` to the workspace `Cargo.toml`

## Testing

Lots of CI testing, and local testing too.

---------

Co-authored-by: Benjamin Brienen <benjamin.brienen@outlook.com>

This commit allows Bevy to use `multi_draw_indirect_count` for drawing

meshes. The `multi_draw_indirect_count` feature works just like

`multi_draw_indirect`, but it takes the number of indirect parameters

from a GPU buffer rather than specifying it on the CPU.

Currently, the CPU constructs the list of indirect draw parameters with

the instance count for each batch set to zero, uploads the resulting

buffer to the GPU, and dispatches a compute shader that bumps the

instance count for each mesh that survives culling. Unfortunately, this

is inefficient when we support `multi_draw_indirect_count`. Draw

commands corresponding to meshes for which all instances were culled

will remain present in the list when calling

`multi_draw_indirect_count`, causing overhead. Proper use of

`multi_draw_indirect_count` requires eliminating these empty draw

commands.

To address this inefficiency, this PR makes Bevy fully construct the

indirect draw commands on the GPU instead of on the CPU. Instead of

writing instance counts to the draw command buffer, the mesh

preprocessing shader now writes them to a separate *indirect metadata

buffer*. A second compute dispatch known as the *build indirect

parameters* shader runs after mesh preprocessing and converts the

indirect draw metadata into actual indirect draw commands for the GPU.

The build indirect parameters shader operates on a batch at a time,

rather than an instance at a time, and as such each thread writes only 0

or 1 indirect draw parameters, simplifying the current logic in

`mesh_preprocessing`, which currently has to have special cases for the

first mesh in each batch. The build indirect parameters shader emits

draw commands in a tightly packed manner, enabling maximally efficient

use of `multi_draw_indirect_count`.

Along the way, this patch switches mesh preprocessing to dispatch one

compute invocation per render phase per view, instead of dispatching one

compute invocation per view. This is preparation for two-phase occlusion

culling, in which we will have two mesh preprocessing stages. In that

scenario, the first mesh preprocessing stage must only process opaque

and alpha tested objects, so the work items must be separated into those

that are opaque or alpha tested and those that aren't. Thus this PR

splits out the work items into a separate buffer for each phase. As this

patch rewrites so much of the mesh preprocessing infrastructure, it was

simpler to just fold the change into this patch instead of deferring it

to the forthcoming occlusion culling PR.

Finally, this patch changes mesh preprocessing so that it runs

separately for indexed and non-indexed meshes. This is because draw

commands for indexed and non-indexed meshes have different sizes and

layouts. *The existing code is actually broken for non-indexed meshes*,

as it attempts to overlay the indirect parameters for non-indexed meshes

on top of those for indexed meshes. Consequently, right now the

parameters will be read incorrectly when multiple non-indexed meshes are

multi-drawn together. *This is a bug fix* and, as with the change to

dispatch phases separately noted above, was easiest to include in this

patch as opposed to separately.

## Migration Guide

* Systems that add custom phase items now need to populate the indirect

drawing-related buffers. See the `specialized_mesh_pipeline` example for

an example of how this is done.

We won't be able to retain render phases from frame to frame if the keys

are unstable. It's not as simple as simply keying off the main world

entity, however, because some main world entities extract to multiple

render world entities. For example, directional lights extract to

multiple shadow cascades, and point lights extract to one view per

cubemap face. Therefore, we key off a new type, `RetainedViewEntity`,

which contains the main entity plus a *subview ID*.

This is part of the preparation for retained bins.

---------

Co-authored-by: ickshonpe <david.curthoys@googlemail.com>

# Objective

I realized that setting these to `deny` may have been a little

aggressive - especially since we upgrade warnings to denies in CI.

## Solution

Downgrades these lints to `warn`, so that compiles can work locally. CI

will still treat these as denies.

# Objective

Stumbled upon a `from <-> form` transposition while reviewing a PR,

thought it was interesting, and went down a bit of a rabbit hole.

## Solution

Fix em

# Objective

Many instances of `clippy::too_many_arguments` linting happen to be on

systems - functions which we don't call manually, and thus there's not

much reason to worry about the argument count.

## Solution

Allow `clippy::too_many_arguments` globally, and remove all lint

attributes related to it.

# Objective

In my crusade to give every lint attribute a reason, it appears I got

too complacent and copy-pasted this expect onto non-system functions.

## Solution

Fix up the reason on those non-system functions

## Testing

N/A

# Objective

- Commands like `cargo bench -- --save-baseline before` do not work

because the default `libtest` is intercepting Criterion-specific CLI

arguments.

- Fixes#17200.

## Solution

- Disable the default `libtest` benchmark harness for the library crate,

as per [the Criterion

book](https://bheisler.github.io/criterion.rs/book/faq.html#cargo-bench-gives-unrecognized-option-errors-for-valid-command-line-options).

## Testing

- `cargo bench -p benches -- --save-baseline before`

- You don't need to run the entire benchmarks, just make sure that they

start without any errors. :)

# Objective & Solution

- Update `downcast-rs` to the latest version, 2.

- Disable (new) `sync` feature to improve compatibility with atomically

challenged platforms.

- Remove stub `downcast-rs` alternative code from `bevy_app`

## Testing

- CI

## Notes

The only change from version 1 to version 2 is the addition of a new

`sync` feature, which allows disabling the `DowncastSync` parts of

`downcast-rs`, which require access to `alloc::sync::Arc`, which is not

available on atomically challenged platforms. Since Bevy makes no use of

the functionality provided by the `sync` feature, I've disabled it in

all crates. Further details can be found

[here](https://github.com/marcianx/downcast-rs/pull/22).

# Objective

- https://github.com/bevyengine/bevy/issues/17111

## Solution

Set the `clippy::allow_attributes` and

`clippy::allow_attributes_without_reason` lints to `deny`, and bring

`bevy_render` in line with the new restrictions.

## Testing

`cargo clippy` and `cargo test --package bevy_render` were run, and no

errors were encountered.

# Objective

the `get` function on [`InstanceInputUniformBuffer`] seems very

error-prone. This PR hopes to fix this.

## Solution

Do a few checks to ensure the index is in bounds and that the `BDI` is

not removed.

Return `Option<BDI>` instead of `BDI`.

## Testing

- Did you test these changes? If so, how?

added a test to verify that the instance buffer works correctly

## Future Work

Performance decreases when using .binary_search(). However this is

likely due to the fact that [`InstanceInputUniformBuffer::get`] for now

is never used, and only get_unchecked.

## Migration Guide

`InstanceInputUniformBuffer::get` now returns `Option<BDI>` instead of

`BDI` to reduce panics. If you require the old functionality of

`InstanceInputUniformBuffer::get` consider using

`InstanceInputUniformBuffer::get_unchecked`.

---------

Co-authored-by: Tim Overbeek <oorbeck@gmail.com>

Currently, our batchable binned items are stored in a hash table that

maps bin key, which includes the batch set key, to a list of entities.

Multidraw is handled by sorting the bin keys and accumulating adjacent

bins that can be multidrawn together (i.e. have the same batch set key)

into multidraw commands during `batch_and_prepare_binned_render_phase`.

This is reasonably efficient right now, but it will complicate future

work to retain indirect draw parameters from frame to frame. Consider

what must happen when we have retained indirect draw parameters and the

application adds a bin (i.e. a new mesh) that shares a batch set key

with some pre-existing meshes. (That is, the new mesh can be multidrawn

with the pre-existing meshes.) To be maximally efficient, our goal in

that scenario will be to update *only* the indirect draw parameters for

the batch set (i.e. multidraw command) containing the mesh that was

added, while leaving the others alone. That means that we have to

quickly locate all the bins that belong to the batch set being modified.

In the existing code, we would have to sort the list of bin keys so that

bins that can be multidrawn together become adjacent to one another in

the list. Then we would have to do a binary search through the sorted

list to find the location of the bin that was just added. Next, we would

have to widen our search to adjacent indexes that contain the same batch

set, doing expensive comparisons against the batch set key every time.

Finally, we would reallocate the indirect draw parameters and update the

stored pointers to the indirect draw parameters that the bins store.

By contrast, it'd be dramatically simpler if we simply changed the way

bins are stored to first map from batch set key (i.e. multidraw command)

to the bins (i.e. meshes) within that batch set key, and then from each

individual bin to the mesh instances. That way, the scenario above in

which we add a new mesh will be simpler to handle. First, we will look

up the batch set key corresponding to that mesh in the outer map to find

an inner map corresponding to the single multidraw command that will

draw that batch set. We will know how many meshes the multidraw command

is going to draw by the size of that inner map. Then we simply need to

reallocate the indirect draw parameters and update the pointers to those

parameters within the bins as necessary. There will be no need to do any

binary search or expensive batch set key comparison: only a single hash

lookup and an iteration over the inner map to update the pointers.

This patch implements the above technique. Because we don't have

retained bins yet, this PR provides no performance benefits. However, it

opens the door to maximally efficient updates when only a small number

of meshes change from frame to frame.

The main churn that this patch causes is that the *batch set key* (which

uniquely specifies a multidraw command) and *bin key* (which uniquely

specifies a mesh *within* that multidraw command) are now separate,

instead of the batch set key being embedded *within* the bin key.

In order to isolate potential regressions, I think that at least #16890,

#16836, and #16825 should land before this PR does.

## Migration Guide

* The *batch set key* is now separate from the *bin key* in

`BinnedPhaseItem`. The batch set key is used to collect multidrawable

meshes together. If you aren't using the multidraw feature, you can

safely set the batch set key to `()`.

Bump version after release

This PR has been auto-generated

---------

Co-authored-by: Bevy Auto Releaser <41898282+github-actions[bot]@users.noreply.github.com>

Co-authored-by: François Mockers <mockersf@gmail.com>

# Objective

- Contributes to #11478

## Solution

- Made `bevy_utils::tracing` `doc(hidden)`

- Re-exported `tracing` from `bevy_log` for end-users

- Added `tracing` directly to crates that need it.

## Testing

- CI

---

## Migration Guide

If you were importing `tracing` via `bevy::utils::tracing`, instead use

`bevy::log::tracing`. Note that many items within `tracing` are also

directly re-exported from `bevy::log` as well, so you may only need

`bevy::log` for the most common items (e.g., `warn!`, `trace!`, etc.).

This also applies to the `log_once!` family of macros.

## Notes

- While this doesn't reduce the line-count in `bevy_utils`, it further

decouples the internal crates from `bevy_utils`, making its eventual

removal more feasible in the future.

- I have just imported `tracing` as we do for all dependencies. However,

a workspace dependency may be more appropriate for version management.

Some hardware and driver combos, such as Intel Iris Xe, have low limits

on the numbers of samplers per shader, causing an overflow. With

first-class bindless arrays, `wgpu` should be able to work around this

limitation eventually, but for now we need to disable bindless materials

on those platforms.

This is an alternative to PR #17107 that calculates the precise number

of samplers needed and compares to the hardware sampler limit,

transparently falling back to non-bindless if the limit is exceeded.

Fixes#16988.