6bbfee8cd3

205 Commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

38c3423693

|

Event Split: Event, EntityEvent, and BufferedEvent (#19647)

# Objective

Closes #19564.

The current `Event` trait looks like this:

```rust
pub trait Event: Send + Sync + 'static {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;

    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}
```

The `Event` trait is used by both buffered events (`EventReader`/`EventWriter`) and observer events. If they are observer events, they can optionally be targeted at specific `Entity`s or `ComponentId`s, and can even be propagated to other entities.

However, there has long been a desire to split the trait semantically for a variety of reasons, see #14843, #14272, and #16031 for discussion. Some reasons include:

- It's very uncommon to use a single event type as both a buffered event and targeted observer event. They are used differently and tend to have distinct semantics.
- A common footgun is using buffered events with observers or event readers with observer events, as there is no type-level error that prevents this kind of misuse.
- #19440 made `Trigger::target` return an `Option<Entity>`. This *seriously* hurts ergonomics for the general case of entity observers, as you need to `.unwrap()` each time. If we could statically determine whether the event is expected to have an entity target, this would be unnecessary.

There are really two main ways that we can categorize events: push vs. pull (i.e. "observer event" vs. "buffered event") and global vs. targeted:

| | Push | Pull |
| ------------ | --------------- | --------------------------- |
| **Global** | Global observer | `EventReader`/`EventWriter` |
| **Targeted** | Entity observer | - |

There are many ways to approach this, each with their tradeoffs. Ultimately, we kind of want to split events both ways:

- A type-level distinction between observer events and buffered events, to prevent people from using the wrong kind of event in APIs
- A statically designated entity target for observer events to avoid accidentally using untargeted events for targeted APIs

This PR achieves these goals by splitting event traits into `Event`, `EntityEvent`, and `BufferedEvent`, with `Event` being the shared trait implemented by all events.

## `Event`, `EntityEvent`, and `BufferedEvent`

`Event` is now a very simple trait shared by all events.

```rust
pub trait Event: Send + Sync + 'static {
    // Required for observer APIs
    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}
```

You can call `trigger` for *any* event, and use a global observer for listening to the event.

```rust
#[derive(Event)]
struct Speak {
    message: String,
}

// ...

app.add_observer(|trigger: On<Speak>| {
    println!("{}", trigger.message);
});

// ...

commands.trigger(Speak {
    message: "Y'all like these reworked events?".to_string(),
});
```

To allow an event to be targeted at entities and even propagated further, you can additionally implement the `EntityEvent` trait:

```rust
pub trait EntityEvent: Event {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;
}
```

This lets you call `trigger_targets` and use targeted observer APIs like `EntityCommands::observe`:

```rust
#[derive(Event, EntityEvent)]
#[entity_event(traversal = &'static ChildOf, auto_propagate)]
struct Damage {
    amount: f32,
}

// ...

let enemy = commands.spawn((Enemy, Health(100.0))).id();

// Spawn some armor as a child of the enemy entity.
// When the armor takes damage, it will bubble the event up to the enemy.
let armor_piece = commands
    .spawn((ArmorPiece, Health(25.0), ChildOf(enemy)))
    .observe(|trigger: On<Damage>, mut query: Query<&mut Health>| {
        // Note: `On::target` only exists because this is an `EntityEvent`.
        // `get_mut` is needed because the query borrows `Health` mutably.
        let mut health = query.get_mut(trigger.target()).unwrap();
        // `amount` is a field of `Damage`, reached through `On`'s deref.
        health.0 -= trigger.amount;
    })
    .id();

commands.trigger_targets(Damage { amount: 10.0 }, armor_piece);
```

> [!NOTE]
> You *can* still also trigger an `EntityEvent` without targets using `trigger`. We probably *could* make this an either-or thing, but I'm not sure that's actually desirable.

To allow an event to be used with the buffered API, you can implement `BufferedEvent`:

```rust
pub trait BufferedEvent: Event {}
```

The event can then be used with `EventReader`/`EventWriter`:

```rust
#[derive(Event, BufferedEvent)]
struct Message(String);

fn write_hello(mut writer: EventWriter<Message>) {
    writer.write(Message("I hope these examples are alright".to_string()));
}

fn read_messages(mut reader: EventReader<Message>) {
    // Process all buffered events of type `Message`.
    for Message(message) in reader.read() {
        println!("{message}");
    }
}
```

In summary:

- Need a basic event you can trigger and observe? Derive `Event`!
- Need the event to be targeted at an entity? Derive `EntityEvent`!
- Need the event to be buffered and support the `EventReader`/`EventWriter` API? Derive `BufferedEvent`!

## Alternatives

I'll now cover some of the alternative approaches I have considered and briefly explored. I made this section collapsible since it ended up being quite long :P

<details>
<summary>Expand this to see alternatives</summary>

### 1. Unified `Event` Trait

One option is not to have *three* separate traits (`Event`, `EntityEvent`, `BufferedEvent`), and to instead just use associated constants on `Event` to determine whether an event supports targeting and buffering or not:

```rust
pub trait Event: Send + Sync + 'static {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;
    const TARGETED: bool = false;
    const BUFFERED: bool = false;

    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}
```

Methods can then use bounds like `where E: Event<TARGETED = true>` or `where E: Event<BUFFERED = true>` to limit APIs to specific kinds of events.

This would keep everything under one `Event` trait, but I don't think it's necessarily a good idea. It makes APIs harder to read, and docs can't easily refer to specific types of events. You can also create weird invariants: what if you specify `TARGETED = false`, but have `Traversal` and/or `AUTO_PROPAGATE` enabled?

### 2. `Event` and `Trigger`

Another option is to only split the traits between buffered events and observer events, since that is the main thing people have been asking for, and they have the largest API difference.

If we did this, I think we would need to make the terms *clearly* separate. We can't really use `Event` and `BufferedEvent` as the names, since it would be strange that `BufferedEvent` doesn't implement `Event`. Something like `ObserverEvent` and `BufferedEvent` could work, but it'd be more verbose.

For this approach, I would instead keep `Event` for the current `EventReader`/`EventWriter` API, and call the observer event a `Trigger`, since the "trigger" terminology is already used in the observer context within Bevy (both as a noun and a verb). This is also what a long [bikeshed on Discord](https://discord.com/channels/691052431525675048/749335865876021248/1298057661878898791) seemed to land on at the end of last year.

```rust
// For `EventReader`/`EventWriter`
pub trait Event: Send + Sync + 'static {}

// For observers
pub trait Trigger: Send + Sync + 'static {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;
    const TARGETED: bool = false;

    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}
```

The problem is that "event" is just a really good term for something that "happens". Observers are rapidly becoming the more prominent API, so it'd be weird to give them the `Trigger` name and leave the good `Event` name for the less common API.

So, even though a split like this seems neat on the surface, I think it ultimately wouldn't really work. We want to keep the `Event` name for observer events, and there is no good alternative for the buffered variant. (`Message` was suggested, but saying stuff like "sends a collision message" is weird.)

### 3. `GlobalEvent` + `TargetedEvent`

What if instead of focusing on the buffered vs. observed split, we *only* make a distinction between global and targeted events?

```rust
// A shared event trait to allow global observers to work
pub trait Event: Send + Sync + 'static {
    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}

// For buffered events and non-targeted observer events
pub trait GlobalEvent: Event {}

// For targeted observer events
pub trait TargetedEvent: Event {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;
}
```

This is actually the first approach I implemented, and it has the neat characteristic that you can only use non-targeted APIs like `trigger` with a `GlobalEvent` and targeted APIs like `trigger_targets` with a `TargetedEvent`. You have full control over whether the event should or should not have a target, as they are fully distinct at the type-level.

However, there are a few problems:

- There is no type-level indication of whether a `GlobalEvent` supports buffered events or just non-targeted observer events
- An `Event` on its own does literally nothing, it's just a shared trait required to make global observers accept both non-targeted and targeted events
- If an event is both a `GlobalEvent` and `TargetedEvent`, global observers again have ambiguity on whether an event has a target or not, undermining some of the benefits
- The names are not ideal

### 4. `Event` and `EntityEvent`

We can fix some of the problems of Alternative 3 by accepting that targeted events can also be used in non-targeted contexts, and simply having the `Event` and `EntityEvent` traits:

```rust
// For buffered events and non-targeted observer events
pub trait Event: Send + Sync + 'static {
    fn register_component_id(world: &mut World) -> ComponentId { ... }
    fn component_id(world: &World) -> Option<ComponentId> { ... }
}

// For targeted observer events
pub trait EntityEvent: Event {
    type Traversal: Traversal<Self>;
    const AUTO_PROPAGATE: bool = false;
}
```

This is essentially identical to this PR, just without a dedicated `BufferedEvent`. The remaining major "problem" is that there is still zero type-level indication of whether an `Event` *actually* supports the buffered API. This leads us to the solution proposed in this PR, using `Event`, `EntityEvent`, and `BufferedEvent`.

</details>

## Conclusion

The `Event` + `EntityEvent` + `BufferedEvent` split proposed in this PR aims to solve all the common problems with Bevy's current event model while keeping the "weirdness" factor minimal. It splits in terms of both the push vs. pull *and* global vs. targeted aspects, while maintaining a shared concept for an "event".

### Why I Like This

- The term "event" remains as a single concept for all the different kinds of events in Bevy.
- Despite all event types being "events", they use fundamentally different APIs. Instead of assuming that you can use an event type with any pattern (when only one is typically supported), you explicitly opt in to each one with dedicated traits.
- Using separate traits for each type of event helps with documentation and clearer function signatures.
- I can safely make assumptions on expected usage.
  - If I see that an event is an `EntityEvent`, I can assume that I can use `observe` on it and get targeted events.
  - If I see that an event is a `BufferedEvent`, I can assume that I can use `EventReader` to read events.
  - If I see both `EntityEvent` and `BufferedEvent`, I can assume that both APIs are supported.

In summary: This allows for a unified concept for events, while limiting the different ways to use them with opt-in traits. No more guess-work involved when using APIs.

### Problems?

- Because `BufferedEvent` implements `Event` (for more consistent semantics etc.), you can still use all buffered events for non-targeted observers. I think this is fine/good. The important part is that if you see that an event implements `BufferedEvent`, you know that the `EventReader`/`EventWriter` API should be supported. Whether it *also* supports other APIs is secondary.
- I currently only support `trigger_targets` for an `EntityEvent`. However, you can technically target components too, without targeting any entities. I consider that such a niche and advanced use case that it's not a huge problem to only support it for `EntityEvent`s, but we could also split `trigger_targets` into `trigger_entities` and `trigger_components` if we wanted to (or implement components as entities :P).
- You can still trigger an `EntityEvent` *without* targets. I consider this correct, since `Event` implements the non-targeted behavior, and it'd be weird if implementing another trait *removed* behavior. However, it does mean that global observers for entity events can technically return `Entity::PLACEHOLDER` again (since I got rid of the `Option<Entity>` added in #19440 for ergonomics). I think that's enough of an edge case that it's not a huge problem, but it is worth keeping in mind.
- ~~Deriving both `EntityEvent` and `BufferedEvent` for the same type currently duplicates the `Event` implementation, so you instead need to manually implement one of them.~~ Changed to always requiring `Event` to be derived.

## Related Work

There are plans to implement multi-event support for observers, especially for UI contexts. [Cart's example](https://github.com/bevyengine/bevy/issues/14649#issuecomment-2960402508) API looked like this:

```rust
// Truncated for brevity
trigger: Trigger<(
    OnAdd<Pressed>,
    OnRemove<Pressed>,
    OnAdd<InteractionDisabled>,
    OnRemove<InteractionDisabled>,
    OnInsert<Hovered>,
)>,
```

I believe this shouldn't be in conflict with this PR. If anything, this PR might *help* achieve the multi-event pattern for entity observers with fewer footguns: by statically enforcing that all of these events are `EntityEvent`s in the context of `EntityCommands::observe`, we can avoid misuse or weird cases where *some* events inside the trigger are targeted while others are not.

|
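The opt-in idea behind the split can be illustrated without Bevy at all. The following is a minimal sketch in plain Rust, assuming toy traits that only borrow the names `Event`, `EntityEvent`, and `BufferedEvent`; the `name` method, `EntityId`, and the free functions `trigger`, `trigger_targets`, and `write` are hypothetical stand-ins, not Bevy's real API.

```rust
// Toy model of the three-trait split. These traits only mirror the *names*
// used in the PR; they are not Bevy's real definitions.
trait Event {
    fn name(&self) -> &'static str;
}
trait EntityEvent: Event {}
trait BufferedEvent: Event {}

// Hypothetical stand-in for an entity id.
#[derive(Clone, Copy)]
struct EntityId(u32);

// Any event can be triggered globally.
fn trigger<E: Event>(event: &E) -> String {
    format!("global:{}", event.name())
}

// Only entity events can be aimed at a target.
fn trigger_targets<E: EntityEvent>(event: &E, target: EntityId) -> String {
    format!("targeted:{}@{}", event.name(), target.0)
}

// Only buffered events can be pushed into a queue.
fn write<E: BufferedEvent>(queue: &mut Vec<E>, event: E) {
    queue.push(event);
}

struct Damage;
impl Event for Damage {
    fn name(&self) -> &'static str {
        "Damage"
    }
}
impl EntityEvent for Damage {}

struct Message(String);
impl Event for Message {
    fn name(&self) -> &'static str {
        "Message"
    }
}
impl BufferedEvent for Message {}

fn main() {
    println!("{}", trigger(&Damage));
    println!("{}", trigger_targets(&Damage, EntityId(7)));

    let mut queue = Vec::new();
    write(&mut queue, Message("hello".to_string()));
    println!("{} buffered, first: {}", queue.len(), queue[0].0);

    // `trigger_targets(&queue[0], EntityId(7))` would be rejected at compile
    // time, because `Message` does not implement `EntityEvent`.
}
```

The point of the sketch is only that the wrong pairing of event and API becomes a compile error rather than a runtime surprise.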

||

|

|

e5dc177b4b

|

Rename Trigger to On (#19596)

# Objective

Currently, the observer API looks like this:

```rust
app.add_observer(|trigger: Trigger<Explode>| {
    info!("Entity {} exploded!", trigger.target());
});
```

Future plans for observers also include "multi-event observers" with a trigger that looks like this (see [Cart's example](https://github.com/bevyengine/bevy/issues/14649#issuecomment-2960402508)):

```rust
trigger: Trigger<(
    OnAdd<Pressed>,
    OnRemove<Pressed>,
    OnAdd<InteractionDisabled>,
    OnRemove<InteractionDisabled>,
    OnInsert<Hovered>,
)>,
```

In scenarios like this, there is a lot of repetition of `On`. These are expected to be very high-traffic APIs especially in UI contexts, so ergonomics and readability are critical.

By renaming `Trigger` to `On`, we can make these APIs read more cleanly and get rid of the repetition:

```rust
app.add_observer(|trigger: On<Explode>| {
    info!("Entity {} exploded!", trigger.target());
});
```

```rust
trigger: On<(
    Add<Pressed>,
    Remove<Pressed>,
    Add<InteractionDisabled>,
    Remove<InteractionDisabled>,
    Insert<Hovered>,
)>,
```

Names like `On<Add<Pressed>>` emphasize the actual event listener nature more than `Trigger<OnAdd<Pressed>>`, and look cleaner. This *also* frees up the `Trigger` name if we want to use it for the observer event type, splitting them out from buffered events (bikeshedding this is out of scope for this PR though).

For prior art: [`bevy_eventlistener`](https://github.com/aevyrie/bevy_eventlistener) used [`On`](https://docs.rs/bevy_eventlistener/latest/bevy_eventlistener/event_listener/struct.On.html) for its event listener type. Though in our case, the observer is the event listener, and `On` is just a type containing information about the triggered event.

## Solution

Steal from `bevy_eventlistener` by @aevyrie and use `On`.

- Rename `Trigger` to `On`
- Rename `OnAdd` to `Add`
- Rename `OnInsert` to `Insert`
- Rename `OnReplace` to `Replace`
- Rename `OnRemove` to `Remove`
- Rename `OnDespawn` to `Despawn`

## Discussion

### Naming Conflicts??

Using a name like `Add` might initially feel like a very bad idea, since it risks conflict with `core::ops::Add`. However, I don't expect this to be a big problem in practice.

- You rarely need to actually implement the `Add` trait, especially in modules that would use the Bevy ECS.
- In the rare cases where you *do* get a conflict, it is very easy to fix by just disambiguating, for example using `ops::Add`.
- The `Add` event is a struct while the `Add` trait is a trait (duh), so the compiler error should be very obvious.

For the record, renaming `OnAdd` to `Add`, I got exactly *zero* errors or conflicts within Bevy itself. But this is of course not entirely representative of actual projects *using* Bevy.

You might then wonder, why not use `Added`? This would conflict with the `Added` query filter, so it wouldn't work. Additionally, the current naming convention for observer events does not use past tense.
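The `ops::Add` disambiguation mentioned in the list above can be sketched in plain Rust. This is only an illustration: the `lifecycle` module, its `Add` struct, `Meters`, and `describe` are hypothetical stand-ins and do not come from Bevy.

```rust
use core::ops;

// Hypothetical stand-in for a lifecycle event type named `Add`.
mod lifecycle {
    pub struct Add;
}
use lifecycle::Add;

#[derive(Debug, Clone, Copy, PartialEq)]
struct Meters(u32);

// `Add` already names the struct in this scope, so the operator trait is
// referred to through its module path instead of being imported by name.
impl ops::Add for Meters {
    type Output = Meters;

    fn add(self, rhs: Meters) -> Meters {
        Meters(self.0 + rhs.0)
    }
}

// Takes the event struct, not the trait.
fn describe(_event: Add) -> &'static str {
    "component added"
}

fn main() {
    // The `+` operator works without the trait being imported by name.
    println!("{:?}", Meters(1) + Meters(2));
    println!("{}", describe(Add));
}
```

Importing both names directly (`use core::ops::Add;` next to `use lifecycle::Add;`) would fail to compile, since structs and traits share one namespace; the module-qualified path avoids that.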

### Documentation

This does make documentation slightly more awkward when referring to `On` or its methods. Previous docs often referred to `Trigger::target` or "sends a `Trigger`" (which is... a bit strange anyway), which would now be `On::target` and "sends an observer `Event`".

You can see the diff in this PR to see some of the effects. I think it should be fine though, we may just need to reword more documentation to read better.

|

||

|

|

064e5e48b4

|

Remove entity placeholder from observers (#19440)

# Objective

`Entity::PLACEHOLDER` acts as a magic number that will *probably* never really exist, but it certainly could. And, `Entity` has a niche, so the only reason to use `PLACEHOLDER` is as an alternative to `MaybeUninit` that trades safety risks for logic risks.

As a result, Bevy has generally advised against using `PLACEHOLDER`, but we still use it for a lot internally. This PR starts removing internal uses of it, starting from observers.

## Solution

Change all trigger target related types from `Entity` to `Option<Entity>`.

Small migration guide to come.

## Testing

CI

## Future Work

This turned a lot of code from

```rust
trigger.target()
```

to

```rust
trigger.target().unwrap()
```

The extra panic is no worse than before; it's just earlier than panicking after passing the placeholder to something else.

But this is kinda annoying. I would like to add a `TriggerMode` or something to `Event` that would restrict what kinds of targets can be used for that event. Many events like `Removed` etc. are always triggered with a target. We can make those have a way to assume `Some`, etc. But I wanted to save that for a future PR.

|
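The remark that `Entity` "has a niche" is what makes `Option<Entity>` free in terms of size. A minimal sketch, assuming a toy id type built on `NonZeroU64`; `ToyEntity`, `Context`, and `describe` are hypothetical, and this is not Bevy's actual `Entity` layout.

```rust
use std::mem::size_of;
use std::num::NonZeroU64;

// Toy id type with a niche: zero is never a valid value.
// NOT Bevy's real `Entity` layout, only an illustration.
#[repr(transparent)]
#[derive(Debug, Clone, Copy, PartialEq)]
struct ToyEntity(NonZeroU64);

// Hypothetical event context whose target is optional.
struct Context {
    target: Option<ToyEntity>,
}

impl Context {
    fn target(&self) -> Option<ToyEntity> {
        self.target
    }
}

// Handling the "no target" case explicitly instead of comparing against a
// magic placeholder value.
fn describe(ctx: &Context) -> String {
    match ctx.target() {
        Some(entity) => format!("targeted at {}", entity.0),
        None => "no target".to_string(),
    }
}

fn main() {
    // `None` reuses the forbidden all-zero bit pattern, so wrapping the id
    // in `Option` costs no extra space.
    assert_eq!(size_of::<Option<ToyEntity>>(), size_of::<ToyEntity>());

    let targeted = Context {
        target: NonZeroU64::new(42).map(ToyEntity),
    };
    let global = Context { target: None };
    println!("{}", describe(&targeted));
    println!("{}", describe(&global));
}
```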

||

|

|

c617fc49ae

|

fix distinct directional lights per view (#19147)

# Objective

after #15156 it seems like using distinct directional lights on different views is broken (and will probably break spotlights too). fix them

## Solution

the reason is a bit hairy so with an example:

- camera 0 on layer 0
- camera 1 on layer 1
- dir light 0 on layer 0 (2 cascades)
- dir light 1 on layer 1 (2 cascades)

in render/lights.rs:

- outside of any view loop,
  - we count the total number of shadow casting directional light cascades (4) and assign an incrementing `depth_texture_base_index` for each (0-1 for one light, 2-3 for the other, depending on iteration order) (line 1034)
  - allocate a texture array for the total number of cascades plus spotlight maps (4) (line 1106)
- in the view loop, for directional lights we
  - skip lights that don't intersect on renderlayers (line 1440)
  - assign an incrementing texture layer to each light/cascade starting from 0 (resets to 0 per view) (assigning 0 and 1 each time for the 2 cascades of the intersecting light) (line 1509, init at 1421)

then in the rendergraph:

- camera 0 renders the shadow map for light 0 to texture indices 0 and 1
- camera 0 renders using shadows from the `depth_texture_base_index` (maybe 0-1, maybe 2-3 depending on the iteration order)
- camera 1 renders the shadow map for light 1 to texture indices 0 and 1
- camera 1 renders using shadows from the `depth_texture_base_index` (maybe 0-1, maybe 2-3 depending on the iteration order)

issues:

- one of the views uses empty shadow maps (bug)
- we allocated a texture layer per cascade per light, even though not all lights are used on all views (just inefficient)
- I think we're allocating texture layers even for lights with `shadows_enabled: false` (just inefficient)

solution:

- calculate upfront the view with the largest number of directional cascades
- allocate this many layers (plus layers for spotlights) in the texture array
- keep using texture layers 0..n in the per-view loop, but build GpuLights.gpu_directional_lights within the loop too so it refers to the same layers we render to

nice side effects:

- we can now use `max_texture_array_layers / MAX_CASCADES_PER_LIGHT` shadow-casting directional lights per view, rather than overall.
- we can remove the `GpuDirectionalLight::skip` field, since the gpu lights struct is constructed per view

a simpler approach would be to keep everything the same, and just increment the texture layer index in the view loop even for non-intersecting lights. this pr reduces the total shadowmap vram used as well and isn't *much* extra complexity. but if we want something less risky/intrusive for 16.1 that would be the way.

## Testing

i edited the split screen example to put separate lights on layer 1 and layer 2, and put the plane and fox on both layers (using lots of unrelated code for render layer propagation from #17575). without the fix the directional shadows will only render on one of the top 2 views even though there are directional lights on both layers.

```rs
//! Renders two cameras to the same window to accomplish "split screen".

use std::f32::consts::PI;

use bevy::{
    pbr::CascadeShadowConfigBuilder, prelude::*, render::camera::Viewport, window::WindowResized,
};
use bevy_render::view::RenderLayers;

fn main() {
    App::new()
        .add_plugins(DefaultPlugins)
        .add_plugins(HierarchyPropagatePlugin::<RenderLayers>::default())
        .add_systems(Startup, setup)
        .add_systems(Update, (set_camera_viewports, button_system))
        .run();
}

/// set up a simple 3D scene
fn setup(
    mut commands: Commands,
    asset_server: Res<AssetServer>,
    mut meshes: ResMut<Assets<Mesh>>,
    mut materials: ResMut<Assets<StandardMaterial>>,
) {
    let all_layers = RenderLayers::layer(1).with(2).with(3).with(4);

    // plane
    commands.spawn((
        Mesh3d(meshes.add(Plane3d::default().mesh().size(100.0, 100.0))),
        MeshMaterial3d(materials.add(Color::srgb(0.3, 0.5, 0.3))),
        all_layers.clone(),
    ));

    commands.spawn((
        SceneRoot(
            asset_server.load(GltfAssetLabel::Scene(0).from_asset("models/animated/Fox.glb")),
        ),
        Propagate(all_layers.clone()),
    ));

    // Light
    commands.spawn((
        Transform::from_rotation(Quat::from_euler(EulerRot::ZYX, 0.0, 1.0, -PI / 4.)),
        DirectionalLight {
            shadows_enabled: true,
            ..default()
        },
        CascadeShadowConfigBuilder {
            num_cascades: if cfg!(all(
                feature = "webgl2",
                target_arch = "wasm32",
                not(feature = "webgpu")
            )) {
                // Limited to 1 cascade in WebGL
                1
            } else {
                2
            },
            first_cascade_far_bound: 200.0,
            maximum_distance: 280.0,
            ..default()
        }
        .build(),
        RenderLayers::layer(1),
    ));

    commands.spawn((
        Transform::from_rotation(Quat::from_euler(EulerRot::ZYX, 0.0, 1.0, -PI / 4.)),
        DirectionalLight {
            shadows_enabled: true,
            ..default()
        },
        CascadeShadowConfigBuilder {
            num_cascades: if cfg!(all(
                feature = "webgl2",
                target_arch = "wasm32",
                not(feature = "webgpu")
            )) {
                // Limited to 1 cascade in WebGL
                1
            } else {
                2
            },
            first_cascade_far_bound: 200.0,
            maximum_distance: 280.0,
            ..default()
        }
        .build(),
        RenderLayers::layer(2),
    ));

    // Cameras and their dedicated UI
    for (index, (camera_name, camera_pos)) in [
        ("Player 1", Vec3::new(0.0, 200.0, -150.0)),
        ("Player 2", Vec3::new(150.0, 150., 50.0)),
        ("Player 3", Vec3::new(100.0, 150., -150.0)),
        ("Player 4", Vec3::new(-100.0, 80., 150.0)),
    ]
    .iter()
    .enumerate()
    {
        let camera = commands
            .spawn((
                Camera3d::default(),
                Transform::from_translation(*camera_pos).looking_at(Vec3::ZERO, Vec3::Y),
                Camera {
                    // Renders cameras with different priorities to prevent ambiguities
                    order: index as isize,
                    ..default()
                },
                CameraPosition {
                    pos: UVec2::new((index % 2) as u32, (index / 2) as u32),
                },
                RenderLayers::layer(index + 1),
            ))
            .id();

        // Set up UI
        commands
            .spawn((
                UiTargetCamera(camera),
                Node {
                    width: Val::Percent(100.),
                    height: Val::Percent(100.),
                    ..default()
                },
            ))
            .with_children(|parent| {
                parent.spawn((
                    Text::new(*camera_name),
                    Node {
                        position_type: PositionType::Absolute,
                        top: Val::Px(12.),
                        left: Val::Px(12.),
                        ..default()
                    },
                ));
                buttons_panel(parent);
            });
    }

    fn buttons_panel(parent: &mut ChildSpawnerCommands) {
        parent
            .spawn(Node {
                position_type: PositionType::Absolute,
                width: Val::Percent(100.),
                height: Val::Percent(100.),
                display: Display::Flex,
                flex_direction: FlexDirection::Row,
                justify_content: JustifyContent::SpaceBetween,
                align_items: AlignItems::Center,
                padding: UiRect::all(Val::Px(20.)),
                ..default()
            })
            .with_children(|parent| {
                rotate_button(parent, "<", Direction::Left);
                rotate_button(parent, ">", Direction::Right);
            });
    }

    fn rotate_button(parent: &mut ChildSpawnerCommands, caption: &str, direction: Direction) {
        parent
            .spawn((
                RotateCamera(direction),
                Button,
                Node {
                    width: Val::Px(40.),
                    height: Val::Px(40.),
                    border: UiRect::all(Val::Px(2.)),
                    justify_content: JustifyContent::Center,
                    align_items: AlignItems::Center,
                    ..default()
                },
                BorderColor(Color::WHITE),
                BackgroundColor(Color::srgb(0.25, 0.25, 0.25)),
            ))
            .with_children(|parent| {
                parent.spawn(Text::new(caption));
            });
    }
}

#[derive(Component)]
struct CameraPosition {
    pos: UVec2,
}

#[derive(Component)]
struct RotateCamera(Direction);

enum Direction {
    Left,
    Right,
}

fn set_camera_viewports(
    windows: Query<&Window>,
    mut resize_events: EventReader<WindowResized>,
    mut query: Query<(&CameraPosition, &mut Camera)>,
) {
    // We need to dynamically resize the camera's viewports whenever the window size changes
    // so then each camera always takes up half the screen.
    // A resize_event is sent when the window is first created, allowing us to reuse this system for initial setup.
    for resize_event in resize_events.read() {
        let window = windows.get(resize_event.window).unwrap();
        let size = window.physical_size() / 2;

        for (camera_position, mut camera) in &mut query {
            camera.viewport = Some(Viewport {
                physical_position: camera_position.pos * size,
                physical_size: size,
                ..default()
            });
        }
    }
}

fn button_system(
    interaction_query: Query<
        (&Interaction, &ComputedNodeTarget, &RotateCamera),
        (Changed<Interaction>, With<Button>),
    >,
    mut camera_query: Query<&mut Transform, With<Camera>>,
) {
    for (interaction, computed_target, RotateCamera(direction)) in &interaction_query {
        if let Interaction::Pressed = *interaction {
            // Since TargetCamera propagates to the children, we can use it to find
            // which side of the screen the button is on.
            if let Some(mut camera_transform) = computed_target
                .camera()
                .and_then(|camera| camera_query.get_mut(camera).ok())
            {
                let angle = match direction {
                    Direction::Left => -0.1,
                    Direction::Right => 0.1,
                };
                camera_transform.rotate_around(Vec3::ZERO, Quat::from_axis_angle(Vec3::Y, angle));
            }
        }
    }
}

use std::marker::PhantomData;

use bevy::{
    app::{App, Plugin, Update},
    ecs::query::QueryFilter,
    prelude::{
        Changed, Children, Commands, Component, Entity, Local, Query, RemovedComponents, SystemSet,
        With, Without,
    },
};

/// Causes the inner component to be added to this entity and all children.
/// A child with a Propagate<C> component of its own will override propagation from
/// that point in the tree
#[derive(Component, Clone, PartialEq)]
pub struct Propagate<C: Component + Clone + PartialEq>(pub C);

/// Internal struct for managing propagation
#[derive(Component, Clone, PartialEq)]
pub struct Inherited<C: Component + Clone + PartialEq>(pub C);

/// Stops the output component being added to this entity.
/// Children will still inherit the component from this entity or its parents
#[derive(Component, Default)]
pub struct PropagateOver<C: Component + Clone + PartialEq>(PhantomData<fn() -> C>);

/// Stops the propagation at this entity. Children will not inherit the component.
#[derive(Component, Default)]
pub struct PropagateStop<C: Component + Clone + PartialEq>(PhantomData<fn() -> C>);

pub struct HierarchyPropagatePlugin<C: Component + Clone + PartialEq, F: QueryFilter = ()> {
    _p: PhantomData<fn() -> (C, F)>,
}

impl<C: Component + Clone + PartialEq, F: QueryFilter> Default for HierarchyPropagatePlugin<C, F> {
    fn default() -> Self {
        Self {
            _p: Default::default(),
        }
    }
}

#[derive(SystemSet, Clone, PartialEq, PartialOrd, Ord)]
pub struct PropagateSet<C: Component + Clone + PartialEq> {
    _p: PhantomData<fn() -> C>,
}

impl<C: Component + Clone + PartialEq> std::fmt::Debug for PropagateSet<C> {
    fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result {
        f.debug_struct("PropagateSet")
            .field("_p", &self._p)
            .finish()
    }
}

impl<C: Component + Clone + PartialEq> Eq for PropagateSet<C> {}

impl<C: Component + Clone + PartialEq> std::hash::Hash for PropagateSet<C> {
    fn hash<H: std::hash::Hasher>(&self, state: &mut H) {
        self._p.hash(state);
    }
}

impl<C: Component + Clone + PartialEq> Default for PropagateSet<C> {
    fn default() -> Self {
        Self {
            _p: Default::default(),
        }
    }
}

impl<C: Component + Clone + PartialEq, F: QueryFilter + 'static> Plugin
    for HierarchyPropagatePlugin<C, F>
{
    fn build(&self, app: &mut App) {
        app.add_systems(
            Update,
            (
                update_source::<C, F>,
                update_stopped::<C, F>,
                update_reparented::<C, F>,
                propagate_inherited::<C, F>,
                propagate_output::<C, F>,
            )
                .chain()
                .in_set(PropagateSet::<C>::default()),
        );
    }
}

pub fn update_source<C: Component + Clone + PartialEq, F: QueryFilter>(
    mut commands: Commands,
    changed: Query<(Entity, &Propagate<C>), (Changed<Propagate<C>>, Without<PropagateStop<C>>)>,
    mut removed: RemovedComponents<Propagate<C>>,
) {
    for (entity, source) in &changed {
        commands
            .entity(entity)
            .try_insert(Inherited(source.0.clone()));
    }

    for removed in removed.read() {
        if let Ok(mut commands) = commands.get_entity(removed) {
            commands.remove::<(Inherited<C>, C)>();
        }
    }
}

pub fn update_stopped<C: Component + Clone + PartialEq, F: QueryFilter>(
    mut commands: Commands,
    q: Query<Entity, (With<Inherited<C>>, F, With<PropagateStop<C>>)>,
) {
    for entity in q.iter() {
        let mut cmds = commands.entity(entity);
        cmds.remove::<Inherited<C>>();
    }
}

pub fn update_reparented<C: Component + Clone + PartialEq, F: QueryFilter>(
    mut commands: Commands,
    moved: Query<
        (Entity, &ChildOf, Option<&Inherited<C>>),
        (
            Changed<ChildOf>,
            Without<Propagate<C>>,
            Without<PropagateStop<C>>,
            F,
        ),
    >,
    parents: Query<&Inherited<C>>,
) {
    for (entity, parent, maybe_inherited) in &moved {
        if let Ok(inherited) = parents.get(parent.parent()) {
            commands.entity(entity).try_insert(inherited.clone());
        } else if maybe_inherited.is_some() {
            commands.entity(entity).remove::<(Inherited<C>, C)>();
        }
    }
}

pub fn propagate_inherited<C: Component + Clone + PartialEq, F: QueryFilter>(
    mut commands: Commands,
    changed: Query<
        (&Inherited<C>, &Children),
        (Changed<Inherited<C>>, Without<PropagateStop<C>>, F),
    >,
    recurse: Query<
        (Option<&Children>, Option<&Inherited<C>>),
        (Without<Propagate<C>>, Without<PropagateStop<C>>, F),
    >,
    mut to_process: Local<Vec<(Entity, Option<Inherited<C>>)>>,
    mut removed: RemovedComponents<Inherited<C>>,
) {
    // gather changed
    for (inherited, children) in &changed {
        to_process.extend(
            children
                .iter()
                .map(|child| (child, Some(inherited.clone()))),
        );
    }

    // and removed
    for entity in removed.read() {
        if let Ok((Some(children), _)) = recurse.get(entity) {
            to_process.extend(children.iter().map(|child| (child, None)))
        }
    }

    // propagate
    while let Some((entity, maybe_inherited)) = (*to_process).pop() {
        let Ok((maybe_children, maybe_current)) = recurse.get(entity) else {
            continue;
        };

        if maybe_current == maybe_inherited.as_ref() {
            continue;
        }

        if let Some(children) = maybe_children {
            to_process.extend(
                children
                    .iter()
                    .map(|child| (child, maybe_inherited.clone())),
            );
        }

        if let Some(inherited) = maybe_inherited {
            commands.entity(entity).try_insert(inherited.clone());
        } else {
            commands.entity(entity).remove::<(Inherited<C>, C)>();
        }
    }
}

pub fn propagate_output<C: Component + Clone + PartialEq, F: QueryFilter>(
    mut commands: Commands,
    changed: Query<
        (Entity, &Inherited<C>, Option<&C>),
        (Changed<Inherited<C>>, Without<PropagateOver<C>>, F),
    >,
) {
    for (entity, inherited, maybe_current) in &changed {
        if maybe_current.is_some_and(|c| &inherited.0 == c) {
            continue;
        }

        commands.entity(entity).try_insert(inherited.0.clone());
    }
}
```

|
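The "largest number of cascades in any single view" calculation from the solution can be sketched in plain Rust, using the two-camera, two-light example from the description. This is a simplified model: `View`, `DirLight`, the layer bitmasks, and all function names are hypothetical and are not the actual code in render/lights.rs.

```rust
// Hypothetical, simplified stand-ins for a view and a directional light.
// `layers` is a bitmask of render layers.
struct View {
    layers: u32,
}

struct DirLight {
    layers: u32,
    cascades: usize,
    shadows_enabled: bool,
}

// Old-style count: every shadow-casting cascade, regardless of which view
// can actually see the light.
fn total_cascades(lights: &[DirLight]) -> usize {
    lights
        .iter()
        .filter(|light| light.shadows_enabled)
        .map(|light| light.cascades)
        .sum()
}

// Cascades needed by one view: only shadow-casting lights whose layers
// intersect the view's layers.
fn cascades_for_view(view: &View, lights: &[DirLight]) -> usize {
    lights
        .iter()
        .filter(|light| light.shadows_enabled && (light.layers & view.layers) != 0)
        .map(|light| light.cascades)
        .sum()
}

// New-style count: the texture array only needs as many layers as the most
// demanding view, because layer indices restart at 0 for every view.
fn max_cascades_per_view(views: &[View], lights: &[DirLight]) -> usize {
    views
        .iter()
        .map(|view| cascades_for_view(view, lights))
        .max()
        .unwrap_or(0)
}

// The scene from the description: two cameras and two lights on separate
// layers, two cascades per light.
fn example_scene() -> (Vec<View>, Vec<DirLight>) {
    let views = vec![View { layers: 1 << 0 }, View { layers: 1 << 1 }];
    let lights = vec![
        DirLight { layers: 1 << 0, cascades: 2, shadows_enabled: true },
        DirLight { layers: 1 << 1, cascades: 2, shadows_enabled: true },
    ];
    (views, lights)
}

fn main() {
    let (views, lights) = example_scene();
    println!("total cascades: {}", total_cascades(&lights));
    println!("max per view:   {}", max_cascades_per_view(&views, &lights));
}
```

In this toy scene the per-view maximum is half of the overall total, which matches the VRAM saving the description mentions.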

||

|

|

17914943a3

|

Fix spot light shadow glitches (#19273)

# Objective Spot light shadows are still broken after fixing point lights in #19265 ## Solution Fix spot lights in the same way, just using the spot light specific visible entities component. I also changed the query to be directly in the render world instead of being extracted to be more accurate. ## Testing Tested with the same code but changing `PointLight` to `SpotLight`. |

||

|

|

4d1b045855

|

Fix point light shadow glitches (#19265)

# Objective Fixes #18945 ## Solution Entities that are not visible in any view (camera or light) get their render meshes removed. When they become visible somewhere again, the meshes get recreated and assigned possibly different ids. Point/spot light visible entities weren't cleared when the lights themselves went out of view, which caused them to try to queue these fake visible entities for rendering every frame. The shadow phase cache usually flushes non-visible entities, but because of this bug it never flushed them and continued to queue meshes with outdated ids. The simple solution is to clear, every frame, all visible entities for all point/spot lights that may or may not be visible. The visible entities get repopulated directly afterwards. I also renamed the `global_point_lights` to `global_visible_clusterable` to make it clear that it includes only visible things. ## Testing - Tested with the code from the issue. |

||

|

|

6f3ea06060

|

Make sure the mesh actually exists before we try to specialize. (#18836)

Fixes #18809 Fixes #18823 Meshes despawned in `Last` can still be in visible entities if they were visible as of `PostUpdate`. Sanity check that the mesh actually exists before we specialize. We still want to unconditionally assume that the entity is in `EntitySpecializationTicks` as its absence from that cache would likely suggest another bug. |

||

|

|

e9a0ef49f9

|

Rename bevy_platform_support to bevy_platform (#18813)

# Objective The goal of `bevy_platform_support` is to provide a set of platform agnostic APIs, alongside platform-specific functionality. This is a high traffic crate (providing things like HashMap and Instant). Especially in light of https://github.com/bevyengine/bevy/discussions/18799, it deserves a friendlier / shorter name. Given that it hasn't had a full release yet, getting this change in before Bevy 0.16 makes sense. ## Solution - Rename `bevy_platform_support` to `bevy_platform`. |

||

|

|

6c619397d5

|

Unify RenderMaterialInstances and RenderMeshMaterialIds, and fix an associated race condition. (#18734)

Currently, `RenderMaterialInstances` and `RenderMeshMaterialIds` are very similar render-world resources: the former maps main world meshes to typed material asset IDs, and the latter maps main world meshes to untyped material asset IDs. This is needlessly-complex and wasteful, so this patch unifies the two in favor of a single untyped `RenderMaterialInstances` resource. This patch also fixes a subtle issue that could cause mesh materials to be incorrect if a `MeshMaterial3d<A>` was removed and replaced with a `MeshMaterial3d<B>` material in the same frame. The problematic pattern looks like: 1. `extract_mesh_materials<B>` runs and, seeing the `Changed<MeshMaterial3d<B>>` condition, adds an entry mapping the mesh to the new material to the untyped `RenderMeshMaterialIds`. 2. `extract_mesh_materials<A>` runs and, seeing that the entity is present in `RemovedComponents<MeshMaterial3d<A>>`, removes the entry from `RenderMeshMaterialIds`. 3. The material slot is now empty, and the mesh will show up as whatever material happens to be in slot 0 in the material data slab. This commit fixes the issue by splitting out `extract_mesh_materials` into *three* phases: *extraction*, *early sweeping*, and *late sweeping*, which run in that order: 1. The *extraction* system, which runs for each material, updates `RenderMaterialInstances` records whenever `MeshMaterial3d` components change, and updates a change tick so that the following system will know not to remove it. 2. The *early sweeping* system, which runs for each material, processes entities present in `RemovedComponents<MeshMaterial3d>` and removes each such entity's record from `RenderMeshInstances` only if the extraction system didn't update it this frame. This system runs after *all* extraction systems have completed, fixing the race condition. 3. 
The *late sweeping* system, which runs only once regardless of the number of materials in the scene, processes entities present in `RemovedComponents<ViewVisibility>` and, as in the early sweeping phase, removes each such entity's record from `RenderMeshInstances` only if the extraction system didn't update it this frame. At the end, the late sweeping system updates the change tick. Because this pattern happens relatively frequently, I think this PR should land for 0.16. |
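The extraction and sweeping phases described above can be modelled with a per-record change tick. The following is a minimal sketch using only the standard library; `MaterialInstances`, its fields, and the integer type aliases are hypothetical stand-ins, not the render-world types from the patch.

```rust
use std::collections::HashMap;

// Hypothetical stand-ins for the real entity, material, and tick types.
type MainEntity = u32;
type MaterialId = u32;
type Tick = u32;

#[derive(Default)]
struct MaterialInstances {
    current_tick: Tick,
    // entity -> (material, tick at which extraction last wrote the record)
    records: HashMap<MainEntity, (MaterialId, Tick)>,
}

impl MaterialInstances {
    /// Extraction: write the record and stamp it with the current tick.
    fn extract(&mut self, entity: MainEntity, material: MaterialId) {
        self.records.insert(entity, (material, self.current_tick));
    }

    /// Sweeping: remove a record only if extraction did not update it this frame.
    fn sweep(&mut self, removed: &[MainEntity]) {
        for entity in removed {
            let stale = matches!(
                self.records.get(entity),
                Some(&(_, tick)) if tick != self.current_tick
            );
            if stale {
                self.records.remove(entity);
            }
        }
    }

    /// End of the late sweep: advance the tick for the next frame.
    fn finish_frame(&mut self) {
        self.current_tick += 1;
    }
}
```

Because the sweep runs after every extraction has finished and checks the tick, swapping one material type for another on the same entity within a frame leaves the new record in place regardless of system ordering.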

||

|

|

f57c7a43c4

|

reexport entity set collections in entity module (#18413)

# Objective Unlike for their helper types, the import paths for `unique_array::UniqueEntityArray`, `unique_slice::UniqueEntitySlice`, `unique_vec::UniqueEntityVec`, `hash_set::EntityHashSet`, `hash_map::EntityHashMap`, `index_set::EntityIndexSet`, `index_map::EntityIndexMap` are quite redundant. When looking at the structure of `hashbrown`, we can also see that while both `HashSet` and `HashMap` have their own modules, the main types themselves are re-exported to the crate level. ## Solution Re-export the types in their shared `entity` parent module, and simplify the imports where they're used. |

||

|

|

f4a5e8bc51

|

Tracy GPU support (#18490)

# Objective - Add tracy GPU support ## Solution - Build on top of the existing render diagnostics recording to also upload gpu timestamps to tracy - Copy code from https://github.com/Wumpf/wgpu-profiler ## Showcase  |

||

|

|

8d5474a2f2

|

Fix unnecessary specialization checks for apps with many materials (#18410)

# Objective For materials that aren't being used or a visible entity doesn't have an instance of, we were unnecessarily constantly checking whether they needed specialization, saying yes (because the material had never been specialized for that entity), and failing to look up the material instance. ## Solution If an entity doesn't have an instance of the material, it can't possibly need specialization, so exit early before spending time doing the check. Fixes #18388. |

||

|

|

d6db78b5dd

|

Replace internal uses of insert_or_spawn_batch (#18035)

## Objective `insert_or_spawn_batch` is due to be deprecated eventually (#15704), and removing uses internally will make that easier. ## Solution Replaced internal uses of `insert_or_spawn_batch` with `try_insert_batch` (non-panicking variant because `insert_or_spawn_batch` didn't panic). All of the internal uses are in rendering code. Since retained rendering was meant to get rid of non-opaque entity IDs, I assume the code was just using `insert_or_spawn_batch` because `insert_batch` didn't exist and not because it actually wanted to spawn something. However, I am *not* confident in my ability to judge rendering code. |

||

|

|

54701a844e

|

Revert "Replace Ambient Lights with Environment Map Lights (#17482)" (#18167)

This reverts commit

|

||

|

|

0b5302d96a

|

Replace Ambient Lights with Environment Map Lights (#17482)

# Objective Transparently uses simple `EnvironmentMapLight`s to mimic `AmbientLight`s. Implements the first part of #17468, but I can implement hemispherical lights in this PR too if needed. ## Solution - A function `EnvironmentMapLight::solid_color(&mut Assets<Image>, Color)` is provided to make an environment light with a solid color. - A new system is added to `SimulationLightSystems` that maps `AmbientLight`s on views or the world to a corresponding `EnvironmentMapLight`. I have never worked with (or on) Bevy before, so nitpicky comments on how I did things are appreciated :). ## Testing Testing was done on a modified version of the `3d/lighting` example, where I removed all lights except the ambient light. I have not included the example, but can if required. ## Migration `bevy_pbr::AmbientLight` has been deprecated, so all usages of it should be replaced by a `bevy_pbr::EnvironmentMapLight` created with `EnvironmentMapLight::solid_color` placed on the camera. There is no alternative to ambient lights as resources. |

||

|

|

058497e0bb

|

Change Commands::get_entity to return Result and remove panic from Commands::entity (#18043)

## Objective Alternative to #18001. - Now that systems can handle the `?` operator, `get_entity` returning `Result` would be more useful than `Option`. - With `get_entity` being more flexible, combined with entity commands now checking the entity's existence automatically, the panic in `entity` isn't really necessary. ## Solution - Changed `Commands::get_entity` to return `Result<EntityCommands, EntityDoesNotExistError>`. - Removed panic from `Commands::entity`. |
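To illustrate why a `Result` return composes better with `?` than an `Option`, here is a small standard-library sketch. The `World` type, its `alive` set, and `despawn_target` are hypothetical; only the error name `EntityDoesNotExistError` is taken from the description above, and this toy version of it is not Bevy's definition.

```rust
use std::collections::HashSet;
use std::fmt;

// Toy error type that borrows the name from the PR description; the fields are assumed.
#[derive(Debug, PartialEq)]
struct EntityDoesNotExistError(u32);

impl fmt::Display for EntityDoesNotExistError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "entity {} does not exist", self.0)
    }
}

impl std::error::Error for EntityDoesNotExistError {}

// Hypothetical world that only tracks which entity ids are alive.
struct World {
    alive: HashSet<u32>,
}

impl World {
    /// Returns the id if the entity exists, otherwise a typed error.
    fn get_entity(&self, id: u32) -> Result<u32, EntityDoesNotExistError> {
        if self.alive.contains(&id) {
            Ok(id)
        } else {
            Err(EntityDoesNotExistError(id))
        }
    }
}

/// A fallible "system": `?` forwards the lookup error to the caller.
fn despawn_target(world: &mut World, id: u32) -> Result<(), Box<dyn std::error::Error>> {
    let entity = world.get_entity(id)?;
    world.alive.remove(&entity);
    Ok(())
}
```

With an `Option`, the caller would need an explicit `ok_or(...)` before `?` could be used in a function that returns a `Result`.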

||

|

|

4880a231de

|

Implement occlusion culling for directional light shadow maps. (#17951)

Two-phase occlusion culling can be helpful for shadow maps just as it can for a prepass, in order to reduce vertex and alpha mask fragment shading overhead. This patch implements occlusion culling for shadow maps from directional lights, when the `OcclusionCulling` component is present on the entities containing the lights. Shadow maps from point lights are deferred to a follow-up patch. Much of this patch involves expanding the hierarchical Z-buffer to cover shadow maps in addition to standard view depth buffers. The `scene_viewer` example has been updated to add `OcclusionCulling` to the directional light that it creates. This improved the performance of the rend3 sci-fi test scene when enabling shadows. |

||

|

|

5e569af2d0

|

Make the specialized pipeline cache two-level. (#17915)

Currently, the specialized pipeline cache maps a (view entity, mesh entity) tuple to the retained pipeline for that entity. This causes two problems: 1. Using the view entity is incorrect, because the view entity isn't stable from frame to frame. 2. Switching the view entity to a `RetainedViewEntity`, which is necessary for correctness, significantly regresses performance of `specialize_material_meshes` and `specialize_shadows` because of the loss of the fast `EntityHash`. This patch fixes both problems by switching to a *two-level* hash table. The outer level of the table maps each `RetainedViewEntity` to an inner table, which maps each `MainEntity` to its pipeline ID and change tick. Because we loop over views first and, within that loop, loop over entities visible from that view, we hoist the slow lookup of the view entity out of the inner entity loop. Additionally, this patch fixes a bug whereby pipeline IDs were leaked when removing the view. We still have a problem with leaking pipeline IDs for deleted entities, but that won't be fixed until the specialized pipeline cache is retained. This patch improves performance of the [Caldera benchmark] from 7.8× faster than 0.14 to 9.0× faster than 0.14, when applied on top of the global binding arrays PR, #17898. [Caldera benchmark]: https://github.com/DGriffin91/bevy_caldera_scene |
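The two-level layout can be sketched with nested standard-library hash maps. All names below (`ViewKey`, `SpecializedPipelineCache`, `specialize_view`, `remove_view`) are assumptions made for illustration, and the default hasher stands in for the faster entity hashing the patch refers to.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for a stable view key (main entity plus subview).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct ViewKey {
    main_entity: u64,
    subview: u32,
}

type MainEntity = u64;
type PipelineId = u32;
type Tick = u32;

#[derive(Default)]
struct SpecializedPipelineCache {
    // Outer level: one inner table per view. Inner level: entity -> (tick, pipeline).
    views: HashMap<ViewKey, HashMap<MainEntity, (Tick, PipelineId)>>,
}

impl SpecializedPipelineCache {
    /// Looks up the view once, then loops over the entities visible from it.
    fn specialize_view(
        &mut self,
        view: ViewKey,
        visible: &[MainEntity],
        tick: Tick,
        mut specialize: impl FnMut(MainEntity) -> PipelineId,
    ) {
        // The slower view lookup is hoisted out of the per-entity loop.
        let inner = self.views.entry(view).or_default();
        for &entity in visible {
            inner
                .entry(entity)
                .or_insert_with(|| (tick, specialize(entity)));
        }
    }

    /// Dropping the outer entry frees every pipeline record for that view at once.
    fn remove_view(&mut self, view: &ViewKey) {
        self.views.remove(view);
    }
}
```

Removing a view is a single outer-map removal in this layout, which is one way the pipeline ID leak on view removal could be avoided.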

||

|

|

94deca81bf

|

Use target_abi = "sim" instead of ios_simulator feature (#17702)

## Objective Get rid of a redundant Cargo feature flag. ## Solution Use the built-in `target_abi = "sim"` instead of a custom Cargo feature flag, which is set for the iOS (and visionOS and tvOS) simulator. This has been stable since Rust 1.78. In the future, some of this may become redundant if Wgpu implements proper support for the iOS Simulator: https://github.com/gfx-rs/wgpu/issues/7057 CC @mockersf who implemented [the original fix](https://github.com/bevyengine/bevy/pull/10178). ## Testing - Open mobile example in Xcode. - Launch the simulator. - See that no errors are emitted. - Remove the code cfg-guarded behind `target_abi = "sim"`. - See that an error now happens. (I haven't actually performed these steps on the latest `main`, because I'm hitting an unrelated error (EDIT: It was https://github.com/bevyengine/bevy/pull/17637). But tested it on 0.15.0). --- ## Migration Guide > If you're using a project that builds upon the mobile example, remove the `ios_simulator` feature from your `Cargo.toml` (Bevy now handles this internally). |

||

|

|

85b366a8a2

|

Cache MeshInputUniform indices in each RenderBin. (#17772)

Currently, we look up each `MeshInputUniform` index in a hash table that maps the main entity ID to the index every frame. This is inefficient, cache unfriendly, and unnecessary, as the `MeshInputUniform` index for an entity remains the same from frame to frame (even if the input uniform changes). This commit changes the `IndexSet` in the `RenderBin` to an `IndexMap` that maps the `MainEntity` to `MeshInputUniformIndex` (a new type that this patch adds for more type safety). On Caldera with parallel `batch_and_prepare_binned_render_phase`, this patch improves that function from 3.18 ms to 2.42 ms, a 31% speedup. |

||

|

|

69db29efb9

|

Sweep bins after queuing so as to only sweep them once. (#17787)

Currently, we *sweep*, or remove entities from bins when those entities became invisible or changed phases, during `queue_material_meshes` and similar phases. This, however, is wrong, because `queue_material_meshes` executes once per material type, not once per phase. This could result in sweeping bins multiple times per phase, which can corrupt the bins. This commit fixes the issue by moving sweeping to a separate system that runs after queuing. This manifested itself as entities appearing and disappearing seemingly at random. Closes #17759. --------- Co-authored-by: Robert Swain <robert.swain@gmail.com> |

||

|

|

669d139c13

|

Upgrade to wgpu v24 (#17542)

Didn't remove WgpuWrapper. Not sure if it's needed or not still. ## Testing - Did you test these changes? If so, how? Example runner - Are there any parts that need more testing? Web (portable atomics thingy?), DXC. ## Migration Guide - Bevy has upgraded to [wgpu v24](https://github.com/gfx-rs/wgpu/blob/trunk/CHANGELOG.md#v2400-2025-01-15). - When using the DirectX 12 rendering backend, the new priority system for choosing a shader compiler is as follows: - If the `WGPU_DX12_COMPILER` environment variable is set at runtime, it is used - Else if the new `statically-linked-dxc` feature is enabled, a custom version of DXC will be statically linked into your app at compile time. - Else Bevy will look in the app's working directory for `dxcompiler.dll` and `dxil.dll` at runtime. - Else if they are missing, Bevy will fall back to FXC (not recommended) --------- Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com> Co-authored-by: IceSentry <c.giguere42@gmail.com> Co-authored-by: François Mockers <francois.mockers@vleue.com> |
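The DirectX 12 compiler priority list in the migration guide reads as a simple fall-through. The sketch below restates it as a pure function; the enum, the function name, and the three inputs are hypothetical and only mirror the order given above, not any wgpu or Bevy API.

```rust
// Hypothetical outcome of the selection; not a wgpu type.
#[derive(Debug, PartialEq)]
enum ShaderCompiler {
    /// Whatever the `WGPU_DX12_COMPILER` environment variable asked for.
    FromEnvironment(String),
    /// DXC linked into the binary via the `statically-linked-dxc` feature.
    StaticallyLinkedDxc,
    /// `dxcompiler.dll` and `dxil.dll` found in the working directory.
    DynamicDxc,
    /// Fallback when none of the above applies (not recommended).
    Fxc,
}

/// Applies the priority order from the migration guide, highest priority first.
fn choose_dx12_compiler(
    env_override: Option<&str>,
    statically_linked_dxc: bool,
    dxc_dlls_present: bool,
) -> ShaderCompiler {
    if let Some(value) = env_override {
        return ShaderCompiler::FromEnvironment(value.to_string());
    }
    if statically_linked_dxc {
        return ShaderCompiler::StaticallyLinkedDxc;
    }
    if dxc_dlls_present {
        ShaderCompiler::DynamicDxc
    } else {
        ShaderCompiler::Fxc
    }
}
```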

||

|

|

9f9373c7d9

|

Fix shadow retention by keying off the RetainedViewEntity, not the light's render world entity. (#17749)

Right now, we key the cached light change ticks off the `LightEntity`. This uses the render world entity, which isn't stable between frames. Thus in practice few shadows are retained from frame to frame. This PR fixes the issue by keying off the `RetainedViewEntity` instead, which is designed to be stable from frame to frame. |

||

|

|

7fc122ad16

|

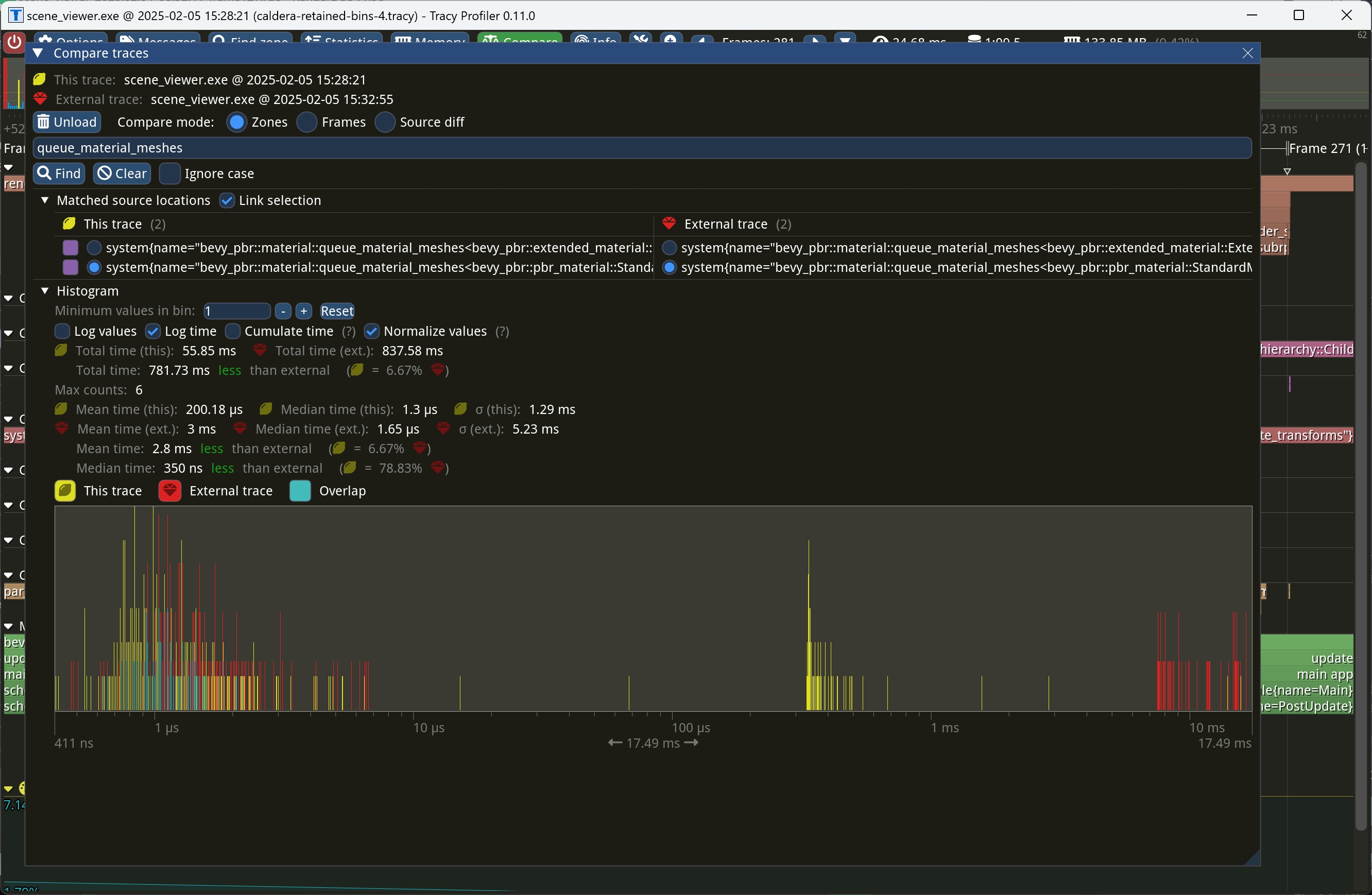

Retain bins from frame to frame. (#17698)

This PR makes Bevy keep entities in bins from frame to frame if they haven't changed. This reduces the time spent in `queue_material_meshes` and related functions to near zero for static geometry. This patch uses the same change tick technique that #17567 uses to detect when meshes have changed in such a way as to require re-binning. In order to quickly find the relevant bin for an entity when that entity has changed, we introduce a new type of cache, the *bin key cache*. This cache stores a mapping from main world entity ID to cached bin key, as well as the tick of the most recent change to the entity. As we iterate through the visible entities in `queue_material_meshes`, we check the cache to see whether the entity needs to be re-binned. If it doesn't, then we mark it as clean in the `valid_cached_entity_bin_keys` bit set. If it does, then we insert it into the correct bin, and then mark the entity as clean. At the end, all entities not marked as clean are removed from the bins. This patch has a dramatic effect on the rendering performance of most benchmarks, as it effectively eliminates `queue_material_meshes` from the profile. Note, however, that it generally simultaneously regresses `batch_and_prepare_binned_render_phase` by a bit (not by enough to outweigh the win, however). I believe that's because, before this patch, `queue_material_meshes` put the bins in the CPU cache for `batch_and_prepare_binned_render_phase` to use, while with this patch, `batch_and_prepare_binned_render_phase` must load the bins into the CPU cache itself. On Caldera, this reduces the time spent in `queue_material_meshes` from 5+ ms to 0.2ms-0.3ms. Note that benchmarking on that scene is very noisy right now because of https://github.com/bevyengine/bevy/issues/17535.  |
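The bin key cache and the final sweep can be sketched as follows. This is a simplified model under stated assumptions: `Bins`, its fields, and the tuple passed to `queue` are hypothetical, and a `HashSet` stands in for the `valid_cached_entity_bin_keys` bit set.

```rust
use std::collections::{HashMap, HashSet};

// Hypothetical stand-ins for the real key and tick types.
type MainEntity = u32;
type BinKey = u32;
type Tick = u32;

#[derive(Default)]
struct Bins {
    bins: HashMap<BinKey, HashSet<MainEntity>>,
    // Bin key cache: entity -> (cached bin key, tick of the change that produced it).
    cache: HashMap<MainEntity, (BinKey, Tick)>,
    // Counts how often an entity actually had to be (re-)binned.
    rebinned: usize,
}

impl Bins {
    fn remove_from_bin(&mut self, key: BinKey, entity: MainEntity) {
        let now_empty = match self.bins.get_mut(&key) {
            Some(bin) => {
                bin.remove(&entity);
                bin.is_empty()
            }
            None => false,
        };
        if now_empty {
            self.bins.remove(&key);
        }
    }

    /// `visible` holds (entity, current bin key, tick of the entity's last change).
    fn queue(&mut self, visible: &[(MainEntity, BinKey, Tick)]) {
        let mut clean = HashSet::new();
        for &(entity, key, changed) in visible {
            let cached = self.cache.get(&entity).copied();
            // Re-bin only when the entity is new or changed since it was cached.
            if cached.map(|(_, tick)| tick) != Some(changed) {
                if let Some((old_key, _)) = cached {
                    self.remove_from_bin(old_key, entity);
                }
                self.bins.entry(key).or_default().insert(entity);
                self.cache.insert(entity, (key, changed));
                self.rebinned += 1;
            }
            clean.insert(entity);
        }
        // Sweep: everything not marked clean this frame leaves its bin.
        let stale: Vec<MainEntity> = self
            .cache
            .keys()
            .copied()
            .filter(|entity| !clean.contains(entity))
            .collect();
        for entity in stale {
            if let Some((key, _)) = self.cache.remove(&entity) {
                self.remove_from_bin(key, entity);
            }
        }
    }
}
```

For static geometry the loop body reduces to one cache lookup and one insert into the clean set per entity, which matches the near-zero queue cost described above.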

||

|

|

bcfc086f3d

|

Include the material bind group in the shadow batch set key. (#17738)

Right now, meshes aren't grouped together based on the bindless texture slab when drawing shadows. This manifests itself as flickering in Bistro. I believe that there are two causes of this: 1. Alpha masked shadows may try to sample from the wrong texture, causing the alpha mask to appear and disappear. 2. Objects may try to sample from the blank textures that we pad out the bindless slabs with, causing them to vanish intermittently. This commit fixes the issue by including the material bind group ID as part of the shadow batch set key, just as we do for the prepass and main pass. |

||

|

|

2ea5e9b846

|

Cold Specialization (#17567)

# Cold Specialization ## Objective An ongoing part of our quest to retain everything in the render world, cold-specialization aims to cache pipeline specialization so that pipeline IDs can be recomputed only when necessary, rather than every frame. This approach reduces redundant work in stable scenes, while still accommodating scenarios in which materials, views, or visibility might change, as well as unlocking future optimization work like retaining render bins. ## Solution Queue systems are split into a specialization system and queue system, the former of which only runs when necessary to compute a new pipeline id. Pipelines are invalidated using a combination of change detection and ECS ticks. ### The difficulty with change detection Detecting “what changed” can be tricky because pipeline specialization depends not only on the entity’s components (e.g., mesh, material, etc.) but also on which view (camera) it is rendering in. In other words, the cache key for a given pipeline id is a view entity/render entity pair. As such, it's not sufficient simply to react to change detection in order to specialize -- an entity could currently be out of view or could be rendered in the future in a camera that is currently disabled or hasn't spawned yet. ### Why ticks? Ticks ensure correctness by letting us compare the last time a view or entity was updated against the tick recorded with the cached pipeline id. This ensures that even if an entity was out of view or has never been seen in a given camera before, we can still correctly determine whether it needs to be re-specialized. ## Testing TODO: Tested a bunch of different examples, need to test more. ## Migration Guide TODO - `AssetEvents` has been moved into the `PostUpdate` schedule. --------- Co-authored-by: Patrick Walton <pcwalton@mimiga.net> |
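The tick comparison can be illustrated with a small cache keyed by a (view, entity) pair. This is a sketch under assumptions: `PipelineCache`, `get_or_specialize`, and the plain integer ticks are hypothetical, tick wrap-around is ignored, and the pipeline id is a placeholder counter rather than a real specialization result.

```rust
use std::collections::HashMap;

// Hypothetical stand-ins for the real id and tick types.
type ViewId = u32;
type EntityId = u32;
type Tick = u32;
type PipelineId = u32;

#[derive(Default)]
struct PipelineCache {
    // (view, entity) -> (tick at which the pipeline was cached, pipeline id)
    entries: HashMap<(ViewId, EntityId), (Tick, PipelineId)>,
    specializations: usize,
}

impl PipelineCache {
    /// Re-specializes only if the view or the entity changed after the entry was cached.
    fn get_or_specialize(
        &mut self,
        view: ViewId,
        entity: EntityId,
        view_tick: Tick,
        entity_tick: Tick,
        now: Tick,
    ) -> PipelineId {
        let last_changed = view_tick.max(entity_tick);
        if let Some(&(cached_at, id)) = self.entries.get(&(view, entity)) {
            if cached_at >= last_changed {
                return id;
            }
        }
        // Placeholder for the expensive specialization step.
        self.specializations += 1;
        let id = self.specializations as PipelineId;
        self.entries.insert((view, entity), (now, id));
        id
    }
}
```

Because the check runs when the entity is actually queued for a view, a change that happened while the entity was out of view, or a camera that has never seen the entity, is still handled correctly.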

||

|

|

9bc0ae33c3

|

Move hashbrown and foldhash out of bevy_utils (#17460)

# Objective - Contributes to #16877 ## Solution - Moved `hashbrown`, `foldhash`, and related types out of `bevy_utils` and into `bevy_platform_support` - Refactored the above to match the layout of these types in `std`. - Updated crates as required. ## Testing - CI --- ## Migration Guide - The following items were moved out of `bevy_utils` and into `bevy_platform_support::hash`: - `FixedState` - `DefaultHasher` - `RandomState` - `FixedHasher` - `Hashed` - `PassHash` - `PassHasher` - `NoOpHash` - The following items were moved out of `bevy_utils` and into `bevy_platform_support::collections`: - `HashMap` - `HashSet` - `bevy_utils::hashbrown` has been removed. Instead, import from `bevy_platform_support::collections` _or_ take a dependency on `hashbrown` directly. - `bevy_utils::Entry` has been removed. Instead, import from `bevy_platform_support::collections::hash_map` or `bevy_platform_support::collections::hash_set` as appropriate. - All of the above equally apply to `bevy::utils` and `bevy::platform_support`. ## Notes - I left `PreHashMap`, `PreHashMapExt`, and `TypeIdMap` in `bevy_utils` as they might be candidates for micro-crating. They can always be moved into `bevy_platform_support` at a later date if desired. |

||

|

|

5a9bc28502

|

Support non-Vec data structures in relations (#17447)

# Objective

The existing `RelationshipSourceCollection` uses `Vec` as the only possible backing for our relationships. While a reasonable choice, benchmarking use cases might reveal that a different data type is better or faster.

For example:

- Not all relationships require a stable ordering between the relationship sources (i.e. children). In cases where we a) have many such relations and b) don't care about the ordering between them, a hash set is likely a better datastructure than a `Vec`.
- The number of children-like entities may be small on average, and a `smallvec` may be faster

## Solution

- Implement `RelationshipSourceCollection` for `EntityHashSet`, our custom entity-optimized `HashSet`.
- ~~Implement `DoubleEndedIterator` for `EntityHashSet` to make things compile.~~
- This implementation was cursed and very surprising.
- Instead, by moving the iterator type on `RelationshipSourceCollection` from an erased RPTIT to an explicit associated type we can add a trait bound on the offending methods!
- Implement `RelationshipSourceCollection` for `SmallVec`

## Testing

I've added a pair of new tests to make sure this pattern compiles successfully in practice!

## Migration Guide

`EntityHashSet` and `EntityHashMap` are no longer re-exported in `bevy_ecs::entity` directly. If you were not using `bevy_ecs` / `bevy`'s `prelude`, you can access them through their now-public modules, `hash_set` and `hash_map` instead.

## Notes to reviewers

The `EntityHashSet::Iter` type needs to be public for this impl to be allowed. I initially renamed it to something that wasn't ambiguous and re-exported it, but as @Victoronz pointed out, that was somewhat unidiomatic.

In

|

||

|

|

35101f3ed5

|

Use multi_draw_indirect_count where available, in preparation for two-phase occlusion culling. (#17211)

This commit allows Bevy to use `multi_draw_indirect_count` for drawing meshes. The `multi_draw_indirect_count` feature works just like `multi_draw_indirect`, but it takes the number of indirect parameters from a GPU buffer rather than specifying it on the CPU. Currently, the CPU constructs the list of indirect draw parameters with the instance count for each batch set to zero, uploads the resulting buffer to the GPU, and dispatches a compute shader that bumps the instance count for each mesh that survives culling. Unfortunately, this is inefficient when we support `multi_draw_indirect_count`. Draw commands corresponding to meshes for which all instances were culled will remain present in the list when calling `multi_draw_indirect_count`, causing overhead. Proper use of `multi_draw_indirect_count` requires eliminating these empty draw commands. To address this inefficiency, this PR makes Bevy fully construct the indirect draw commands on the GPU instead of on the CPU. Instead of writing instance counts to the draw command buffer, the mesh preprocessing shader now writes them to a separate *indirect metadata buffer*. A second compute dispatch known as the *build indirect parameters* shader runs after mesh preprocessing and converts the indirect draw metadata into actual indirect draw commands for the GPU. The build indirect parameters shader operates on a batch at a time, rather than an instance at a time, and as such each thread writes only 0 or 1 indirect draw parameters, simplifying the current logic in `mesh_preprocessing`, which currently has to have special cases for the first mesh in each batch. The build indirect parameters shader emits draw commands in a tightly packed manner, enabling maximally efficient use of `multi_draw_indirect_count`. Along the way, this patch switches mesh preprocessing to dispatch one compute invocation per render phase per view, instead of dispatching one compute invocation per view. 
This is preparation for two-phase occlusion culling, in which we will have two mesh preprocessing stages. In that scenario, the first mesh preprocessing stage must only process opaque and alpha tested objects, so the work items must be separated into those that are opaque or alpha tested and those that aren't. Thus this PR splits out the work items into a separate buffer for each phase. As this patch rewrites so much of the mesh preprocessing infrastructure, it was simpler to just fold the change into this patch instead of deferring it to the forthcoming occlusion culling PR. Finally, this patch changes mesh preprocessing so that it runs separately for indexed and non-indexed meshes. This is because draw commands for indexed and non-indexed meshes have different sizes and layouts. *The existing code is actually broken for non-indexed meshes*, as it attempts to overlay the indirect parameters for non-indexed meshes on top of those for indexed meshes. Consequently, right now the parameters will be read incorrectly when multiple non-indexed meshes are multi-drawn together. *This is a bug fix* and, as with the change to dispatch phases separately noted above, was easiest to include in this patch as opposed to separately. ## Migration Guide * Systems that add custom phase items now need to populate the indirect drawing-related buffers. See the `specialized_mesh_pipeline` example for an example of how this is done. |
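The *build indirect parameters* pass can be modelled on the CPU as a compaction step: each batch contributes zero or one draw command, and the survivors are packed tightly. The sketch below is only an analogy for the GPU shader; the struct layouts and the function name are assumptions, not the buffer formats used in the patch.

```rust
// Hypothetical per-batch metadata written by mesh preprocessing.
#[derive(Clone, Copy, Debug, PartialEq)]
struct IndirectMetadata {
    index_count: u32,
    first_index: u32,
    first_instance: u32,
    /// Number of instances in this batch that survived culling.
    surviving_instances: u32,
}

// Hypothetical indexed indirect draw command.
#[derive(Clone, Copy, Debug, PartialEq)]
struct DrawIndexedIndirect {
    index_count: u32,
    instance_count: u32,
    first_index: u32,
    base_vertex: i32,
    first_instance: u32,
}

/// Emits one command per batch with surviving instances and none for fully culled batches.
/// The length of the result plays the role of the GPU-side draw count.
fn build_indirect_parameters(metadata: &[IndirectMetadata]) -> Vec<DrawIndexedIndirect> {
    metadata
        .iter()
        .filter(|batch| batch.surviving_instances > 0)
        .map(|batch| DrawIndexedIndirect {
            index_count: batch.index_count,
            instance_count: batch.surviving_instances,
            first_index: batch.first_index,
            base_vertex: 0,
            first_instance: batch.first_instance,
        })
        .collect()
}
```

Non-indexed meshes would need a separate command struct with a different layout, which is the reason the patch runs preprocessing separately for indexed and non-indexed meshes.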

||

|

|

47d25c13d7

|

Ambient component (#17343)

# Objective allow setting ambient light via component on cameras. arguably fixes #7193 note i chose to use a component rather than an entity since it was not clear to me how to manage multiple ambient sources for a single renderlayer, and it makes for a very small changeset. ## Solution - make ambient light a component as well as a resource - extract it - use the component if present on a camera, fallback to the resource ## Testing i added ```rs if index == 1 { commands.entity(camera).insert(AmbientLight{ color: Color::linear_rgba(1.0, 0.0, 0.0, 1.0), brightness: 1000.0, ..Default::default() }); } ``` at line 84 of the split_screen example --------- Co-authored-by: François Mockers <mockersf@gmail.com> |

||

|

|

141b7673ab

|

Key render phases off the main world view entity, not the render world view entity. (#16942)

We won't be able to retain render phases from frame to frame if the keys are unstable. It's not as simple as simply keying off the main world entity, however, because some main world entities extract to multiple render world entities. For example, directional lights extract to multiple shadow cascades, and point lights extract to one view per cubemap face. Therefore, we key off a new type, `RetainedViewEntity`, which contains the main entity plus a *subview ID*. This is part of the preparation for retained bins. --------- Co-authored-by: ickshonpe <david.curthoys@googlemail.com> |
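A key made of the main entity plus a subview ID stays identical from frame to frame, so per-view data stored under it is found again. The following sketch uses hypothetical names (`RetainedViewKey`, `cubemap_face_views`, `RenderPhases`) and plain integers in place of real entity IDs.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for a retained view key: main entity plus subview index.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct RetainedViewKey {
    main_entity: u64,
    subview_index: u32,
}

/// A point light extracts to one view per cubemap face, so it yields six keys.
fn cubemap_face_views(light: u64) -> [RetainedViewKey; 6] {
    std::array::from_fn(|face| RetainedViewKey {
        main_entity: light,
        subview_index: face as u32,
    })
}

#[derive(Default)]
struct RenderPhases {
    // Phase contents (here just entity ids) stored per retained view.
    phases: HashMap<RetainedViewKey, Vec<u32>>,
}

impl RenderPhases {
    /// Returns the phase for a view, creating it the first time the view is seen.
    fn phase_mut(&mut self, view: RetainedViewKey) -> &mut Vec<u32> {
        self.phases.entry(view).or_default()
    }
}
```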

||

|

|

4bca7f1b6d

|

Improved Command Errors (#17215)

# Objective Rework / build on #17043 to simplify the implementation. #17043 should be merged first, and the diff from this PR will get much nicer after it is merged (this PR is net negative LOC). ## Solution 1. Command and EntityCommand have been vastly simplified. No more marker components. Just one function. 2. Command and EntityCommand are now generic on the return type. This enables result-less commands to exist, and allows us to statically distinguish between fallible and infallible commands, which allows us to skip the "error handling overhead" for cases that don't need it. 3. There are now only two command queue variants: `queue` and `queue_fallible`. `queue` accepts commands with no return type. `queue_fallible` accepts commands that return a Result (specifically, one that returns an error that can convert to `bevy_ecs::result::Error`). 4. I've added the concept of the "default error handler", which is used by `queue_fallible`. This is a simple direct call to the `panic()` error handler by default. Users that want to override this can enable the `configurable_error_handler` cargo feature, then initialize the GLOBAL_ERROR_HANDLER value on startup. This is behind a flag because there might be minor overhead with `OnceLock` and I'm guessing this will be a niche feature. We can also do perf testing with OnceLock if someone really wants it to be used unconditionally, but I don't personally feel the need to do that. 5. I removed the "temporary error handler" on Commands (and all code associated with it). It added more branching, made Commands bigger / more expensive to initialize (note that we construct it at high frequencies / treat it like a pointer type), made the code harder to follow, and introduced a bunch of additional functions. We instead rely on the new default error handler used in `queue_fallible` for most things. In the event that a custom handler is required, `handle_error_with` can be used. 6. 
EntityCommand now _only_ supports functions that take `EntityWorldMut` (and all existing entity commands have been ported). Removing the marker component from EntityCommand hinged on this change, but I strongly believe this is for the best anyway, as this sets the stage for more efficient batched entity commands. 7. I added `EntityWorldMut::resource` and the other variants for more ergonomic resource access on `EntityWorldMut` (removes the need for entity.world_scope, which also incurs entity-lookup overhead). ## Open Questions 1. I believe we could merge `queue` and `queue_fallible` into a single `queue` which accepts both fallible and infallible commands (via the introduction of a `QueueCommand` trait). Is this desirable? |
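The split between `queue` and `queue_fallible` can be sketched with closures whose return type decides which path they take. Everything here is a simplified, hypothetical model: `World`, `CommandQueue`, and `apply` are toy types, and the error handler records the message instead of panicking (the description says the real default panics) so that the example runs to completion.

```rust
type BoxError = Box<dyn std::error::Error>;

// Hypothetical world that records what commands did and which errors occurred.
#[derive(Default)]
struct World {
    log: Vec<String>,
    errors: Vec<String>,
}

#[derive(Default)]
struct CommandQueue {
    commands: Vec<Box<dyn FnOnce(&mut World)>>,
}

impl CommandQueue {
    /// Infallible commands are stored as-is, with no error-handling wrapper.
    fn queue(&mut self, command: impl FnOnce(&mut World) + 'static) {
        self.commands.push(Box::new(command));
    }

    /// Fallible commands are wrapped so that an `Err` reaches the default handler.
    fn queue_fallible<E>(&mut self, command: impl FnOnce(&mut World) -> Result<(), E> + 'static)
    where
        E: Into<BoxError> + 'static,
    {
        self.commands.push(Box::new(move |world: &mut World| {
            if let Err(err) = command(world) {
                // Assumed handler for this sketch: record the message rather than panic.
                let err: BoxError = err.into();
                world.errors.push(err.to_string());
            }
        }));
    }

    /// Runs every queued command in order.
    fn apply(&mut self, world: &mut World) {
        for command in self.commands.drain(..) {
            command(world);
        }
    }
}
```

Only the fallible path pays for the wrapper, which is the kind of statically known distinction the description refers to when it mentions skipping the error handling overhead.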

||

|

|

3742e621ef

|

Allow clippy::too_many_arguments to lint without warnings (#17249)

# Objective Many instances of `clippy::too_many_arguments` linting happen to be on systems - functions which we don't call manually, and thus there's not much reason to worry about the argument count. ## Solution Allow `clippy::too_many_arguments` globally, and remove all lint attributes related to it. |

||

|

|

a8f15bd95e

|

Introduce two-level bins for multidrawable meshes. (#16898)

Currently, our batchable binned items are stored in a hash table that maps bin key, which includes the batch set key, to a list of entities. Multidraw is handled by sorting the bin keys and accumulating adjacent bins that can be multidrawn together (i.e. have the same batch set key) into multidraw commands during `batch_and_prepare_binned_render_phase`. This is reasonably efficient right now, but it will complicate future work to retain indirect draw parameters from frame to frame. Consider what must happen when we have retained indirect draw parameters and the application adds a bin (i.e. a new mesh) that shares a batch set key with some pre-existing meshes. (That is, the new mesh can be multidrawn with the pre-existing meshes.) To be maximally efficient, our goal in that scenario will be to update *only* the indirect draw parameters for the batch set (i.e. multidraw command) containing the mesh that was added, while leaving the others alone. That means that we have to quickly locate all the bins that belong to the batch set being modified. In the existing code, we would have to sort the list of bin keys so that bins that can be multidrawn together become adjacent to one another in the list. Then we would have to do a binary search through the sorted list to find the location of the bin that was just added. Next, we would have to widen our search to adjacent indexes that contain the same batch set, doing expensive comparisons against the batch set key every time. Finally, we would reallocate the indirect draw parameters and update the stored pointers to the indirect draw parameters that the bins store. By contrast, it'd be dramatically simpler if we simply changed the way bins are stored to first map from batch set key (i.e. multidraw command) to the bins (i.e. meshes) within that batch set key, and then from each individual bin to the mesh instances. That way, the scenario above in which we add a new mesh will be simpler to handle. 
First, we will look up the batch set key corresponding to that mesh in the outer map to find an inner map corresponding to the single multidraw command that will draw that batch set. We will know how many meshes the multidraw command is going to draw by the size of that inner map. Then we simply need to reallocate the indirect draw parameters and update the pointers to those parameters within the bins as necessary. There will be no need to do any binary search or expensive batch set key comparison: only a single hash lookup and an iteration over the inner map to update the pointers. This patch implements the above technique. Because we don't have retained bins yet, this PR provides no performance benefits. However, it opens the door to maximally efficient updates when only a small number of meshes change from frame to frame. The main churn that this patch causes is that the *batch set key* (which uniquely specifies a multidraw command) and *bin key* (which uniquely specifies a mesh *within* that multidraw command) are now separate, instead of the batch set key being embedded *within* the bin key. In order to isolate potential regressions, I think that at least #16890, #16836, and #16825 should land before this PR does. ## Migration Guide * The *batch set key* is now separate from the *bin key* in `BinnedPhaseItem`. The batch set key is used to collect multidrawable meshes together. If you aren't using the multidraw feature, you can safely set the batch set key to `()`. |
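The two-level layout described above can be sketched with nested hash maps. This is only an illustration under stated assumptions, not the actual Bevy types: `TwoLevelBins`, the `Entity` alias, and `add` are hypothetical names, and the real render phase stores more per bin than a list of entities.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical stand-in for an entity ID (not Bevy's `Entity`).
type Entity = u32;

/// Outer map: batch set key (one multidraw command) -> inner map.
/// Inner map: bin key (one mesh within that command) -> mesh instances.
struct TwoLevelBins<S, B> {
    batch_sets: HashMap<S, HashMap<B, Vec<Entity>>>,
}

impl<S: Eq + Hash, B: Eq + Hash> TwoLevelBins<S, B> {
    fn new() -> Self {
        Self { batch_sets: HashMap::new() }
    }

    /// Adds a mesh instance and returns how many bins (meshes) the
    /// affected batch set now contains. Only one outer hash lookup is
    /// needed to find every bin that shares the batch set key; no
    /// sorting or binary search is involved.
    fn add(&mut self, batch_set_key: S, bin_key: B, entity: Entity) -> usize {
        let bins = self.batch_sets.entry(batch_set_key).or_default();
        bins.entry(bin_key).or_default().push(entity);
        bins.len()
    }
}
```

The size of the inner map is the number of meshes the multidraw command would draw, which is what the message above relies on when reallocating indirect draw parameters.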

||

|

|

a371ee3019

|

Remove tracing re-export from bevy_utils (#17161)

# Objective - Contributes to #11478 ## Solution - Made `bevy_utils::tracing` `doc(hidden)` - Re-exported `tracing` from `bevy_log` for end-users - Added `tracing` directly to crates that need it. ## Testing - CI --- ## Migration Guide If you were importing `tracing` via `bevy::utils::tracing`, use `bevy::log::tracing` instead. Note that many items within `tracing` are also re-exported directly from `bevy::log`, so you may only need `bevy::log` for the most common items (e.g., `warn!`, `trace!`, etc.). This also applies to the `log_once!` family of macros. ## Notes - While this doesn't reduce the line count in `bevy_utils`, it further decouples the internal crates from `bevy_utils`, making its eventual removal more feasible. - I have just imported `tracing` as we do for all dependencies. However, a workspace dependency may be more appropriate for version management. |

||

|

|

b78efd339d

|

Simplify sort/max_by calls (#17048)

# Objective Some sort calls and `Ord` impls are unnecessarily complex. ## Solution Rewrite the "match on cmp, if equal do another cmp" pattern as either a comparison on tuples or `Ordering::then_with`, depending on whether the compare keys need construction. `sort_by` -> `sort_by_key` when symmetrical. Do the same for `min_by`/`max_by`. Note that `total_cmp` can only work with `sort_by`, and not on tuples. When sorting collected query results that contain `Entity`/`MainEntity`/`RenderEntity` in their `QueryData`, with that `Entity` in the sort key: stable -> unstable sort (all queried entities are unique). If key construction is not simple, switch to `sort_by_cached_key` when possible. Sorts that are only performed to discover the maximal element are replaced by `max_by_key`. Dedicated comparison functions and structs are removed where simple. Derive `PartialOrd`/`Ord` when useful. Fix misc. closure style inconsistencies. ## Testing - Existing tests. |
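To make the rewrites above concrete, here is a small standard-library-only sketch. The `Item` struct and the function names are hypothetical and do not come from the Bevy code base; they only illustrate the "match on cmp" pattern, its `then_with` replacement, a tuple sort key with an unstable sort, and `max_by_key`.

```rust
use std::cmp::Ordering;

/// Hypothetical item; `id` is assumed unique, like an `Entity`.
struct Item {
    layer: u32,
    depth: f32,
    id: u32,
}

/// Before: "match on cmp, if equal do another cmp".
fn cmp_verbose(a: &Item, b: &Item) -> Ordering {
    match a.layer.cmp(&b.layer) {
        Ordering::Equal => a.depth.total_cmp(&b.depth),
        other => other,
    }
}

/// After: the same ordering via `Ordering::then_with`.
/// `f32` is not `Ord`, so `total_cmp` needs `sort_by` rather than a tuple key.
fn cmp_chained(a: &Item, b: &Item) -> Ordering {
    a.layer.cmp(&b.layer).then_with(|| a.depth.total_cmp(&b.depth))
}

/// Tuple sort key. Because `id` is unique, no two keys compare equal,
/// so an unstable sort gives the same result as a stable one.
fn sort_by_layer_then_id(items: &mut [Item]) {
    items.sort_unstable_by_key(|item| (item.layer, item.id));
}

/// Finding the maximal element without sorting the whole slice first.
fn top_layer_id(items: &[Item]) -> Option<u32> {
    items
        .iter()
        .max_by_key(|item| (item.layer, item.id))
        .map(|item| item.id)
}
```

Passing `cmp_chained` to `sort_by` orders by `layer` first and breaks ties by `depth`, exactly as `cmp_verbose` does.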

||

|

|

8ac90ac542

|

make EntityHashMap and EntityHashSet proper types (#16912)

# Objective `EntityHashMap` and `EntityHashSet` iterators do not implement `EntitySetIterator`. ## Solution Make them newtypes instead of aliases. The methods that create the iterators can then produce their own newtypes that carry the `Hasher` generic and implement `EntitySetIterator`. Functionality remains the same otherwise. There are some other small benefits, e.g. the removal of `with_hasher` associated functions, and the ability to implement more traits ourselves. `MainEntityHashMap` and `MainEntityHashSet` are currently left as the previous type aliases, because supporting general `TrustedEntityBorrow` hashing is more complex. However, it can also be done. ## Testing Pre-existing `EntityHashMap` tests. ## Migration Guide Users of `with_hasher` and `with_capacity_and_hasher` on `EntityHashMap`/`Set` must now use `new` and `with_capacity` respectively. If the non-newtyped versions are required, they can be obtained via `Deref`, `DerefMut` or `into_inner` calls. |
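The newtype-over-alias pattern described above can be sketched as follows. This is a minimal illustration, assuming a plain `u32` stand-in for `Entity` and the default standard-library hasher; `EntityMap` is a hypothetical name, and the real `EntityHashMap` uses its own hasher and implements more traits.

```rust
use std::collections::HashMap;
use std::ops::{Deref, DerefMut};

/// Hypothetical stand-in for an entity ID (not Bevy's `Entity`).
type Entity = u32;

/// A newtype rather than a type alias. The hasher is fixed by the
/// wrapper, so there is no `with_hasher` constructor to expose.
struct EntityMap<V>(HashMap<Entity, V>);

impl<V> EntityMap<V> {
    fn new() -> Self {
        Self(HashMap::new())
    }

    fn with_capacity(capacity: usize) -> Self {
        Self(HashMap::with_capacity(capacity))
    }

    /// Returns the wrapped, non-newtyped map.
    fn into_inner(self) -> HashMap<Entity, V> {
        self.0
    }
}

/// `Deref`/`DerefMut` keep the usual `HashMap` methods available.
impl<V> Deref for EntityMap<V> {
    type Target = HashMap<Entity, V>;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

impl<V> DerefMut for EntityMap<V> {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}
```

Because the wrapper is a distinct type, it can hand out its own iterator newtypes and implement extra traits on them, which a plain alias cannot do.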

||

|

|

bf3692a011

|

Introduce support for mixed lighting by allowing lights to opt out of contributing diffuse light to lightmapped objects. (#16761)

This PR adds support for *mixed lighting* to Bevy, whereby some parts of the scene are lightmapped, while others take part in real-time lighting. (Here *real-time lighting* means lighting at runtime via the PBR shader, as opposed to precomputed light using lightmaps.) It does so by adding a new field, `affects_lightmapped_meshes`, to `IrradianceVolume` and `AmbientLight`, and a corresponding field, `affects_lightmapped_mesh_diffuse`, to `DirectionalLight`, `PointLight`, `SpotLight`, and `EnvironmentMapLight`. By default, this value is set to true; when set to false, the light contributes nothing to the diffuse irradiance component of meshes with lightmaps. Note that specular light is unaffected. This is because the correct way to bake specular lighting is *directional lightmaps*, which we have no support for yet. There are two general ways I expect this field to be used: 1. When diffuse indirect light is baked into lightmaps, irradiance volumes and reflection probes shouldn't contribute any diffuse light to the static geometry that has a lightmap. That's because the baking tool should have already accounted for it, and in a higher-quality fashion, as lightmaps typically offer a higher effective texture resolution than the light probe does. 2. When direct diffuse light is baked into a lightmap, punctual lights shouldn't contribute any diffuse light to static geometry with a lightmap, to avoid double-counting. It may seem odd to bake *direct* light into a lightmap, as opposed to indirect light. But there is a use case: in a scene with many lights, avoiding light leaks requires shadow mapping, which quickly becomes prohibitive when many lights are involved. Baking lightmaps allows light leaks to be eliminated on static geometry. A new example, `mixed_lighting`, has been added. It demonstrates a sofa (model from the [glTF Sample Assets]) that has been lightmapped offline using [Bakery]. It has four modes: 1.
In *baked* mode, all objects are locked in place, and all the diffuse direct and indirect light has been calculated ahead of time. Note that the bottom of the sphere has a red tint from the sofa, illustrating that the baking tool captured indirect light for it. 2. In *mixed direct* mode, lightmaps capturing diffuse direct and indirect light have been pre-calculated for the static objects, but the dynamic sphere has real-time lighting. Note that, because the diffuse lighting has been entirely pre-calculated for the scenery, the dynamic sphere casts no shadow. In a real app, you would typically use real-time lighting for the most important light so that dynamic objects can shadow the scenery and relegate baked lighting to the less important lights for which shadows aren't as important. Also note that there is no red tint on the sphere, because there is no global illumination applied to it. In an actual game, you could fix this problem by supplementing the lightmapped objects with an irradiance volume. 3. In *mixed indirect* mode, all direct light is calculated in real-time, and the static objects have pre-calculated indirect lighting. This corresponds to the mode that most applications are expected to use. Because direct light on the scenery is computed dynamically, shadows are fully supported. As in mixed direct mode, there is no global illumination on the sphere; in a real application, irradiance volumes could be used to supplement the lightmaps. 4. In *real-time* mode, no lightmaps are used at all, and all punctual lights are rendered in real-time. No global illumination exists. In the example, you can click around to move the sphere, unless you're in baked mode, in which case the sphere must be locked in place to be lit correctly. 
## Showcase [Screenshots of baked mode, mixed direct mode, mixed indirect mode (default), and real-time mode.] ## Migration guide * The `AmbientLight` resource and the `IrradianceVolume` component now have `affects_lightmapped_meshes` fields. If you don't need to use that field (for example, if you aren't using lightmaps), you can safely set the field to true. * `DirectionalLight`, `PointLight`, `SpotLight`, and `EnvironmentMapLight` now have `affects_lightmapped_mesh_diffuse` fields. If you don't need to use that field (for example, if you aren't using lightmaps), you can safely set the field to true. [glTF Sample Assets]: https://github.com/KhronosGroup/glTF-Sample-Assets/tree/main [Bakery]: https://geom.io/bakery/wiki/index.php?title=Bakery_-_GPU_Lightmapper |

||

|

|

00722b8d0f

|

Make indirect drawing opt-out instead of opt-in, enabling multidraw by default. (#16757)

This patch replaces the undocumented `NoGpuCulling` component with a new component, `NoIndirectDrawing`, effectively turning indirect drawing on by default. Indirect mode is needed for the recently-landed multidraw feature (#16427). Since multidraw is such a win for performance, when that feature is supported the small performance tax that indirect mode incurs is virtually always worth paying. To ensure that custom drawing code such as that in the `custom_shader_instancing` example continues to function, this commit additionally makes GPU culling take the `NoFrustumCulling` component into account. This PR is an alternative to #16670 that doesn't break the `custom_shader_instancing` example. **PR #16755 should land first in order to avoid breaking deferred rendering, as multidraw currently breaks it**. ## Migration Guide * Indirect drawing (GPU culling) is now enabled by default, so the `GpuCulling` component is no longer available. To disable indirect mode, which may be useful with custom render nodes, add the new `NoIndirectDrawing` component to your camera. |

||

|

|

7236070573

|

Use multidraw for shadows when GPU culling is in use. (#16692)

This patch makes shadows use multidraw when the camera they'll be drawn to has the `GpuCulling` component. This results in a significant reduction in drawcalls; Bistro Exterior drops to 3 drawcalls for each shadow cascade. Note that PR #16670 will remove the `GpuCulling` component, making shadows automatically use multidraw. Beware of that when testing this patch; before #16670 lands, you'll need to manually add `GpuCulling` to your camera in order to see any performance benefits. |

||

|

|

a81c8f9744

|

Don't unconditionally create temporary render entities for visible objects. (#16723)

PR #15756 made us create temporary render entities for all visible objects, even if they had no render world counterpart. This regressed our `many_cubes` time from about 3.59 ms/frame to 4.66 ms/frame. This commit changes that behavior to use `Entity::PLACEHOLDER` instead of creating a temporary render entity. This improves our `many_cubes` time from 5.66 ms/frame to 3.96 ms/frame, a 43% speedup. I tested 3D, 2D gizmos, and UI and they seem to work. [Graph: `many_cubes` frame time (lower is better). PR #15756 is the one in October.] |

||

|

|

61b98ec80f

|

Rename trigger.entity() to trigger.target() (#16716)