There's still a race resulting in blank materials whenever a material of
type A is added on the same frame that a material of type B is removed.
PR #18734 improved the situation, but ultimately didn't fix the race
because of two issues:

1. The `late_sweep_material_instances` system was never scheduled. This
   PR fixes the problem by scheduling that system.

2. `early_sweep_material_instances` needs to be called after *every*
   material type has been extracted, not just after the material of *that*
   type has been extracted. The `chain()` added during the review process
   in PR #18734 broke this logic. This PR reverts that and fixes the
   ordering by introducing a new `SystemSet` that contains all material
   extraction systems.
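To illustrate why a shared set matters, here is a minimal std-only model. It is not Bevy's scheduler: `Phase`, `System`, and `run_order` are hypothetical names. Each stand-in system is tagged with a phase, and sorting by phase guarantees that every extraction system runs before any early-sweep system, whatever order they were registered in.

```rust
/// Hypothetical phases standing in for system sets. The derived `Ord`
/// follows declaration order: Extract < EarlySweep < LateSweep.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
enum Phase {
    Extract,
    EarlySweep,
    LateSweep,
}

/// A named stand-in for a system, tagged with the phase (set) it belongs to.
struct System {
    name: &'static str,
    phase: Phase,
}

/// Returns system names in execution order. `sort_by_key` is stable, so
/// systems within one phase keep their registration order.
fn run_order(mut systems: Vec<System>) -> Vec<&'static str> {
    systems.sort_by_key(|s| s.phase);
    systems.into_iter().map(|s| s.name).collect()
}

fn main() {
    // Registered per material, interleaved the way a per-material chain would be.
    let systems = vec![
        System { name: "extract<A>", phase: Phase::Extract },
        System { name: "early_sweep<A>", phase: Phase::EarlySweep },
        System { name: "extract<B>", phase: Phase::Extract },
        System { name: "early_sweep<B>", phase: Phase::EarlySweep },
        System { name: "late_sweep", phase: Phase::LateSweep },
    ];
    // Every extract<..> now runs before any early_sweep<..>.
    println!("{:?}", run_order(systems));
}
```

Sorting on a phase key is only a stand-in for what set-level ordering constraints achieve in a real scheduler.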

I also took the opportunity to switch a manual reference to
`AssetId::<StandardMaterial>::invalid()` to the new
`DUMMY_MESH_MATERIAL` constant for clarity.

Because this is a bug that can affect any application that switches
material types in a single frame, I think this should be uplifted to
Bevy 0.16.

Fixes #18809
Fixes #18823

Meshes despawned in `Last` can still be in visible entities if they
were visible as of `PostUpdate`. Sanity-check that the mesh actually
exists before we specialize. We still want to unconditionally assume
that the entity is in `EntitySpecializationTicks`, as its absence from
that cache would likely suggest another bug.

# Objective

The goal of `bevy_platform_support` is to provide a set of
platform-agnostic APIs, alongside platform-specific functionality. This
is a high-traffic crate (providing things like `HashMap` and `Instant`).
Especially in light of https://github.com/bevyengine/bevy/discussions/18799,
it deserves a friendlier / shorter name.

Given that it hasn't had a full release yet, getting this change in
before Bevy 0.16 makes sense.

## Solution

- Rename `bevy_platform_support` to `bevy_platform`.

A clippy failure slipped into #18768, although I'm not sure why CI
didn't catch it.

```sh
> cargo clippy --version
clippy 0.1.85 (4eb161250e 2025-03-15)
> cargo run -p ci
...
error: empty line after doc comment
   --> crates\bevy_pbr\src\light\mod.rs:105:5
    |
105 | /     /// The width and height of each of the 6 faces of the cubemap.
106 | |
    | |_^
    |
    = help: for further information visit https://rust-lang.github.io/rust-clippy/master/index.html#empty_line_after_doc_comments
    = note: `-D clippy::empty-line-after-doc-comments` implied by `-D warnings`
    = help: to override `-D warnings` add `#[allow(clippy::empty_line_after_doc_comments)]`
    = help: if the empty line is unintentional remove it
help: if the documentation should include the empty line include it in the comment
    |
106 |     ///
    |
```

# Objective

- Improve the docs for `PointLightShadowMap` and
  `DirectionalLightShadowMap`

## Solution

- Add an example of how to use `PointLightShadowMap` and move the
  `DirectionalLightShadowMap` example from `DirectionalLight`.
- Match the `PointLight` and `DirectionalLight` docs about shadows.
- Describe what `size` means.

---------

Co-authored-by: Robert Swain <robert.swain@gmail.com>

# Objective

Fixes #16896
Fixes #17737

## Solution

Adds a new render phase, including all the new cold specialization
patterns, for wireframes. There's a *lot* of regrettable duplication
here between 3d/2d.

## Testing

All the examples.

## Migration Guide

- `WireframePlugin` must now be created with
  `WireframePlugin::default()`.

Currently, `RenderMaterialInstances` and `RenderMeshMaterialIds` are
very similar render-world resources: the former maps main world meshes
to typed material asset IDs, and the latter maps main world meshes to
untyped material asset IDs. This is needlessly complex and wasteful, so
this patch unifies the two in favor of a single untyped
`RenderMaterialInstances` resource.

This patch also fixes a subtle issue that could cause mesh materials to
be incorrect if a `MeshMaterial3d<A>` was removed and replaced with a
`MeshMaterial3d<B>` material in the same frame. The problematic pattern
looks like:

1. `extract_mesh_materials<B>` runs and, seeing the
   `Changed<MeshMaterial3d<B>>` condition, adds an entry mapping the mesh
   to the new material to the untyped `RenderMeshMaterialIds`.
2. `extract_mesh_materials<A>` runs and, seeing that the entity is
   present in `RemovedComponents<MeshMaterial3d<A>>`, removes the entry
   from `RenderMeshMaterialIds`.
3. The material slot is now empty, and the mesh will show up as whatever
   material happens to be in slot 0 in the material data slab.

This commit fixes the issue by splitting out `extract_mesh_materials`
into *three* phases: *extraction*, *early sweeping*, and *late
sweeping*, which run in that order:

1. The *extraction* system, which runs for each material, updates
   `RenderMaterialInstances` records whenever `MeshMaterial3d` components
   change, and updates a change tick so that the following system will
   know not to remove them.
2. The *early sweeping* system, which runs for each material, processes
   entities present in `RemovedComponents<MeshMaterial3d>` and removes
   each such entity's record from `RenderMaterialInstances` only if the
   extraction system didn't update it this frame. This system runs after
   *all* extraction systems have completed, fixing the race condition.
3. The *late sweeping* system, which runs only once regardless of the
   number of materials in the scene, processes entities present in
   `RemovedComponents<ViewVisibility>` and, as in the early sweeping
   phase, removes each such entity's record from `RenderMaterialInstances`
   only if the extraction system didn't update it this frame. At the end,
   the late sweeping system updates the change tick.
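The extraction and early-sweep phases can be modelled in a few lines of std-only Rust. This is a simplified sketch, assuming a plain frame counter as the change tick and a `HashMap` in place of `RenderMaterialInstances`; `extract`, `sweep`, and `MaterialInstance` are hypothetical names. It replays the A-to-B swap from the problematic pattern, with all extraction finishing before the sweep.

```rust
use std::collections::HashMap;

type Entity = u32;

/// Simplified stand-in for one material-instance record: an untyped
/// material id plus the tick at which extraction last wrote it.
#[derive(Debug, Clone, PartialEq)]
struct MaterialInstance {
    material: &'static str,
    last_change_tick: u64,
}

/// Extraction phase: record each entity's new material and stamp the tick.
fn extract(
    instances: &mut HashMap<Entity, MaterialInstance>,
    changed: &[(Entity, &'static str)],
    tick: u64,
) {
    for &(entity, material) in changed {
        instances.insert(entity, MaterialInstance { material, last_change_tick: tick });
    }
}

/// Sweep phase: drop records for removed components, unless extraction
/// already rewrote the record during this same tick.
fn sweep(instances: &mut HashMap<Entity, MaterialInstance>, removed: &[Entity], tick: u64) {
    for entity in removed {
        if instances.get(entity).is_some_and(|i| i.last_change_tick != tick) {
            instances.remove(entity);
        }
    }
}

fn main() {
    let mut instances = HashMap::new();
    // Frame 1: entities 1 and 2 both use material A.
    extract(&mut instances, &[(1, "A"), (2, "A")], 1);

    // Frame 2: entity 1 swaps A for B; entity 2 loses its material entirely.
    // All extraction runs first, then the sweep over the removed-A entities.
    extract(&mut instances, &[(1, "B")], 2);
    sweep(&mut instances, &[1, 2], 2);

    println!("{:?}", instances.get(&1)); // still present, now material B
    println!("{:?}", instances.get(&2)); // None: swept
}
```

The tick comparison is what lets the sweep tell "removed and replaced this frame" apart from "removed outright".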

Because this pattern happens relatively frequently, I think this PR
should land for 0.16.

Due to the preprocessor usage in the shader, different combinations of
features could cause the fields of `StandardMaterialBindings` to shift
around. In certain cases, this could cause them to not line up with the
bindings specified in `StandardMaterial`. This resulted in #18104.

This commit fixes the issue by making `StandardMaterialBindings` have a
fixed size. On the CPU side, it uses the
`#[bindless(index_table(range(M..N)))]` feature I added to `AsBindGroup`
in #18025 to do so. Thus this patch has a dependency on #18025.
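A simplified std-only illustration of why a fixed-size layout helps, assuming four hypothetical texture slots. It does not use `AsBindGroup` or the `index_table` attribute. With packed indices, toggling one feature shifts every later slot; with a fixed table, indices never move.

```rust
/// Hypothetical optional texture slots; the real `StandardMaterialBindings`
/// layout is not reproduced here.
const SLOTS: [&str; 4] = ["base_color", "emissive", "specular", "clearcoat"];

/// Packed layout: only enabled slots get an index, so a slot's index
/// shifts whenever an earlier feature is toggled.
fn packed_index(enabled: &[bool; 4], name: &str) -> Option<usize> {
    SLOTS
        .iter()
        .zip(enabled)
        .filter(|&(_, &on)| on)
        .position(|(&slot, _)| slot == name)
}

/// Fixed-size layout: every slot always exists (a disabled one would be
/// bound to a placeholder), so each index is the same for every feature set.
fn fixed_index(name: &str) -> Option<usize> {
    SLOTS.iter().position(|&slot| slot == name)
}

fn main() {
    let without_specular = [true, true, false, true];
    let with_specular = [true, true, true, true];
    // Packed: "clearcoat" moves from index 2 to 3 when specular is enabled.
    println!(
        "{:?} vs {:?}",
        packed_index(&without_specular, "clearcoat"),
        packed_index(&with_specular, "clearcoat")
    );
    // Fixed: "clearcoat" is always at index 3.
    println!("{:?}", fixed_index("clearcoat"));
}
```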

Closes #18104.

---------

Co-authored-by: Robert Swain <robert.swain@gmail.com>

PR #17898 disabled bindless support for `ExtendedMaterial`. This commit
adds it back. It also adds a new example, `extended_material_bindless`,
showing how to use it.

# Objective

Add web support to the atmosphere by gating dual-source blending behind
a `cfg` attribute that checks the target platform.

The main objective of this PR is to ensure that users of Bevy's
atmosphere feature can also run it in a web-based context where WebGPU
support is enabled.

## Solution

- Use the `#[cfg(not(target_arch = "wasm32"))]` attribute to gate
  dual-source blending, as this is not (yet) supported in web browsers.
- Rename the function `sample_sun_illuminance` to `sample_sun_radiance`
  and move calls out of conditionals to ensure the shader compiles and
  runs in both native and web-based contexts.
- Move the multiplication by the transmittance out of the sun color
  calculation, because calling the `sample_sun_illuminance` function was
  causing issues on the web. Overall this results in cleaner, more
  readable code.
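A std-only sketch of the gating pattern, assuming a hypothetical `SkyBlend` enum. The real atmosphere code selects its blend state differently, and what it uses instead of dual-source blending on the web is not described here, so `Fallback` is only a placeholder. Exactly one `sky_blend` definition is compiled for any given target.

```rust
/// Hypothetical blend choice for the atmosphere pass (not Bevy's real type).
#[allow(dead_code)]
#[derive(Debug, PartialEq)]
enum SkyBlend {
    /// Dual-source blending, selected only for non-wasm32 targets.
    DualSource,
    /// Placeholder for wasm32, where browsers lack dual-source blending.
    Fallback,
}

/// Native builds keep dual-source blending.
#[cfg(not(target_arch = "wasm32"))]
fn sky_blend() -> SkyBlend {
    SkyBlend::DualSource
}

/// Web builds compile this version instead.
#[cfg(target_arch = "wasm32")]
fn sky_blend() -> SkyBlend {
    SkyBlend::Fallback
}

fn main() {
    println!("atmosphere blend: {:?}", sky_blend());
}
```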

## Testing

- Tested by building a wasm target and loading it in a web page with the
  Vite dev server, using the `mate-h/bevy-webgpu` repo template.
- Tested the native build with `cargo run --example atmosphere` to
  ensure it still works with dual-source blending.

---

## Showcase

Screenshots show the atmosphere example running in two different
contexts:

<img width="1281" alt="atmosphere-web-showcase" src="https://github.com/user-attachments/assets/40b1ee91-89ae-41a6-8189-89630d1ca1a6" />

---------

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

## Objective

Fix #18714.

## Solution

Make sure `SkinUniforms::prev_buffer` is resized at the same time as
`current_buffer`.

There will be a one-frame visual glitch when the buffers are resized,
since `prev_buffer` is incorrectly initialised with the current joint
transforms.
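A simplified std-only model of the fix, assuming one `f32` per joint in place of a full joint matrix. `SkinBuffers`, `ensure_len`, and `begin_frame` are hypothetical names rather than the actual API of `SkinUniforms`. Both buffers grow in the same step, and seeding `prev` from `current` is what produces the one-frame glitch mentioned above.

```rust
/// Simplified double-buffered joint data: `current` for this frame,
/// `prev` for last frame (used for motion vectors).
#[derive(Default)]
struct SkinBuffers {
    current: Vec<f32>,
    prev: Vec<f32>,
}

impl SkinBuffers {
    /// Grows `current` and, in the same step, `prev`, so both buffers can
    /// always be indexed with the same joint offsets.
    fn ensure_len(&mut self, len: usize) {
        if self.current.len() < len {
            self.current.resize(len, 0.0);
            // Seeding `prev` from `current` keeps the lengths equal, at the
            // cost of zero motion for one frame after a resize.
            self.prev = self.current.clone();
        }
    }

    /// Start of a new frame: the old current transforms become the previous ones.
    fn begin_frame(&mut self) {
        self.prev.clone_from(&self.current);
    }
}

fn main() {
    let mut skins = SkinBuffers::default();
    skins.ensure_len(2);
    skins.current.copy_from_slice(&[1.0, 2.0]);
    skins.begin_frame();
    // A new skinned mesh appears, so the buffers must grow.
    skins.ensure_len(4);
    println!("prev = {:?}, current = {:?}", skins.prev, skins.current);
}
```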

Note that #18074 includes the same fix. I'm assuming this smaller PR
will land first.

## Testing

See repro instructions in #18714. Tested on `animated_mesh`,
`many_foxes`, `custom_skinned_mesh`, Win10/Nvidia with Vulkan,
WebGL/Chrome, WebGPU/Chrome.

## Objective

Fix motion blur not working on skinned meshes.

## Solution

`set_mesh_motion_vector_flags` can set
`RenderMeshInstanceFlags::HAS_PREVIOUS_SKIN` after specialization has
already cached the material. This can lead to
`MeshPipelineKey::HAS_PREVIOUS_SKIN` never getting set, disabling motion
blur.

The fix is to make sure `set_mesh_motion_vector_flags` happens before
specialization.

Note that the bug is fixed in a different way by #18074, which includes
other fixes but is a much larger change.

## Testing

Open the `animated_mesh` example and add these components to the
`Camera3d` entity:

```rust
MotionBlur {
    shutter_angle: 5.0,
    samples: 2,
    #[cfg(all(feature = "webgl2", target_arch = "wasm32", not(feature = "webgpu")))]
    _webgl2_padding: Default::default(),
},
#[cfg(all(feature = "webgl2", target_arch = "wasm32", not(feature = "webgpu")))]
Msaa::Off,
```

Tested on `animated_mesh`, `many_foxes`, `custom_skinned_mesh`,
Win10/Nvidia with Vulkan, WebGL/Chrome, WebGPU/Chrome. Note that testing
`many_foxes` WebGL requires #18715.

# Objective

Make all feature-gated bindings consistent with each other.

## Solution

Make the bindings of fields gated by the `pbr_specular_textures` feature
consistent with the other gated bindings.

# Objective

- Cleanup

## Solution

- Remove the completely unused `weak_handle`
  (`MESH_PREPROCESS_TYPES_SHADER_HANDLE`). This value is not used
  directly, and is never populated.
- Delete duplicate loads of `BUILD_INDIRECT_PARAMS_SHADER_HANDLE`. We
  load it three times in a row, which looks to be a copy-paste error.

## Testing

- None.

# Objective

My ecosystem crate, bevy_mod_outline, currently uses `SetMeshBindGroup`
as part of its custom rendering pipeline. I would like to allow for the
possibility that, due to changes in 0.16, I need to customise the
behaviour of `SetMeshBindGroup` in order to make it work. However, not
all of the symbols needed to implement this render command are public
outside of Bevy.

## Solution

- Include `MorphIndices` in the re-export list. I feel this is morally
  equivalent to `SkinUniforms` already being exported.
- Change the `MorphIndex::index` field to be public. I feel this is
  morally equivalent to the `SkinByteOffset::byte_offset` field already
  being public.
- Change `RenderMeshInstances::mesh_asset_id()` to be public (although
  since all the fields of `RenderMeshInstances` are public, it's possible
  to work around this one by reimplementing it).

These changes exclude:

- Making any change to the `RenderLightmaps` type, as I don't need to
  bind the light-maps for my use case and I wanted to keep these changes
  minimal. It has a private field which would need to be public or have
  access methods.
- The changes already included in #18612.

## Testing

Confirmed that a copy of `SetMeshBindGroup` can be compiled outside of
Bevy with these changes, provided that the light-map code is removed.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

Fixes #17872

## Solution

This should have basically no impact on static scenes. We can optimize
more later if anything comes up. Needing to iterate the two-level bin is
a bit unfortunate, but shouldn't matter for apps that use a single
camera.

# Objective

Fixes #17986
Fixes #18608

## Solution

Guard against situations where an extracted mesh does not have an
associated material. The way the mesh code depends on the material API
(although decoupled) here is a bit unfortunate, and we might consider
ways in the future to support these material features without this
indirect dependency.

# Objective

Unlike those of their helper types, the import paths for
`unique_array::UniqueEntityArray`, `unique_slice::UniqueEntitySlice`,
`unique_vec::UniqueEntityVec`, `hash_set::EntityHashSet`,
`hash_map::EntityHashMap`, `index_set::EntityIndexSet`, and
`index_map::EntityIndexMap` are quite redundant.

When looking at the structure of `hashbrown`, we can also see that while
both `HashSet` and `HashMap` have their own modules, the main types
themselves are re-exported to the crate level.

## Solution

Re-export the types in their shared `entity` parent module, and simplify
the imports where they're used.
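A miniature of the re-export layout, using empty stand-in types rather than Bevy's real collections. The module and type names mirror the text; the bodies are hypothetical. The submodules stay public, so the old paths keep working alongside the shorter ones.

```rust
mod entity {
    pub mod hash_map {
        /// Empty stand-in for the real map type.
        #[derive(Debug, Default)]
        pub struct EntityHashMap;
    }

    pub mod hash_set {
        /// Empty stand-in for the real set type.
        #[derive(Debug, Default)]
        pub struct EntityHashSet;
    }

    // Re-export the main types at the shared parent, as `hashbrown` does
    // for `HashMap` and `HashSet`.
    pub use hash_map::EntityHashMap;
    pub use hash_set::EntityHashSet;
}

// Before: `use entity::hash_map::EntityHashMap;`
// After: the shorter path works too.
use entity::{EntityHashMap, EntityHashSet};

fn main() {
    let map: EntityHashMap = EntityHashMap::default();
    // The long path still names the same type.
    let same: entity::hash_map::EntityHashMap = map;
    let set = EntityHashSet::default();
    println!("{same:?} {set:?}");
}
```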

# Objective

As of Bevy 0.16-dev, the pre-existing public function
`bevy::pbr::setup_morph_and_skinning_defs()` is now passed a boolean
flag called `skins_use_uniform_buffers`. The value of this boolean is
computed by the function
`bevy_pbr::render::skin::skins_use_uniform_buffers()`, but it is not
exported publicly.

Found while porting
[bevy_mod_outline](https://github.com/komadori/bevy_mod_outline) to
0.16.

## Solution

Add `skin::skins_use_uniform_buffers` to the re-export list of
`bevy_pbr::render`.

## Testing

Confirmed test program can access public API.

# Objective

The `flags` are referenced later, outside of the `VERTEX_UVS`
`#ifdef`/`#endif` block. The current behavior causes the prepass shader
to fail to compile when UVs are not present in the mesh, such as when
using a `LineStrip` to render a grid.

Fixes #18600

## Solution

Move the definition of `flags` outside of the `#ifdef`/`#endif` block.

## Testing

Ran a modified `3d_example` that used a mesh and material with
`alpha_mode` blend, `LineStrip` topology, and no UVs.

# Objective

Requires are currently more verbose than they need to be. People would
like to define inline component values. Additionally, the current
`#[require(Foo(custom_constructor))]` and `#[require(Foo(|| Foo(10)))]`
syntax doesn't really make sense within the context of the Rust type
system. #18309 was an attempt to improve ergonomics for some cases, but
it came at the cost of even more weirdness / unintuitive behavior. Our
approach as a whole needs a rethink.

## Solution

Rework the `#[require()]` syntax to make more sense. This is a breaking
change, but I think it will make the system easier to learn, while also
improving ergonomics substantially:

```rust
#[derive(Component)]
#[require(
    A, // this will use A::default()
    B(1), // inline tuple-struct value
    C { value: 1 }, // inline named-struct value
    D::Variant, // inline enum variant
    E::SOME_CONST, // inline associated const
    F::new(1), // inline constructor
    G = returns_g(), // an expression that returns G
    H = SomethingElse::new(), // expression returns SomethingElse, where SomethingElse: Into<H>
)]
struct Foo;
```
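The `H = SomethingElse::new()` form relies on an ordinary `Into<H>` conversion. The std-only sketch below shows just that conversion, modelled as a closure so the expression runs only when called. It is not the `#[require]` macro's real expansion, and `H`, `SomethingElse`, and `required_h` are hypothetical.

```rust
/// Hypothetical required component.
#[derive(Debug, PartialEq)]
struct H(u32);

/// Hypothetical builder type that is not `H` itself but converts into it.
struct SomethingElse {
    value: u32,
}

impl SomethingElse {
    fn new() -> Self {
        SomethingElse { value: 7 }
    }
}

impl From<SomethingElse> for H {
    fn from(s: SomethingElse) -> Self {
        H(s.value)
    }
}

/// Accepts any expression (as a closure) whose result implements `Into<H>`.
/// A plain `H` also qualifies, via the reflexive `From<T> for T` impl.
fn required_h<T: Into<H>>(make: impl FnOnce() -> T) -> H {
    make().into()
}

fn main() {
    println!("{:?}", required_h(SomethingElse::new)); // converted via Into
    println!("{:?}", required_h(|| H(1))); // already an H
}
```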

## Migration Guide

Custom-constructor requires should use the new expression-style syntax:

```rust
// before
#[derive(Component)]
#[require(A(returns_a))]
struct Foo;

// after
#[derive(Component)]
#[require(A = returns_a())]
struct Foo;
```

Inline-closure-constructor requires should use the inline value syntax
where possible:

```rust
// before
#[derive(Component)]
#[require(A(|| A(10)))]
struct Foo;

// after
#[derive(Component)]
#[require(A(10))]
struct Foo;
```

In cases where that is not possible, use the expression-style syntax:

```rust
// before
#[derive(Component)]
#[require(A(|| A(10)))]
struct Foo;

// after
#[derive(Component)]
#[require(A = A(10))]
struct Foo;
```

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>
Co-authored-by: François Mockers <mockersf@gmail.com>

# Objective

- The prepass pipeline has a generic bound on the specialize function,
  but 95% of it doesn't need it.

## Solution

- Move most of the fields to an internal struct and use a separate
  specialize function for those fields.

## Testing

- Ran the `3d_scene` example and it worked as before.

---

## Migration Guide

If you were using a field of the `PrepassPipeline`, most of them have
now been moved to `PrepassPipeline::internal`.

## Notes

Here's the `cargo bloat` size comparison (using the tool from
https://github.com/bevyengine/bevy/discussions/14864):

```
before:
(
    "<bevy_pbr::prepass::PrepassPipeline<M> as bevy_render::render_resource::pipeline_specializer::SpecializedMeshPipeline>::specialize",
    25416,
    0.05582993,
),
after:
(
    "<bevy_pbr::prepass::PrepassPipeline<M> as bevy_render::render_resource::pipeline_specializer::SpecializedMeshPipeline>::specialize",
    2496,
    0.005490916,
),
(
    "bevy_pbr::prepass::PrepassPipelineInternal::specialize",
    11444,
    0.025175499,
),
```

The generic specialize function is now much smaller, so far less code
has to be monomorphized (compiled again) for every material.
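The split described above, a thin generic shim delegating to a non-generic internal function, is a standard way to reduce monomorphization bloat. Below is a std-only sketch under that assumption; `Pipeline`, `PipelineInternal`, `Key`, `Descriptor`, `Material`, and `EXAMPLE_FLAG` are hypothetical stand-ins, not Bevy's `PrepassPipeline` API.

```rust
use std::marker::PhantomData;

/// Hypothetical pipeline key; bit 0 toggles one optional shader def.
#[derive(Clone, Copy)]
struct Key(u32);

/// Hypothetical output of specialization.
#[derive(Debug, PartialEq)]
struct Descriptor {
    label: String,
    shader_defs: Vec<String>,
}

/// The only material-specific input this sketch needs.
trait Material {
    fn shader_path() -> &'static str;
}

/// Non-generic part: compiled once, however many materials exist.
struct PipelineInternal;

impl PipelineInternal {
    fn specialize(&self, key: Key, shader: &str) -> Descriptor {
        let mut shader_defs = Vec::new();
        if key.0 & 1 != 0 {
            shader_defs.push("EXAMPLE_FLAG".to_string());
        }
        Descriptor { label: format!("prepass:{shader}"), shader_defs }
    }
}

/// Generic wrapper: still monomorphized per material, but only a thin shim.
struct Pipeline<M: Material> {
    internal: PipelineInternal,
    _marker: PhantomData<M>,
}

impl<M: Material> Pipeline<M> {
    fn new() -> Self {
        Pipeline { internal: PipelineInternal, _marker: PhantomData }
    }

    fn specialize(&self, key: Key) -> Descriptor {
        // Gather the material-specific bits here, then delegate.
        self.internal.specialize(key, M::shader_path())
    }
}

/// A hypothetical material.
struct Standard;

impl Material for Standard {
    fn shader_path() -> &'static str {
        "standard.wgsl"
    }
}

fn main() {
    let pipeline = Pipeline::<Standard>::new();
    println!("{:?}", pipeline.specialize(Key(1)));
}
```

Only `Pipeline::<M>::specialize` is duplicated per material; the body that builds the descriptor exists once.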

# Objective

- Fixes https://github.com/bevyengine/bevy/issues/11682

## Solution

- https://github.com/bevyengine/bevy/pull/4086 introduced an
  optimization to not do redundant calculations, but did not take into
  account changes to the resource `global_lights`. I believe that my
  patch keeps the benefit of the optimization but adds the nuance
  required to fix said bug.
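The bug class here is a cache whose key omits one of its inputs. A hypothetical, std-only sketch (not Bevy's actual light code): `ViewState`, `GlobalLights`, and `Cache` are illustrative names, and the expensive work is reduced to a counter.

```rust
/// Hypothetical inputs to a per-view computation that is worth caching.
#[derive(Clone, Copy, PartialEq, Debug)]
struct ViewState {
    position: [i32; 3],
}

#[derive(Clone, Copy, PartialEq, Debug)]
struct GlobalLights {
    count: u32,
}

#[derive(Default)]
struct Cache {
    /// Inputs used by the last recomputation, if any.
    last: Option<(ViewState, GlobalLights)>,
    recomputations: u32,
}

impl Cache {
    /// Skips redundant work only when *both* inputs are unchanged. Comparing
    /// the view alone would reproduce the bug: a change to the lights with a
    /// static camera would never trigger a recomputation.
    fn update(&mut self, view: ViewState, lights: GlobalLights) {
        if self.last != Some((view, lights)) {
            self.recomputations += 1;
            self.last = Some((view, lights));
        }
    }
}

fn main() {
    let mut cache = Cache::default();
    let view = ViewState { position: [0, 0, 10] };
    cache.update(view, GlobalLights { count: 0 }); // first frame: compute
    cache.update(view, GlobalLights { count: 0 }); // nothing changed: skip
    cache.update(view, GlobalLights { count: 1 }); // lights changed: compute again
    println!("recomputations: {}", cache.recomputations);
}
```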

## Testing

The example originally given by
[@kirillsurkov](https://github.com/kirillsurkov) and then updated by me
to Bevy 0.15.3 here:
https://github.com/bevyengine/bevy/issues/11682#issuecomment-2746287416
will not have shadows without this patch:

```rust
use bevy::prelude::*;

#[derive(Resource)]
struct State {
    x: f32,
}

fn main() {
    App::new()
        .add_plugins(DefaultPlugins)
        .add_systems(Startup, setup)
        .add_systems(Update, update)
        .insert_resource(State { x: -40.0 })
        .run();
}

fn setup(
    mut commands: Commands,
    mut meshes: ResMut<Assets<Mesh>>,
    mut materials: ResMut<Assets<StandardMaterial>>,
) {
    commands.spawn((
        Mesh3d(meshes.add(Circle::new(4.0))),
        MeshMaterial3d(materials.add(Color::WHITE)),
    ));
    commands.spawn((
        Mesh3d(meshes.add(Cuboid::new(1.0, 1.0, 1.0))),
        MeshMaterial3d(materials.add(Color::linear_rgb(0.0, 1.0, 0.0))),
    ));
    commands.spawn((
        PointLight {
            shadows_enabled: true,
            ..default()
        },
        Transform::from_xyz(4.0, 8.0, 4.0),
    ));
    commands.spawn(Camera3d::default());
}

fn update(mut state: ResMut<State>, mut camera: Query<&mut Transform, With<Camera3d>>) {
    let mut camera = camera.single_mut().unwrap();
    let t = Vec3::new(state.x, 0.0, 10.0);
    camera.translation = t;
    camera.look_at(t - Vec3::Z, Vec3::Y);
    state.x = 0.0;
}
```

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>
Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

- `bevy_core_pipeline` is getting really big and it's a big bottleneck
  for compilation time. A lot of parts of it can be broken up.

## Solution

- Add a new `bevy_anti_aliasing` crate that contains all the
  anti-aliasing implementations.
- I didn't move any MSAA-related code to this new crate because that's a
  lot more invasive.

## Testing

- Tested the anti_aliasing example to make sure all methods still worked

---

## Showcase

Comparing compile timings before and after this change,
`bevy_core_pipeline` now takes 1s less to compile, and
`bevy_anti_aliasing` now compiles in parallel with `bevy_pbr`.

## Migration Guide

When using anti-aliasing features, you now need to import them from
`bevy::anti_aliasing` instead of `bevy::core_pipeline`.

# Objective

- Fixes https://github.com/bevyengine/bevy/issues/18332

## Solution

- Move `specialize_shadows` to `ManageViews` so that it can run after
  `prepare_lights`, ensuring that shadow views exist for specialization.
- Unfortunately this means that `specialize_shadows` is no longer in
  `PrepareMeshes` like the rest of the specialization systems.

## Testing

- Ran the `anti_aliasing` example, switched between the different AA
  options, and observed no glitches.

# Objective

For materials that aren't being used, or that a visible entity doesn't
have an instance of, we were constantly and unnecessarily checking
whether they needed specialization, answering yes (because the material
had never been specialized for that entity), and then failing to look up
the material instance.

## Solution

If an entity doesn't have an instance of the material, it can't possibly
need specialization, so exit early before spending time doing the check.
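A std-only sketch of the early exit, assuming plain `HashMap`s for the material instances and for per-entity specialization ticks. `needs_specialization` and its parameters are hypothetical, not Bevy's specialization system.

```rust
use std::collections::HashMap;

type Entity = u32;

/// Picks the visible entities that need (re)specializing for one material.
/// `instances` maps entities to the material they use; `last_specialized`
/// holds the tick at which each entity was last specialized (hypothetical
/// bookkeeping standing in for Bevy's change ticks).
fn needs_specialization(
    visible: &[Entity],
    instances: &HashMap<Entity, &str>,
    last_specialized: &HashMap<Entity, u64>,
    material_changed_at: u64,
) -> Vec<Entity> {
    let mut out = Vec::new();
    for &entity in visible {
        // Early exit: an entity with no instance of this material can't
        // need specialization for it, so skip the tick check entirely.
        if !instances.contains_key(&entity) {
            continue;
        }
        let stale = match last_specialized.get(&entity) {
            Some(&tick) => tick < material_changed_at,
            None => true, // never specialized yet
        };
        if stale {
            out.push(entity);
        }
    }
    out
}

fn main() {
    let visible: [Entity; 3] = [1, 2, 3];
    // Only entity 1 actually uses this material.
    let instances = HashMap::from([(1, "my_material")]);
    let last_specialized = HashMap::new();
    // Without the early exit, entities 2 and 3 would also be reported
    // (never specialized), even though they have no instance to look up.
    println!("{:?}", needs_specialization(&visible, &instances, &last_specialized, 5));
}
```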

Fixes #18388.

# Objective

Now that #13432 has been merged, it's important we update our reflected
types to properly opt into this feature. If we do not, this could cause
issues for downstream users who want to make use of reflection-based
cloning.

## Solution

This PR is broken into 4 commits:

1. Add `#[reflect(Clone)]` on all types marked `#[reflect(opaque)]` that
   are also `Clone`. This is mandatory, as these types would otherwise
   cause the cloning operation to fail for any type that contains them
   at any depth.

2. Update the reflection example to suggest adding `#[reflect(Clone)]`
   on opaque types.

3. Add `#[reflect(clone)]` attributes on all fields marked
   `#[reflect(ignore)]` that are also `Clone`. This prevents the ignored
   field from causing the cloning operation to fail.

   Note that some of the types that contain these fields are also
   `Clone`, and thus can be marked `#[reflect(Clone)]`. This makes the
   `#[reflect(clone)]` attribute redundant. However, I think it's safer
   to keep it in case the `Clone` impl/derive is ever removed. I'm open
   to removing them, though, if people disagree.

4. Finally, I added `#[reflect(Clone)]` on all types that are also
   `Clone`. While not strictly necessary, it enables us to reduce the
   generated output, since we can just call `Clone::clone` directly
   instead of calling `PartialReflect::reflect_clone` on each
   variant/field. It also means we benefit from any optimizations or
   customizations made in the `Clone` impl, including directly
   dereferencing `Copy` values and increasing reference counters.

   Along with that change, I also took the liberty of adding any missing
   registrations that I saw could be applied to the type as well, such as
   `Default`, `PartialEq`, and `Hash`. There were hundreds of these to
   edit, though, so it's possible I missed quite a few.

That last commit is **_massive_**. There were nearly 700 types to
update, so it's recommended to review the first three before moving on
to that last one.

Additionally, I can break the last commit off into its own PR or into
smaller PRs, but I figured this would be the easiest way of doing it
(and in a timely manner, since I unfortunately don't have as much time
as I used to for code contributions).

## Testing

You can test locally with a `cargo check`:

```sh
cargo check --workspace --all-features
```

I mistakenly thought that with the wgpu upgrade we'd have
`PARTIALLY_BOUND_BINDING_ARRAY` everywhere. Unfortunately this is not
the case. This PR adds a workaround back in.

Closes #18098.

Less data accessed and compared gives better batching performance.

# Objective

- Use a smaller id to represent the lightmap in batch data to enable a
  faster implementation of draw streams.
- Improve batching performance for 3D sorted render phases.

## Solution

- 3D batching can use `LightmapSlabIndex` (a `NonMaxU32`, which is 4
  bytes) instead of the lightmap `AssetId<Image>` (an enum whose largest
  variant is a 16-byte UUID) to discern the ability to batch.
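To put the size difference in concrete terms, here is a std-only comparison using stand-in types. `AssetIdLike` only approximates the shape of an asset id (a generational index or a 16-byte UUID) and is not Bevy's exact `AssetId<Image>` layout. `SlabIndex` wraps `NonZeroU32` because `NonMaxU32` comes from the external `nonmax` crate; both keep `Option<_>` at 4 bytes through a niche (zero for `NonZeroU32`, `u32::MAX` for `NonMaxU32`).

```rust
use std::mem::size_of;
use std::num::NonZeroU32;

/// Rough stand-in for an asset id (hypothetical layout, not Bevy's exact type).
#[allow(dead_code)]
#[derive(Clone, Copy, PartialEq, Eq)]
enum AssetIdLike {
    Index { index: u32, generation: u32 },
    Uuid([u8; 16]),
}

/// 4-byte slab index used as the batching key in this sketch.
#[allow(dead_code)]
#[derive(Clone, Copy, PartialEq, Eq)]
struct SlabIndex(NonZeroU32);

fn main() {
    // Smaller keys mean less data to load and compare per batching decision.
    println!("AssetIdLike: {} bytes", size_of::<AssetIdLike>());
    println!("SlabIndex: {} bytes", size_of::<SlabIndex>());
    println!("Option<SlabIndex>: {} bytes", size_of::<Option<SlabIndex>>());
}
```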

## Testing

Tested main (yellow) vs this PR (red) on an M4 Max using the
`many_cubes` example with `WGPU_SETTINGS_PRIO=webgl2` to avoid
GPU preprocessing, and modifying the materials in `many_cubes` to use
`AlphaMode::Blend` so that they would rely on the less efficient sorted
render phase batching.

<img width="1500" alt="Screenshot_2025-03-15_at_12 17 21" src="https://github.com/user-attachments/assets/14709bd3-6d06-40fb-aa51-e1d2d606ebe3" />

A 44.75 µs (7.5%) reduction in the median execution time of the batch
and prepare sorted render phase system for the `Transparent3d` phase
(handling 160k cubes).

---

## Migration Guide

- Changed: `RenderLightmap::new()` no longer takes an `AssetId<Image>`
  argument for the asset id of the lightmap image.

# Objective

Prevent duplicate implementations between `IntoSystemConfigs` and
`IntoSystemSetConfigs` by using a generic, and add a `NodeType` trait for
more config flexibility (possibly opening the door to implementing
https://github.com/bevyengine/bevy/issues/14195).

## Solution

Followed the writeup by @ItsDoot:
https://hackmd.io/@doot/rJeefFHc1x

Removes `IntoSystemConfigs` and `IntoSystemSetConfigs`, instead using
`IntoNodeConfigs` with generics.

## Testing

Pending

---

## Showcase

N/A

## Migration Guide

- `SystemSetConfigs` -> `NodeConfigs<InternedSystemSet>`
- `SystemConfigs` -> `NodeConfigs<ScheduleSystem>`
- `IntoSystemSetConfigs` -> `IntoNodeConfigs<InternedSystemSet, M>`
- `IntoSystemConfigs` -> `IntoNodeConfigs<ScheduleSystem, M>`

---------

Co-authored-by: Christian Hughes <9044780+ItsDoot@users.noreply.github.com>
Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

Because `prepare_assets::<T>` had a mutable reference to the
`RenderAssetBytesPerFrame` resource, no render asset preparation could
happen in parallel. This PR fixes this by using an `AtomicUsize` to
count bytes written (if there's a limit in place), so that the system
doesn't need mutable access.
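A std-only sketch of the idea, assuming a byte budget that many tasks share through `&self`. `BytesPerFrame`, `try_reserve`, and `upload_in_parallel` are hypothetical names. This version uses `fetch_update` (a compare-and-swap loop) so the running total never exceeds the limit, which may differ from how `RenderAssetBytesPerFrame` actually counts.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// Simplified per-frame upload budget. `try_reserve` takes `&self`, so many
/// preparation tasks can share one budget without exclusive (`&mut`) access.
struct BytesPerFrame {
    limit: Option<usize>,
    written: AtomicUsize,
}

impl BytesPerFrame {
    fn new(limit: Option<usize>) -> Self {
        BytesPerFrame { limit, written: AtomicUsize::new(0) }
    }

    /// Tries to reserve `bytes` of this frame's budget. With no limit there
    /// is nothing to count. With a limit, `fetch_update` retries until it
    /// either commits the new total or sees that it would exceed the limit.
    fn try_reserve(&self, bytes: usize) -> bool {
        let Some(limit) = self.limit else {
            return true;
        };
        self.written
            .fetch_update(Ordering::Relaxed, Ordering::Relaxed, |written| {
                let next = written.checked_add(bytes)?;
                (next <= limit).then_some(next)
            })
            .is_ok()
    }
}

/// Runs `tasks` uploads of `bytes` each on scoped threads; returns how many fit.
fn upload_in_parallel(budget: &BytesPerFrame, tasks: usize, bytes: usize) -> usize {
    thread::scope(|s| {
        let handles: Vec<_> = (0..tasks)
            .map(|_| s.spawn(move || budget.try_reserve(bytes)))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .filter(|&accepted| accepted)
            .count()
    })
}

fn main() {
    let budget = BytesPerFrame::new(Some(1000));
    // Eight 200-byte uploads race for a 1000-byte budget; five fit.
    println!("accepted: {}", upload_in_parallel(&budget, 8, 200));
}
```

A plain `fetch_add` would be simpler but could let concurrent writers overshoot the limit slightly; whether that matters depends on how strict the budget needs to be.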

- Related: https://github.com/bevyengine/bevy/pull/12622

**Before**

<img width="1049" alt="Screenshot 2025-02-17 at 11 40 53 AM" src="https://github.com/user-attachments/assets/040e6184-1192-4368-9597-5ceda4b8251b" />

**After**

<img width="836" alt="Screenshot 2025-02-17 at 1 38 37 PM" src="https://github.com/user-attachments/assets/95488796-3323-425c-b0a6-4cf17753512e" />

## Testing

- Tested on a local project (with and without limiting enabled).
- Someone with more knowledge of wgpu/underlying driver guts should
  confirm that this doesn't actually bite us by introducing contention
  (i.e. if buffer writing really *should be* serial).

# Overview

Fixes https://github.com/bevyengine/bevy/issues/17869.

# Summary

`WGPU_SETTINGS_PRIO` changes various limits on `RenderDevice`. This is
useful to simulate platforms with lower limits.

However, some plugins only check the limits on `RenderAdapter` (the
actual GPU), and these limits are not affected by `WGPU_SETTINGS_PRIO`.
So the plugins try to use features that are unavailable and wgpu says
"oh no". See https://github.com/bevyengine/bevy/issues/17869 for details.

The PR adds various checks on `RenderDevice` limits. This is enough to
get most examples working, but some are not fixed (see below).
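A std-only sketch of the principle, assuming a standalone `Limits` struct whose field name mirrors wgpu's `max_storage_buffers_per_shader_stage` (it is not the wgpu type). `storage_heavy_pass_supported` is a hypothetical check: the decision must use the limits the device was created with, not the adapter's raw capabilities. The numbers echo the compatibility log in this PR (a limit of 8, a count of 9).

```rust
/// Standalone stand-in for a subset of GPU limits (not the wgpu type).
#[derive(Clone, Copy, Debug)]
struct Limits {
    max_storage_buffers_per_shader_stage: u32,
}

/// Hypothetical feature check for a compute pass that needs `required`
/// storage buffers in a single shader stage.
fn storage_heavy_pass_supported(device_limits: &Limits, required: u32) -> bool {
    device_limits.max_storage_buffers_per_shader_stage >= required
}

fn main() {
    // The physical GPU (adapter) could run the pass...
    let adapter = Limits { max_storage_buffers_per_shader_stage: 16 };
    // ...but the device was created with lowered limits, e.g. via
    // `WGPU_SETTINGS_PRIO`, and only the device's limits are enforced.
    let device = Limits { max_storage_buffers_per_shader_stage: 8 };
    let required = 9;
    println!(
        "adapter check: {}, device check: {}",
        storage_heavy_pass_supported(&adapter, required),
        storage_heavy_pass_supported(&device, required)
    );
}
```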

# Testing

- Tested native, with and without `WGPU_SETTINGS_PRIO=webgl2`.
  Win10/Vulkan/Nvidia.
- Also WebGL. Win10/Chrome/Nvidia.

```
$env:WGPU_SETTINGS_PRIO = "webgl2"
cargo run --example testbed_3d
cargo run --example testbed_2d
cargo run --example testbed_ui
cargo run --example deferred_rendering
cargo run --example many_lights
cargo run --example order_independent_transparency # Still broken, see below.
cargo run --example occlusion_culling # Still broken, see below.
```

# Not Fixed

While testing I found a few other cases of limits being broken.

"Compatibility" settings (WebGPU minimums) break native in various
examples.

```
$env:WGPU_SETTINGS_PRIO = "compatibility"
cargo run --example testbed_3d

In Device::create_bind_group_layout, label = 'build mesh uniforms GPU early occlusion culling bind group layout'
Too many bindings of type StorageBuffers in Stage ShaderStages(COMPUTE), limit is 8, count was 9. Check the limit `max_storage_buffers_per_shader_stage` passed to `Adapter::request_device`
```

`occlusion_culling` breaks fake WebGL.

```
$env:WGPU_SETTINGS_PRIO = "webgl2"
cargo run --example occlusion_culling

thread '<unnamed>' panicked at C:\Projects\bevy\crates\bevy_render\src\render_resource\pipeline_cache.rs:555:28:
index out of bounds: the len is 0 but the index is 2
Encountered a panic in system `bevy_render::renderer::render_system`!
```

`occlusion_culling` breaks real WebGL.

```
cargo run --example occlusion_culling --target wasm32-unknown-unknown

ERROR app: panicked at C:\Users\T\.cargo\registry\src\index.crates.io-1949cf8c6b5b557f\glow-0.16.0\src\web_sys.rs:4223:9:
Tex storage 2D multisample is not supported
```

OIT breaks fake WebGL.

```
$env:WGPU_SETTINGS_PRIO = "webgl2"
cargo run --example order_independent_transparency

In Device::create_bind_group, label = 'mesh_view_bind_group'
Number of bindings in bind group descriptor (28) does not match the number of bindings defined in the bind group layout (25)
```

OIT breaks real WebGL.

```
cargo run --example order_independent_transparency --target wasm32-unknown-unknown

In Device::create_render_pipeline, label = 'pbr_oit_mesh_pipeline'
Error matching ShaderStages(FRAGMENT) shader requirements against the pipeline
Shader global ResourceBinding { group: 0, binding: 34 } is not available in the pipeline layout
Binding is missing from the pipeline layout
```

# Objective

- Fixes #15460 (will open new issues for further `no_std` efforts)
- Supersedes #17715

## Solution

- Threaded in new features as required
- Made certain crates optional but default enabled
- Removed `compile-check-no-std` from the internal `ci` tool, since
  GitHub CI can now simply check `bevy` itself
- Added a CI task to check `bevy` on `thumbv6m-none-eabi` to ensure
  `portable-atomic` support is still valid [^1]

[^1]: This may be controversial, since it could be interpreted as
implying Bevy will maintain support for `thumbv6m-none-eabi` going
forward. In reality, just like `x86_64-unknown-none`, this is a
[canary](https://en.wiktionary.org/wiki/canary_in_a_coal_mine) target to
make it clear when `portable-atomic` no longer works as intended (fixing
atomic support on atomically challenged platforms). If a PR comes
through and makes supporting this class of platforms impossible, then
this CI task can be removed. However, I wager this won't be a problem.

## Testing

- CI

---

## Release Notes

Bevy now has support for `no_std` directly from the `bevy` crate.
Users can disable default features and enable a new `default_no_std`
feature instead, allowing `bevy` to be used in `no_std` applications and
libraries.

```toml
# Bevy for `no_std` platforms
bevy = { version = "0.16", default-features = false, features = ["default_no_std"] }
```

`default_no_std` enables certain required features, such as `libm` and
`critical-section`, and as many optional crates as possible (currently
just `bevy_state`). For atomically-challenged platforms such as the
Raspberry Pi Pico, `portable-atomic` will be used automatically.

For library authors, we recommend depending on `bevy` with
`default-features = false` to allow `std` and `no_std` users to both
depend on your crate. Here are some recommended features a library crate
may want to expose:

```toml
[features]
# Most users will be on a platform which has `std` and can use the more-powerful `async_executor`.
default = ["std", "async_executor"]

# Features for typical platforms.
std = ["bevy/std"]
async_executor = ["bevy/async_executor"]

# Features for `no_std` platforms.
libm = ["bevy/libm"]
critical-section = ["bevy/critical-section"]

[dependencies]
# We disable default features to ensure we don't accidentally enable `std` on `no_std` targets, for example.
bevy = { version = "0.16", default-features = false }
```

While this is verbose, it gives the maximum control to end-users to
decide how they wish to use Bevy on their platform.

We encourage library authors to experiment with `no_std` support. For
libraries relying exclusively on `bevy` and no other dependencies, it
may be as simple as adding `#![no_std]` to your `lib.rs` and exposing
features as above! Bevy can also provide many `std` types, such as
`HashMap`, `Mutex`, and `Instant`, on all platforms. See
`bevy::platform_support` for details on what's available out of the box!

## Migration Guide

- If you were previously relying on `bevy` with default features
  disabled, you may need to enable the `std` and `async_executor`
  features.
- `bevy_reflect` has had its `bevy` feature removed. If you were relying
  on this feature, simply enable `smallvec` and `smol_str` instead.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

In 0.11 you could easily access the inverse model matrix inside a WGSL shader with `transpose(mesh.inverse_transpose_model)`. This changed in 0.12, when `inverse_transpose_model` was removed, and it's now not as straightforward. I wrote a helper function for my own code and thought I'd submit a pull request in case it would be helpful to others.
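The helper itself isn't shown here. The following is a hypothetical illustration of the underlying math, written in Rust rather than WGSL, and it is not the author's helper. Inverting an affine model transform requires only two things: the inverse transpose of its 3x3 part, which is already kept around for normals, and the translation. The sketch assumes the shader can unpack that inverse transpose into a full 3x3 matrix.

```rust
/// Column-major 3x3 matrix: `m[c][r]` is row `r` of column `c`.
type Mat3 = [[f32; 3]; 3];

/// Swaps rows and columns.
fn transpose(m: Mat3) -> Mat3 {
    let mut t = [[0.0; 3]; 3];
    for c in 0..3 {
        for r in 0..3 {
            t[c][r] = m[r][c];
        }
    }
    t
}

/// Multiplies a column-major matrix by a column vector.
fn mul_vec(m: Mat3, v: [f32; 3]) -> [f32; 3] {
    let mut out = [0.0; 3];
    for c in 0..3 {
        for r in 0..3 {
            out[r] += m[c][r] * v[c];
        }
    }
    out
}

/// Inverts the affine model transform `p -> M p + t`, given the inverse
/// transpose of `M` and the translation `t`. The inverse transform is
/// `p -> M⁻¹ p - M⁻¹ t`, where `M⁻¹ = transpose(inverse_transpose)`.
fn inverse_model(inverse_transpose: Mat3, translation: [f32; 3]) -> (Mat3, [f32; 3]) {
    let inv = transpose(inverse_transpose);
    let moved = mul_vec(inv, translation);
    (inv, [-moved[0], -moved[1], -moved[2]])
}
```

For a uniform scale of 2 with translation (1, 2, 3), the model maps (1, 1, 1) to (3, 4, 5), and the inverse maps it back.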

## Objective

`insert_or_spawn_batch` is due to be deprecated eventually (#15704), and

removing uses internally will make that easier.

## Solution

Replaced internal uses of `insert_or_spawn_batch` with

`try_insert_batch` (non-panicking variant because

`insert_or_spawn_batch` didn't panic).

All of the internal uses are in rendering code. Since retained rendering was meant to get rid of non-opaque entity IDs, I assume the code was just using `insert_or_spawn_batch` because `insert_batch` didn't exist, and not because it actually wanted to spawn something. However, I am *not* confident in my ability to judge rendering code.

# Objective

Component `require()` IDE integration is fully broken, as of #16575.

## Solution

This reverts us back to the previous "put the docs on Component trait" impl. This _does_ reduce the accessibility of the required components in Rust docs, but the complete erasure of the "required component IDE experience" is not worth the price of slightly increased prominence of requires in docs.

Additionally, Rust Analyzer has recently started including derive

attributes in suggestions, so we aren't losing that benefit of the

proc_macro attribute impl.

This reverts commit 0b5302d96a.

# Objective

- Fixes #18158

- #17482 introduced rendering changes and was merged a bit too fast

## Solution

- Revert #17482 so that it can be redone and its rendering changes discussed before being merged. This will make it easier to compare changes with main in the known "valid" state.

This is not a judgment on the work done in #17482, which is still interesting.

# Objective

Transparently uses simple `EnvironmentMapLight`s to mimic

`AmbientLight`s. Implements the first part of #17468, but I can

implement hemispherical lights in this PR too if needed.

## Solution

- A function `EnvironmentMapLight::solid_color(&mut Assets<Image>, Color)` is provided to make an environment light with a solid color.

- A new system is added to `SimulationLightSystems` that maps

`AmbientLight`s on views or the world to a corresponding

`EnvironmentMapLight`.

I have never worked with (or on) Bevy before, so nitpicky comments on

how I did things are appreciated :).

## Testing

Testing was done on a modified version of the `3d/lighting` example,

where I removed all lights except the ambient light. I have not included

the example, but can if required.

## Migration

`bevy_pbr::AmbientLight` has been deprecated, so all usages of it should

be replaced by a `bevy_pbr::EnvironmentMapLight` created with

`EnvironmentMapLight::solid_color` placed on the camera. There is no

alternative to ambient lights as resources.

This commit makes the

`mark_meshes_as_changed_if_their_materials_changed` system use the new

`AssetChanged<MeshMaterial3d>` query filter in addition to

`Changed<MeshMaterial3d>`. This ensures that we update the

`MeshInputUniform`, which contains the bindless material slot. Updating

the `MeshInputUniform` fixes problems that occurred when the

`MeshBindGroupAllocator` reallocated meshes in such a way as to change

their bindless slot.

Closes #18102.

# Objective

Fixes https://github.com/bevyengine/bevy/issues/17590.

## Solution

`prepare_volumetric_fog_uniforms` adds a uniform for each combination of

fog volume and view. But it only allocated enough uniforms for one fog

volume per view.
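As a worked count (the function name below is hypothetical): with 2 views and 3 fog volumes, 6 uniforms are needed, whereas allocating for one fog volume per view reserves only 2.

```rust
/// Number of fog uniforms that must be reserved: one per
/// (fog volume, view) combination, not one per view.
fn fog_uniform_count(view_count: usize, fog_volume_count: usize) -> usize {
    view_count * fog_volume_count
}
```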

## Testing

Ran the `volumetric_fog` example with 1/2/3/4 fog volumes. Also checked

the `fog_volumes` and `scrolling_fog` examples (without multiple

volumes). Win10/Vulkan/Nvidia.

To test multiple views I tried adding fog volumes to the `split_screen`

example. This doesn't quite work - the fog should be centred on the fox,

but instead it's centred on the window. Same result with and without the

PR, so I'm assuming it's a separate bug.

# Objective

As discussed in #14275, Bevy is currently too prone to panic, and makes

the easy / beginner-friendly way to do a large number of operations just

to panic on failure.

This is seriously frustrating in library code, but it also slows down development, as many of the `Query::single` panics can actually safely be an early return (these panics are often due to a small ordering issue or a change in game state).

More critically, in most "finished" products, panics are unacceptable: any unexpected failures should be handled elsewhere. With the advent of good system error handling, we can now remove this.

Note: I was instrumental in a) introducing this idea in the first place

and b) pushing to make the panicking variant the default. The

introduction of both `let else` statements in Rust and the fancy system

error handling work in 0.16 have changed my mind on the right balance

here.

## Solution

1. Make `Query::single` and `Query::single_mut` (and other random

related methods) return a `Result`.

2. Handle all of Bevy's internal usage of these APIs.

3. Deprecate `Query::get_single` and friends, since we've moved their

functionality to the nice names.

4. Add detailed advice on how to best handle these errors.

Generally I like the diff here, although `get_single().unwrap()` in

tests is a bit of a downgrade.

## Testing

I've done a global search for `.single` to track down any missed

deprecated usages.

As to whether or not all the migrations were successful, that's what CI

is for :)

## Future work

~~Rename `Query::get_single` and friends to `Query::single`!~~

~~I've opted not to do this in this PR, and smear it across two releases in order to ease the migration. Successive deprecations are much easier to manage than the semantics and types shifting under your feet.~~

Cart has convinced me to change my mind on this; see

https://github.com/bevyengine/bevy/pull/18082#discussion_r1974536085.

## Migration guide

`Query::single`, `Query::single_mut` and their `QueryState` equivalents

now return a `Result`. Generally, you'll want to:

1. Use Bevy 0.16's system error handling to return a `Result` using the

`?` operator.

2. Use a `let Ok(data) = query.single() else { return; };` block to return early if it's an expected failure.

3. Use `unwrap()` or `Ok` destructuring inside of tests.

The old `Query::get_single` (etc) methods which did this have been

deprecated.
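Below is a minimal sketch of the first two options in plain Rust rather than Bevy. The `single` function is a hypothetical stand-in for `Query::single` operating over a slice, and `SingleError` only loosely mirrors Bevy's actual error type.

```rust
/// Hypothetical stand-in for the error `Query::single` now returns.
#[derive(Debug, PartialEq)]
enum SingleError {
    NoEntities,
    MultipleEntities,
}

/// Models `Query::single`: exactly one match, otherwise an error.
fn single<T: Copy>(items: &[T]) -> Result<T, SingleError> {
    match items {
        [only] => Ok(*only),
        [] => Err(SingleError::NoEntities),
        _ => Err(SingleError::MultipleEntities),
    }
}

/// Option 1: propagate the error with `?` (Bevy systems may return a `Result`).
fn player_speed(players: &[f32]) -> Result<f32, SingleError> {
    let speed = single(players)?;
    Ok(speed * 2.0)
}

/// Option 2: `let else` early return when failure is expected,
/// e.g. because the player hasn't spawned yet.
fn maybe_speed(players: &[f32]) -> f32 {
    let Ok(speed) = single(players) else {
        return 0.0;
    };
    speed
}
```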

## Objective

Alternative to #18001.

- Now that systems can handle the `?` operator, `get_entity` returning

`Result` would be more useful than `Option`.

- With `get_entity` being more flexible, combined with entity commands

now checking the entity's existence automatically, the panic in `entity`

isn't really necessary.

## Solution

- Changed `Commands::get_entity` to return `Result<EntityCommands, EntityDoesNotExistError>`.

- Removed panic from `Commands::entity`.

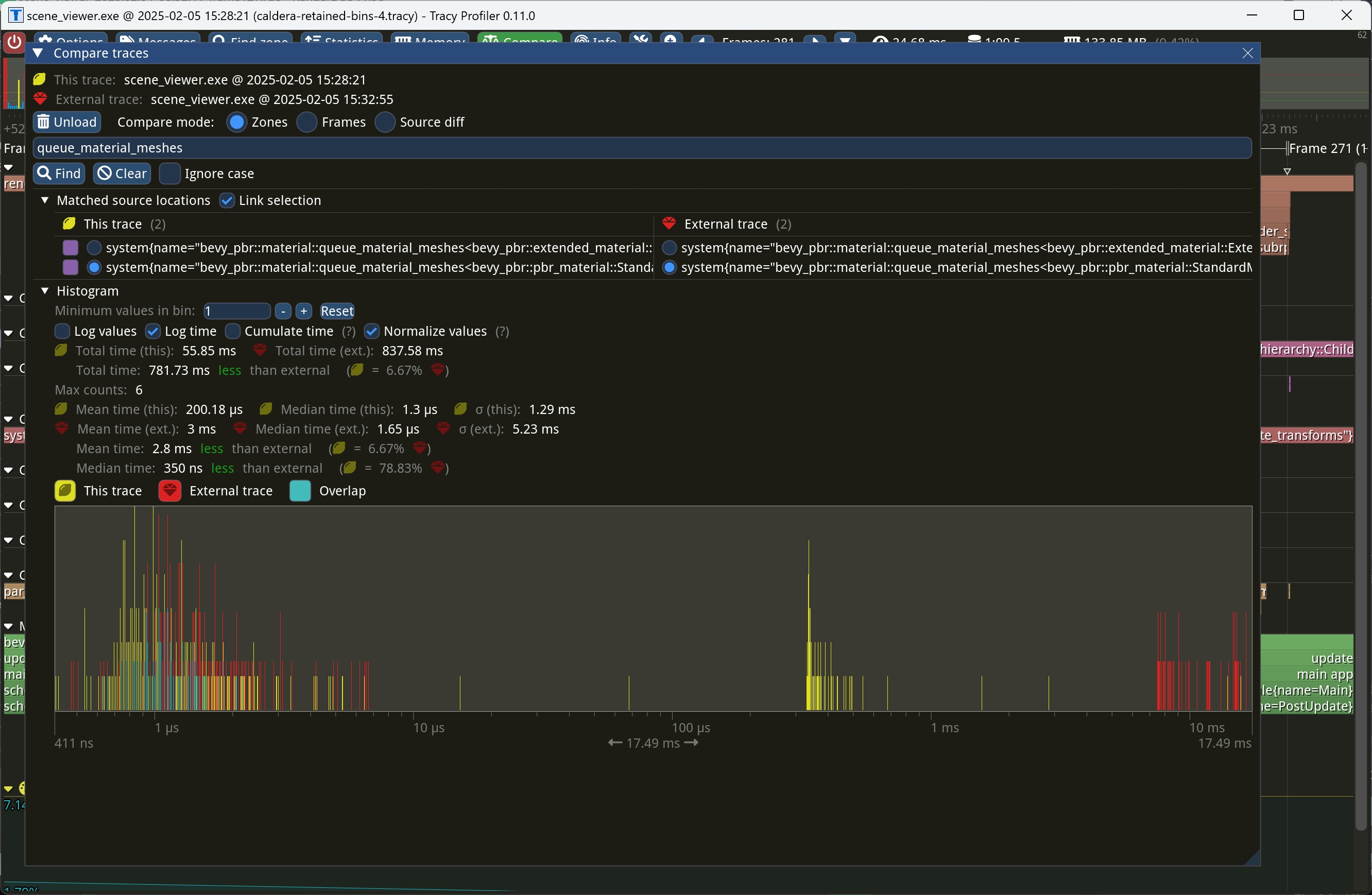

Even though opaque deferred entities aren't placed into the `Opaque3d`

bin, we still want to cache them as though they were, so that we don't

have to re-queue them every frame. This commit implements that logic,

reducing the time of `queue_material_meshes` to near-zero on Caldera.

Currently, the structure-level `#[uniform]` attribute of `AsBindGroup`

creates a binding array of individual buffers, each of which contains

data for a single material. A more efficient approach would be to

provide a single buffer with an array containing all of the data for all

materials in the bind group. Because `StandardMaterial` uses

`#[uniform]`, this can be notably inefficient with large numbers of

materials.

This patch introduces a new attribute on `AsBindGroup`, `#[data]`, which

works identically to `#[uniform]` except that it concatenates all the

data into a single buffer that the material bind group allocator itself

manages. It also converts `StandardMaterial` to use this new

functionality. This effectively provides the "material data in arrays"

feature.
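The sketch below illustrates the layout change using a simplified model. The names `MaterialData` and `MaterialDataBuffer` are hypothetical, and a `Vec` stands in for the GPU buffer. Each material occupies one slot of a shared array rather than owning a buffer of its own, and freed slots are reused.

```rust
/// Hypothetical per-material data: a stand-in for the struct a `#[data]` attribute would cover.
#[derive(Clone, Copy, Debug, PartialEq)]
struct MaterialData {
    base_color: [f32; 4],
}

/// One shared array per bind group, indexed by material slot,
/// instead of a separate buffer for every material.
#[derive(Default)]
struct MaterialDataBuffer {
    records: Vec<MaterialData>,
    free_slots: Vec<usize>,
}

impl MaterialDataBuffer {
    /// Writes `data` into a free slot (reusing freed ones first) and returns
    /// the slot, which a shader would use to index the array.
    fn insert(&mut self, data: MaterialData) -> usize {
        if let Some(slot) = self.free_slots.pop() {
            self.records[slot] = data;
            slot
        } else {
            self.records.push(data);
            self.records.len() - 1
        }
    }

    /// Marks a slot reusable; its stale contents are overwritten on reuse.
    fn remove(&mut self, slot: usize) {
        self.free_slots.push(slot);
    }

    fn get(&self, slot: usize) -> MaterialData {
        self.records[slot]
    }
}
```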

# Objective

Allow the prepass to run without `ATTRIBUTE_NORMAL`.

This is needed for custom materials with non-standard vertex attributes, for example a voxel material with manually packed vertex data.

Fixes #13054.

This PR covers the first part of the **stale** PR #13569 to only focus

on fixing #13054.

## Solution

- Only push the normal attribute to `vertex_attributes` when the layout contains `Mesh::ATTRIBUTE_NORMAL`.

## Testing

- Did you test these changes? If so, how?

**Tested the fix on my own project with a mesh without normal

attribute.**

- Are there any parts that need more testing?

**I don't think so.**

- How can other people (reviewers) test your changes? Is there anything

specific they need to know?

**Prepass should not be blocked on a mesh without normal attributes

(with or without custom material).**

- If relevant, what platforms did you test these changes on, and are

there any important ones you can't test?

**Probably irrelevant, but Windows/Vulkan.**

# Objective

- Fixes #17960

## Solution

- Followed the [edition upgrade guide](https://doc.rust-lang.org/edition-guide/editions/transitioning-an-existing-project-to-a-new-edition.html)

## Testing

- CI

---

## Summary of Changes

### Documentation Indentation

When using lists in documentation, proper indentation is now linted for.

This means subsequent lines within the same list item must start at the

same indentation level as the item.

```rust
/* Valid */
/// - Item 1
///   Run-on sentence.
/// - Item 2
struct Foo;

/* Invalid */
/// - Item 1
/// Run-on sentence.
/// - Item 2
struct Bar;
```

### Implicit `!` to `()` Conversion

`!` (the never return type, returned by `panic!`, etc.) no longer implicitly converts to `()`. This is particularly painful for systems with `todo!` or `panic!` statements, as they will no longer be functions returning `()` (or `Result<()>`), making them invalid systems for functions like `add_systems`. The ideal fix would be to accept functions returning `!` (or rather, _not_ returning), but this is blocked on the [stabilisation of the `!` type itself](https://doc.rust-lang.org/std/primitive.never.html), which has not happened yet.

The "simple" fix would be to add an explicit `-> ()` to system

signatures (e.g., `|| { todo!() }` becomes `|| -> () { todo!() }`).

However, this is _also_ banned, as there is an existing lint which (IMO,

incorrectly) marks this as an unnecessary annotation.

So, the "fix" (read: workaround) is to put these kinds of `|| -> ! { ... }` closures into variables and give the variable an explicit type (e.g., `fn()`).

```rust
// Valid
let system: fn() = || todo!("Not implemented yet!");
app.add_systems(..., system);

// Invalid
app.add_systems(..., || todo!("Not implemented yet!"));
```

### Temporary Variable Lifetimes

The order in which temporary variables are dropped has changed. The

simple fix here is _usually_ to just assign temporaries to a named

variable before use.

### `gen` is a keyword

We can no longer use the name `gen` as it is reserved for a future

generator syntax. This involved replacing uses of the name `gen` with

`r#gen` (the raw-identifier syntax).
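For example, a method named `gen` on a hypothetical `Counter` type must be both declared and called using the raw identifier `r#gen`:

```rust
/// Hypothetical type with a method that would naturally be called `gen`.
struct Counter(u32);

impl Counter {
    // `gen` is reserved in Rust 2024, so the raw identifier is required
    // both here and at every call site.
    fn r#gen(&mut self) -> u32 {
        self.0 += 1;
        self.0
    }
}
```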

### Formatting has changed

Use statements have had the order of imports changed, causing a substantial +/-3,000 diff when applied. For now, I have opted out of this change by amending `rustfmt.toml`:

```toml
style_edition = "2021"
```

This preserves the original formatting for now, reducing the size of

this PR. It would be a simple followup to update this to 2024 and run

`cargo fmt`.

### New `use<>` Opt-Out Syntax

Lifetimes are now implicitly included in RPIT types. In a handful of instances, `+ use<...>` had to be added to satisfy the borrow checker, but there may be more cases where it _should_ be added to avoid breakages in user code.

### `MyUnitStruct { .. }` is an invalid pattern

Previously, you could match against unit structs (and unit enum

variants) with a `{ .. }` destructuring. This is no longer valid.

### Pretty much every use of `ref` and `mut` is gone

Pattern binding has changed to the point where these terms are largely

unused now. They still serve a purpose, but it is far more niche now.

### `iter::repeat(...).take(...)` is bad

New lint recommends using the more explicit `iter::repeat_n(..., ...)`

instead.
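Here is a small before/after illustration (the function names are hypothetical). Both versions produce the same `Vec`, and `std::iter::repeat_n` has been stable since Rust 1.82:

```rust
use std::iter;

/// Before: an unbounded `repeat` iterator, cut off by `take`.
fn copies_old(value: &str, n: usize) -> Vec<String> {
    iter::repeat(value.to_string()).take(n).collect()
}

/// After: `repeat_n` states the count up front.
fn copies(value: &str, n: usize) -> Vec<String> {
    iter::repeat_n(value.to_string(), n).collect()
}
```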

## Migration Guide

The lifetimes of functions using return-position impl-trait (RPIT) are

likely _more_ conservative than they had been previously. If you

encounter lifetime issues with such a function, please create an issue

to investigate the addition of `+ use<...>`.

## Notes

- Check the individual commits for a clearer breakdown for what

_actually_ changed.

---------

Co-authored-by: François Mockers <francois.mockers@vleue.com>

# Objective

`Eq`/`PartialEq` are currently implemented for `MeshMaterial{2|3}d` only

through the derive macro. Since we don't have perfect derive yet, the

impls are only present for `M: Eq` and `M: PartialEq`. On the other

hand, I want to be able to compare material components for my toy

reactivity project.

## Solution

Switch to manual `Eq`/`PartialEq` impl.
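The sketch below shows why the manual impl helps. It uses hypothetical stand-ins for `Handle` and `MeshMaterial3d` rather than Bevy's real types. The derive would require `M: PartialEq`, whereas comparing only the handle's ID needs no bound on `M`.

```rust
use std::marker::PhantomData;

/// Hypothetical stand-in for an asset handle: an ID tagged with asset type `A`.
struct Handle<A> {
    id: u64,
    _marker: PhantomData<A>,
}

/// Hypothetical stand-in for `MeshMaterial3d<M>`.
struct MeshMaterial3d<M>(Handle<M>);

// `#[derive(PartialEq, Eq)]` would emit `impl<M: PartialEq> PartialEq ...`,
// so components over non-comparable materials couldn't be compared at all.
// Comparing just the handle ID needs no bound on `M`.
impl<M> PartialEq for MeshMaterial3d<M> {
    fn eq(&self, other: &Self) -> bool {
        self.0.id == other.0.id
    }
}

impl<M> Eq for MeshMaterial3d<M> {}
```

With these impls, two components can be compared even when the material type itself has no `PartialEq` impl.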

## Testing

Boy I hope this didn't break anything!

PR #17898 regressed this, causing much of #17970. This commit fixes the

issue by freeing and reallocating materials in the

`MaterialBindGroupAllocator` on change. Note that more efficiency is

possible, but I opted for the simple approach because (1) we should fix

this bug ASAP; (2) I'd like #17965 to land first, because that unlocks

the biggest potential optimization, which is not recreating the bind

group if it isn't necessary to do so.

We might not be able to prepare a material on the first frame we

encounter a mesh using it for various reasons, including that the

material hasn't been loaded yet or that preparing the material is

exceeding the per-frame cap on number of bytes to load. When this

happens, we currently try to find the material in the

`MaterialBindGroupAllocator`, fail, and then fall back to group 0, slot

0, the default `MaterialBindGroupId`, which is obviously incorrect.

Worse, we then fail to dirty the mesh and reextract it when we *do*

finish preparing the material, so the mesh will continue to be rendered

with an incorrect material.

This patch fixes both problems. In `collect_meshes_for_gpu_building`, if we fail to find a mesh's material in the `MaterialBindGroupAllocator`, we detect that case, bail out, and add the mesh to a list, `MeshesToReextractNextFrame`. On subsequent frames, we process all the meshes in `MeshesToReextractNextFrame` as though they were changed. This ensures both that we don't render a mesh if its material hasn't been loaded and that we start rendering the mesh once its material does load.
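The sketch below models the bail-out-and-retry flow in plain Rust. `Allocator`, `collect_meshes`, and the ID aliases are hypothetical. For convenience, the deferred list carries each mesh's material ID; the real `MeshesToReextractNextFrame` only needs to identify which meshes to process again.

```rust
use std::collections::HashMap;

type MeshId = u32;
type MaterialId = u32;

/// Hypothetical allocator state: prepared materials and their bind-group slot.
struct Allocator {
    prepared: HashMap<MaterialId, usize>,
}

/// Processes changed meshes plus meshes deferred last frame. A mesh whose
/// material isn't prepared yet is skipped (rather than rendered with a default
/// slot) and deferred again, so it is picked up once the material is ready.
fn collect_meshes(
    changed: &[(MeshId, MaterialId)],
    deferred_last_frame: &HashMap<MeshId, MaterialId>,
    allocator: &Allocator,
) -> (Vec<(MeshId, usize)>, HashMap<MeshId, MaterialId>) {
    let mut ready = Vec::new();
    let mut reextract_next_frame = HashMap::new();
    let deferred = deferred_last_frame.iter().map(|(&mesh, &material)| (mesh, material));
    for (mesh, material) in changed.iter().copied().chain(deferred) {
        match allocator.prepared.get(&material) {
            Some(&slot) => ready.push((mesh, slot)),
            None => {
                reextract_next_frame.insert(mesh, material);
            }
        }
    }
    (ready, reextract_next_frame)
}
```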

This was first noticed in the intermittent Pixel Eagle failures in the

`testbed_3d` patch in #17898, although the problem has actually existed

for some time. I believe it just so happened that the changes to the

allocator in that PR caused the problem to appear more commonly than it

did before.

This patch fixes two bugs in the new non-bindless material allocator

that landed in PR #17898:

1. A debug assertion to prevent double frees had been flipped: we

checked to see whether the slot was empty before freeing, while we

should have checked to see whether the slot was full.

2. The non-bindless allocator returned `None` when querying a slab that

hadn't been prepared yet instead of returning a handle to that slab.

This resulted in a 1-frame delay when modifying materials. In the

`animated_material` example, this resulted in the meshes never showing

up at all, because that example changes every material every frame.

Together with #17979, this patch locally fixes the problems with

`animated_material` on macOS that were reported in #17970.

Two-phase occlusion culling can be helpful for shadow maps just as it

can for a prepass, in order to reduce vertex and alpha mask fragment

shading overhead. This patch implements occlusion culling for shadow

maps from directional lights, when the `OcclusionCulling` component is

present on the entities containing the lights. Shadow maps from point

lights are deferred to a follow-up patch. Much of this patch involves

expanding the hierarchical Z-buffer to cover shadow maps in addition to

standard view depth buffers.

The `scene_viewer` example has been updated to add `OcclusionCulling` to

the directional light that it creates.

This improved the performance of the rend3 sci-fi test scene when

enabling shadows.

Currently, Bevy's implementation of bindless resources is rather

unusual: every binding in an object that implements `AsBindGroup` (most

commonly, a material) becomes its own separate binding array in the

shader. This is inefficient for two reasons:

1. If multiple materials reference the same texture or other resource,

the reference to that resource will be duplicated many times. This

increases `wgpu` validation overhead.

2. It creates many unused binding array slots. This increases `wgpu` and

driver overhead and makes it easier to hit limits on APIs that `wgpu`

currently imposes tight resource limits on, like Metal.

This PR fixes these issues by switching Bevy to use the standard

approach in GPU-driven renderers, in which resources are de-duplicated

and passed as global arrays, one for each type of resource.

Along the way, this patch introduces per-platform resource limits and

bumps them from 16 resources per binding array to 64 resources per bind

group on Metal and 2048 resources per bind group on other platforms.

(Note that the number of resources per *binding array* isn't the same as the number of resources per *bind group*; as it currently stands, if all the PBR features are turned on, Bevy could pack as many as 496 resources into a single slab.) The limits have been increased because `wgpu` now has universal support for partially-bound binding arrays, which means that we no longer need to fill the binding arrays with fallback resources on Direct3D 12. The `#[bindless(LIMIT)]` declaration when deriving `AsBindGroup` can now simply be written `#[bindless]` in order to have Bevy choose a default limit size for the current platform.

Custom limits are still available with the new

`#[bindless(limit(LIMIT))]` syntax: e.g. `#[bindless(limit(8))]`.

The material bind group allocator has been completely rewritten. Now there are two allocators: one for bindless materials and one for non-bindless materials. The new non-bindless material allocator simply maintains a 1:1 mapping from material to bind group. The new bindless material allocator maintains a list of slabs and allocates materials into slabs on a first-fit basis. This unfortunately makes its performance O(number of resources per object * number of slabs), but the number of slabs is likely to be low, and it's planned to become even lower in the future with `wgpu` improvements. Resources are de-duplicated within a slab and reference counted. So, for instance, if multiple materials refer to the same texture, that texture will exist only once in the appropriate binding array.
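The sketch below is a toy model of the bindless allocator's bookkeeping, under several assumptions. Resources are plain integer IDs. Each slab's capacity is a single count, whereas the real limits are per platform, such as 64 per bind group on Metal and 2048 elsewhere. All names are hypothetical. The model shows first-fit placement together with per-slab de-duplication and reference counting.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical ID of a texture, sampler, or buffer referenced by a material.
type ResourceId = u32;

/// One slab: a binding array of de-duplicated, reference-counted resources.
struct Slab {
    capacity: usize,
    refs: HashMap<ResourceId, u32>,
}

impl Slab {
    /// Number of binding-array entries this material would add to the slab.
    fn new_entries(&self, resources: &[ResourceId]) -> usize {
        let unique: HashSet<&ResourceId> = resources.iter().collect();
        unique.into_iter().filter(|r| !self.refs.contains_key(*r)).count()
    }
}

struct BindlessAllocator {
    slab_capacity: usize,
    slabs: Vec<Slab>,
}

impl BindlessAllocator {
    /// First fit: use the first slab with room for the material's new
    /// resources, opening a new slab if none has room. Returns the slab index.
    fn allocate(&mut self, resources: &[ResourceId]) -> usize {
        let fits = |s: &Slab| s.refs.len() + s.new_entries(resources) <= s.capacity;
        let index = match self.slabs.iter().position(fits) {
            Some(i) => i,
            None => {
                self.slabs.push(Slab { capacity: self.slab_capacity, refs: HashMap::new() });
                self.slabs.len() - 1
            }
        };
        for r in resources {
            *self.slabs[index].refs.entry(*r).or_insert(0) += 1;
        }
        index
    }

    /// Drops one reference per resource; entries reaching zero leave the slab.
    fn free(&mut self, slab: usize, resources: &[ResourceId]) {
        let refs = &mut self.slabs[slab].refs;
        for r in resources {
            let now_unused = match refs.get_mut(r) {
                Some(count) => {
                    *count -= 1;
                    *count == 0
                }
                None => false,
            };
            if now_unused {
                refs.remove(r);
            }
        }
    }
}
```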

To support these new features, this patch adds the concept of a *bindless descriptor* to the `AsBindGroup` trait. The bindless descriptor allows the material bind group allocator to probe the layout of the material, now that an array of `BindGroupLayoutEntry` records is insufficient to describe the group. The `#[derive(AsBindGroup)]` macro has been heavily modified to support the new features. The most important user-facing change to that macro is that the struct-level `uniform` attribute, `#[uniform(BINDING_NUMBER, StandardMaterial)]`, now reads `#[uniform(BINDLESS_INDEX, MATERIAL_UNIFORM_TYPE, binding_array(BINDING_NUMBER))]`, allowing the material to specify the binding number for the binding array that holds the uniform data.

To make this patch simpler, I removed support for bindless

`ExtendedMaterial`s, as well as field-level bindless uniform and storage

buffers. I intend to add back support for these as a follow-up. Because

they aren't in any released Bevy version yet, I figured this was OK.

Finally, this patch updates `StandardMaterial` for the new bindless

changes. Generally, code throughout the PBR shaders that looked like

`base_color_texture[slot]` now looks like

`bindless_2d_textures[material_indices[slot].base_color_texture]`.

This patch fixes a system hang that I experienced on the [Caldera test]

when running with `caldera --random-materials --texture-count 100`. The

time per frame is around 19.75 ms, down from 154.2 ms in Bevy 0.14: a

7.8× speedup.

[Caldera test]: https://github.com/DGriffin91/bevy_caldera_scene

Deferred rendering currently doesn't support occlusion culling. This PR

implements it in a straightforward way, mirroring what we already do for

the non-deferred pipeline.

On the rend3 sci-fi base test scene, this resulted in roughly a 2× speedup when applied on top of my other patches. For that scene, it was useful to add another option to the scene viewer, `--add-light`, which forces the addition of a shadow-casting light; I included that option in this patch.

This commit restructures the multidrawable batch set builder for better

performance in various ways:

* The bin traversal is optimized to make the best use of the CPU cache.

* The inner loop that iterates over the bins, which is the hottest part

of `batch_and_prepare_binned_render_phase`, has been shrunk as small as

possible.

* Where possible, multiple elements are added to or reserved from GPU

buffers as a batch instead of one at a time.

* Methods that LLVM wasn't inlining have been marked `#[inline]` where

doing so would unlock optimizations.

This code has also been refactored to avoid duplication between the

logic for indexed and non-indexed meshes via the introduction of a

`MultidrawableBatchSetPreparer` object.

Together, this improved the `batch_and_prepare_binned_render_phase` time

on Caldera by approximately 2×.

Eventually, we should optimize the batchable-but-not-multidrawable and

unbatchable logic as well, but these meshes are much rarer, so in the

interests of keeping this patch relatively small I opted to leave those

to a follow-up.

# Objective

Fix incorrect mesh culling where objects (particularly directional

shadows) were being incorrectly culled during the early preprocessing

phase. The issue manifested specifically on Apple M1 GPUs but not on

newer devices like the M4. The bug was in the

`view_frustum_intersects_obb` function, where including the w component

(plane distance) in the dot product calculations led to false positive

culling results. This caused objects to be incorrectly culled before

shadow casting could begin.

## Issue Details

The problem of missing shadows is reproducible on Apple M1 GPUs as of

this commit (bisected):

```

00722b8d0 Make indirect drawing opt-out instead of opt-in, enabling multidraw by default. (#16757)

```

and as recent as this commit:

```

c818c9214 Add option to animate materials in many_cubes (#17927)

```

- The frustum culling calculation incorrectly included the w component

(plane distance) when transforming basis vectors

- The relative radius calculation should only consider directional

transformation (xyz), not positional information (w)

- This caused false positive culling specifically on M1 devices likely

due to different device-specific floating-point behavior

- When objects were incorrectly culled, `early_instance_count` never

incremented, leading to missing geometry in the shadow pass
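The shader function is WGSL; the following is a Rust sketch of the corrected math. It uses plain arrays instead of Bevy's vector and matrix types. The half-space convention is an assumption here: the normal points into the frustum, and `w` is the signed distance. The key point is that the relative radius projects the box's basis vectors onto the plane's xyz normal only, never its `w` component.

```rust
type Vec3 = [f32; 3];

fn dot(a: Vec3, b: Vec3) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Projected half-size of an oriented box onto a plane normal.
/// `plane` is (normal.x, normal.y, normal.z, distance); only xyz is a
/// direction, so `w` is deliberately ignored. `axes` are the box's
/// world-space basis vectors (columns of its 3x3 transform).
fn relative_radius(plane: [f32; 4], axes: [Vec3; 3], half_extents: Vec3) -> f32 {
    let normal = [plane[0], plane[1], plane[2]];
    axes.iter()
        .zip(half_extents)
        .map(|(axis, h)| dot(normal, *axis).abs() * h)
        .sum()
}

/// Keeps the box unless its center lies further behind the plane than its
/// relative radius.
fn intersects_half_space(plane: [f32; 4], center: Vec3, axes: [Vec3; 3], half_extents: Vec3) -> bool {
    let normal = [plane[0], plane[1], plane[2]];
    dot(normal, center) + plane[3] + relative_radius(plane, axes, half_extents) > 0.0
}
```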

## Testing

- Tested on M1 and M4 devices to verify the fix

- Verified shadows and geometry render correctly on both platforms

- Confirmed the solution matches the existing Rust implementation's

behavior for calculating the relative radius:

c818c92143/crates/bevy_render/src/primitives/mod.rs (L77-L87)

- The fix resolves a mathematical error in the frustum culling

calculation while maintaining correct culling behavior across all

platforms.

---

## Showcase

`c818c9214`

<img width="1284" alt="c818c9214" src="https://github.com/user-attachments/assets/fe1c7ea9-b13d-422e-b12d-f1cd74475213" />

`mate-h/frustum-cull-fix`

<img width="1283" alt="frustum-cull-fix" src="https://github.com/user-attachments/assets/8a9ccb2a-64b6-4d5e-a17d-ac4798da5b51" />

The `check_visibility` system currently follows this algorithm:

1. Store all meshes that were visible last frame in the

`PreviousVisibleMeshes` set.

2. Determine which meshes are visible. For each such visible mesh,

remove it from `PreviousVisibleMeshes`.

3. Mark all meshes that remain in `PreviousVisibleMeshes` as invisible.

This algorithm would be correct if `check_visibility` were the only system that marked meshes visible. However, it's not: the shadow-related systems `check_dir_light_mesh_visibility` and `check_point_light_mesh_visibility` can as well. This results in the following sequence of events for meshes that are in a shadow map but *not* visible from a camera:

A. `check_visibility` runs, finds that no camera contains these meshes,

and marks them hidden, which sets the changed flag.

B. `check_dir_light_mesh_visibility` and/or `check_point_light_mesh_visibility` run, discover that these meshes are visible in the shadow map, and mark them as visible, again setting the `ViewVisibility` changed flag.

C. During the extraction phase, the mesh extraction system sees that

`ViewVisibility` is changed and re-extracts the mesh.

This is inefficient and results in needless work during rendering.

This patch fixes the issue in two ways:

* The `check_dir_light_mesh_visibility` and

`check_point_light_mesh_visibility` systems now remove meshes that they

discover from `PreviousVisibleMeshes`.

* Step (3) above has been moved from `check_visibility` to a separate

system, `mark_newly_hidden_entities_invisible`. This system runs after

all visibility-determining systems, ensuring that

`PreviousVisibleMeshes` contains only those meshes that truly became

invisible on this frame.
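The following is a toy model of the fixed flow in plain Rust. `VisibilityModel` and its methods are hypothetical, and the `changed` set stands in for the `ViewVisibility` change flag. The test scenario is a mesh seen only by a shadow-map system: with the fix, it no longer flips between hidden and visible, so it isn't re-extracted every frame.

```rust
use std::collections::HashSet;

/// Hypothetical entity ID.
type Entity = u32;

#[derive(Default)]
struct VisibilityModel {
    visible: HashSet<Entity>,
    previous_visible: HashSet<Entity>,
    /// Entities whose visibility flag actually changed this frame.
    changed: HashSet<Entity>,
}

impl VisibilityModel {
    /// Step 1: remember what was visible last frame.
    fn begin_frame(&mut self) {
        self.previous_visible = self.visible.clone();
        self.changed.clear();
    }

    /// Called by *every* visibility system (camera, directional light, point
    /// light): a visible entity is removed from the "maybe hidden" set.
    fn mark_visible(&mut self, e: Entity) {
        self.previous_visible.remove(&e);
        if self.visible.insert(e) {
            self.changed.insert(e); // only a hidden-to-visible transition counts
        }
    }

    /// Runs once after all visibility systems, playing the role of
    /// `mark_newly_hidden_entities_invisible`.
    fn mark_newly_hidden(&mut self) {
        for e in self.previous_visible.drain() {
            self.visible.remove(&e);
            self.changed.insert(e);
        }
    }
}
```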

This fix dramatically improves the performance of [the Caldera

benchmark], when combined with several other patches I've submitted.

[the Caldera benchmark]: https://github.com/DGriffin91/bevy_caldera_scene

PR #17688 broke motion vector computation, and therefore motion blur, because it enabled retention of `MeshInputUniform`s, and `MeshInputUniform`s contain the indices of the previous frame's transform and the previous frame's skinned mesh joint matrices. On frame N, if a `MeshInputUniform` is retained on GPU from the previous frame, the `previous_input_index` and `previous_skin_index` would refer to the indices for frame N - 2, not the indices for frame N - 1.

This patch fixes the problems. It solves these issues in two different

ways, one for transforms and one for skins:

1. To fix transforms, this patch supplies the *frame index* to the

shader as part of the view uniforms, and specifies which frame index

each mesh's previous transform refers to. So, in the situation described

above, the frame index would be N, the previous frame index would be N -

1, and the `previous_input_frame_number` would be N - 2. The shader can

now detect this situation and infer that the mesh has been retained, and

can therefore conclude that the mesh's transform hasn't changed.

2. To fix skins, this patch replaces the explicit `previous_skin_index`

with an invariant that the index of the joints for the current frame and

the index of the joints for the previous frame are the same. This means

that the `MeshInputUniform` never has to be updated even if the skin is

animated. The downside is that we have to copy joint matrices from the

previous frame's buffer to the current frame's buffer in

`extract_skins`.
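Here is a minimal sketch of the frame-index check from point 1, written in Rust rather than WGSL. `MeshInput` and its fields are hypothetical simplifications, not the actual `MeshInputUniform` layout; a translation stands in for the full transform.

```rust
/// Hypothetical per-mesh input; a translation stands in for the transform.
struct MeshInput {
    current_translation: [f32; 3],
    previous_translation: [f32; 3],
    /// Frame on which `previous_translation` was last written.
    previous_input_frame_number: u32,
}

/// Returns the translation to treat as last frame's for motion vectors.
/// If the previous data wasn't written on frame N - 1, the input was
/// retained, so the mesh hasn't moved and the current transform is used.
fn previous_translation(mesh: &MeshInput, frame_index: u32) -> [f32; 3] {
    if mesh.previous_input_frame_number == frame_index.wrapping_sub(1) {
        mesh.previous_translation
    } else {
        mesh.current_translation
    }
}
```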

The rationale behind (2) is that we currently have no mechanism to

detect when joints that affect a skin have been updated, short of

comparing all the transforms and setting a flag for

`extract_meshes_for_gpu_building` to consume, which would regress

performance as we want `extract_skins` and

`extract_meshes_for_gpu_building` to be able to run in parallel.

To test this change, use `cargo run --example motion_blur`.

Currently, the specialized pipeline cache maps a (view entity, mesh

entity) tuple to the retained pipeline for that entity. This causes two

problems:

1. Using the view entity is incorrect, because the view entity isn't

stable from frame to frame.

2. Switching the view entity to a `RetainedViewEntity`, which is

necessary for correctness, significantly regresses performance of

`specialize_material_meshes` and `specialize_shadows` because of the

loss of the fast `EntityHash`.

This patch fixes both problems by switching to a *two-level* hash table.

The outer level of the table maps each `RetainedViewEntity` to an inner

table, which maps each `MainEntity` to its pipeline ID and change tick.

Because we loop over views first and, within that loop, loop over

entities visible from that view, we hoist the slow lookup of the view

entity out of the inner entity loop.
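The following is a minimal sketch of the two-level layout using std `HashMap`s. The type aliases are hypothetical stand-ins. The inner table would use a fast entity hash, which this sketch does not model, and change-tick handling is reduced to storing the tick.

```rust
use std::collections::HashMap;

/// Hypothetical stand-ins for a stable view key, a main-world entity,
/// a pipeline ID, and a change tick.
type RetainedViewEntity = (u32, u32);
type MainEntity = u64;
type PipelineId = usize;
type Tick = u32;

#[derive(Default)]
struct SpecializedPipelineCache {
    /// Outer: view -> inner table; inner: entity -> (pipeline, tick).
    views: HashMap<RetainedViewEntity, HashMap<MainEntity, (PipelineId, Tick)>>,
}

impl SpecializedPipelineCache {
    fn specialize_view(
        &mut self,
        view: RetainedViewEntity,
        visible: &[MainEntity],
        tick: Tick,
        mut specialize: impl FnMut(MainEntity) -> PipelineId,
    ) {
        // The slower view lookup happens once per view, outside the entity loop.
        let per_view = self.views.entry(view).or_default();
        for &entity in visible {
            per_view
                .entry(entity)
                .or_insert_with(|| (specialize(entity), tick));
        }
    }

    /// Removing a view drops its whole inner table, so none of its pipeline
    /// IDs are leaked.
    fn remove_view(&mut self, view: RetainedViewEntity) {
        self.views.remove(&view);
    }
}
```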

Additionally, this patch fixes a bug whereby pipeline IDs were leaked

when removing the view. We still have a problem with leaking pipeline

IDs for deleted entities, but that won't be fixed until the specialized

pipeline cache is retained.

This patch improves performance of the [Caldera benchmark] from 7.8×

faster than 0.14 to 9.0× faster than 0.14, when applied on top of the

global binding arrays PR, #17898.

[Caldera benchmark]: https://github.com/DGriffin91/bevy_caldera_scene

Currently, Bevy rebuilds the buffer containing all the transforms for

joints every frame, during the extraction phase. This is inefficient in

cases in which many skins are present in the scene and their joints

don't move, such as the Caldera test scene.

To address this problem, this commit switches skin extraction to use a

set of retained GPU buffers with allocations managed by the offset

allocator. I use fine-grained change detection in order to determine

which skins need updating. Note that the granularity is on the level of

an entire skin, not individual joints. Using the change detection at

that level would yield poor performance in common cases in which an

entire skin is animated at once. Also, this patch yields additional

performance from the fact that changing joint transforms no longer

requires the skinned mesh to be re-extracted.

Note that this optimization can be a double-edged sword. In

`many_foxes`, fine-grained change detection regressed the performance of

`extract_skins` by 3.4x. This is because every joint is updated every

frame in that example, so change detection is pointless and is pure

overhead. Because the `many_foxes` workload is actually representative

of animated scenes, this patch includes a heuristic that disables

fine-grained change detection if the number of transformed entities in

the frame exceeds a certain fraction of the total number of joints.

Currently, this threshold is set to 25%. Note that this is a crude

heuristic, because it doesn't distinguish between the number of

transformed *joints* and the number of transformed *entities*; however,

it should be good enough to yield the optimum code path most of the

time.
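The heuristic reduces to a single comparison. Here is a sketch using the 25% threshold described above; the function and constant names are hypothetical.

```rust
/// Fraction of joints above which fine-grained change detection is skipped.
const FINE_GRAINED_THRESHOLD: f32 = 0.25;

/// Returns true if only changed skins should be re-uploaded this frame,
/// false if it's cheaper to skip change detection and rewrite every skin.
fn use_fine_grained_change_detection(transformed_entities: usize, total_joints: usize) -> bool {
    (transformed_entities as f32) <= FINE_GRAINED_THRESHOLD * total_joints as f32
}
```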

Finally, this patch fixes a bug whereby skinned meshes are actually being incorrectly retained if the buffer offsets of the joints of those skinned meshes change from frame to frame. To fix this without retaining skins, we would have to re-extract every skinned mesh every frame. Doing this was a significant regression on Caldera. With this PR, by contrast, mesh joints stay at the same buffer offset, so we don't have to update the `MeshInputUniform` containing the buffer offset every frame. This also makes PR #17717 easier to implement, because that PR uses the buffer offset from the previous frame, and the logic for calculating that is simplified if the previous frame's buffer offset is guaranteed to be identical to that of the current frame.

On Caldera, this patch reduces the time spent in `extract_skins` from

1.79 ms to near zero. On `many_foxes`, this patch regresses the

performance of `extract_skins` by approximately 10%-25%, depending on

the number of foxes. This has only a small impact on frame rate.

The GPU can fill out many of the fields in `IndirectParametersMetadata`

using information it already has:

* `early_instance_count` and `late_instance_count` are always

initialized to zero.

* `mesh_index` is already present in the work item buffer as the

`input_index` of the first work item in each batch.

This patch moves these fields to a separate buffer, the *GPU indirect

parameters metadata* buffer. That way, it avoids having to write them on

the CPU during `batch_and_prepare_binned_render_phase`. This effectively

reduces the number of bits that that function must write per mesh from

160 to 64 (in addition to the 64 bits per mesh *instance*).
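A quick layout sketch confirms the bit counts above, assuming every field is a 32-bit `u32` with no padding. The text names only three fields: `mesh_index`, `early_instance_count`, and `late_instance_count`. The two fields that stay on the CPU (called `base_output_index` and `batch_set_index` here) are assumptions, and so are the struct names.

```rust
use std::mem::size_of;

/// Before: everything was written by the CPU for every mesh.
#[allow(dead_code)]
#[repr(C)]
struct IndirectParametersMetadataBefore {
    mesh_index: u32,
    base_output_index: u32, // assumed CPU-only field
    batch_set_index: u32,   // assumed CPU-only field
    early_instance_count: u32,
    late_instance_count: u32,
}

/// After: the part the CPU still writes.
#[allow(dead_code)]
#[repr(C)]
struct CpuMetadata {
    base_output_index: u32,
    batch_set_index: u32,
}

/// After: filled in on the GPU. `mesh_index` comes from the `input_index`
/// of the batch's first work item, and both counts start at zero.
#[allow(dead_code)]
#[repr(C)]
struct GpuMetadata {
    mesh_index: u32,
    early_instance_count: u32,
    late_instance_count: u32,
}

fn main() {
    println!("before: {} bits per mesh", size_of::<IndirectParametersMetadataBefore>() * 8);
    println!("after (CPU-written): {} bits per mesh", size_of::<CpuMetadata>() * 8);
    println!("after (GPU-written): {} bits per mesh", size_of::<GpuMetadata>() * 8);
}
```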

Additionally, this PR refactors `UntypedPhaseIndirectParametersBuffers`

to add another layer, `MeshClassIndirectParametersBuffers`, which allows

abstracting over the buffers corresponding to indexed and non-indexed

meshes. This patch doesn't make much use of this abstraction, but

forthcoming patches will, and it's overall a cleaner approach.
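The sketch below shows one possible shape for the extra layer. It assumes the layer simply pairs one set of buffers for indexed meshes with one set for non-indexed meshes. The generic parameter, the `MeshClass` enum, and `get_mut` are hypothetical, and `Vec<u32>` stands in for the real buffer type.

```rust
/// Whether a mesh is drawn with an index buffer.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum MeshClass {
    Indexed,
    NonIndexed,
}

/// One set of buffers per mesh class, so callers can select the right
/// one without duplicating code for the indexed and non-indexed paths.
#[derive(Default)]
struct MeshClassIndirectParametersBuffers<B> {
    indexed: B,
    non_indexed: B,
}

impl<B> MeshClassIndirectParametersBuffers<B> {
    /// Returns the buffers for the given mesh class.
    fn get_mut(&mut self, class: MeshClass) -> &mut B {
        match class {
            MeshClass::Indexed => &mut self.indexed,
            MeshClass::NonIndexed => &mut self.non_indexed,
        }
    }
}

fn main() {
    let mut buffers: MeshClassIndirectParametersBuffers<Vec<u32>> = Default::default();
    buffers.get_mut(MeshClass::Indexed).push(7);
    buffers.get_mut(MeshClass::NonIndexed).push(9);
    assert_eq!(buffers.indexed, vec![7]);
    assert_eq!(buffers.non_indexed, vec![9]);
}
```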

This didn't seem to have much of an effect by itself on

`batch_and_prepare_binned_render_phase` time, but subsequent PRs

dependent on this PR yield roughly a 2× speedup.

Appending to these vectors is performance-critical in

`batch_and_prepare_binned_render_phase`, so `RawBufferVec`, which

doesn't have the overhead of `encase`, is more appropriate.

The `output_index` field is only used in direct mode, and the

`indirect_parameters_index` field is only used in indirect mode.

Consequently, we can combine them into a single field, reducing the size

of `PreprocessWorkItem` from 96 bits to 64 bits. This matters because

`batch_and_prepare_{binned,sorted}_render_phase` must construct a

`PreprocessWorkItem` every frame for every mesh instance.
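Here is a sketch of the combined work item, assuming all indices are `u32`. The combined field name `output_or_indirect_parameters_index` is an assumption. The key idea is that one 32-bit slot is reinterpreted depending on whether the phase renders in direct or indirect mode.

```rust
use std::mem::size_of;

/// Before: three indices, one of which is always unused.
#[allow(dead_code)]
#[repr(C)]
struct PreprocessWorkItemBefore {
    input_index: u32,
    output_index: u32,              // used only in direct mode
    indirect_parameters_index: u32, // used only in indirect mode
}

/// After: one field whose meaning depends on the rendering mode.
#[allow(dead_code)]
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct PreprocessWorkItem {
    input_index: u32,
    /// Output index in direct mode; indirect parameters index in indirect mode.
    output_or_indirect_parameters_index: u32,
}

fn main() {
    println!("before: {} bits", size_of::<PreprocessWorkItemBefore>() * 8);
    println!("after: {} bits", size_of::<PreprocessWorkItem>() * 8);
}
```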

# Objective

Update typos, fix new typos.

1.29.6 was just released to fix an

[issue](https://github.com/crate-ci/typos/issues/1228) where January's

corrections were not included in the binaries for the last release.

Reminder: typos can be tossed in the monthly

[non-critical corrections issue](https://github.com/crate-ci/typos/issues/1221).

## Solution

I chose to allow `implementors` because what seems like a good argument

is being made [here](https://github.com/crate-ci/typos/issues/1226), and

there is now a PR to address that.

## Discussion

Should I exclude `bevy_mikktspace`?

At one point I think we had an informal policy of "don't mess with

mikktspace until https://github.com/bevyengine/bevy/pull/9050 is merged"

but it doesn't seem like that is likely to be merged any time soon.

I think these particular corrections in mikktspace are fine because

- The same typo seems to have been fixed in that PR

- The entire file containing these corrections was deleted in that PR

## Typo of the Month

correspindong -> corresponding

Currently, invocations of `batch_and_prepare_binned_render_phase` and

`batch_and_prepare_sorted_render_phase` can't run in parallel because

they write to scene-global GPU buffers. After PR #17698,

`batch_and_prepare_binned_render_phase` started accounting for the

lion's share of the CPU time, causing us to be strongly CPU bound on

scenes like Caldera when occlusion culling was on (because of the

overhead of batching for the Z-prepass). Although I eventually plan to

optimize `batch_and_prepare_binned_render_phase`, we can obtain

significant wins now by parallelizing that system across phases.

This commit splits all GPU buffers that

`batch_and_prepare_binned_render_phase` and

`batch_and_prepare_sorted_render_phase` touch into separate buffers

for each phase so that the scheduler can run those phases in parallel.

At the end of batch preparation, we gather the render phases up into a

single resource with a new *collection* phase. Because we already run

mesh preprocessing separately for each phase in order to make occlusion

culling work, this is actually a cleaner separation. For example, mesh

output indices (the unique ID that identifies each mesh instance on GPU)

are now guaranteed to be sequential starting from 0, which will simplify

the forthcoming work to remove them in favor of the compute dispatch ID.

On Caldera, this brings the frame time down to approximately 9.1 ms with

occlusion culling on.
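The split-then-collect structure can be sketched with scoped threads standing in for Bevy's scheduler. Everything here is hypothetical: `PhaseBuffers`, `prepare_phase`, `collect_phases`, and the phase names. `Vec<u32>` stands in for GPU buffers. The sketch shows why per-phase buffers allow parallel preparation and why output indices start from 0 in each phase.

```rust
use std::collections::BTreeMap;
use std::thread;

/// Buffers produced by preparing a single phase.
#[derive(Debug)]
struct PhaseBuffers {
    phase: &'static str,
    /// Output indices for this phase's mesh instances.
    output_indices: Vec<u32>,
}

/// Prepares one phase. It writes only to buffers it owns, so several
/// phases can be prepared concurrently without synchronization.
fn prepare_phase(phase: &'static str, instance_count: u32) -> PhaseBuffers {
    PhaseBuffers {
        phase,
        // Per-phase buffers mean output indices start from 0 in every phase.
        output_indices: (0..instance_count).collect(),
    }
}

/// Collection step: gathers every phase's buffers into a single resource.
fn collect_phases(prepared: Vec<PhaseBuffers>) -> BTreeMap<&'static str, Vec<u32>> {
    prepared
        .into_iter()
        .map(|buffers| (buffers.phase, buffers.output_indices))
        .collect()
}

fn main() {
    let phases = [("opaque", 3), ("prepass", 2), ("shadow", 4)];

    // Prepare each phase on its own scoped thread.
    let prepared: Vec<PhaseBuffers> = thread::scope(|scope| {
        let handles: Vec<_> = phases
            .iter()
            .map(|&(phase, count)| scope.spawn(move || prepare_phase(phase, count)))
            .collect();
        handles
            .into_iter()
            .map(|handle| handle.join().expect("phase preparation panicked"))
            .collect()
    });

    let collected = collect_phases(prepared);
    assert_eq!(collected["shadow"], vec![0, 1, 2, 3]);
    println!("{collected:?}");
}
```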

* Use texture atomics rather than buffer atomics for the visbuffer

(haven't tested perf on a raster-heavy scene yet)

* Unfortunately, clearing the visbuffer now requires a compute pass:

wgpu's `clear_texture` function internally uses a buffer-to-image copy

that's insanely expensive. Ideally it should be using

`vkCmdClearColorImage`, for which I've opened an issue:

https://github.com/gfx-rs/wgpu/issues/7090. For now we'll have to stick

with a custom compute pass and all the extra code that brings.

* Faster resolve depth pass by discarding 0 depth pixels instead of

redundantly writing zero (2x faster for big depth textures like shadow

views)

## Objective

Get rid of a redundant Cargo feature flag.

## Solution

Use the built-in `target_abi = "sim"` cfg, which is set for the iOS (and

visionOS and tvOS) simulator, instead of a custom Cargo feature flag.

This cfg has been stable since Rust 1.78.
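Gating on the built-in cfg looks roughly like this. The functions are hypothetical placeholders for whatever simulator-specific code a project has. The `target_abi = "sim"` predicate is the only part taken from this change, and it works in both `#[cfg(...)]` attributes and the `cfg!` macro.

```rust
/// Returns `true` when compiled for an Apple simulator target (for example
/// `aarch64-apple-ios-sim`), using the built-in `target_abi` cfg.
fn is_apple_simulator() -> bool {
    cfg!(target_abi = "sim")
}

/// Hypothetical simulator-only workaround, selected at compile time.
#[cfg(target_abi = "sim")]
fn apply_simulator_workaround() -> &'static str {
    "simulator workaround applied"
}

/// On every other target, the workaround does nothing.
#[cfg(not(target_abi = "sim"))]
fn apply_simulator_workaround() -> &'static str {
    "no workaround needed"
}

fn main() {
    println!("simulator: {}", is_apple_simulator());
    println!("{}", apply_simulator_workaround());
}
```

This removes the need for projects to define and enable their own `ios_simulator` feature, because the compiler sets the cfg itself for simulator targets.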

In the future, some of this may become redundant if wgpu implements

proper support for the iOS simulator:

https://github.com/gfx-rs/wgpu/issues/7057

CC @mockersf, who implemented

[the original fix](https://github.com/bevyengine/bevy/pull/10178).

## Testing

- Open mobile example in Xcode.

- Launch the simulator.

- See that no errors are emitted.

- Remove the code cfg-guarded behind `target_abi = "sim"`.

- See that an error now happens.

(I haven't actually performed these steps on the latest `main` because

I'm hitting an unrelated error (EDIT: it was

https://github.com/bevyengine/bevy/pull/17637), but I tested them on

0.15.0.)

---

## Migration Guide

> If you're using a project that builds upon the mobile example, remove
> the `ios_simulator` feature from your `Cargo.toml` (Bevy now handles
> this internally).

Currently, we look up each `MeshInputUniform` index in a hash table that

maps the main entity ID to the index every frame. This is inefficient,

cache-unfriendly, and unnecessary, as the `MeshInputUniform` index for

an entity remains the same from frame to frame (even if the input

uniform changes). This commit changes the `IndexSet` in the `RenderBin`

to an `IndexMap` that maps the `MainEntity` to `MeshInputUniformIndex`

(a new type that this patch adds for more type safety).

On Caldera with parallel `batch_and_prepare_binned_render_phase`, this

patch improves that function from 3.18 ms to 2.42 ms, a 31% speedup.
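The sketch below assumes a bin that stores each entity's `MeshInputUniformIndex` next to the entity, so batching can read the index directly. `MainEntity` is simplified to a `u64` newtype. `IndexMap` lives in the external `indexmap` crate, so a `Vec` plus a `HashMap` of positions approximates it here. Removal is omitted.

```rust
use std::collections::HashMap;

/// Simplified stand-in for Bevy's `MainEntity`.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct MainEntity(u64);

/// Newtype so a uniform index can't be mixed up with other `u32`s.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct MeshInputUniformIndex(u32);

/// A bin that keeps each entity's uniform index alongside the entity.
/// `Vec` + `HashMap` approximates an insertion-ordered `IndexMap`.
#[derive(Default)]
struct RenderBin {
    entries: Vec<(MainEntity, MeshInputUniformIndex)>,
    positions: HashMap<MainEntity, usize>,
}

impl RenderBin {
    /// Adds an entity, or updates its index if it is already binned.
    fn insert(&mut self, entity: MainEntity, index: MeshInputUniformIndex) {
        let next = self.entries.len();
        let pos = *self.positions.entry(entity).or_insert(next);
        if pos == next {
            self.entries.push((entity, index));
        } else {
            self.entries[pos].1 = index;
        }
    }

    /// Batching walks the bin and reads each stored index directly,
    /// with no per-frame entity-to-index hash lookup.
    fn uniform_indices(&self) -> Vec<u32> {
        self.entries.iter().map(|(_, index)| index.0).collect()
    }
}

fn main() {
    let mut bin = RenderBin::default();
    bin.insert(MainEntity(42), MeshInputUniformIndex(0));
    bin.insert(MainEntity(7), MeshInputUniformIndex(1));
    // Re-binning an entity keeps its position in the bin.
    bin.insert(MainEntity(42), MeshInputUniformIndex(0));
    assert_eq!(bin.uniform_indices(), vec![0, 1]);
}
```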

# Objective

- Fixes #17797

## Solution

- `mesh` in `bevy_pbr::mesh_bindings` is behind an

`#ifndef MESHLET_MESH_MATERIAL_PASS`. Also gate `get_tag`, which uses

this `mesh`, the same way.

## Testing

- Run the meshlet example

# Objective

Previously, because only the alpha channel was available, we stored the

mean of the transmittance in the aerial view LUT, resulting in grayer

fog than expected.

## Solution

- Calculate the transmittance to the scene in `render_sky` using two

samples from the transmittance LUT

- Use dual-source blending to effectively get per-component alpha

blending
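The CPU-side sketch below contrasts the two approaches: storing the mean transmittance in alpha, and dual-source blending. It assumes the usual dual-source setup. The shader's first output is the inscattered light, and its second output is used as the per-channel destination factor, giving `src0 + src1 * dst`. The text does not spell out the exact blend state, and the function names are hypothetical.

```rust
/// Linear RGB color or per-channel factor.
type Rgb = [f32; 3];

/// Dual-source blending: source 0 is inscattered light, source 1 is the
/// per-channel transmittance, and each channel is blended independently.
fn blend_dual_source(inscatter: Rgb, transmittance: Rgb, scene: Rgb) -> Rgb {
    std::array::from_fn(|i| inscatter[i] + transmittance[i] * scene[i])
}

/// Single-alpha blending: only one transmittance value fits in alpha, so
/// the mean is used and every channel is attenuated equally.
fn blend_mean_alpha(inscatter: Rgb, transmittance: Rgb, scene: Rgb) -> Rgb {
    let mean = transmittance.iter().sum::<f32>() / 3.0;
    std::array::from_fn(|i| inscatter[i] + mean * scene[i])
}

fn main() {
    let scene = [1.0, 1.0, 1.0]; // white surface behind the fog
    let inscatter = [0.0, 0.0, 0.0]; // no inscattering, to isolate transmittance
    let transmittance = [0.8, 0.5, 0.2]; // red is transmitted better than blue

    let per_channel = blend_dual_source(inscatter, transmittance, scene);
    let averaged = blend_mean_alpha(inscatter, transmittance, scene);

    // Per-channel blending keeps the tint; the mean collapses it to gray.
    assert_eq!(per_channel, [0.8, 0.5, 0.2]);
    assert!(averaged[0] == averaged[1] && averaged[1] == averaged[2]);
    println!("per-channel: {per_channel:?}, mean alpha: {averaged:?}");
}
```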

Currently, we *sweep*, or remove entities from bins when those entities

became invisible or changed phases, during `queue_material_meshes` and